Everything begins with good data, so ingestion is often your first step to unlocking insights. However, ingestion presents challenges, like ramping up on the complexities of each data source, keeping tabs on those sources as they change, and governing all of this along the way.

Lakeflow Connect makes efficient data ingestion easy, with a point-and-click UI, a simple API, and deep integrations with the Data Intelligence Platform. Last year, more than 2,000 customers used Lakeflow Connect to unlock value from their data.

In this blog, we’ll review the basics of Lakeflow Connect and recap the latest announcements from the 2025 Data + AI Summit.

Ingest all your data in one place with Lakeflow Connect

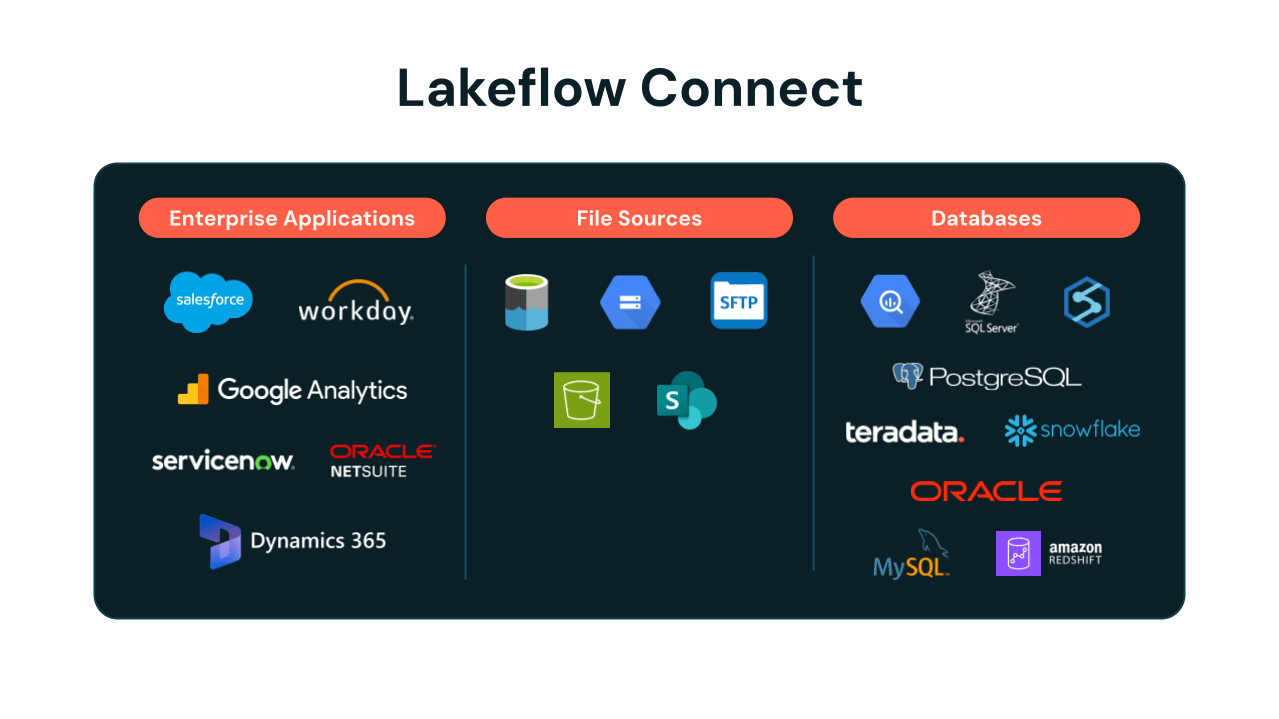

Lakeflow Connect offers simple ingestion connectors for applications, databases, cloud storage, message buses, and more. Under the hood, ingestion is efficient, with incremental updates and optimized API usage. As your managed pipelines run, we take care of schema evolution, seamless third-party API upgrades, and comprehensive observability with built-in alerts.

Data + AI Summit 2025 Announcements

At this year’s Data + AI Summit, Databricks announced the General Availability of Lakeflow, the unified approach to data engineering across ingestion, transformation, and orchestration. As part of this, Lakeflow Connect announced Zerobus, a direct write API that simplifies ingestion for IoT, clickstream, telemetry, and other similar use cases. We also expanded the breadth of supported data sources with more built-in connectors across enterprise applications, file sources, databases, and data warehouses, as well as data from cloud object storage.

Zerobus: a new way to push event data directly to your lakehouse

We introduced Zerobus, a new approach for pushing event data directly to your lakehouse by bringing you closer to the data source. By eliminating data hops and reducing operational burden, Zerobus provides high-throughput direct writes with low latency, delivering near real-time performance at scale.

Previously, some organizations used message buses like Kafka as transport layers to the Lakehouse. Kafka offers a durable, low-latency way for data producers to send data, and it’s a popular choice when writing to multiple sinks. However, it also adds extra complexity and costs, as well as the burden of managing another data copy, so it’s inefficient when your sole destination is the Lakehouse. Zerobus provides a simple solution for these cases.

Joby Aviation is already using Zerobus to directly push telemetry data into Databricks.

“Joby is able to use our manufacturing agents with Zerobus to push gigabytes a minute of telemetry data directly to our lakehouse, accelerating the time to insights, all with Databricks Lakeflow and the Data Intelligence Platform.”

— Dominik Müller, Factory Systems Lead, Joby Aviation, Inc.

As part of Lakeflow Connect, Zerobus is also unified with the Databricks Platform, so you can leverage broader analytics and AI capabilities right away. Zerobus is currently in Private Preview; reach out to your account team for early access.

🎥 Watch and learn more about Zerobus: Breakout session at the Data + AI Summit, featuring Joby Aviation, “Lakeflow Connect: eliminating hops in your streaming architecture”

Lakeflow Connect expands ingestion capabilities and data sources

New fully managed connectors continue to roll out across various release states (see the full list below). These include Google Analytics and ServiceNow, as well as SQL Server, the first database connector. All three are currently in Public Preview, with General Availability coming soon.

We have also continued innovating for customers who want more customization options and use our existing ingestion solution, Auto Loader. It incrementally and efficiently processes new data files as they arrive in cloud storage. We’ve released some major cost and performance improvements for Auto Loader, including 3X faster directory listings and automatic cleanup with “CleanSource,” both now generally available, along with smarter and more cost-effective file discovery using file events. We also announced native support for ingesting Excel files and ingesting data from SFTP servers, both in Private Preview and available by request for early access.
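To make the idea of incremental file processing concrete, here is a minimal, self-contained Python sketch. It is not Auto Loader's implementation or API; it only illustrates the general pattern of remembering which files have already been ingested so that each run handles only new arrivals. The `discover_new_files` helper, the JSON checkpoint file, and the local directory standing in for cloud storage are all assumptions made for illustration.

```python
import json
import tempfile
from pathlib import Path


def discover_new_files(source_dir: Path, checkpoint: Path) -> list[str]:
    """Return names of files not seen in earlier runs, then record them.

    Hypothetical helper: a local directory stands in for cloud storage and
    a JSON file stands in for the ingestion checkpoint.
    """
    seen = set(json.loads(checkpoint.read_text())) if checkpoint.exists() else set()
    current = {p.name for p in source_dir.iterdir() if p.is_file()}
    new_files = sorted(current - seen)
    # Persist the union so the next run skips everything processed so far.
    checkpoint.write_text(json.dumps(sorted(seen | current)))
    return new_files


def demo() -> tuple[list[str], list[str], list[str]]:
    """Simulate three runs as files arrive between them."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        source = root / "landing"
        source.mkdir()
        # The checkpoint lives outside the landing directory on purpose,
        # so it is never mistaken for an input file.
        checkpoint = root / "checkpoint.json"

        (source / "events-001.json").write_text("{}")
        first = discover_new_files(source, checkpoint)

        (source / "events-002.json").write_text("{}")
        second = discover_new_files(source, checkpoint)

        third = discover_new_files(source, checkpoint)  # nothing new arrived
        return first, second, third


if __name__ == "__main__":
    print(demo())
```

This sketch relists the whole directory on every run, which is the kind of cost that event-based file discovery is generally meant to reduce.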

Supported data sources:

- Applications: Salesforce, Workday, ServiceNow, Google Analytics, Microsoft Dynamics 365, Oracle NetSuite

- File sources: S3, ADLS, GCS, SFTP, SharePoint

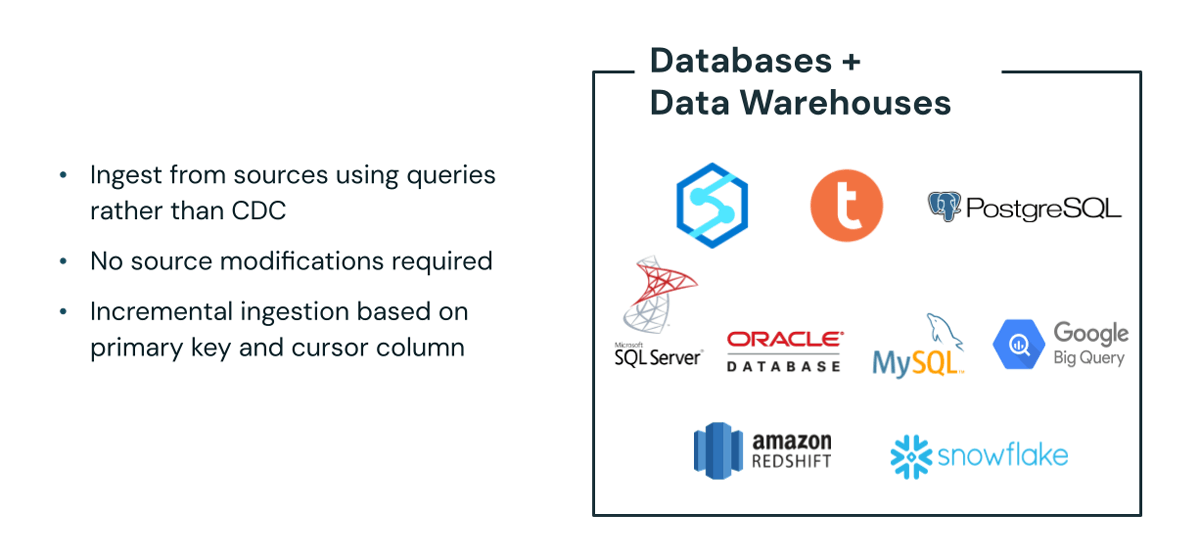

- Databases: SQL Server, Oracle Database, MySQL, PostgreSQL

- Data warehouses: Snowflake, Amazon Redshift, Google BigQuery

Within the expanded connector offering, we’re introducing query-based connectors that simplify data ingestion. These new connectors allow you to pull data directly from your source systems without database modifications, and they work with read replicas where change data capture (CDC) logs aren’t available. This is currently in Private Preview; reach out to your account team for early access.
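As a rough illustration of what a query-based pull can look like, the Python sketch below reads only the rows changed since the last run by filtering on a cursor column, with no triggers, CDC configuration, or other changes to the source database. This is not how Lakeflow Connect's query-based connectors are implemented; the `orders` table, the `updated_at` cursor column, and the `pull_changes` helper are hypothetical, and an in-memory SQLite database stands in for a source system or read replica.

```python
import sqlite3


def pull_changes(conn: sqlite3.Connection, last_cursor: str):
    """Fetch rows modified after last_cursor and return them with the new cursor.

    Hypothetical helper: assumes the source table exposes an updated_at
    column that increases whenever a row is inserted or modified.
    """
    rows = conn.execute(
        "SELECT id, status, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_cursor,),
    ).fetchall()
    # Advance the cursor to the latest timestamp seen; keep it if nothing changed.
    new_cursor = rows[-1][2] if rows else last_cursor
    return rows, new_cursor


def demo():
    """Run three pulls against a toy source table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_at TEXT)"
    )
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(1, "new", "2025-06-01T10:00:00"), (2, "new", "2025-06-01T11:00:00")],
    )

    # Initial pull: an empty cursor sorts before every timestamp.
    first, cursor = pull_changes(conn, "")

    # Between runs, one existing row is updated and one new row arrives.
    conn.execute(
        "UPDATE orders SET status = 'shipped', "
        "updated_at = '2025-06-02T09:00:00' WHERE id = 1"
    )
    conn.execute("INSERT INTO orders VALUES (3, 'new', '2025-06-02T10:00:00')")

    second, cursor = pull_changes(conn, cursor)
    third, cursor = pull_changes(conn, cursor)  # no changes since the last pull
    conn.close()
    return first, second, third


if __name__ == "__main__":
    for batch in demo():
        print(batch)
```

One general limitation of cursor-based queries is that rows hard-deleted from the source never show up in the result set, which is one reason log-based CDC is often preferred where it is available.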

🎥 Watch and learn more about Lakeflow Connect: Breakout session at the Data + AI Summit, “Getting Started with Lakeflow Connect”

🎥 Watch and learn more about ingesting from enterprise SaaS applications: Breakout session at the Data + AI Summit featuring Databricks customer Porsche Holding, “Lakeflow Connect: Seamless Data Ingestion From Enterprise Apps”

🎥 Watch and learn more about database connectors: Breakout session at the Data + AI Summit, “Lakeflow Connect: Easy, Efficient Ingestion From Databases”

Lakeflow Connect in Jobs, now generally available

We’re continuing to develop capabilities that make it easier for you to use our ingestion connectors while building data pipelines, as part of Lakeflow’s unified data engineering experience. Databricks recently announced Lakeflow Connect in Jobs, which lets you create ingestion pipelines within Lakeflow Jobs. So, if you have jobs as the center of your ETL process, this seamless integration provides a more intuitive and unified experience for managing ingestion.

Customers can define and manage their end-to-end workloads, from ingestion to transformation, all in one place. Lakeflow Connect in Jobs is now generally available.

🎥 Watch and learn more about Lakeflow Jobs: Breakout session at the Data + AI Summit, “Orchestration with Lakeflow Jobs”

Lakeflow Connect: more to come in 2025 and beyond

Databricks understands the needs of data engineers and organizations who drive innovation with their data using analytics and AI tools. To that end, Lakeflow Connect has continued to build out robust, efficient ingestion capabilities, from fully managed connectors to more customizable solutions and APIs.

We’re just getting started with Lakeflow Connect. Stay tuned for more announcements later this year, or contact your Databricks account team to join a preview for early access.

To try Lakeflow Connect, you can review the documentation or check out the Demo Center.