In 2014, philosopher and futurist Nick Bostrom published a book about the future of artificial intelligence (AI) titled “Superintelligence: Paths, Dangers, Strategies.” It proved highly influential in promoting the idea that advanced AI systems, or “superintelligences,” more capable than humans might one day surpass us and even pose an existential threat to humanity.

A decade later, OpenAI CEO Sam Altman says superintelligence may be only “a few thousand days” away. About a year ago, OpenAI co-founder Ilya Sutskever set up a team within the company to focus on “safe superintelligence”; he and his team have since raised $1 billion to launch a startup of their own to pursue this goal.

What exactly are they talking about? Broadly speaking, superintelligence is anything more intelligent than humans. But unpacking what that might mean in practice can get a bit tricky.

Different Kinds of AI

One particularly useful way to think about different levels and kinds of intelligence in AI was developed by US computer scientist Meredith Ringel Morris and her colleagues at Google.

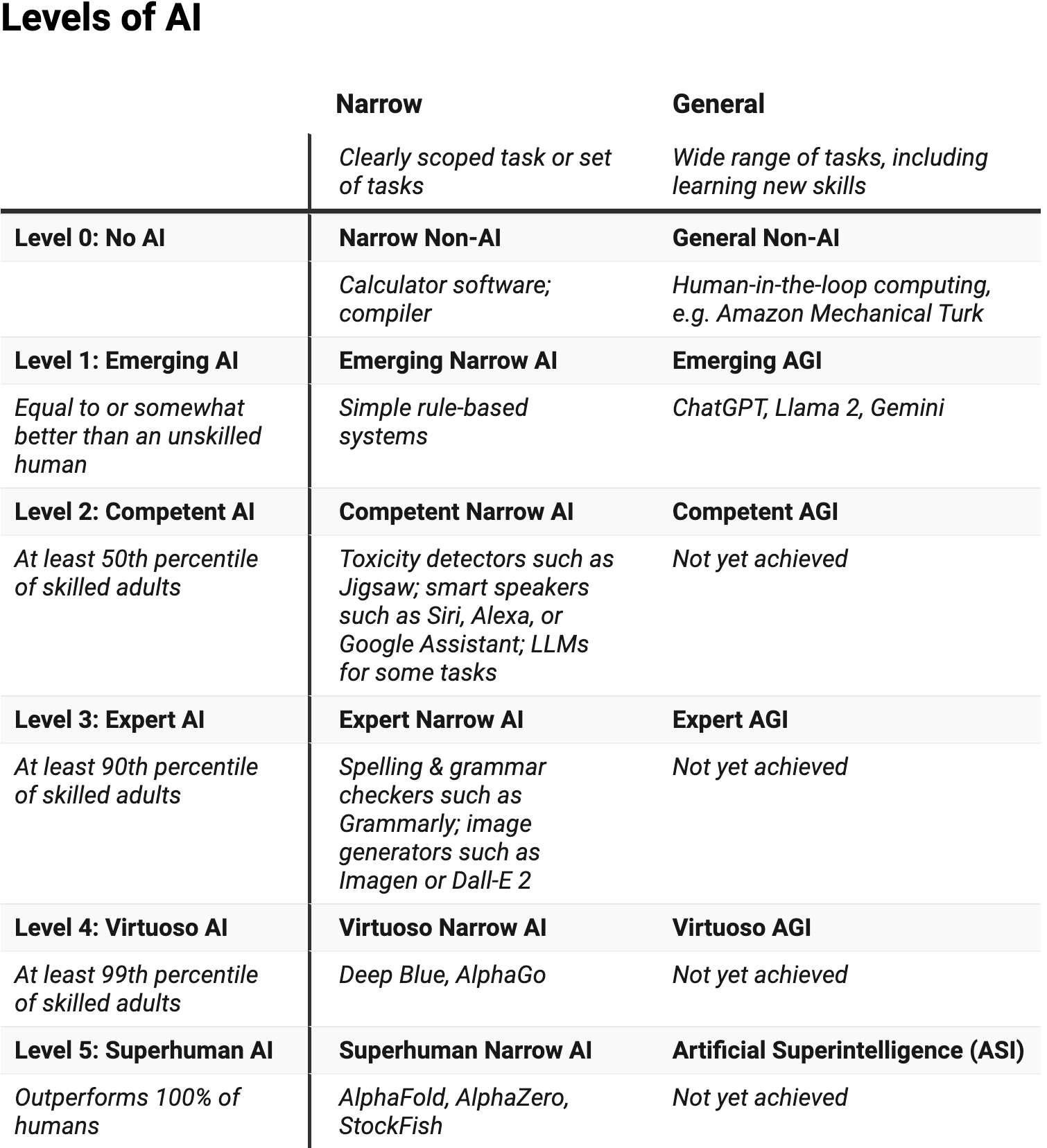

Their framework lists six levels of AI performance: no AI, emerging, competent, expert, virtuoso and superhuman. It also makes an important distinction between narrow systems, which carry out a small range of tasks, and more general systems.

A narrow, no-AI system is something like a calculator. It carries out various mathematical tasks according to a set of explicitly programmed rules.

There are already plenty of very successful narrow AI systems. Morris gives the Deep Blue chess program, which famously defeated world champion Garry Kasparov in 1997, as an example of a virtuoso-level narrow AI system.

Some narrow systems even have superhuman capabilities. One example is AlphaFold, which uses machine learning to predict the structure of protein molecules, and whose creators shared the 2024 Nobel Prize in Chemistry.

What about general systems? This is software that can tackle a much wider range of tasks, including things like learning new skills.

A general no-AI system might be something like Amazon’s Mechanical Turk: it can do a wide range of things, but it does them by asking real people.

Overall, general AI systems are far less advanced than their narrow cousins. According to Morris, the state-of-the-art language models behind chatbots such as ChatGPT are general AI, but they are so far only at the “emerging” level (meaning they are equal to or somewhat better than an unskilled human) and have yet to reach “competent” (as good as 50% of skilled adults).

So by this reckoning, we are still some distance from general superintelligence.
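To make the framework’s thresholds concrete, here is a minimal Python sketch that maps a single benchmark score to a performance level. The cut-offs follow the percentiles Morris and colleagues use (50th percentile of skilled adults for competent, 90th for expert, 99th for virtuoso, outperforming all humans for superhuman). The `System` class, the `level_of` and `describe` functions and the example scores are hypothetical and for illustration only, and treating every low-scoring AI system as “emerging” is a simplifying assumption rather than part of the framework.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """Performance levels in Morris and colleagues' framework, lowest first."""
    NO_AI = 0
    EMERGING = 1
    COMPETENT = 2
    EXPERT = 3
    VIRTUOSO = 4
    SUPERHUMAN = 5


# Minimum share of skilled adults (in percent) a system must outperform to
# reach each level, checked from the highest level downwards.
THRESHOLDS = [
    (100.0, Level.SUPERHUMAN),
    (99.0, Level.VIRTUOSO),
    (90.0, Level.EXPERT),
    (50.0, Level.COMPETENT),
]


@dataclass
class System:
    """Hypothetical description of a system scored on a single benchmark."""
    name: str
    is_ai: bool        # False for rule-based or human-powered systems
    general: bool      # True if it handles a wide range of tasks
    percentile: float  # made-up score: % of skilled adults it outperforms


def level_of(system: System) -> Level:
    """Return the performance level implied by one benchmark score."""
    if not system.is_ai:
        return Level.NO_AI
    for cutoff, level in THRESHOLDS:
        if system.percentile >= cutoff:
            return level
    # Simplifying assumption: any AI system below the 50th percentile is
    # treated as "emerging" (at least as good as an unskilled human).
    return Level.EMERGING


def describe(system: System) -> str:
    """Combine breadth (narrow/general) and level into one label."""
    breadth = "general" if system.general else "narrow"
    level = level_of(system).name.lower().replace("_", " ")
    return f"{system.name}: {breadth}, {level}"


if __name__ == "__main__":
    # Illustrative, invented scores; not measurements of real systems.
    examples = [
        System("calculator", is_ai=False, general=False, percentile=0.0),
        System("chess engine", is_ai=True, general=False, percentile=99.5),
        System("chatbot", is_ai=True, general=True, percentile=30.0),
    ]
    for s in examples:
        print(describe(s))
    # calculator: narrow, no ai
    # chess engine: narrow, virtuoso
    # chatbot: general, emerging
```

Because the level depends entirely on which benchmark supplies the score, the same system could land at different levels under different tests, which is why reliable benchmarks matter so much for placing a system in the framework.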

What’s the Current State of Artificial Intelligence?

As Morris points out, precisely determining where any given system sits depends on having reliable tests or benchmarks.

Depending on the benchmark, an image-generating system such as DALL-E might be at the virtuoso level (because it can produce images that 99% of humans could not draw or paint), or it might only be emerging (because it makes errors no human would, such as mutant hands and impossible objects).

There is significant debate even about the capabilities of current systems.

One notable 2023 paper argued that GPT-4 showed “sparks of artificial general intelligence.”

OpenAI says its latest language model, , can perform complex reasoning and rivals the performance of human experts on many benchmarks.

However, a recent paper from Apple researchers found that this and many other language models have significant trouble solving genuine mathematical reasoning problems. Their experiments suggest the outputs of these models resemble sophisticated pattern-matching rather than true advanced reasoning. This indicates superintelligence is not as imminent as many have suggested.

Will AI Keep Getting Smarter?

Some people think the rapid pace of AI progress over the past few years will continue or even accelerate. Tech companies are investing heavily in AI hardware and capabilities, so this doesn’t seem impossible.

If this happens, we may indeed see general superintelligence within the few thousand days Sam Altman has proposed, or roughly a decade. Sutskever and his team mentioned a similar timeframe in their superalignment article.

Many recent successes in AI have come from the application of a technique called deep learning, which, in simplified terms, finds associative patterns in gigantic collections of data. Indeed, the 2024 Nobel Prize in Physics was awarded to John Hopfield and Geoffrey Hinton for foundational work that includes the Hopfield network and the Boltzmann machine, which underpin many powerful deep learning models used today.

General AI systems such as ChatGPT have relied on human-generated data, much of it text from books and websites. Improvements in their capabilities have come largely from scaling up the systems and the amount of data on which they are trained.

However, there may not be enough human-generated data to take this process much further (although efforts to use data more efficiently, generate synthetic data, and improve the transfer of skills between domains may bring improvements). Even if there were enough data, some researchers argue that language models such as ChatGPT are fundamentally incapable of reaching what Morris would call general competence.

One recent paper suggests that an essential feature of superintelligence would be open-endedness, at least from a human perspective: the system would need to continuously generate outputs that a human observer would regard as novel and be able to learn from.

Existing foundation models are not trained in an open-ended way, and existing open-ended systems are quite narrow. The paper also stresses that neither novelty nor learnability alone is enough. On this view, achieving superintelligence will require a new type of open-ended foundation model.

What Are the Dangers?

So what does all this mean for the risks of AI, as it becomes integrated into more aspects of our lives? In the short term, at least, we don’t need to worry about superintelligent AI taking over the world.

But that’s not to say AI is without risks. Morris and her colleagues have thought this through too: as AI systems gain greater capability, they may also gain greater autonomy, and different combinations of capability and autonomy present different risks.

For example, when AI systems have little autonomy and people use them as a kind of consultant (when we ask ChatGPT to summarise documents, say, or let the YouTube algorithm shape our viewing habits), we risk over-trusting or over-relying on them.

Morris also points to other risks to watch for as AI systems become more capable, ranging from people forming parasocial relationships with AI systems to mass job displacement and society-wide ennui.

What’s Next?

Suppose we do one day have superintelligent, fully autonomous AI agents that can make decisions without human intervention. Will we then face the risk that they could concentrate power or act against human interests?

Not necessarily. Autonomy and control can go hand in hand: a system can be highly automated yet still provide a high level of human control.

Like many in the AI research community, I believe safe superintelligence is feasible. However, building it will be a complex, multidisciplinary task, and researchers will have to tread unbeaten paths to get there.