Renowned actor Tom Hanks has sounded a cautionary note about the proliferation of deepfakes, revealing that scammers have impersonated him using AI-generated videos and images.

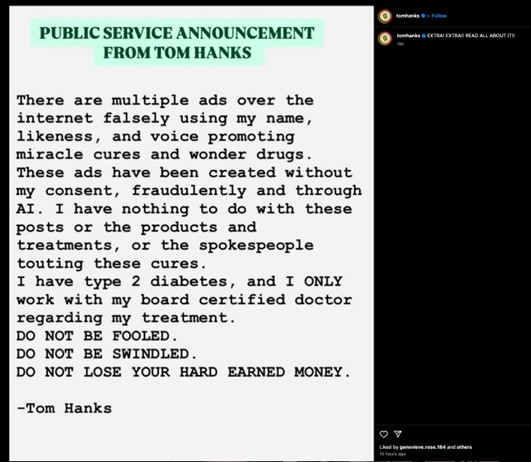

Tom Hanks alerted his social media followers to a concerning issue: without his permission, his name, image, and voice had been used in deepfaked ads peddling “miracle cures.”

As digital marketing continues to evolve, a new challenge is blurring the line between reality and fabrication: a flood of AI-generated content featuring celebrity likenesses, which threatens to upend traditional notions of authenticity and celebrity endorsement.

Tom Hanks isn’t the only victim of this phenomenon. In early 2024, a sophisticated deepfake scam impersonated Taylor Swift to lure victims into a phony giveaway of free cookware. In February 2023, a deepfake video of Kelly Clarkson promoted a line of weight-loss supplements. More recently, scammers created and spread deepfakes of Prince William endorsing a dubious fundraising initiative. We have also seen a wave of deepfakes featuring respected researchers promoting unproven remedies, a topic we will explore in an upcoming blog post.

We now live in an era where scammers can turn almost anyone into a deepfake with little effort. The AI tools for creating them have only become more sophisticated, more accessible, and easier to use, and the fake testimonials they produce look remarkably genuine, which makes the problem worse.

Malicious deepfakes can do harm far beyond their celebrity targets, and their effects can reach everyone who goes online. A deepfake might tarnish Tom Hanks’ reputation, but its impact would likely extend well beyond him, causing harm to countless people. Deepfakes pose a significant risk to society by spreading misinformation and enabling fraud, with consequences that include identity theft and voter suppression, particularly in election years. The AI-generated Joe Biden robocalls sent to New Hampshire voters starkly illustrated that concern.

Celebrities such as Scarlett Johansson are increasingly taking legal action against the unauthorized use of their images. At the federal level, however, U.S. law remains largely unprepared to address the complexities and implications of AI-generated content. While federal progress remains elusive, promising developments are emerging at the state level.

Tennessee recently passed legislation that gives its residents the right to control their own image and voice. Under it, Tennessee residents can take legal action against individuals or groups that create and distribute deepfakes of their likenesses without authorization. Illinois and South Carolina are considering laws of their own.

Nationwide, over 151 state-level bills had been introduced or passed as of this July, all aimed at AI-generated deepfakes and deceptive online media. Our next blog post will take a closer look at how the law is finally adapting to this rapidly advancing technology.

Not all AI-generated deepfakes are harmful; some serve creative purposes that bring value and entertainment to audiences. Legitimate applications include dubbing and subtitling films, producing educational “how-to” content, and creating harmless, amusing parodies, all within the bounds of the law.

The real concern is malicious deepfakes like the ones Tom Hanks warned about. Some warning signs apply to automated accounts in general: by homing in on telltale signs such as an unusual frequency of posts at odd hours, a preponderance of vague or cryptic language, and a noticeable absence of personal pronouns, you can begin to suspect that an account is run by a bot.

That’s where the Deepfake Detector comes in. It instantly flags content that may have been manipulated with AI, right in your browser. Here’s how it works:

If it detects that the content you’re consuming features AI-generated audio, it notifies you immediately.

What you choose to watch is your business, and it stays private.

If a convincing Tom Hanks or Taylor Swift deepfake appears in your social media feed, you can be confident that it’s fake. You can also mute notifications or turn off scanning directly from your dashboard.

Using a browser extension, the Deepfake Detector pinpoints the specific segments of deepfaked audio, showing exactly where and when the manipulated content appears in the video. Think of it as a polygraph for videos: as the video plays, peaks of pink lines and troughs of gray lines give a visual representation of what might be real and what might be fake.

As AI detection improves, so does our collective responsibility to watch for and debunk misinformation, especially given the flood of malicious deepfakes we currently face.

Navigating this new era of AI starts with awareness. Tools are being developed to detect deepfakes with precision, but ultimately we must also build our own ability to spot these patterns and anomalies.

First, recognize just how easy it is to create a deepfake. Keeping our guard up is what keeps us protected. When we see celebrities touting miracle cures or promoting sensational deals, our instinct should be to pause and reassess before taking action.

Plenty of reputable fact-checking resources can help us uncover the truth. Sources such as Snopes, Reuters, PolitiFact, the Associated Press, and FactCheck.org offer tools for determining whether information is accurate, misleading, or somewhere in between.

As malicious deepfakes become more common, it’s increasingly clear that they pose a substantial threat. It’s a safety concern. An identity theft concern. A well-being concern. An election concern. We created our AI hub so you can stay informed about the latest AI-related threats and take proactive steps to reduce your risk. It’s also an opportunity to join the fight against deceptive deepfakes by flagging any suspicious content you come across online.

AI offers remarkable benefits, but it also presents complex challenges. Going forward, balancing innovation with ethical responsibility and consumer security will be crucial. We’ll keep watching and learning, and sharing what we find right here on our blog.

If something seems too good to be true, like a miraculous celebrity endorsement, it’s likely a red flag for a hoax or misleading information. Stay curious, stay cautious.
