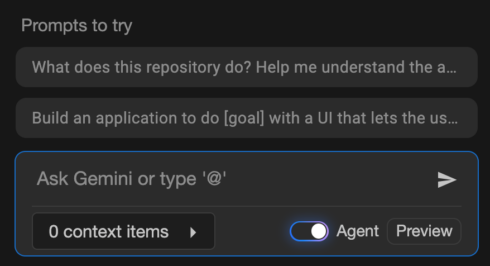

Agent Mode in Gemini Code Assist now available in VS Code and IntelliJ

This mode was introduced last month in the Insiders Channel for VS Code. It expands the capabilities of Code Assist beyond prompts and responses to support actions like multiple file edits, full project context, built-in tools, and integration with ecosystem tools.

Since the mode arrived in the Insiders Channel, several new features have been added, including the ability to edit code changes using Gemini’s inline diff, user-friendly quota updates, real-time shell command output, and state preservation between IDE restarts.

Separately, the company also announced new agentic capabilities in AI Mode in Search, such as the ability to make dinner reservations based on factors like party size, date, time, location, and preferred type of food. U.S. users who have opted into the AI Mode experiment in Labs will also now see results that are more specific to their own preferences and interests. Google also announced that AI Mode is now available in more than 180 new countries.

GitHub’s coding agent can now be launched from anywhere on the platform using new Agents panel

GitHub has added a new panel to its UI that enables developers to invoke the Copilot coding agent from anywhere on the site.

From the panel, developers can assign background tasks, monitor running tasks, or review pull requests. The panel is a lightweight overlay on GitHub.com, but developers can also open it in full-screen mode by clicking “View all tasks.”

The agent can be launched from a single prompt, like “Add integration tests for LoginController” or “Fix #877 using pull request #855 as an example.” It can also run multiple tasks simultaneously, such as “Add unit test coverage for utils.go” and “Add unit test coverage for helpers.go.”

Anthropic adds Claude Code to Enterprise, Team plans

With this change, both Claude and Claude Code will be available under a single subscription. Admins will be able to assign standard or premium seats to users based on their individual roles. By default, seats include enough usage for a typical workday, but additional usage can be added during periods of heavy use. Admins can also set a maximum limit for additional usage.

Other new admin settings include a usage analytics dashboard and the ability to deploy and enforce settings, such as tool permissions, file access restrictions, and MCP server configurations.

Microsoft adds Copilot-powered debugging features for .NET in Visual Studio

Copilot can now suggest appropriate locations for breakpoints and tracepoints based on the current context. Similarly, it can troubleshoot non-binding breakpoints and walk developers through the potential cause, such as mismatched symbols or incorrect build configurations.

Another new feature is the ability to generate LINQ queries on large collections in the IEnumerable Visualizer, which renders data into a sortable, filterable tabular view. For example, a developer could ask for a LINQ query that surfaces the problematic rows causing a filter issue. Additionally, developers can hover over any LINQ statement to get an explanation from Copilot of what it’s doing, evaluate it in context, and highlight potential inefficiencies.

Copilot can also now help developers deal with exceptions by summarizing the error, identifying potential causes, and offering targeted code fix suggestions.

Groundcover launches observability solution for LLMs and agents

The eBPF-based observability provider groundcover announced an observability solution specifically for monitoring LLMs and agents.

It captures every interaction with LLM providers like OpenAI and Anthropic, including prompts, completions, latency, token usage, errors, and reasoning paths.

Because groundcover uses eBPF, it operates at the infrastructure layer and can achieve full visibility into every request. This allows it to do things like follow the reasoning path of failed outputs, investigate prompt drift, or pinpoint when a tool call introduces latency.

IBM and NASA release open-source AI model for predicting solar weather

The model, Surya, analyzes high-resolution solar observation data to predict how solar activity affects Earth. According to IBM, solar storms can damage satellites, affect air travel, and disrupt GPS navigation, which can in turn harm industries like agriculture and disrupt food production.

The solar images that Surya was trained on are 10x larger than typical AI training data, so the team had to create a multi-architecture system to handle them.

The model was released on Hugging Face.

Preview of NuGet MCP Server now available

Last month, Microsoft announced support for building MCP servers with .NET and then publishing them to NuGet. Now, the company is announcing an official NuGet MCP Server that integrates NuGet package information and management tools into AI development workflows.

“Since the NuGet package ecosystem is always evolving, large language models (LLMs) get out-of-date over time and there is a need for something that assists them in getting information in realtime. The NuGet MCP server provides LLMs with information about new and updated packages that have been published after the models as well as tools to complete package management tasks,” Jeff Kluge, principal software engineer at Microsoft, wrote in a blog post.

Opsera’s Codeglide.ai lets developers easily turn legacy APIs into MCP servers

Codeglide.ai, a subsidiary of the DevOps company Opsera, is launching an MCP server lifecycle platform that will enable developers to turn APIs into MCP servers.

The solution continuously monitors API changes and updates the MCP servers accordingly. It also provides context-aware, secure, and stateful AI access without requiring the developer to write custom code.

According to Opsera, large enterprises may maintain 2,000 to 8,000 APIs, 60% of which are legacy APIs, and MCP provides a way for AI to interact with these APIs efficiently. The company says this new offering can reduce AI integration time by 97% and costs by 90%.

Confluent announces Streaming Agents

Streaming Agents is a new feature in Confluent Cloud for Apache Flink that brings agentic AI into data stream processing pipelines. It enables users to build, deploy, and orchestrate agents that can act on real-time data.

Key features include tool calling via MCP, the ability to connect to models or databases using Flink, and the ability to enrich streaming data with non-Kafka data sources, like relational databases and REST APIs.

“Even your smartest AI agents are flying blind if they don’t have fresh business context,” said Shaun Clowes, chief product officer at Confluent. “Streaming Agents simplifies the messy work of integrating the tools and data that create real intelligence, giving organizations a solid foundation to deploy AI agents that drive meaningful change across the business.”