The phrase “practice makes perfect” is usually reserved for humans, but it is also a useful maxim for robots newly deployed in unfamiliar environments.

Picture a robot arriving in a warehouse. It comes packaged with the skills it learned during training, such as placing an object, and it now needs to pick items from an unfamiliar shelf. At first, the machine struggles to adapt to its new surroundings. To improve, the robot needs to identify which skills within the overall task need work, then concentrate on refining (or parameterizing) those specific skills.

A human on site could program the robot to optimize its performance, but researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and The AI Institute have developed a more practical alternative. Presented at the recent Robotics: Science and Systems conference, their “Estimate, Extrapolate, and Situate” (EES) algorithm enables these machines to practice on their own, potentially helping them improve at useful tasks in factories, homes, and hospitals.

To help robots get better at activities such as sweeping floors, EES works with a vision system that locates and tracks the machine’s surroundings. The algorithm then estimates how reliably the robot executes an action (such as sweeping) and whether more practice would be worthwhile. EES forecasts how well the robot could perform the overall task if it refined that particular skill, and finally the robot practices. After each attempt, the vision system checks whether the skill was carried out correctly.
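The estimate-then-forecast loop described above can be illustrated with a small sketch. This is not the researchers’ implementation: the `SkillStats` class, the smoothed success-rate estimate, the "one more successful trial" extrapolation, and the assumption that a task succeeds only if every skill in it succeeds are all simplifications introduced here for illustration.

```python
from dataclasses import dataclass


@dataclass
class SkillStats:
    """Practice record for one skill (hypothetical structure, not from the paper)."""
    successes: int = 0
    attempts: int = 0

    def estimate(self) -> float:
        # Smoothed success rate; a skill with no attempts starts at 0.5.
        return (self.successes + 1) / (self.attempts + 2)

    def extrapolate(self) -> float:
        # Optimistic guess: the estimate after one more successful practice trial.
        return (self.successes + 2) / (self.attempts + 3)


def task_success(probs: dict) -> float:
    """Assumption: the task succeeds only if every skill succeeds independently."""
    result = 1.0
    for p in probs.values():
        result *= p
    return result


def choose_skill_to_practice(stats: dict) -> str:
    """Return the skill whose forecast improvement raises task success the most."""
    if not stats:
        raise ValueError("no skills to choose from")
    current = {name: s.estimate() for name, s in stats.items()}
    baseline = task_success(current)
    best_name, best_gain = None, float("-inf")
    for name, s in stats.items():
        hypothetical = dict(current)
        hypothetical[name] = s.extrapolate()
        gain = task_success(hypothetical) - baseline
        if gain > best_gain:
            best_name, best_gain = name, gain
    return best_name


def record_attempt(stats: dict, name: str, succeeded: bool) -> None:
    """Update the record after the vision system checks an attempt."""
    stats[name].attempts += 1
    stats[name].successes += int(succeeded)


# Illustrative numbers only: sweeping is the least reliable skill.
example = {
    "pick": SkillStats(successes=9, attempts=10),
    "sweep": SkillStats(successes=2, attempts=10),
    "place": SkillStats(successes=8, attempts=10),
}
print(choose_skill_to_practice(example))  # prints "sweep"
```

In this toy setting the weakest skill is selected because improving it lifts the product of success rates the most. The actual system presumably uses a richer model of how skills improve with practice.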

EES could prove useful in settings such as hospitals, factories, homes, and coffee shops. If you wanted a robot to clean your living room, for example, it would need help practicing skills such as sweeping. According to Nishanth Kumar SM ’24 and his colleagues, though, EES could help that robot improve without human intervention, using only a few practice trials.

Kumar, a co-lead on the project and a PhD student in electrical engineering and computer science (EECS) at MIT who is affiliated with CSAIL, says that going into the project, the team wondered whether this kind of specialization would be possible on a real robot with a reasonable number of samples.

“Now, we have an algorithm that enables robots to get meaningfully better at specific skills in a reasonable amount of time with tens or hundreds of data points, an upgrade from the thousands or millions of samples that a standard reinforcement learning algorithm requires,” Kumar says.

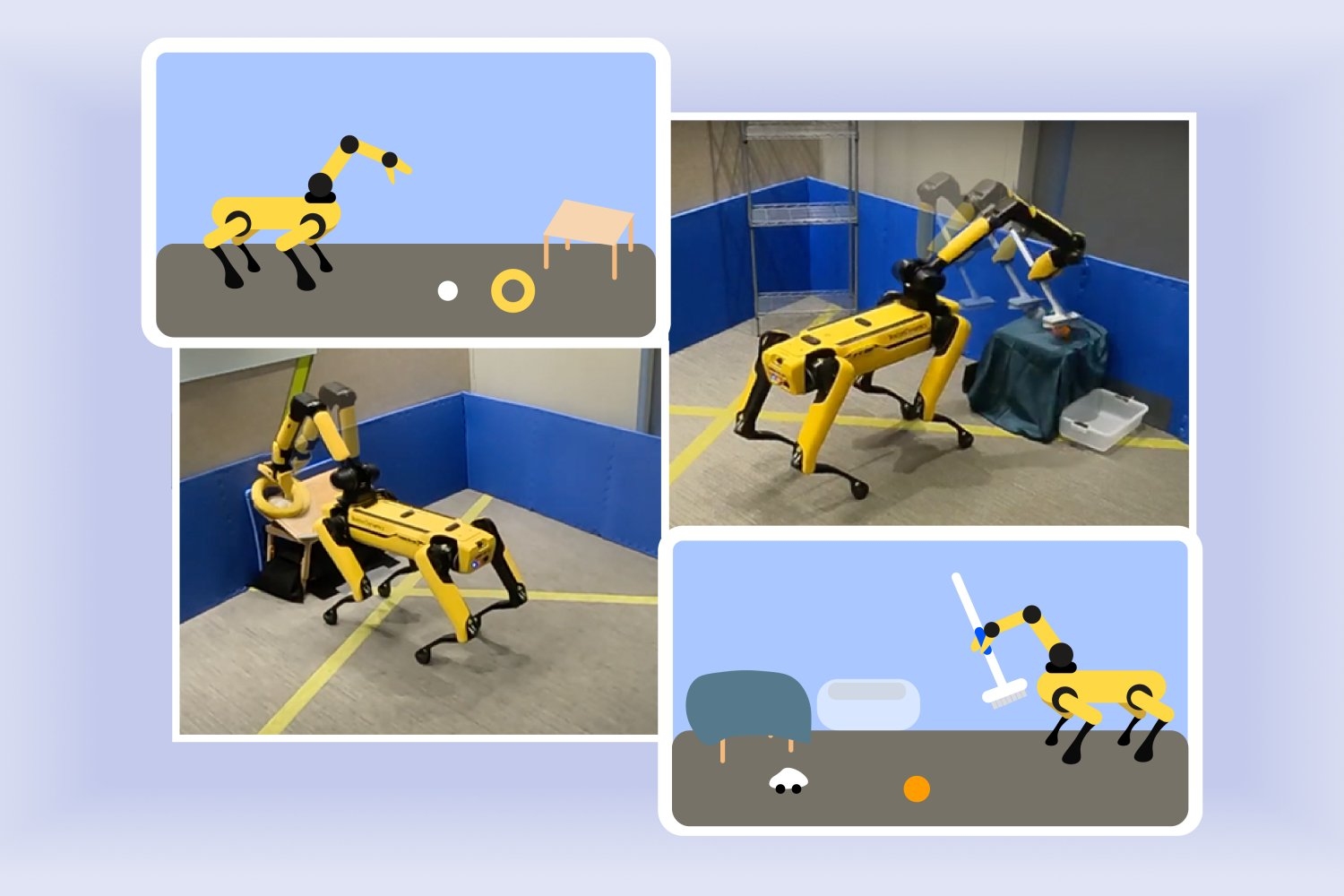

EES’s knack for efficient learning was evident when it was implemented on Boston Dynamics’ Spot quadruped during research trials at The AI Institute. The robot, which has an arm attached to its back, completed manipulation tasks after practicing for a few hours. In one demonstration, the robot learned how to securely place a ball and ring on a slanted table in roughly three hours. In another, the algorithm guided the machine to improve at sweeping toys into a bin within about two hours. Both results appear to be an improvement over previous frameworks, which would likely have taken more than 10 hours per task.

Tom Silver, an EECS alumnus who is now an assistant professor at Princeton University, says the team aimed to have the robot collect its own experience so that it can better choose which strategies will work well in its deployment. By focusing on what the robot knows, he says, the team sought to answer a key question: “In the library of skills that the robot has, which is the one that would be most useful to practice right now?”

EES could eventually help streamline autonomous practice for robots in new deployment environments, but for now it comes with a few limitations. For starters, the researchers used tables that were low to the ground, which made it easier for the robot to see its objects. Kumar and Silver also 3D-printed an attachable handle that made the brush easier for Spot to grab. The robot failed to detect some items and identified others in the wrong places, so the researchers counted those errors as failures.

The researchers note that the practice speeds from the physical experiments could be accelerated further with the help of a simulator. Instead of physically working at each skill autonomously, the robot could eventually combine real and virtual practice. They hope to make their system faster with less latency, engineering EES to overcome the imaging delays they experienced. In the future, they may investigate an algorithm that reasons over sequences of practice attempts instead of planning which skills to refine.

“Enabling robots to learn on their own is both incredibly useful and extremely challenging,” says Danfei Xu, an assistant professor in the School of Interactive Computing at Georgia Tech and a research scientist at NVIDIA AI, who was not involved with this work. “In the future, home robots will be sold to all sorts of households and expected to perform a wide range of tasks. We can’t possibly program everything they need to know beforehand, so it’s essential that they can learn on the job. However, letting robots loose to explore and learn without guidance can be very slow and might lead to unintended consequences. The research by Silver and his colleagues introduces an algorithm that allows robots to practice their skills autonomously in a structured way. This is a big step toward creating home robots that can continuously evolve and improve on their own.”

Silver and Kumar’s co-authors are The AI Institute researchers Stephen Proulx and Jennifer Barry, plus four CSAIL members: Northeastern University PhD student and visiting researcher Linfeng Zhao, MIT EECS PhD student Willie McClinton, and MIT EECS professors Leslie Pack Kaelbling and Tomás Lozano-Pérez. Their work was supported, in part, by The AI Institute, the U.S. National Science Foundation, the U.S. Air Force Office of Scientific Research, the U.S. Office of Naval Research, the U.S. Army Research Office, and MIT Quest for Intelligence, with high-performance computing resources from the MIT SuperCloud and Lincoln Laboratory Supercomputing Center.