In a previous discussion, we raised questions regarding the selection of a suitable vector database for our hypothetical Retrieval-Augmented Generation (RAG) use case. When building a robust and adaptable generator (RAG) software, another crucial decision that must be made is selecting a suitable vector embedding model, a fundamental component in many generative artificial intelligence applications.

A vector embedding model is responsible for transforming unstructured data (text, images, audio, video) into a numerical vector that captures semantic similarity between data objects. Embeddings play a crucial role in various applications beyond traditional Recommender-Algorithm-Games (RAGs), including advice systems, search engines, databases, and other knowledge processing techniques.

Grasping the purpose, internal workings, advantages, and disadvantages of a concept is crucial, which is exactly what we will delve into today. As we delve into textual content embedding techniques, the same principles apply to other forms of unstructured data as well?

Machine learning models don’t work directly with text, they require numerical inputs. Given the prevalence of textual content, the machine learning (ML) group has iteratively developed numerous solutions for converting text into numerical representations over time. Several approaches of varying complexity exist, but we’ll focus on a few straightforward ones for evaluation.

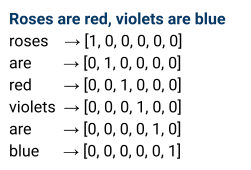

Treat phrases in the textual content as categorical variables and represent each phrase using a vector where all elements are initially set to 0, except for a single element that is set to 1.

In reality, this embedding approach is likely to be impractical due to the proliferation of distinct classes, ultimately yielding unfeasibly high-dimensional output vectors that are difficult to manage in typical scenarios. Additionally, one-hot encoding does not ensure that semantically related vectors are placed proximal to at least one another within the same vector space.

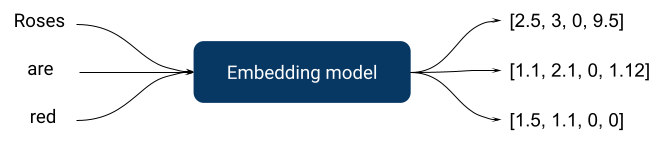

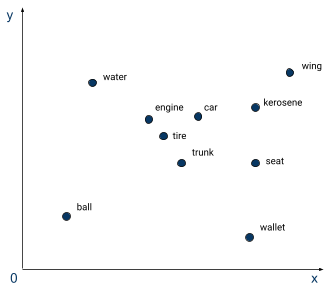

Fashion trends were developed to address these very issues. Similar in concept to one-hot encoding, these methods take textual content as input and produce vectors of numbers as output; however, they are more sophisticated because they are trained using supervised tasks, typically involving a neural network. A supervised learning task could involve, for example, accurately predicting product evaluation sentiment ratings. Given the existing embedding model, subsequent embeddings would group evaluations with similar sentiments closer together within a vector space. The choice of a suitable supervised task is crucial for generating relevant embeddings while building an embedding model.

While the diagram illustrates phrase embeddings alone, our typical requirement exceeds this scope because human language is inherently more nuanced and complex than a simple aggregation of individual words. When considering semantics, phrase order, and various linguistic factors, it becomes necessary to advance our understanding to the next level.

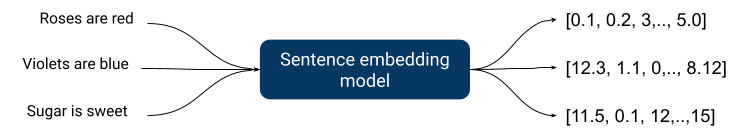

Sentence embeddings associate a given sentence with a numerical vector, which, as expected, involves a significantly greater level of complexity due to the need to capture more intricate relationships.

Due to advancements in deep learning research, the most sophisticated word embedding techniques have emerged, leveraging the capabilities of deep neural networks to more effectively capture intricate linguistic relationships inherent to human language.

A great embedding model should:

* Accurately capture the nuances of human language and sentiment

* Effectively convey the emotional tone of text data

* Be highly adaptable and scalable for large datasets

- as a standalone component, its performance and efficiency are crucial.

- Return vectors of

- What vectors should we use to return?

How most embedding styles are organized internally is typically characterized by a combination of hierarchical and linear structures.

All well-performing state-of-the-art embedding techniques are indeed deep neural networks.

This is a rapidly evolving field where top-performing fashion trends often stem from innovative structural advancements. Let’s briefly cover two crucial architectures: BERT and GPT.

In 2018, researchers at Google published BERT, a groundbreaking innovation that leveraged the power of transformer models for language processing tasks. Specifically, BERT applied bidirectional training to this popular attention-based model, enabling it to excel in natural language understanding and generation applications. Professional transformer architectures consist of two distinct components: an encoder responsible for processing the input text and a decoder tasked with generating predictions.

BERT leverages an encoder that processes entire sentences or phrases simultaneously, allowing it to learn contextual relationships by considering all environmental cues, unlike traditional methods that examine sequential text from left to right or right to left. Before fine-tuning phrase sequences for BERT, certain phrases undergo a preprocessing step where [MASK] tokens replace specific words, allowing the model to predict the original value of these masked phrases based on contextual information from unmasked phrases in the sequence?

While normal BERT models demonstrate limited effectiveness on many benchmarks, the BERT architecture inherently requires task-specific fine-tuning to achieve optimal performance. Despite being an open-source solution with a long history since 2018, this software boasts surprisingly moderate system requirements, making it possible to run efficiently even on a single mid-range GPU. Due to this development, the technology became highly sought after for numerous text-processing tasks. It’s quick, customizable, and small. Here’s an improved version: A highly discussed and influential all-MiniLM model is actually a modified variant of the widely popular BERT architecture.

The GPT (Generative Pre-Trained Skilled Transformer) by OpenAI is fundamentally distinct. Unlike BERT, it’s unidirectional, namely Textual content processing employs a unified routing approach, utilizing a transformer-based decoder optimized for predicting the next item in a sequential pattern. While these fashions may be slower and generate excessively large dimensional embeddings, they often come with additional parameters, eliminating the need for fine-tuning and offering relevance to a broader range of applications beyond their original scope? The development of GPT should not be publicly accessible in its entirety, rather being offered as a premium API service, thereby generating revenue for the creators while maintaining control over its use.

The significance of another crucial parameter in an embedding model lies in its context size. Context size refers to the number of tokens that a model can comprehend while interacting with a text, allowing for more sophisticated understanding and processing of complex linguistic structures. An extended context window enables the mannequin to capture more intricate connections within a broader corpus of text. Due to these factors, fashion can generate exceptionally high-quality outcomes, for instance seize semantic similarity higher.

To optimize the efficacy of contextual understanding, coaching wisdom should integrate comprehensive bodies of connected written material – such as books, academic papers, and the like – thereby enriching the scope of relevant information. Notwithstanding the potential benefits, increasing the context window size will indeed amplify the complexity of the model, subsequently elevating the demands on computational resources and memory required for training.

Here are strategies that help manage resource requirements, for example: While approximate considerations are indeed valuable, they often come at a surprisingly high cost. The challenge lies in balancing context length and complexity, as longer contexts can capture more nuanced relationships within human language, but necessitate additional data sources.

Standardised coaching knowledge is crucial across all fashion industry disciplines. Fashions in embedding technologies are by no means an exemption from innovation’s relentless pursuit of excellence.

Embracing novel approaches to semantic search through cutting-edge embedding techniques is a rapidly evolving area of research. For decades, researchers employed various applied sciences to analyze information, including boolean models, latent semantic indexing (LSI), and a range of probabilistic approaches.

While some of these methods prove effective in many modern scenarios, they remain widely employed across various industries.

One of the most popular and widely used probabilistic approaches to ranking search results is BM25 (Best Match), a search relevance scoring function. The algorithm is employed to assess the pertinence of a document to a query and prioritizes documents primarily based on the question phrases contained within each listed document. Recently, embedding methods have started consistently outperforming BM25; nonetheless, the latter remains widely used due to its ease of implementation, lower computational requirements, and interpretable results.

Each mannequin type does not have a comprehensive evaluation approach that aids in selecting the most relevant mannequin at present.

Fortunately, textual content embedding methods have extensive benchmark suites such as:

A novel benchmark, dubbed BEIR, was introduced to evaluate zero-shot information retrieval methods, featuring a diverse collection of datasets for assessing various data retrieval tasks. The unique BEIR benchmark comprises a collection of 19 datasets, offering diverse strategies for conducting high-quality searches in analysis. Strategies encapsulate techniques for question-answering, fact-checking, and entity retrieval, thereby fostering effective knowledge discovery and management. Now, anyone releasing a text-based embedding model for data retrieval tasks can evaluate its performance by running the benchmark and comparing it to industry-leading models.

A comprehensive benchmark for large textual content embedding, comprising the BEIR dataset alongside others covering 58 datasets and 112 languages. The publicly accessible leaderboard for MTEB outcomes can be found.

Benchmarks have been successfully executed across a range of contemporary fashion models, yielding valuable leaderboards that empower informed decisions regarding model selection.

Benchmarks for normal duties are essential, yet they represent merely one facet of a comprehensive evaluation.

Following the application of an embedding model for search purposes, we execute a dual iteration.

- Offline indexing of acquired knowledge facilitates effortless recall and sharing of previously learned concepts.

- The search query is carefully crafted to integrate a customer inquiry directly into the search results, allowing users to quickly and easily find relevant information and answers.

The two necessary penalties of this are severe consequences that follow from a wrong decision.

When updating an embedding model, the primary challenge lies in reindexing all existing knowledge to ensure seamless integration with the new framework? Upgrading is crucial for all methods leveraging embedding models since newer, more sophisticated architectures are constantly emerging, making model updates the most effective way to boost overall system performance as a result of their frequent release? An embedding model remains a relatively unstable component of the system infrastructure in this context.

As the number of users increases, the criticality of inference latency emerges as a significant consequence of leveraging an embedding model for customer inquiries. Mannequin inference can incur significant latency when utilizing high-performance fashions, especially those requiring GPU acceleration: latencies exceeding 100ms are not uncommon for models boasting more than 1 billion parameters. Smaller, more agile fashion designs prove essential in high-stress production environments where efficiency and precision are paramount.

The inherent trade-off between high-quality and low-latency performance is a crucial consideration that must always be borne in mind when choosing an embedding model, as this delicate balance can significantly impact the overall effectiveness of your application.

Embedding techniques effectively manage output vector dimensionality, thereby enhancing the performance of numerous algorithms that rely on these outputs. Typically, a smaller mannequin is associated with a shorter output vector size; however, it often remains too complex for smaller models. When dealing with high-dimensional datasets, it becomes necessary to employ dimensionality reduction techniques akin to principal component analysis (PCA), stochastic neighborhood embedding/t-distributed Stochastic Neighbor Embedding (SNE/ tSNE), or Uniform Manifold Approximation (UMAP) algorithms.

By leveraging dimensionality reduction techniques, we can effectively utilize the power of storage and optimize database performance, allowing us to store embeddings before they even make it into our database. While reduced vector embedding sizes promise to conserve storage space and accelerate retrieval times, this compression comes at the cost of compromising performance in traditional downstream applications. While vector databases can be an efficient storage solution, they may not always be the primary choice for storing information, thereby allowing for the regeneration of embeddings with increased precision using available knowledge. Their utility lies in reducing the dimensionality of the output vector, thereby accelerating the system’s performance and increasing its efficiency.

When choosing an embedding model for a specific application, numerous factors and trade-offs require careful consideration. While assessing a potential mannequin’s performance across various benchmarks is crucial, it’s equally important to recognize that larger fashion models generally receive higher ratings. While large fashion models boast impressive capabilities, they often come at the cost of significant inference times, rendering them impractical for applications demanding low latency, as these models typically serve as preprocessing steps within larger pipelines. Bigger AI models and deep learning applications often necessitate the utilization of powerful graphics processing units (GPUs) for seamless execution.

When utilizing a mannequin in a real-time setting, prioritize minimizing latency and then evaluate top-performing styles that meet this critical requirement. When designing a system utilizing an embedding model, it’s prudent to factor in future updates as newer models emerge regularly, allowing for potential performance enhancements through straightforward upgrades rather than manual retraining.

In regards to the writer

As a senior knowledge engineer at Workplace of the Chief Technology Officer, Nick Volynets thrives in the epicenter of DataRobot innovation. With a deep interest in large-scale machine learning, he is passionately exploring the realm of artificial intelligence and its profound impact.