With this configuration, the image sensor can capture photos faster than one built with traditional technology while still delivering high-quality images in low light. Stacked sensors also offer enhanced dynamic range and more accurate colour. As the name suggests, the sensor’s component layers are stacked on top of one another. Samsung’s three-layer stacked image sensor reportedly outperforms Sony’s Exmor RS technology, which is currently used in iPhones.

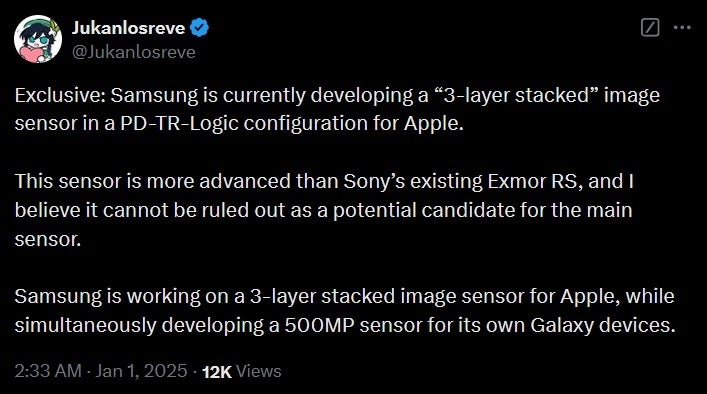

Apple is reportedly poised to end its long-standing image sensor partnership with Sony in favour of Samsung’s technology for the upcoming iPhone 18 models. | Image credit: @Jukanlosreve

The first iPhone to use Sony image sensors was the 2011 iPhone 4S, which had an 8-megapixel rear camera. Before that, OmniVision Technologies, a developer of CMOS image sensors, supplied the camera modules for the iPhone 4 and the image sensors for the rear cameras of the iPhone 3G and iPhone 3GS. The first-generation iPhone had a 2MP camera module supplied by Micron, which lacked basic features such as autofocus and video recording.

The forthcoming iPhone 18 series is also expected to mark a milestone for Apple: it will likely be the first to use an application processor (AP) manufactured by Taiwan Semiconductor Manufacturing Company (TSMC) on its 2nm process. The chips in question could be the A20 and A20 Plus. The iPhone 18 is anticipated to launch in September 2026.