AI-driven drone from the University of Klagenfurt uses an IDS uEye camera for real-time, object-relative navigation, enabling safer, more efficient and more precise inspections.

The inspection of critical infrastructure such as power plants, bridges or industrial complexes is essential to ensure its safety, reliability and long-term functionality. Traditional inspection methods always require people to be deployed in areas that are difficult to access or hazardous. Autonomous mobile robots offer great potential for making inspections more efficient, safer and more accurate. Uncrewed aerial vehicles (UAVs) such as drones in particular have become established as promising platforms, as they can be used flexibly and can reach hard-to-access areas from the air. One of the biggest challenges here is to navigate the drone precisely relative to the objects to be inspected in order to reliably capture high-resolution image data or other sensor data.

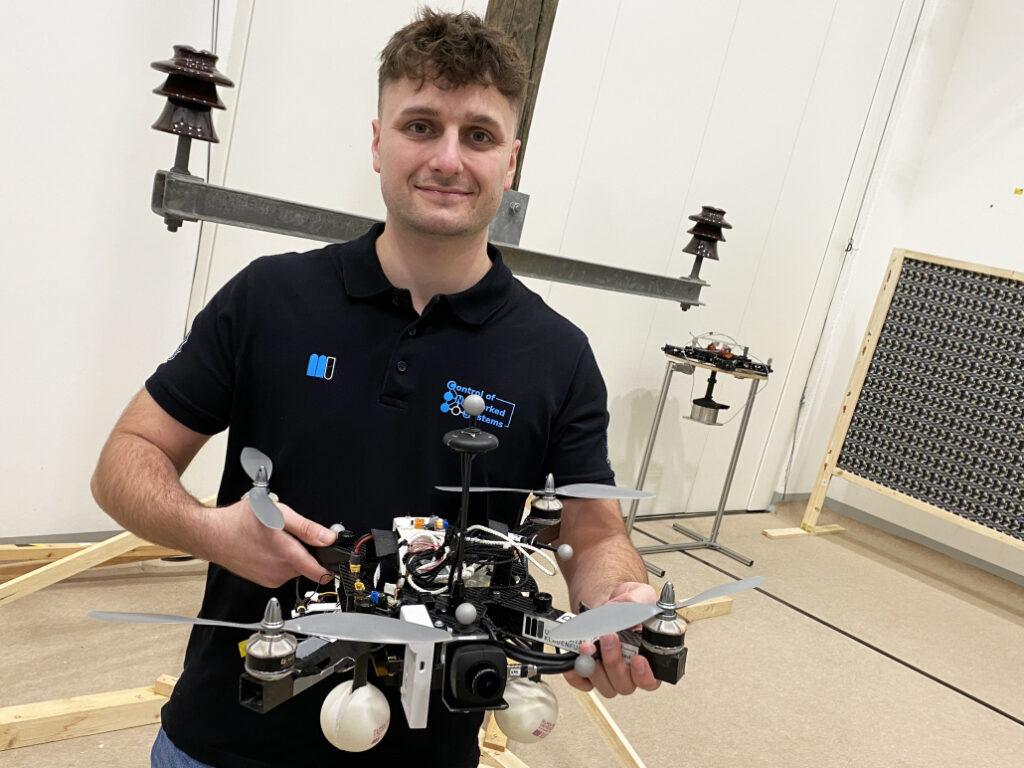

A research team at the University of Klagenfurt has designed a real-time capable drone based on AI-driven object-relative navigation. Also on board: a USB3 Vision industrial camera from the uEye LE family from IDS Imaging Development Systems GmbH.

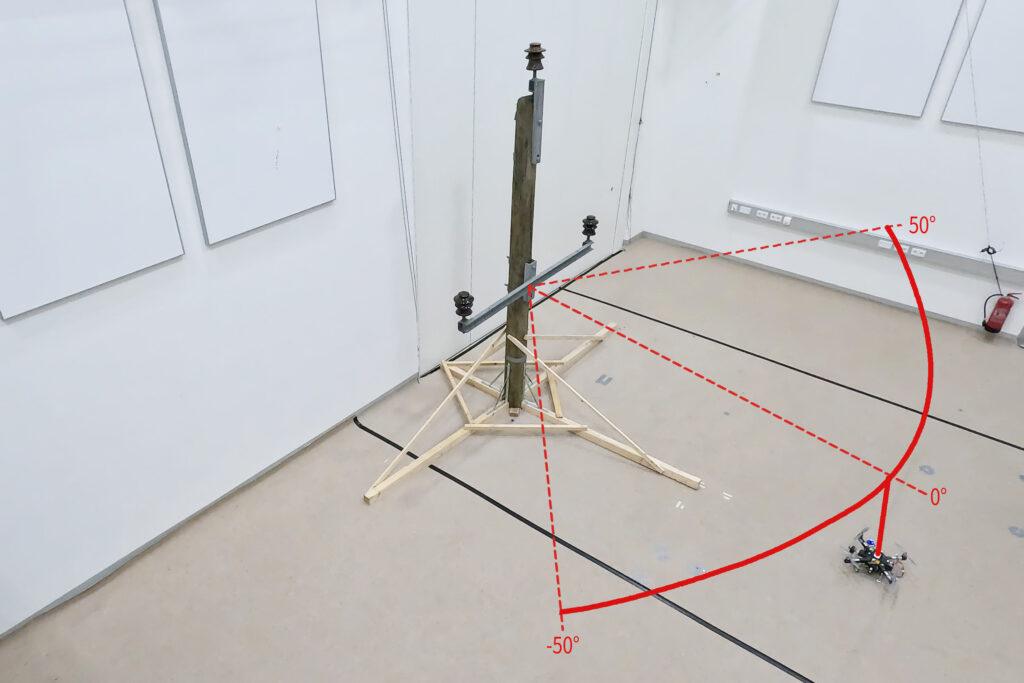

As part of the research project, which was funded by the Austrian Federal Ministry for Climate Action, Environment, Energy, Mobility, Innovation and Technology (BMK), the drone must autonomously recognise what is a power pole and what is an insulator on the power pole. It is to fly around the insulator at a distance of three meters and take pictures. “Precise localisation is important so that the camera recordings can also be compared across multiple inspection flights,” explains Thomas Georg Jantos, PhD student and member of the Control of Networked Systems research group at the University of Klagenfurt. The prerequisite for this is that object-relative navigation must be able to extract so-called semantic information about the objects in question from the raw sensor data captured by the camera. Semantic information makes raw data, in this case the camera images, “understandable” and makes it possible not only to capture the environment, but also to correctly identify and localise relevant objects.
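To make the “fly around the insulator at three meters” task concrete, here is a minimal sketch of how such a viewpoint ring could be generated. It assumes the insulator position is already known in a local metric frame, that the drone orbits on a horizontal circle at the insulator's height, and that 12 evenly spaced viewpoints are wanted; the helper `orbit_waypoints` and these choices are hypothetical, not the project's actual mission planner.

```python
import math


def orbit_waypoints(center, radius=3.0, n=12):
    """Return (x, y, z, yaw) waypoints on a horizontal circle around `center`.

    Hypothetical helper: `center` is the insulator position (x, y, z) in a
    local frame in meters; yaw (radians) turns the camera toward the center.
    """
    cx, cy, cz = center
    waypoints = []
    for k in range(n):
        theta = 2.0 * math.pi * k / n          # angle of the k-th viewpoint on the circle
        x = cx + radius * math.cos(theta)
        y = cy + radius * math.sin(theta)
        yaw = math.atan2(cy - y, cx - x)       # heading from drone toward insulator
        waypoints.append((x, y, cz, yaw))      # same height as the insulator (assumption)
    return waypoints


# Usage: four viewpoints around an insulator assumed to sit 10 m above the origin.
for wp in orbit_waypoints((0.0, 0.0, 10.0), radius=3.0, n=4):
    print(tuple(round(v, 2) for v in wp))
```

Because the viewpoints are defined relative to the object rather than in GNSS coordinates, the same ring can be flown again on a later inspection, which is what makes the recordings comparable.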

In this case, this means that an image pixel is understood not merely as an independent colour value (e.g. an RGB value), but as part of an object, e.g. an insulator. In contrast to conventional GNSS (Global Navigation Satellite System) navigation, this approach provides not only a position in space, but also a precise relative position and orientation with respect to the object to be inspected (e.g. “Drone is located 1.5 m to the left of the upper insulator”).
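A statement such as “1.5 m to the left of the upper insulator” is a pose of the drone expressed in the object's frame. A learned pose estimator typically outputs the opposite, the object's pose in the camera frame, so one standard step is to invert that rigid transform. The sketch below assumes the convention `p_cam = R_co @ p_obj + t_co`; the function name and the example numbers are hypothetical, and which axis counts as “left” depends on how the object frame is defined.

```python
import numpy as np


def camera_in_object_frame(R_co, t_co):
    """Invert an object pose estimated in the camera frame.

    R_co, t_co map object coordinates into camera coordinates:
        p_cam = R_co @ p_obj + t_co
    The return value expresses the camera (drone) pose in the object frame.
    """
    R_oc = R_co.T                 # inverse of a rotation matrix is its transpose
    t_oc = -R_co.T @ t_co         # camera origin expressed in object coordinates
    return R_oc, t_oc


# Hypothetical example: the insulator origin sits 1.5 m along the camera's +x
# axis, with object axes aligned to camera axes (identity rotation).
R_co = np.eye(3)
t_co = np.array([1.5, 0.0, 0.0])
R_oc, t_oc = camera_in_object_frame(R_co, t_co)
print(t_oc)  # approximately (-1.5, 0, 0): the camera lies 1.5 m along the object's -x axis
```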

The key requirement is that image processing and data interpretation must be virtually latency-free, so that the drone can adapt its navigation and interaction to the specific conditions and requirements of the inspection task in real time.
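Why latency matters can be shown with simple arithmetic: while one image is still being processed, the drone keeps moving, and that motion is not yet reflected in the estimate. The sketch assumes a hypothetical flight speed of 1 m/s and a few illustrative latencies (20 ms corresponds to one frame period at 50 fps); it ignores the IMU-based prediction that partly compensates for this in practice.

```python
def latency_position_error(speed_mps, latency_s):
    """Distance (m) the drone travels while one pose estimate is still being computed."""
    return speed_mps * latency_s


# Hypothetical speed of 1 m/s; illustrative processing latencies.
for latency_ms in (20, 100, 250):
    err = latency_position_error(speed_mps=1.0, latency_s=latency_ms / 1000)
    print(f"{latency_ms:>4} ms latency -> {err * 100:.0f} cm of unaccounted motion")
```

At a stand-off distance of only three meters from the insulator, errors in the tens of centimetres quickly become significant, which is why the processing chain has to keep up with the camera.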

Semantic information through intelligent image processing

Object recognition, object classification and object pose estimation are performed by AI-based image processing. “In contrast to GNSS-based drone inspection approaches, our AI with its semantic information enables the infrastructure to be inspected from defined, reproducible viewpoints,” explains Thomas Jantos. “In addition, the chosen approach does not suffer from the usual GNSS problems such as multipath and shadowing caused by large infrastructures or valleys, which can lead to signal degradation and thus to safety risks.”

How much AI fits into a small quadcopter?

The hardware setup consists of a TWINs Science Copter platform equipped with a Pixhawk PX4 autopilot, an NVIDIA Jetson AGX Orin 64GB Developer Kit as on-board computer and a USB3 Vision industrial camera from IDS. “The challenge is to get the artificial intelligence onto the small helicopters. The computers on the drone are still too slow compared to the computers used to train the AI. Despite the first successful tests, this is still the subject of ongoing research,” says Thomas Jantos, describing the problem of further optimising the high-performance AI model for use on the on-board computer.

The camera, by contrast, delivers excellent input data right away, as tests in the university's own drone hall show. When selecting a suitable camera model, it was not just a question of meeting requirements for speed, size, protection class and, last but not least, price. “The camera's capabilities are essential for the inspection system's innovative AI-based navigation algorithm,” says Thomas Jantos. He opted for the U3-3276LE C-HQ, a space-saving and cost-effective project camera from the uEye LE family. Its Sony Pregius IMX265 sensor is probably the best CMOS image sensor in the 3 MP class and delivers a resolution of 3.19 megapixels (2064 x 1544 px) at frame rates of up to 58.0 fps. Decisive for the sensor's performance is its global shutter (1/1.8″ optical format), which, unlike a rolling shutter, does not produce ‘distorted’ images at these short exposure times. “To ensure a safe and robust inspection flight, high image quality and frame rates are essential,” Thomas Jantos emphasises. As a navigation camera, the uEye LE provides the embedded AI with the image data the on-board computer needs to calculate the relative position and orientation with respect to the object to be inspected. Based on this information, the drone can correct its pose in real time.
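The advantage of a global shutter can be quantified with a simple geometric model: a rolling shutter exposes row after row, so anything moving across the image during the readout appears skewed between the first and last row, whereas a global shutter exposes all rows at the same instant. The sketch below assumes a hypothetical image motion of 500 px/s and a hypothetical 15 ms rolling-shutter readout span; it models only this skew, not motion blur, which depends on exposure time for both shutter types.

```python
def rolling_shutter_skew_px(image_speed_px_s, readout_time_s):
    """Horizontal skew (px) between the first and last sensor row.

    A rolling shutter starts exposing each row slightly later than the one
    above it; if the scene moves across the image during the readout, the
    last row is shifted relative to the first. A global shutter exposes all
    rows at the same instant, so its readout span here is zero.
    """
    return image_speed_px_s * readout_time_s


# Hypothetical numbers: scene content moving at 500 px/s across the image.
print(rolling_shutter_skew_px(500.0, 0.015))   # rolling shutter, assumed 15 ms readout span
print(rolling_shutter_skew_px(500.0, 0.0))     # global shutter: no skew
```

A few pixels of skew shift exactly the image features a pose estimator relies on, so removing it at the sensor level avoids having to model it in software.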

The IDS camera is connected to the on-board computer via USB3. “With the help of the IDS peak SDK, we can integrate the camera and its functions very easily into ROS (Robot Operating System) and thus into our drone,” explains Thomas Jantos. IDS peak also enables efficient raw image processing and simple adjustment of recording parameters such as auto exposure, auto white balance, auto gain and image downsampling.
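Whether a stream fits comfortably over USB3 is a matter of simple arithmetic. The sketch compares the full sensor resolution at its maximum frame rate with the 1280 x 960 px, 50 fps stream the researchers use for navigation. It assumes 8 bits per pixel (raw, uncompressed) and ignores protocol overhead; for comparison, USB 3.0 signals at a nominal 5 Gbit/s, about 500 MB/s after 8b/10b line coding.

```python
def data_rate_mb_s(width, height, fps, bits_per_pixel=8):
    """Uncompressed image data rate in megabytes per second (1 MB = 10**6 bytes)."""
    return width * height * fps * bits_per_pixel / 8 / 1e6


full = data_rate_mb_s(2064, 1544, 58.0)   # full sensor resolution, max frame rate
nav = data_rate_mb_s(1280, 960, 50.0)     # downsampled stream used for navigation
print(f"full resolution: {full:.1f} MB/s")
print(f"1280 x 960 @ 50 fps: {nav:.1f} MB/s")
```

Downsampling to 1280 x 960 px cuts the data volume roughly threefold, which relieves not only the link but, more importantly, the on-board computer that has to run the AI model on every frame.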

To ensure a high level of autonomy, control, mission management, safety monitoring and data recording, the researchers run the source-available CNS Flight Stack on the on-board computer. The CNS Flight Stack comprises software modules for navigation, sensor fusion and control and enables the autonomous execution of reproducible and customisable missions. “The modularity of the CNS Flight Stack and the ROS interfaces allow us to seamlessly integrate our sensors and the AI-based ‘state estimator’ for position detection into the entire stack and thus realise autonomous UAV flights. The functionality of our approach is being analysed and developed using the example of an inspection flight around a power pole in the drone hall at the University of Klagenfurt,” explains Thomas Jantos.

Precise, autonomous alignment through sensor fusion

The high-frequency signals used to control the drone come from the IMU (Inertial Measurement Unit). Sensor fusion with camera data, LIDAR or GNSS enables real-time navigation and stabilisation of the drone, for example for position corrections or precise alignment with inspection objects. For the Klagenfurt drone, the PX4's IMU serves as the dynamic model in an EKF (Extended Kalman Filter): based on the last known position, velocity and attitude, the EKF predicts where the drone should be now. New measurements (e.g. from the IMU, GNSS or camera) are recorded at up to 200 Hz and incorporated into the state estimate.
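The prediction step described above can be sketched with a deliberately simplified model: a single axis with state [position, velocity], where the IMU's measured acceleration drives the propagation. This is a linear Kalman filter prediction, the special case an EKF reduces to when the model is linear; the real filter on the drone also estimates attitude in 3D, which is not shown. The function name, the noise value and the 0.2 m/s² test input are assumptions for illustration.

```python
import numpy as np


def imu_predict(x, P, accel, dt, accel_noise_std=0.5):
    """One prediction step of a 1D position/velocity filter driven by IMU acceleration.

    x = [position (m), velocity (m/s)], P = 2x2 covariance.
    The measured acceleration acts as a control input (IMU as process model).
    """
    F = np.array([[1.0, dt],
                  [0.0, 1.0]])            # kinematics over one time step dt
    B = np.array([0.5 * dt**2, dt])       # how acceleration enters position and velocity
    x_pred = F @ x + B * accel
    Q = np.outer(B, B) * accel_noise_std**2   # accelerometer noise mapped through B (assumed value)
    P_pred = F @ P @ F.T + Q              # uncertainty grows with every prediction
    return x_pred, P_pred


# Propagate for one second at 200 Hz with a constant 0.2 m/s^2 acceleration.
x = np.array([0.0, 0.0])
P = np.eye(2) * 0.01
for _ in range(200):
    x, P = imu_predict(x, P, accel=0.2, dt=1 / 200)
print(x)  # position ≈ 0.1 m, velocity ≈ 0.2 m/s; P has grown
```

Without corrections, the covariance `P` keeps growing; that is what the camera-based measurements are for.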

The camera captures raw images at 50 fps with an image size of 1280 x 960 px. “That is the maximum frame rate we can achieve with our AI model on the drone's on-board computer,” explains Thomas Jantos. When the camera is started, automatic white balance and gain adjustment are each performed once, while automatic exposure control remains switched off. The EKF compares prediction and measurement and corrects the estimate accordingly. This keeps the drone stable and allows it to hold its position autonomously with high precision.
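The comparison of prediction and measurement corresponds to the Kalman update step. The sketch continues the simplified single-axis model (state [position, velocity]) and assumes the camera pipeline yields a position measurement with a known standard deviation; `camera_update` and all numbers are hypothetical. The gain `K` weighs the prediction against the measurement according to their uncertainties.

```python
import numpy as np


def camera_update(x, P, z, meas_std):
    """Kalman measurement update with a camera-derived position z (m)."""
    H = np.array([[1.0, 0.0]])           # the camera observes position only
    R = np.array([[meas_std**2]])        # measurement noise covariance
    y = np.array([z]) - H @ x            # innovation: measurement minus prediction
    S = H @ P @ H.T + R                  # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)       # Kalman gain
    x_new = x + K @ y                    # corrected state
    P_new = (np.eye(2) - K @ H) @ P      # reduced uncertainty
    return x_new, P_new


x_pred = np.array([1.00, 0.2])           # predicted position (m) and velocity (m/s)
P_pred = np.diag([0.04, 0.01])           # predicted covariance
x_upd, P_upd = camera_update(x_pred, P_pred, z=1.10, meas_std=0.2)
print(x_upd, P_upd[0, 0])
```

Here prediction and measurement are equally uncertain, so the estimate moves halfway toward the measurement (1.05 m) and the position variance halves. With a diagonal `P_pred` the velocity is left unchanged; in a running filter the prediction step builds up position-velocity correlations, so position fixes from the camera correct the velocity too.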

Outlook

“In research on mobile robots, industrial cameras are needed for a wide range of applications and algorithms. It is important that these cameras are robust, compact, lightweight and fast and have a high resolution. On-device pre-processing (e.g. binning) is also very important, as it saves valuable computing time and resources on the mobile robot,” emphasises Thomas Jantos.
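What binning does can be illustrated in software: 2x2 binning combines each block of four neighbouring pixels into one, so the image has a quarter of the pixels to transfer and process. The NumPy sketch below averages the blocks; it is only a host-side analogue for illustration (the name `bin2x2` is hypothetical), since the point of on-device binning is that the camera does this before the data ever reaches the robot's computer, and sensors may sum rather than average.

```python
import numpy as np


def bin2x2(img):
    """Average non-overlapping 2x2 pixel blocks (software analogue of 2x2 binning)."""
    h, w = img.shape
    h2, w2 = h - h % 2, w - w % 2            # drop an odd trailing row/column
    blocks = img[:h2, :w2].reshape(h2 // 2, 2, w2 // 2, 2)
    return blocks.mean(axis=(1, 3))          # mean over each 2x2 block


frame = np.arange(16, dtype=np.float32).reshape(4, 4)
print(bin2x2(frame))                         # 2x2 result from a 4x4 input
print(bin2x2(np.zeros((1544, 2064))).shape)  # full sensor frame -> quarter of the pixels
```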

With these features, IDS cameras are helping to set a new standard for the autonomous inspection of critical infrastructure in this promising research approach, which significantly increases safety, efficiency and data quality.

The Control of Networked Systems (CNS) research group is part of the Institute of Smart Systems Technologies. It teaches in the English-language Bachelor's and Master's programmes “Robotics and AI” and “Information and Communications Engineering (ICE)” at the University of Klagenfurt. The group's research focuses on control engineering, state estimation, path and motion planning, modelling of dynamic systems, numerical simulations and the automation of mobile robots in a swarm.

Model used: USB3 Vision industrial camera U3-3276LE Rev.1.2

Camera family: uEye LE

Image rights: Alpen-Adria-Universität (aau) Klagenfurt

© 2025 IDS Imaging Development Systems GmbH