On 21 July, the competition reached its thrilling finale. In this last article of our series, we bring you a flavour of the action from the final day. If you missed our previous updates, you can find them at this link.

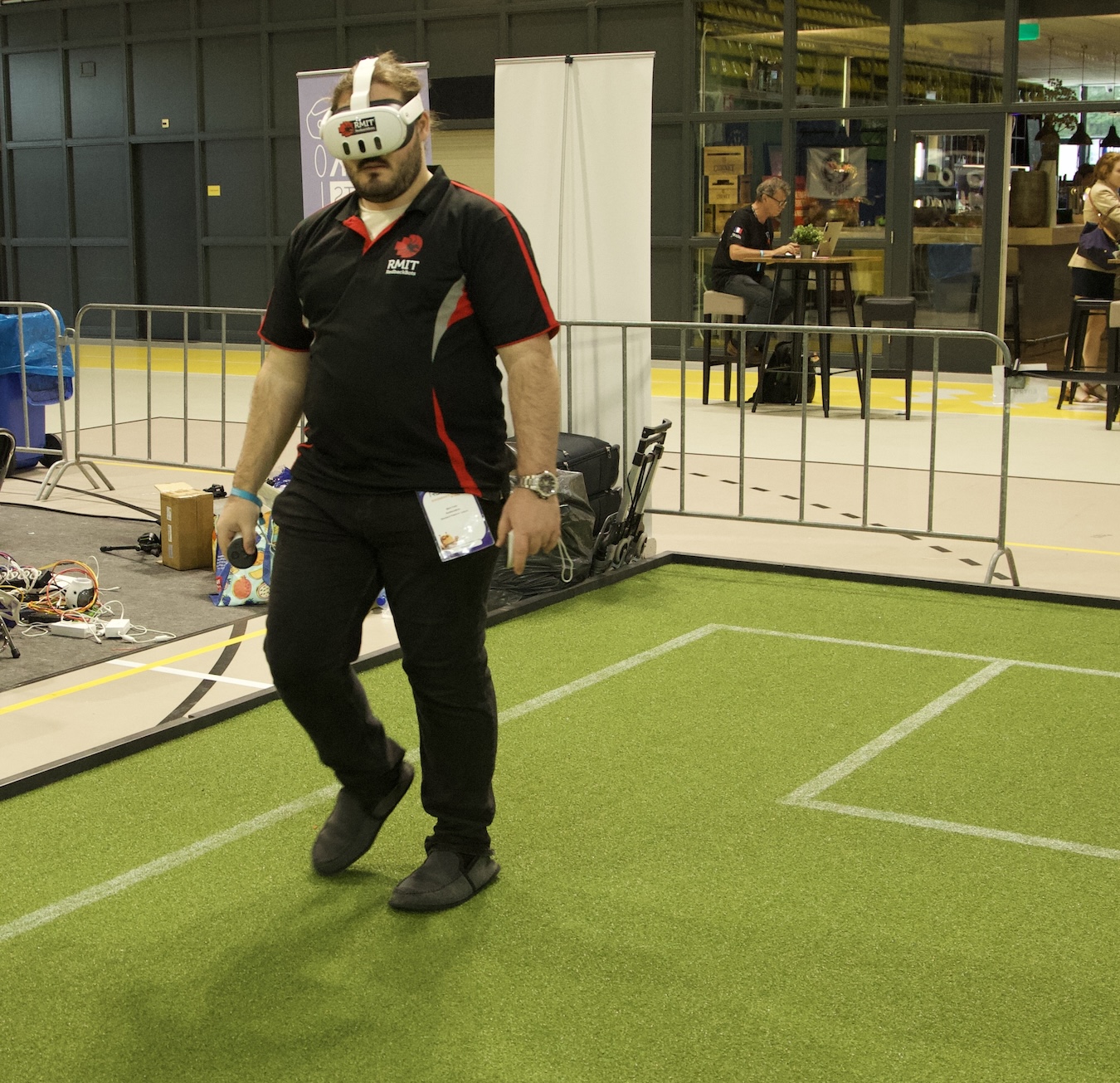

My first stop of the morning was the Standard Platform League, where Dr. Timothy Wiley and Tom Ellis from Team RedbackBots at RMIT University in Melbourne, Australia, demonstrated a development unique to their team: an augmented reality (AR) system designed to make in-game events easier to understand and more transparent.

Timothy, the team's academic leader, explained: "This grew out of student proposals last year for a contribution to the league: an AR visualization of the league's on-screen game display. That display is software shown on TV screens to the audience and the referees, giving the robots' positions, game statistics and the location of the ball. We built an AR system so that this information could be overlaid on the field itself. The AR lets us see and check all of this information live, on the field, as the robots move."

The team demonstrated the system to the league at this competition and received an enthusiastic response. In fact, while the AR system was being tested during a game, one team discovered a bug in their software. Tom said they had received many ideas and suggestions from other teams for future development.

This may be one of the first AR systems to be trialled across the leagues, and it is the first in the Standard Platform League. I was lucky enough to get a demo from Tom earlier, and it definitely enhanced my viewing experience. It will be very interesting to see how the system develops.

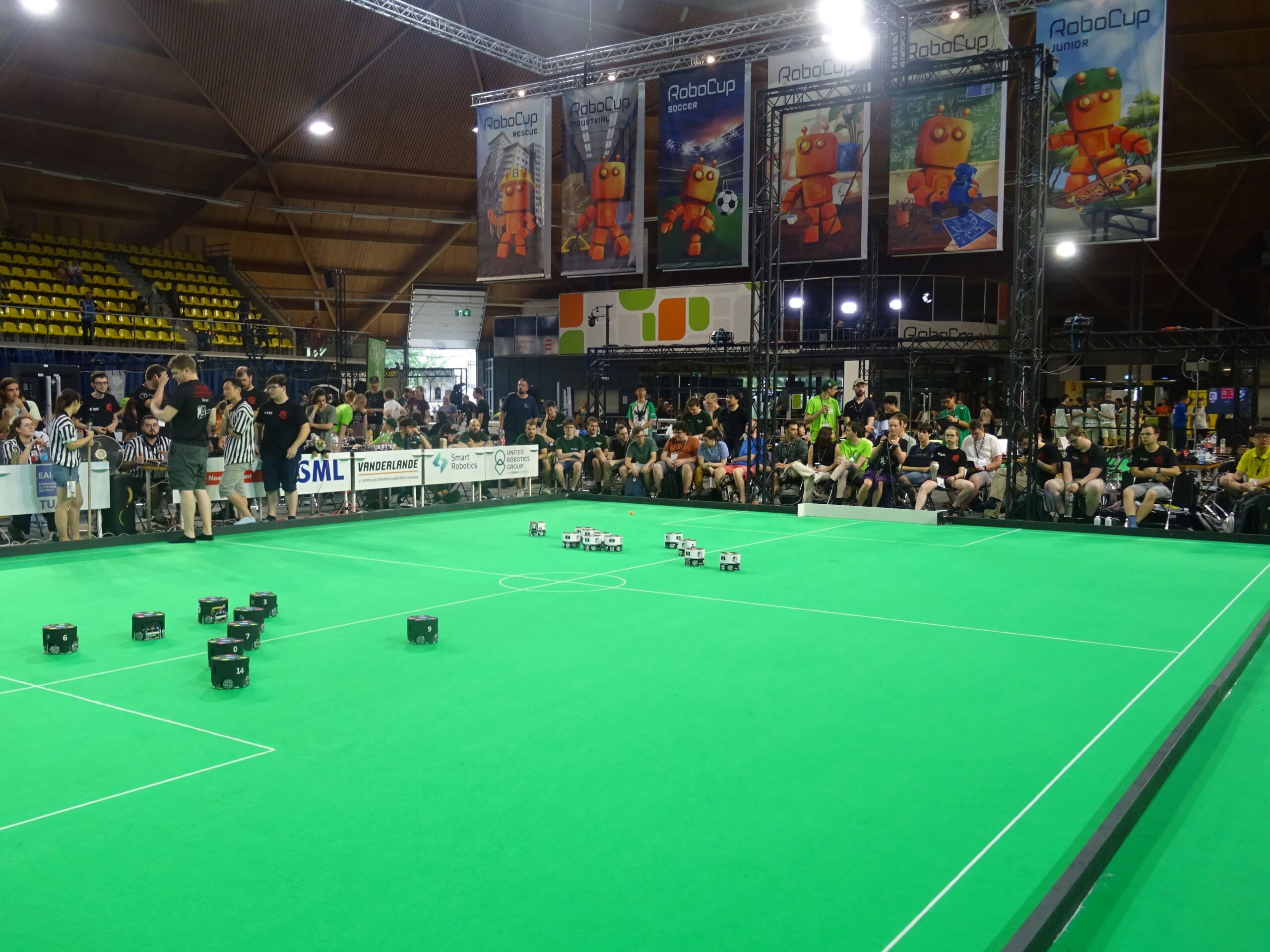

I then made my way across the main soccer area to the RoboCupJunior zone, where Rui Baptista, a member of the Executive Committee, gave me a tour and introduced me to several teams using machine learning models to assist their robots. RoboCupJunior is an international competition for students aged 13 to 19, with three leagues: Soccer, Rescue and OnStage.

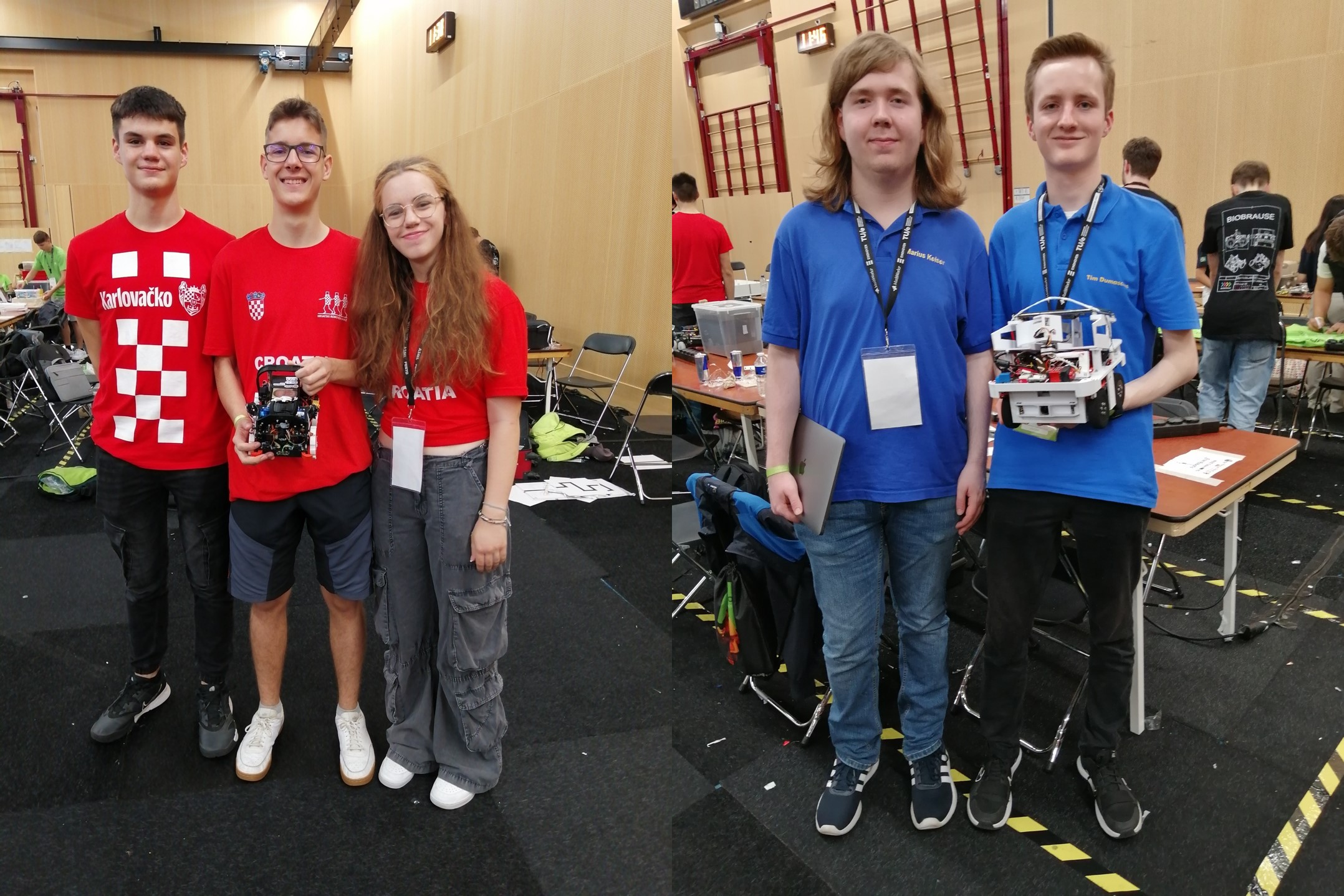

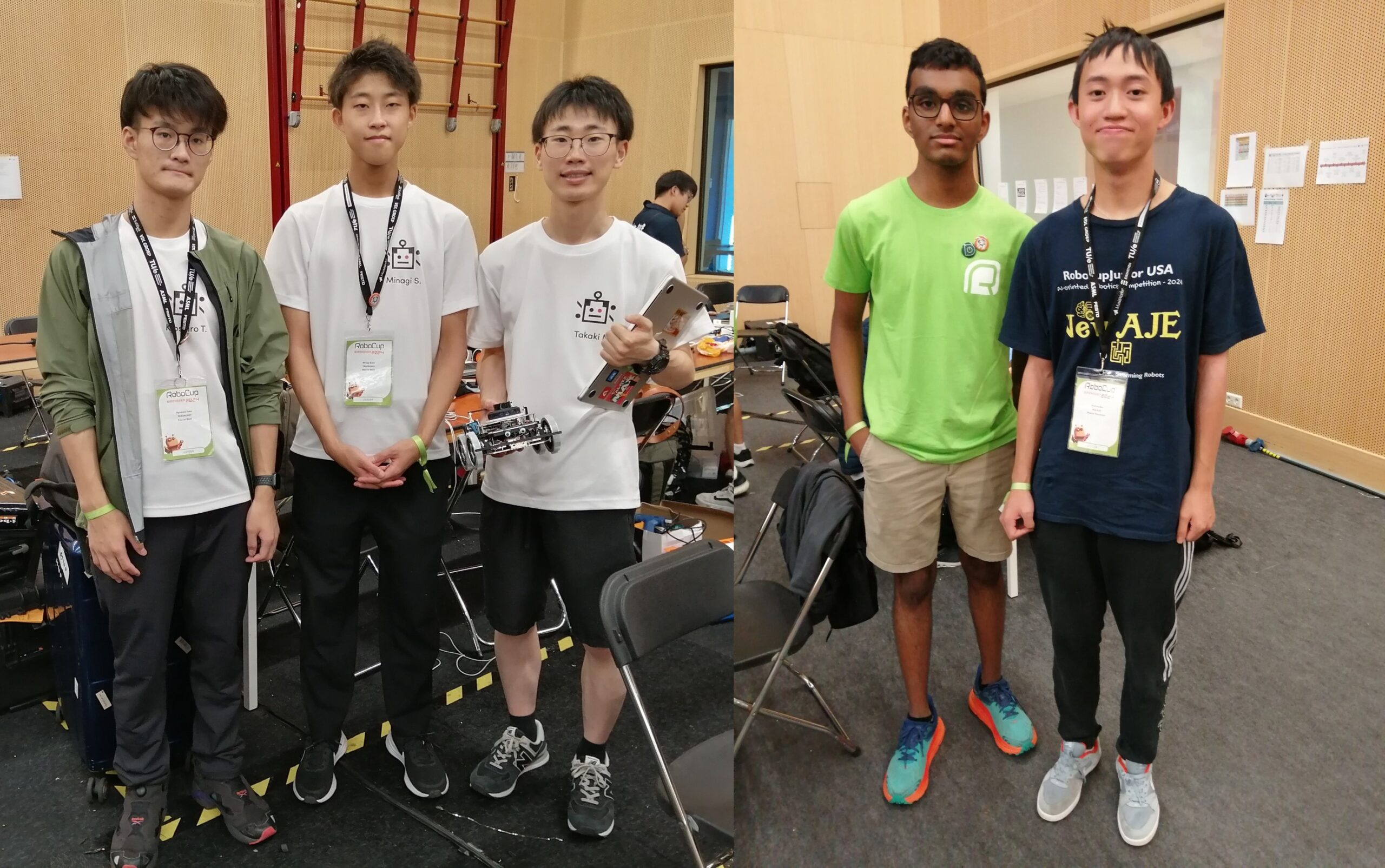

First, I met four teams from the Rescue League. Here, robots must identify "victims" in simulated disaster scenarios, with tasks ranging in complexity from following a line on a flat surface to negotiating obstacles on uneven terrain. The league has three strands: Rescue Line, where robots follow a black line that leads them to a victim; Rescue Maze, where robots navigate a maze to find and identify victims; and Rescue Simulation, a simulated version of the maze competition.

Team Skoll’s Gate Library, competing in Rescue Line, used a YOLOv8 deep learning model to detect victims in the evacuation zone. They trained the network themselves, using around 5,000 images. Team Overengineering 2, also competing in Rescue Line, used YOLOv8 networks for two components of their system. The first model identifies victims in the evacuation zone and pinpoints structural weaknesses. The second model runs continuously along the course, allowing the robot to recognize when the black line changes to a silver line, which marks the entrance to the evacuation zone.

Team Tanorobo! were competing in the maze competition. They used a machine learning model for victim detection, training it on 3,000 images for each type of victim (in the maze, the victim types are denoted by different letters). They also took photos of walls and obstacles to avoid misclassification.

Team New Age were taking part in the simulation competition. They used a graphical user interface to help develop their machine learning model and to debug their navigation algorithms visually. The team uses three navigation algorithms with different computational costs, switching between them depending on the robot's location and the complexity of the maze at that point.
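The New Age team's algorithms were not described beyond the fact that they switch between three navigation algorithms of differing computational cost according to location and maze complexity. Purely as an illustrative sketch of that kind of switching, the Python below scores how complex the maze looks around the robot (here, hypothetically, by counting nearby junction cells) and picks one of three hypothetical planners. The planner names, the junction-count measure and the thresholds are all assumptions, not the team's actual design.

```python
# Hypothetical sketch: pick a navigation strategy by local maze complexity.
# Strategy names, the junction-count measure and thresholds are assumptions.

# Ordered from cheapest to most computationally expensive (hypothetical).
CHEAP, MEDIUM, EXPENSIVE = "wall_following", "bfs", "a_star_full_map"

NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def local_complexity(grid: list[str], pos: tuple[int, int], radius: int = 2) -> int:
    """Count junction cells (open cells with 3+ open neighbours) in a square
    window of `radius` cells around pos. In `grid`, '#' is a wall, '.' is open."""
    rows, cols = len(grid), len(grid[0])
    r0, c0 = pos
    junctions = 0
    for r in range(max(0, r0 - radius), min(rows, r0 + radius + 1)):
        for c in range(max(0, c0 - radius), min(cols, c0 + radius + 1)):
            if grid[r][c] != ".":
                continue
            open_neighbours = sum(
                1
                for dr, dc in NEIGHBOURS
                if 0 <= r + dr < rows
                and 0 <= c + dc < cols
                and grid[r + dr][c + dc] == "."
            )
            if open_neighbours >= 3:
                junctions += 1
    return junctions


def choose_planner(grid: list[str], pos: tuple[int, int], low: int = 1, high: int = 3) -> str:
    """Use the cheap strategy in simple corridors and escalate to more
    expensive ones as the surrounding maze gets more complex."""
    score = local_complexity(grid, pos)
    if score < low:
        return CHEAP
    if score < high:
        return MEDIUM
    return EXPENSIVE


corridor = ["#####", "#...#", "#####"]
t_junction = ["#####", "#...#", "##.##", "##.##", "#####"]
open_room = ["#######", "#.....#", "#.....#", "#.....#", "#######"]

print(choose_planner(corridor, (1, 2)))    # wall_following
print(choose_planner(t_junction, (2, 2)))  # bfs
print(choose_planner(open_room, (2, 3)))   # a_star_full_map
```

The idea is that a cheap reactive strategy is enough in a plain corridor, while a full-map search only pays for itself where many branches meet; a real robot would likely also need some hysteresis so it does not flip between planners on every step.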

I also met two teams from the OnStage league. Team Medics based their performance on a medical scenario that incorporated two AI components: voice recognition, so that the robot could communicate with "patients", and image recognition to classify X-ray images. Team Jam Session's robot reads American Sign Language (ASL) signs and converts them into piano notes to play a melody. The team used the MediaPipe detection algorithm to find different points on the hand, and random forest classifiers to determine which sign was being displayed.

My next stop was the humanoid league, where the final match was under way. The area was packed with spectators eager to watch.

In the Middle Size League final, Tech United Eindhoven beat BigHeroX 6-1. You can watch the livestream of the final day's action.

The last match of the event saw the Middle Size League champions, Tech United Eindhoven, take on a team of five RoboCup Trustees. The humans won 5-2, their passing and movement proving too much for Tech United.


AIhub

is a non-profit organization dedicated to bridging the gap between the artificial intelligence community and the general public through the provision of free, premium quality information on AI.

Lucy Smith

is Managing Editor for AIhub.