Introduction

Word embedding is a method used to map the words of a vocabulary to dense vectors of real numbers in a high-dimensional space, where semantically similar words are mapped to nearby points. Representing words in this vector space helps algorithms achieve better performance in natural language processing tasks, because similar words are grouped together. For example, we expect "cats" and "dogs" to be mapped to nearby points in the embedding space, since both are animals, mammals, pets, and so on.

In this tutorial we will implement the skip-gram model created by Mikolov et al. in R using the keras package. The skip-gram model is a flavor of word2vec, a family of computationally efficient predictive models for learning word embeddings from raw text. We won't go into the theoretical details of embeddings and the skip-gram model; for more detail, see the paper linked above. TensorFlow's tutorial on vector representations of words also covers these details, as do many other resources.

There are other ways to create vector representations of words. For example, GloVe embeddings are implemented in the text2vec package by Dmitriy Selivanov, and Julia Silge's blog post describes a tidy approach.

Getting the Data

We will use the Amazon Fine Foods Reviews dataset, which consists of reviews of fine foods from Amazon. The data span a period of more than 10 years, including all ~500,000 reviews up to October 2012. Reviews include product and user information, ratings, and narrative text.

The dataset (~116MB) needs to be downloaded first.

Now we're going to load the plain text reviews into R.

Let's take a look at some of the reviews in the dataset.

[1] "I have bought several of the Vitality canned dog food products and have found them all to be of good quality..."

[2] "Product arrived labeled as Jumbo Salted Peanuts...the peanuts were actually small sized unsalted..."

Preprocessing

We'll begin with some text preprocessing using a Keras text_tokenizer(). The tokenizer will be responsible for transforming each review into a sequence of integer tokens (which will subsequently be used as input to the skip-gram model).

Note that the tokenizer object is modified in place by the call to fit_text_tokenizer(). An integer token will be assigned to each of the 20,000 most common words (all other words will be assigned to token 0).
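To make the tokenizer's behaviour concrete, here is a minimal plain-Python sketch of frequency-based tokenization as described above (the post itself uses R and keras): the `num_words` most frequent words get ids 1..`num_words`, and every other word maps to 0. The helper names `fit_tokenizer` and `texts_to_sequences` are hypothetical, and the regex word splitting is an assumption; the real Keras tokenizer may differ in details such as punctuation filtering and how rare words are handled.

```python
import re
from collections import Counter

def _words(text):
    # Assumed word splitting: lowercase, keep letters, digits and apostrophes.
    return re.findall(r"[a-z0-9']+", text.lower())

def fit_tokenizer(texts, num_words):
    """Hypothetical helper: map the num_words most frequent words to ids 1..num_words."""
    counts = Counter()
    for text in texts:
        counts.update(_words(text))
    # Id 0 is reserved for every word outside the top num_words.
    return {word: i + 1 for i, (word, _) in enumerate(counts.most_common(num_words))}

def texts_to_sequences(texts, word_index):
    """Hypothetical helper: turn each text into a list of integer tokens."""
    return [[word_index.get(w, 0) for w in _words(t)] for t in texts]

if __name__ == "__main__":
    reviews = ["The cat sat.", "The dog sat.", "The cat ran!"]
    index = fit_tokenizer(reviews, num_words=3)
    print(index)
    print(texts_to_sequences(reviews, index))
```

Reserving 0 for out-of-vocabulary words keeps the vocabulary, and therefore the embedding matrix, at a fixed size no matter how many rare words the reviews contain.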

Skip-Gram Model

In the skip-gram model, we will use each word as input to a log-linear classifier with a projection layer, and then predict words within a certain range before and after this word. It would be very computationally expensive to output a probability distribution over the entire vocabulary for each target word we input into the model. Instead, we are going to use negative sampling: we will sample some words that don't appear in the context and train a binary classifier to predict whether the context word we passed in truly comes from the context or not.

In more practical terms, for the skip-gram model we will input a 1D integer vector of target word tokens and a 1D integer vector of sampled context word tokens. The model should predict 1 if the sampled word really appeared in the context and 0 if it didn't.
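The pairs-and-labels idea can be sketched in plain Python. This is a hypothetical stand-in written for illustration, not the keras `skipgrams()` function: every word within `window_size` positions of a target gives a positive pair (label 1), and roughly `negative_samples` random vocabulary words per positive pair are added as negatives (label 0). Drawing negatives uniformly from ids 1..`vocabulary_size` - 1 is an assumption; it means a "negative" word can occasionally be a true context word, which is usually tolerated.

```python
import random

def skipgram_pairs(sequence, vocabulary_size, window_size=2, negative_samples=1.0, seed=None):
    """Hypothetical helper: build (target, context) pairs with 0/1 labels.

    Token 0 is treated as "not in the vocabulary" and never used.
    """
    rng = random.Random(seed)
    couples, labels = [], []
    for i, target in enumerate(sequence):
        if target == 0:
            continue
        start = max(0, i - window_size)
        stop = min(len(sequence), i + window_size + 1)
        for j in range(start, stop):
            if j != i and sequence[j] != 0:
                couples.append((target, sequence[j]))  # word really in the context
                labels.append(1)
    # Negative sampling: pair targets with random vocabulary words.
    targets = [t for t, _ in couples]
    for _ in range(int(len(targets) * negative_samples)):
        couples.append((rng.choice(targets), rng.randint(1, vocabulary_size - 1)))
        labels.append(0)
    # Shuffle so positives and negatives are interleaved within a batch.
    order = list(range(len(couples)))
    rng.shuffle(order)
    return [couples[k] for k in order], [labels[k] for k in order]
```

For the sequence `[1, 2, 3]` with `window_size=1`, the positive pairs are (1, 2), (2, 1), (2, 3) and (3, 2), and with `negative_samples=1.0` four random negatives are added.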

We will now define a generator function to yield batches for model training.

A generator function is a function that returns a different value each time it is called (generator functions are often used to provide streaming or dynamic data for training models). Our generator function will receive a vector of texts, a tokenizer, and the arguments for the skip-gram: the size of the window around each target word we examine and how many negative samples we want to draw for each target word.
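In Python, a generator expresses the same idea directly: each `next()` call produces a fresh batch. The sketch below is hypothetical and only mirrors the description above, assuming the texts are already tokenized and that each batch comes from one review; `pair_fn` stands in for any function that returns `(couples, labels)` for one token sequence, such as a skip-gram pair builder with the window size and negative sampling rate already fixed.

```python
import random

def skipgrams_generator(sequences, pair_fn, seed=None):
    """Hypothetical batch generator: yields ((targets, contexts), labels) forever.

    sequences: list of token-id lists, one per review.
    pair_fn:   maps one token-id list to (couples, labels).
    """
    rng = random.Random(seed)
    while True:
        order = list(range(len(sequences)))
        rng.shuffle(order)  # visit the reviews in a new random order each pass
        produced = False
        for k in order:
            couples, labels = pair_fn(sequences[k])
            if not couples:
                continue  # too short (or all out-of-vocabulary) to give any pair
            produced = True
            targets = [t for t, _ in couples]
            contexts = [c for _, c in couples]
            yield (targets, contexts), labels
        if not produced:
            raise ValueError("no sequence produced any skip-gram pairs")
```

Raising an error when a whole pass yields nothing keeps the generator from looping forever on unusable input.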

Now let's start defining the Keras model. We will use the Keras functional API.

We will first write placeholders for the inputs using the layer_input function.

Now let's define the embedding matrix. The embedding is a matrix with dimensions (vocabulary size, embedding size) that acts as a lookup table for the word vectors.
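As a lookup table, the embedding matrix simply has one row per token id, and looking up a token returns its row. The minimal plain-Python sketch below assumes ids run from 0 to 20,000 (the 20,000 tokenizer words plus token 0), which gives 20,001 rows; with an embedding size of 128 that is 2,560,128 parameters, the count listed for the Embedding layer in the model summary. The helper names and the uniform initialization range are assumptions for illustration.

```python
import random

def init_embedding(num_rows, embedding_size, seed=0):
    """One randomly initialised vector per token id (assumed uniform init)."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.05, 0.05) for _ in range(embedding_size)]
            for _ in range(num_rows)]

def lookup(embedding, token_ids):
    """Row lookup: token id k -> embedding[k]. Target and context words
    would share this same table."""
    return [embedding[k] for k in token_ids]

num_words, embedding_size = 20000, 128
num_rows = num_words + 1            # ids 0..20000
print(num_rows * embedding_size)    # 2560128 parameters
```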

The next step is to define how the target_vector will be related to the context_vector, so that our network outputs 1 when the context word really appeared in the context and 0 otherwise. We want target_vector to be similar to context_vector if they appeared in the same context. A typical measure of similarity is the cosine similarity. Given two vectors $A$ and $B$, the cosine similarity is the Euclidean dot product of $A$ and $B$ normalized by their magnitudes:

$$\cos(A, B) = \frac{A \cdot B}{\lVert A \rVert \, \lVert B \rVert}$$

Since we don't need the similarity to be normalized inside the network, we will only calculate the dot product and then pass it to a dense layer with a sigmoid activation.
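The difference between the cosine similarity and what the network computes can be shown in a few lines of plain Python. `score` below is a hypothetical rendering of the dot-product-plus-dense-layer head: a dense layer with one input and one unit has a single weight and a bias, which is consistent with the 2 parameters listed for the Dense layer in the model summary.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cosine(a, b):
    """Dot product normalised by the vectors' magnitudes."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

def score(target_vec, context_vec, weight, bias):
    """Unnormalised dot product -> dense layer (1 weight + 1 bias) -> sigmoid.

    Returns the predicted probability that the context word really
    appeared near the target word.
    """
    z = weight * dot(target_vec, context_vec) + bias
    return 1.0 / (1.0 + math.exp(-z))
```

Scaling a vector changes its dot products but not its cosine similarities: `[1, 2]` and `[2, 4]` have a dot product of 10 but a cosine similarity of 1.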

Now let's create the model and compile it.
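The text does not spell out the training objective at this point. A natural assumption for 0/1 labels and a sigmoid output is binary cross-entropy, and the hypothetical sketch below shows one plain stochastic-gradient step on a single (target, context, label) example. Plain SGD is a simplification swapped in for illustration; the optimizer used by the R code may differ. The point is the direction of the update: given a positive dense weight, a true pair is pulled towards a larger dot product and a negative pair is pushed towards a smaller one.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def bce(y, p, eps=1e-12):
    """Binary cross-entropy for one example (label y in {0, 1}, prediction p)."""
    p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))

def predict(t, c, w, b):
    """sigmoid(w * dot(t, c) + b): the dot-product model's output."""
    return sigmoid(w * sum(ti * ci for ti, ci in zip(t, c)) + b)

def sgd_step(t, c, w, b, y, lr=0.1):
    """One plain-SGD update of target vector t, context vector c,
    dense weight w and bias b on a single labelled pair."""
    d = sum(ti * ci for ti, ci in zip(t, c))
    g = predict(t, c, w, b) - y  # dLoss/dz for sigmoid + BCE, z = w*d + b
    new_t = [ti - lr * g * w * ci for ti, ci in zip(t, c)]
    new_c = [ci - lr * g * w * ti for ti, ci in zip(t, c)]
    return new_t, new_c, w - lr * g * d, b - lr * g
```

In the model above, target and context vectors are rows of one shared embedding matrix, so an update like this adjusts the rows for both words.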

We can see the full definition of the model by calling summary():

Layer           Output Shape     Param #    Connected to
Input Layer     (None, 1)        0
Input Layer     (None, 1)        0
Embedding       (None, 1, 128)   2560128    Input Layer[0][0], Input Layer[0][0]
Flatten         (None, 128)      0          Embedding[0][0]
Flatten         (None, 128)      0          Embedding[1][0]
Dot             (None, 1)        0          Flatten[0][0], Flatten[0][0]
Dense           (None, 1)        2          Dot[0][0]
Total params: 2,560,130
Trainable params: 2,560,130
Non-trainable params: 0

Model Training

We will fit the model using the fit_generator() function. We need to specify the number of training steps as well as the number of epochs. We will train for 100,000 steps per epoch for 5 epochs. This is quite slow (~1,000 seconds per epoch on modern hardware), but note that you may get reasonable results with just one epoch of training.

Epoch 1/5: 1092s, loss: 0.3749
Epoch 2/5: 1094s, loss: 0.3548
Epoch 3/5: 1053s, loss: 0.3630
Epoch 4/5: 1020s, loss: 0.3737
Epoch 5/5: 1017s, loss: 0.3823

We can now extract the embedding matrix from the model by using the get_weights() function. We also added row.names to our embedding matrix so we can easily find where each word is.

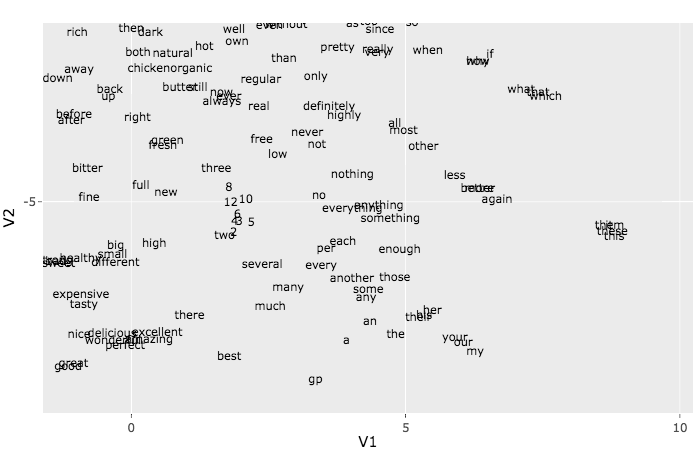

Understanding the Embeddings

We can now find words that are close to each other in the embedding space. We will use the cosine similarity, since the model was trained on the closely related (unnormalized) dot product.
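Finding nearest neighbours then amounts to computing the cosine similarity between one word's vector and every row, and sorting. This hypothetical plain-Python sketch uses a dict from word to vector in place of the R embedding matrix with row names; the helper name `nearest_words` and the toy vectors are made up for illustration.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def nearest_words(word, vectors, n=5):
    """Return the n (word, similarity) pairs with the highest cosine similarity
    to `word`; the query word itself is included, with similarity 1."""
    query = vectors[word]
    scored = [(other, cosine(query, vec)) for other, vec in vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:n]

toy = {"cats": [1.0, 0.1], "dogs": [0.9, 0.2], "car": [0.0, 1.0]}
print(nearest_words("cats", toy, n=2))
```

This is why each list of results below starts with the query word at similarity 1.0000000.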

Words closest to "2": 2 (1.0000000), 4 (0.9830254), 3 (0.9777042), followed by two more words with similarities 0.9765668 and 0.9722549

Words closest to "little": little (1.0000000), bit (0.9501037), few (0.9478287), small (0.9309829), deal (0.9286966)

Words closest to "delicious": delicious (1.0000000), tasty (0.9632145), fantastic (0.9619508), wonderful (0.9617954), yummy (0.9529505)

Words closest to "cats": cats (1.0000000), dogs (0.9844937), kids (0.9743756), cat (0.9676026), dog (0.9624494)

The t-SNE algorithm can be used to visualize the learned embeddings. Because of time constraints, we will only apply it to a subset of the words. To understand more about the t-SNE method, see the article How to Use t-SNE Effectively.

The resulting plot may look like a mess at first glance, but if you zoom into the small groups you will start to see some nice patterns.

Try, for example, to find a group of web-related words such as http, href, and so on. Another group that may be easy to pick out is the pronouns: she, he, her, and so forth.