The financial services sector is undergoing rapid change. Digital banks, which operate entirely online without the need for physical branches, are coming to the forefront. Mobile banking and user-friendly banking applications are becoming increasingly important, giving users flexible access to financial services.

In this context, modern banking can’t be imagined without flexible and reliable software solutions. Software platforms are the “core” of digital banks: they provide uninterrupted real-time transaction processing, ensure the security of customer data, make it simpler to connect new services, and deliver a seamless user experience, among other things.

Digital Banking Market Overview

Statistics confirm the growth of digital banking. According to Statista, the digital banking market in Germany alone has reached a net interest income of $71.33 billion in 2025. With an average annual growth rate of 5.68% from 2025 to 2030, it is projected to reach $94.03 billion by the end of the decade.
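The projection above comes from compounding. The 2025 value is multiplied by (1 + 0.0568) once for each of the five years to 2030. The short check below assumes simple annual compounding, which is how such average growth rates are usually read. It reproduces the figure and also backs out the implied rate from the two endpoints:

```python
def project_value(start: float, annual_rate: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate (CAGR)."""
    return start * (1 + annual_rate) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Back out the constant annual rate that turns `start` into `end`."""
    return (end / start) ** (1 / years) - 1


# Statista figures quoted above, in billions of US dollars
print(f"Projected 2030 value: ${project_value(71.33, 0.0568, 5):.2f}B")
print(f"Implied CAGR 2025-2030: {implied_cagr(71.33, 94.03, 5):.2%}")
```

The computed value, about $94.02 billion, differs from the quoted $94.03 billion only because the published rate is rounded.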

The 11.3% increase in 2025 clearly shows how rapidly the market is developing under the influence of mobile technologies and innovative fintech solutions. This surge isn’t happening in isolation: according to Statista, the global software market has already crossed the $740 billion mark and is steadily climbing.

What Is Banking Software Development?

Banking software development is the process of creating digital solutions that help banks and other financial institutions work faster, more securely, and more conveniently for their customers.

It’s not just about familiar online banking platforms or mobile apps, but also about complex internal systems that manage transactions, analyze data, ensure security, and automate processes.

The main goal of developing banking software is to make financial organizations more efficient, transparent, and customer-focused. Such solutions help banks reduce transaction processing times, improve service quality, increase reliability, and comply with growing regulatory requirements.

In addition, they often serve as a foundation for a wide range of additional services and integrations. These range from microtransactions and various installment or payment guarantee options to automated credit solutions linked to external marketplaces, such as car sales platforms.

Custom software plays a special role here, meaning solutions built for specific tasks and processes. Unlike off-the-shelf products, custom systems can be tailored to a bank’s exact needs, easily integrated with other platforms, and quickly adapted to changing market conditions.

That is why software development in banking has become a strategic direction. It helps financial institutions not just keep up with the times, but stay ahead of the competition.

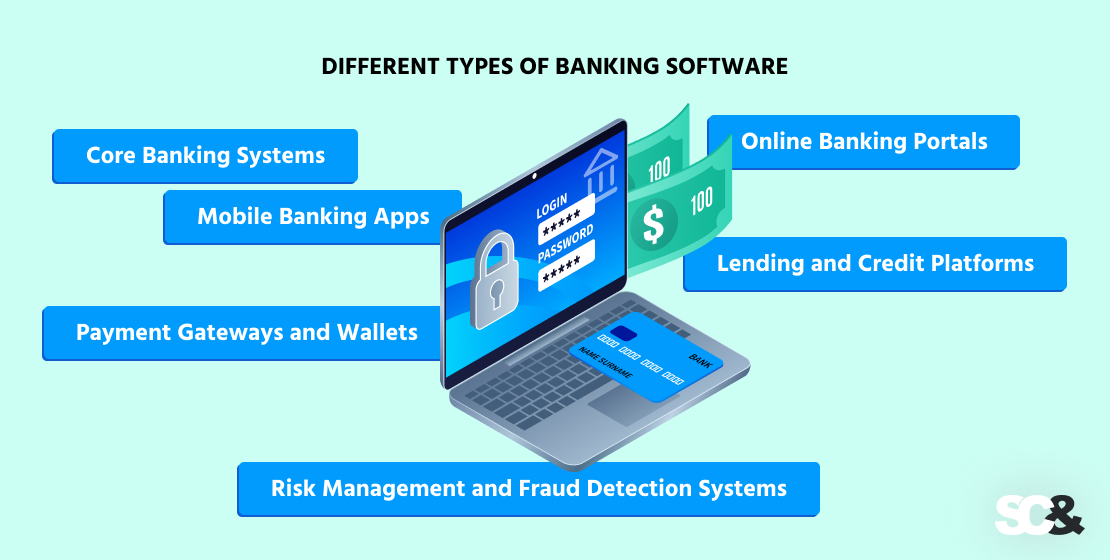

Different Types of Banking Software

Modern banks rely on a wide range of digital tools that work together to deliver seamless financial services. From core systems that power daily operations to customer-facing apps and advanced security modules, each type of software plays a specific role within this ecosystem. Below, we’ll look at the main types of banking software that help create such ecosystems.

Core Banking Systems

Core banking systems are the heart of a bank. They handle key operations such as opening and managing accounts, processing deposits and payments, tracking transactions, and maintaining balances. These systems allow customers to access their funds from any branch or online service. At the same time, they enable the bank to process huge volumes of transactions in real time.
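At its heart, a core ledger is a set of account balances plus an append-only record of the entries that changed them. The minimal in-memory sketch below illustrates opening accounts, posting deposits and transfers, and tracking balances. The class and method names are hypothetical. `Decimal` is used instead of floating point so that amounts such as 0.10 are represented exactly.

```python
from decimal import Decimal


class InsufficientFunds(Exception):
    """Raised when a debit would take an account below zero."""


class Ledger:
    """Toy in-memory ledger: open accounts, post deposits and transfers, track balances.

    Hypothetical illustration only: a real core banking system persists entries
    in a transactional database and handles currencies, holds, fees, and audits.
    """

    def __init__(self) -> None:
        self.balances: dict[str, Decimal] = {}
        # Append-only journal of (account, "credit" | "debit", amount) entries
        self.entries: list[tuple[str, str, Decimal]] = []

    def open_account(self, account_id: str) -> None:
        if account_id in self.balances:
            raise ValueError(f"account {account_id!r} already exists")
        self.balances[account_id] = Decimal("0.00")

    def deposit(self, account_id: str, amount: Decimal) -> None:
        self._require_account(account_id)
        self._require_positive(amount)
        self.balances[account_id] += amount
        self.entries.append((account_id, "credit", amount))

    def transfer(self, source: str, target: str, amount: Decimal) -> None:
        # Validate everything before touching balances, so a failed transfer changes nothing.
        self._require_account(source)
        self._require_account(target)
        self._require_positive(amount)
        if self.balances[source] < amount:
            raise InsufficientFunds(source)
        # Debit and credit are applied together, so the total across accounts is unchanged.
        self.balances[source] -= amount
        self.balances[target] += amount
        self.entries.append((source, "debit", amount))
        self.entries.append((target, "credit", amount))

    def _require_account(self, account_id: str) -> None:
        if account_id not in self.balances:
            raise KeyError(f"unknown account {account_id!r}")

    @staticmethod
    def _require_positive(amount: Decimal) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")


ledger = Ledger()
ledger.open_account("alice")
ledger.open_account("bob")
ledger.deposit("alice", Decimal("100.00"))
ledger.transfer("alice", "bob", Decimal("30.50"))
print(ledger.balances)
```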

Mobile Banking Apps

Mobile banking apps have become an everyday tool for millions of users. They allow customers to pay bills, transfer money, apply for loans, and manage their finances directly from their smartphones. For banks, these apps are more than just a convenient service channel: they help boost customer loyalty and enhance the customer experience.

Online Banking Portals

Online banking portals offer functionality similar to mobile banking apps but are designed for browser access. These are secure and reliable web platforms where customers can manage their accounts, set up automatic payments, download statements, and use additional financial services.
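Automatic payments such as standing orders need a rule for dates that don’t exist in every month. The sketch below generates monthly execution dates. As an assumed policy, it moves the 29th–31st to the last day of shorter months. Real systems also shift dates around weekends and bank holidays, which this sketch omits. The function name is hypothetical.

```python
import calendar
from datetime import date


def monthly_schedule(start: date, count: int) -> list[date]:
    """Return `count` monthly execution dates for a standing order.

    If the start day does not exist in a later month (e.g. the 31st),
    the date is clamped to that month's last day.
    """
    dates = []
    for i in range(count):
        month_index = start.month - 1 + i          # months since January of the start year
        year = start.year + month_index // 12
        month = month_index % 12 + 1
        last_day = calendar.monthrange(year, month)[1]
        dates.append(date(year, month, min(start.day, last_day)))
    return dates


for execution_date in monthly_schedule(date(2025, 1, 31), 4):
    print(execution_date.isoformat())
```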

Payment Gateways and Wallets

Payment gateways and digital wallets provide secure and fast payment processing both domestically and internationally. They are important not only for banks but also for fintech companies and online retailers. Supporting multiple payment methods and ensuring high transaction speed directly affect user convenience and trust.
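Payment gateways commonly use idempotency keys to keep processing both fast and safe. The client attaches a unique key to each payment request, so that a network retry does not charge the customer twice. The in-memory sketch below is hypothetical, including the class and field names. A real gateway would persist keys with an expiry and call an actual payment network where the comment indicates.

```python
import uuid
from dataclasses import dataclass


@dataclass(frozen=True)
class PaymentResult:
    payment_id: str
    amount_cents: int   # integer minor units avoid floating-point rounding
    currency: str
    status: str


class PaymentGateway:
    """Hypothetical gateway front end that de-duplicates retried requests."""

    def __init__(self) -> None:
        self._results: dict[str, PaymentResult] = {}

    def charge(self, idempotency_key: str, amount_cents: int, currency: str) -> PaymentResult:
        if amount_cents <= 0:
            raise ValueError("amount must be positive")
        previous = self._results.get(idempotency_key)
        if previous is not None:
            # A retry must describe the same payment; otherwise the key is being misused.
            if (previous.amount_cents, previous.currency) != (amount_cents, currency):
                raise ValueError("idempotency key reused with different parameters")
            return previous
        # In a real system, the card network or bank would be called here.
        result = PaymentResult(str(uuid.uuid4()), amount_cents, currency, "captured")
        self._results[idempotency_key] = result
        return result


gateway = PaymentGateway()
first = gateway.charge("order-42", 1999, "EUR")
retry = gateway.charge("order-42", 1999, "EUR")  # e.g. the client timed out and resent
print(first.payment_id == retry.payment_id)
```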

Lending and Credit Platforms

Lending and credit platforms help banks and microfinance organizations automate the lending process, from credit history checks to interest rate calculations and digital contract signing.
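Interest rate calculations on such platforms often start from the standard annuity formula. A loan of principal P at monthly rate r over n months is repaid in equal installments M = P·r·(1 + r)^n / ((1 + r)^n − 1). The sketch below implements this formula with `Decimal`, and the function name is illustrative. Real lending products add fees, day-count conventions, and regulatory APR rules, which are out of scope here.

```python
from decimal import Decimal, ROUND_HALF_UP


def monthly_payment(principal: Decimal, annual_rate: Decimal, months: int) -> Decimal:
    """Fixed (annuity) monthly installment for an amortizing loan.

    Uses M = P * r * (1 + r)**n / ((1 + r)**n - 1), where r is the monthly rate.
    """
    if months <= 0:
        raise ValueError("months must be positive")
    if annual_rate == 0:
        payment = principal / months  # interest-free: split the principal evenly
    else:
        r = annual_rate / 12
        growth = (1 + r) ** months
        payment = principal * r * growth / (growth - 1)
    return payment.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


print(monthly_payment(Decimal("10000"), Decimal("0.12"), 12))
```

For a loan of 10,000 at 12% a year over 12 months, this gives a monthly installment of 888.49.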

Risk Management and Fraud Detection Systems

As digital transactions grow, so do cyber threats. Risk management and fraud detection systems enable banks to identify suspicious transactions in real time, prevent fraud, and meet security and compliance requirements. Using machine learning and big data processing, such systems can recognize risks early and act faster than attackers.
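Production fraud detection usually combines many signals and machine learning models. The sketch below is a deliberately simple stand-in that shows the core idea: flag transactions that deviate from a customer’s normal behavior. It applies a z-score rule to payment amounts and is not machine learning. The function name and the threshold of three standard deviations are assumptions.

```python
from statistics import mean, stdev


def is_suspicious(history: list[float], amount: float, threshold: float = 3.0) -> bool:
    """Flag `amount` if it is more than `threshold` standard deviations above
    the customer's historical mean (a basic z-score rule).
    """
    if len(history) < 2:
        return False  # too little history to estimate a typical spread
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation
    if sigma == 0:
        return amount > mu  # every past payment was identical
    return (amount - mu) / sigma > threshold


past_payments = [20.0, 25.0, 30.0, 22.0, 28.0, 24.0]
print(is_suspicious(past_payments, 27.0))   # a typical amount
print(is_suspicious(past_payments, 500.0))  # far outside the usual range
```

Real systems also weigh merchant, location, device, and transaction velocity, and they tune thresholds to balance missed fraud against false alarms.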

Modern Banking Software: Key Features

Today’s banking systems go far beyond standard online banking. Modern banking software brings together innovation, personalization, and flexibility to meet user expectations and market demands. Below are the key features that define the industry leaders.

Innovation and Technological Development

The banking sector is actively adopting advanced technologies, from artificial intelligence and machine learning to cloud solutions and automation. These tools help analyze large amounts of data in real time, detect anomalies, prevent fraud, and optimize internal processes.

Innovation enables banks to adapt to market changes more quickly, offer new services, and improve service quality without a complete infrastructure overhaul.

Personalization and Enhanced Customer Experience

Customers increasingly expect more than standard banking operations: they want convenient, personalized, and proactive interactions. Modern banking software makes it possible to analyze user behavior and offer relevant products and services, from personalized loan offers to tailored notifications and financial guidance.
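A basic form of the tailored notifications mentioned above is a budget alert derived from the customer’s own transactions. The sketch below groups a month of transactions by category. It then produces a message for each category where spending exceeds a budget. The categories, budgets, function name, and message wording are all hypothetical.

```python
from collections import defaultdict
from decimal import Decimal


def spending_alerts(transactions: list[tuple[str, Decimal]],
                    budgets: dict[str, Decimal]) -> list[str]:
    """Build tailored notifications for categories where spending exceeds the user's budget."""
    totals = defaultdict(Decimal)  # category -> total spent, starting at Decimal("0")
    for category, amount in transactions:
        totals[category] += amount
    alerts = []
    for category, limit in sorted(budgets.items()):
        spent = totals.get(category, Decimal("0"))
        if spent > limit:
            alerts.append(
                f"You have spent {spent:.2f} on {category} this month, "
                f"{spent - limit:.2f} over your {limit:.2f} budget."
            )
    return alerts


month = [("groceries", Decimal("120.00")), ("dining", Decimal("80.00")),
         ("dining", Decimal("45.50"))]
for message in spending_alerts(month, {"dining": Decimal("100.00"),
                                       "groceries": Decimal("200.00")}):
    print(message)
```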

Well-designed personalization has a direct impact on the customer experience, increasing satisfaction and strengthening customer loyalty.

Integration with Open Banking and APIs

Open APIs and open banking principles are becoming the standard for banking solutions. They allow financial institutions to securely exchange data with fintech companies, third-party services, and other banks.
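Open banking rules such as PSD2 require third-party providers to act on the customer’s explicit consent. A common way to enforce this at the API layer is to check each call against a stored consent record. The sketch below models that check. The `Consent` type, the scope strings, and the 90-day validity used in the example are hypothetical assumptions, not fields defined by PSD2 or by any particular API standard.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class Consent:
    """Hypothetical record of what a customer allowed a third-party provider to access."""
    provider_id: str
    account_id: str
    scopes: frozenset[str]  # illustrative scope names, e.g. {"balances:read"}
    expires_at: datetime


def is_authorized(consent: Consent, provider_id: str, account_id: str, scope: str,
                  now: Optional[datetime] = None) -> bool:
    """Allow an API call only if it matches the consent's provider, account, scope, and validity."""
    now = now or datetime.now(timezone.utc)
    return (
        consent.provider_id == provider_id
        and consent.account_id == account_id
        and scope in consent.scopes
        and now < consent.expires_at
    )


granted = datetime(2025, 1, 1, tzinfo=timezone.utc)
consent = Consent("tpp-123", "acc-42", frozenset({"balances:read"}), granted + timedelta(days=90))
day_two = granted + timedelta(days=1)
print(is_authorized(consent, "tpp-123", "acc-42", "balances:read", now=day_two))
print(is_authorized(consent, "tpp-123", "acc-42", "payments:write", now=day_two))
```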

This kind of integration opens the door to new business models, simplifies the launch of additional services, and improves the customer experience by offering a broader range of features.

Support for Mobile Apps and Banking Applications

Mobile channels are one of the key elements of modern banking. Mobile apps and banking applications give customers 24/7 access to services, make interacting with the bank as convenient as possible, and enable features that aren’t available offline.

Custom Banking Software vs Off-the-Shelf Solutions

The choice between custom development and ready-made solutions depends on the company’s business strategy, scale, and long-term goals. To make this decision easier, let’s look at two common scenarios.

Scenario 1: A Large Bank with Unique Requirements

Large financial institutions often work with a wide range of products, a large customer base, and complex internal processes. For them, it’s crucial to ensure high system performance, compliance with industry security standards, and the flexibility to scale further.

In such cases, custom banking software development is the optimal choice. Tailor-made solutions allow banks to adapt functionality precisely to their business logic and to integrate new services without limitations. They also let banks maintain a high level of security and compliance and continuously evolve the system alongside the organization. This is a long-term investment that provides stability and a strong competitive advantage.

Scenario 2: A Fintech Startup or a Small Bank

Small companies and startups in the banking and financial sector often aim to enter the market quickly, test their ideas, and keep costs under control. For these needs, a ready-made banking software solution is usually the smarter approach.

This approach offers rapid implementation, a simple cost structure, and the freedom to focus on product design and on improving the customer experience. Off-the-shelf platforms fit well for basic tasks. They can also be extended or customized into your own solution as your company grows and requirements change.

How to Choose the Right Option

If your priority is a product that is unique, adaptable, and under your own control, consider investing the time, money, and effort in developing custom software.

On the other hand, if getting to market quickly and at minimal cost is the greater priority, starting with an off-the-shelf or ready-made solution is the best step.

Banking Software Development Process: Comprehensive Guide

Developing banking software is a multi-stage, structured process in which every phase affects the final quality, security, and stability of the product. Careful planning, a clear sequence of actions, and attention to detail make it possible to build solutions that meet modern market demands and regulatory requirements.

Business Analysis, Goal Definition, and Technology Stack Selection

Every banking software project begins with analyzing business requirements and setting clear objectives. At this stage, the development team defines the product’s core tasks, critical features, user expectations, and regulatory constraints. This forms the foundation for all subsequent development work.

At the same time, the project’s technology stack is chosen. The choice of programming languages, frameworks, databases, and infrastructure tools directly affects performance, security, and scalability. A well-chosen technology stack ensures stable system operation and simplifies further development.

UI/UX and Customer Experience

Interface and user experience play a crucial role in banking, where the convenience and reliability of digital services directly influence customer trust.

The design of digital services should combine visual simplicity, intuitive interaction, and strict security standards. Customer experience has become a competitive advantage, making this stage of development just as important as the technical architecture.

Software Development Process: Agile, Waterfall, Hybrid

The development methodology you choose will shape the entire process. The Waterfall model (the traditional method) can be effective when requirements are fixed and work must be completed within strict deadlines.

Agile provides flexibility, allowing teams to iterate and refine details as they work. Many organizations find this especially helpful when creating new digital services.

Many organizations adopt a hybrid approach, which provides a degree of predictability while still leaving room to adapt as required. This suits products such as banking solutions, where strict security and regulatory requirements apply but the product still needs to be developed quickly.

Testing and QA of Banking Solutions

The quality and security of banking solutions can’t be ensured without a rigorous approach to testing. The product is tested at every stage of development to detect errors in both business logic and architecture. Special attention is paid to load testing, security audits, and test automation, which help ensure stable system performance under high transaction volumes.

Early and continuous involvement of QA teams helps avoid costly fixes at later stages and ensures the product’s reliability at launch.
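The `unittest` sketch below is an example of automated testing at the business-logic level. It checks two properties of a hypothetical transfer rule. First, random sequences of transfers never change the total amount of money. Second, overdrafts are rejected without side effects. The `transfer` function is a stand-in written only for this illustration. To run the checks, use `python -m unittest` followed by the module name.

```python
import random
import unittest
from decimal import Decimal


def transfer(balances: dict[str, Decimal], source: str, target: str, amount: Decimal) -> None:
    """Hypothetical business rule under test: move money without allowing overdrafts."""
    if amount <= 0 or balances[source] < amount:
        raise ValueError("invalid transfer")
    balances[source] -= amount
    balances[target] += amount


class TransferInvariants(unittest.TestCase):
    def test_total_balance_is_conserved(self) -> None:
        rng = random.Random(42)  # fixed seed keeps the test reproducible
        balances = {name: Decimal("1000.00") for name in ("a", "b", "c")}
        total_before = sum(balances.values())
        for _ in range(1_000):
            source, target = rng.sample(sorted(balances), 2)
            amount = Decimal(rng.randint(1, 50_000)) / 100  # 0.01 to 500.00
            try:
                transfer(balances, source, target, amount)
            except ValueError:
                pass  # rejected transfers must leave balances untouched
        self.assertEqual(sum(balances.values()), total_before)
        self.assertTrue(all(b >= 0 for b in balances.values()))

    def test_overdraft_is_rejected(self) -> None:
        balances = {"a": Decimal("10.00"), "b": Decimal("0.00")}
        with self.assertRaises(ValueError):
            transfer(balances, "a", "b", Decimal("10.01"))
        self.assertEqual(balances["a"], Decimal("10.00"))
```

Invariant-style tests like these complement example-based tests, because they exercise many input combinations that nobody would write out by hand.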

Deployment and Support

Once development and testing are complete, the product moves into the deployment phase. For banking systems, this requires special attention to fault tolerance, data backups, security, and strict release procedures. Any mistake at this stage is likely to have consequences, so deployment is carefully planned.

An equally important part of this stage is documentation. In the banking industry, detailed documentation isn’t just a formality; it’s a critical requirement. Banks expect every function, integration, and workflow to be clearly described and supported by tests. In some cases, creating and validating documentation may even take longer than the development itself.
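In Python, one way to keep documentation “supported by tests” is `doctest`. Examples written in a docstring are executed, and their results are compared with the documented output. The function below is hypothetical. The Actual/365 day count and half-even rounding are illustrative assumptions rather than any specific bank’s rules.

```python
import doctest
from decimal import Decimal, ROUND_HALF_EVEN


def accrued_interest(balance: Decimal, annual_rate: Decimal, days: int) -> Decimal:
    """Return simple interest accrued over `days`, using an Actual/365 day count.

    >>> accrued_interest(Decimal("1000.00"), Decimal("0.05"), 365)
    Decimal('50.00')
    >>> accrued_interest(Decimal("1000.00"), Decimal("0.05"), 30)
    Decimal('4.11')
    >>> accrued_interest(Decimal("1000.00"), Decimal("-0.01"), 30)
    Traceback (most recent call last):
        ...
    ValueError: rate must be non-negative
    """
    if annual_rate < 0:
        raise ValueError("rate must be non-negative")
    interest = balance * annual_rate * days / 365
    # Round to cents with banker's rounding (an assumed policy for this example)
    return interest.quantize(Decimal("0.01"), rounding=ROUND_HALF_EVEN)


if __name__ == "__main__":
    doctest.testmod()  # silent when every documented example still holds
```

Running the module directly, or running `python -m doctest -v` on the file, executes the three examples. The documentation then fails loudly if the code and its description drift apart.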

Next comes support, which is no less important than development itself: it ensures the smooth operation of digital services, increases their reliability, and strengthens user confidence.

Security and Compliance in the Banking and Financial Sector

Any data breach or regulatory violation can lead to serious fines, loss of customer trust, and restrictions on business activities. In this section, we’ll examine the reasoning behind the mandatory security standards that banks follow, as well as the key regulations that affect the industry.

Security Standards for Banks

Modern banks handle huge amounts of confidential information and process millions of transactions every day. To protect data and minimize risks, they apply strict security standards, including:

- Data encryption at all stages of processing and transmission

- Multi-factor authentication for both users and employees

- Real-time transaction monitoring systems

- Regular audits and vulnerability testing

- Infrastructure segmentation to minimize the impact of potential attacks
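Multi-factor authentication often relies on time-based one-time passwords (TOTP), the six-digit codes shown by authenticator apps. The sketch below shows how such codes are derived. It follows RFC 6238 with HMAC-SHA1 and uses only Python’s standard library. The function names are illustrative. A production system would use a vetted library and keep shared secrets in secure storage.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password per RFC 6238 (HMAC-SHA1 variant)."""
    if for_time is None:
        for_time = time.time()
    counter = int(for_time // step)  # number of time steps since the Unix epoch
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation (RFC 4226, section 5.3)
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, window: int = 1, step: int = 30) -> bool:
    """Accept codes from adjacent time steps to tolerate small clock drift."""
    now = time.time()
    return any(
        hmac.compare_digest(totp(secret, now + i * step, step), submitted)
        for i in range(-window, window + 1)
    )


shared_secret = b"12345678901234567890"  # the RFC 6238 test secret, not a real key
print(totp(shared_secret, 59, digits=8))
```

With the RFC’s test secret at Unix time 59, the 8-digit code is `94287082`, which is one of the specification’s published test vectors.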

Regulations (GDPR, PSD2, and Others)

The banking sector is subject to numerous regulations governing the storage, transmission, and use of data.

- GDPR (General Data Protection Regulation): a European regulation that sets strict rules for processing customers’ personal data

- PSD2 (Payment Services Directive 2): an EU directive that introduces open banking principles and requires banks to interact securely with third-party services through APIs

- PCI DSS (Payment Card Industry Data Security Standard): an international security standard that applies to all organizations that store, process, or transmit cardholder data. It defines strict technical and organizational requirements for payment security.

- National data protection and financial supervision laws, which may complement or strengthen international regulations
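Among other things, PCI DSS restricts how much of a card’s primary account number (PAN) may be shown when it is displayed. The helper below is a hypothetical sketch. It assumes the widely used convention of displaying at most the first six and last four digits. The exact limit depends on the PCI DSS version and on your business rules, so treat this as illustrative only.

```python
def mask_pan(pan: str) -> str:
    """Mask a card number for display, keeping only the first six and last four digits.

    The 6 + 4 convention is an assumption here; check the PCI DSS version and
    business rules that apply to your system.
    """
    digits = [ch for ch in pan if ch in "0123456789"]  # drop spaces and separators
    if len(digits) <= 10:
        raise ValueError("card number too short to mask")
    hidden = len(digits) - 10
    return "".join(digits[:6]) + "*" * hidden + "".join(digits[-4:])


print(mask_pan("4111 1111 1111 1111"))
```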

Compliance with these regulations not only reduces risks but also builds customer trust, positioning the bank as a reliable partner.

Impact on Custom Banking Software Development

For development teams and banks, adhering to security and compliance standards isn’t a separate step; it’s an integral part of custom banking software development from the very beginning.

The system architecture must follow security-by-design principles, and testing and update processes must be structured to meet current and future regulatory requirements.

Integrating security early in the development cycle helps reduce future costs and speeds up product certification. It also keeps the solution resilient in an ever-changing regulatory landscape.

Development Cost and Budget Factors

The cost of banking software development depends on many variables, from the scope of analysis to the number of integrations and the complexity of the architecture.

The table below summarizes the key stages and components of banking software development. It shows which factors shape the budget and how they affect the final cost. It will help you understand how project costs are formed and where you can plan resources most accurately.

| Stage / Factor | Description | Impact on Budget | Example of Impact |
|---|---|---|---|
| Analysis and Planning | Requirements gathering, business process analysis, documentation | Medium (depends on the depth of work) | Thorough analysis can reduce development costs later on |
| Design and UX | Designing interfaces for web and mobile apps | Medium | The more complex the user scenarios, the greater the effort required |
| Development and Testing | Core stage: programming, QA, DevOps | High | More features and custom modules mean higher overall costs |
| Integrations and Infrastructure | Connecting APIs, payment gateways, external services | Medium–High | Numerous integrations increase both timelines and expenses |
| Support and Updates | Post-release maintenance, improvements, compliance updates | Ongoing, long-term | Typically 15–25% of the initial budget annually |
| Development Team Size | Number and qualifications of specialists | Direct impact | A larger team accelerates development but increases costs |
| Project Complexity | Number of features, level of customization, overall scale | Direct impact | More complex solutions require more time and resources |
| Number of Integrations | Number of third-party services and systems to connect | Direct impact | Integrations with multiple banks or APIs raise the total cost |

Budget Factors
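The support row of the budget table lends itself to a quick estimate. If yearly maintenance costs a fixed share of the initial budget, the multi-year total is initial × (1 + rate × years). The sketch below applies the 15–25% range to a hypothetical initial budget of 500,000. It ignores inflation, scope changes, and one-off compliance projects, and the function name is illustrative.

```python
def total_cost_of_ownership(initial_budget: float, annual_support_rate: float, years: int) -> float:
    """Initial build cost plus yearly support, with support as a share of the initial budget."""
    if not 0 <= annual_support_rate <= 1:
        raise ValueError("rate must be a fraction between 0 and 1")
    if years < 0:
        raise ValueError("years must be non-negative")
    return initial_budget * (1 + annual_support_rate * years)


# Hypothetical example: a 500,000 build supported for three years
for rate in (0.15, 0.25):
    print(f"{rate:.0%} support: {total_cost_of_ownership(500_000, rate, 3):,.0f}")
```

Over three years, that range gives roughly 725,000 to 875,000. In other words, support can add 45–75% to the initial build cost.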

Choosing the Right Development Partner

Selecting a reliable software development partner is a key factor in the success of any banking project. Even the most powerful idea or the most detailed strategy can fail if the wrong team is tasked with implementing it. That is why choosing the right partner is just as important as creating the product itself.

Why It’s Important to Work with a Reliable Banking Software Development Company

Banking software development projects are complex, highly regulated, and demand precision. Partnering with a trusted banking software development company helps reduce the risk of failures, accelerates approval and certification stages, and ensures the long-term stability of the product. A strong partner not only handles the technical side but also helps shape a sustainable digital strategy.

How to Choose the Right Development Partner for Long-Term Collaboration

Long-term partnerships are especially important for banking projects, which rarely end with a single release. When choosing a development partner, take a close look at their reputation: read client reviews, case studies, and testimonials to understand how they actually work on real projects.

Take some time to review their portfolio to see whether it includes projects similar to yours. Evaluate the quality, scale, and industries they’ve served. Technical and organizational interviews with key team members will help you assess their experience, communication style, and mindset.

Make sure your approaches to project management and communication align from the start, since smooth collaboration depends on it. Finally, discuss their vision for the product after launch.

Criteria for Choosing a Software Development Partner

When looking for a partner to develop banking solutions, several key factors must be considered. To help you make your choice, the table below presents the main criteria for evaluating potential partners. It explains why each factor matters and what specific aspects to look for.

| Criteria | Why It Matters | What to Look For |
|---|---|---|
| Industry Experience | Shows understanding of regulations, security, and banking specifics. | Relevant projects, compliance knowledge, proven track record. |
| Technical Expertise | Ensures a solid, future-proof foundation. | Modern tech stack; open banking, API, and mobile development. |
| Communication | Keeps timelines realistic and builds trust. | Transparency on deadlines, pricing, and progress. |
| Security & Compliance | Fundamental for protecting data and meeting regulations. | Strong compliance practices, audit experience, security-first focus. |
| Scalability | Allows the partner to grow with your business. | Ability to scale teams, add modules, and support long-term growth. |

Criteria for Choosing a Software Development Partner

SCAND: Your Banking Software Development Partner

Choosing the right technology partner is key to successfully delivering banking projects. SCAND is a trusted software development company with over 25 years of experience building complex and secure IT solutions for the financial sector.

Expertise in Financial Software Development

SCAND has extensive experience in financial software development, including projects for banks, fintech companies, and payment systems.

Our team has successfully delivered mobile applications, internal management systems, analytics tools, and integrations with various payment gateways. We follow regulatory requirements, implement modern data protection mechanisms, and design system architectures with scalability and fault tolerance in mind.

Our Banking Software Development Services

We offer a full range of banking software development services, including:

- Custom banking software development, from analysis and design to support and scaling;

- Building banking applications and mobile banking solutions with intuitive UX and robust security;

- Integrations with external APIs, open banking systems, and payment platforms;

- Modernizing legacy systems and migrating to modern technology stacks;

- Creating internal tools to optimize processes and improve data management.

Why SCAND Is the Right Development Partner for Your Banking Solution

Working with SCAND means partnering with a team of experienced banking software developers who understand the specifics of the industry and know how to align technology with business goals.

We build partnerships based on transparency, clear timelines, and a flexible development approach, helping our clients create solutions that meet modern market demands and security standards.

Thanks to our experience, reliability, and comprehensive approach, SCAND is the right development partner for companies looking to take their banking services to the next level.

Conclusion

In 2025, banking software development is more than just implementing technology; it’s a strategic tool that defines the competitiveness of financial organizations. Modern solutions help banks improve efficiency, enhance the customer experience, ensure data security and regulatory compliance, and adapt quickly to a constantly evolving market.

To develop a banking solution successfully, it’s essential to take a comprehensive approach to every stage, from business analysis and technology selection to development, testing, integrations, and ongoing support.

Choosing the right development partner also plays a crucial role, as the partner must be capable of delivering the project at a high level and supporting its growth in the future.

SCAND has the experience, expertise, and team to become such a partner. We create reliable, scalable, and modern banking solutions, helping clients innovate successfully and achieve industry leadership.

Contact us to discuss your project and start developing a banking solution that meets today’s requirements and tomorrow’s challenges.
