Amazon Managed Workflows for Apache Airflow (Amazon MWAA) is a fully managed orchestration service that makes it easy to run data processing workflows at scale. Amazon MWAA takes care of operating and scaling Apache Airflow so you can focus on developing workflows. However, although Amazon MWAA provides high availability within an AWS Region through features like Multi-AZ deployment of Airflow components, recovering from a Regional outage requires a multi-Region deployment.

In Part 1 of this series, we highlighted challenges for Amazon MWAA disaster recovery and discussed best practices to improve resiliency. In particular, we discussed two key strategies: backup and restore and warm standby. In this post, we dive deep into the implementation of both strategies and provide a deployable solution to realize the architectures in your own AWS account.

The solution for this post is hosted on GitHub. The README in the repository offers tutorials as well as further workflow details for both the backup and restore and the warm standby strategies.

Backup and restore architecture

The backup and restore strategy involves periodically backing up Amazon MWAA metadata to Amazon Simple Storage Service (Amazon S3) buckets in the primary Region. The backups are replicated to an S3 bucket in the secondary Region. In case of a failure in the primary Region, a new Amazon MWAA environment is created in the secondary Region and hydrated with the backed-up metadata to restore the workflows.

The project uses the AWS Cloud Development Kit (AWS CDK) and is set up like a standard Python project. Refer to the detailed deployment steps in the README file to deploy it in your own accounts.

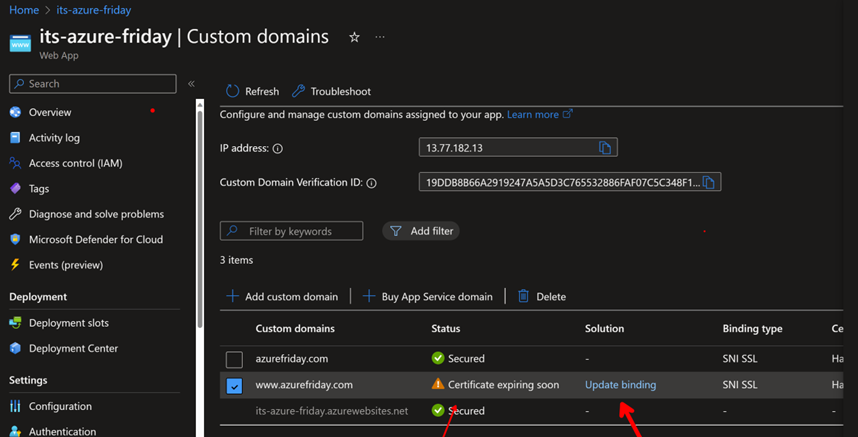

The preceding diagram (Figure 1) shows the architecture of the backup and restore strategy and its key components:

- Primary Amazon MWAA environment – The environment in the primary Region hosts the workflows

- Metadata backup bucket – The bucket in the primary Region stores periodic backups of Airflow metadata tables

- Replicated backup bucket – The bucket in the secondary Region syncs metadata backups through Amazon S3 cross-Region replication

- Secondary Amazon MWAA environment – This environment is created on demand during recovery in the secondary Region

- Backup workflow – This workflow periodically backs up Airflow metadata to the S3 buckets in the primary Region

- Recovery workflow – This workflow monitors the primary Amazon MWAA environment and initiates failover when needed in the secondary Region

Figure 1: The backup and restore architecture

Two workflows work in conjunction to achieve the backup and restore functionality in this architecture. Let's explore both workflows in detail, following the steps outlined in Figure 1.

Backup workflow

The backup workflow is responsible for periodically taking a backup of your Airflow metadata tables and storing them in the backup S3 bucket. The steps are as follows:

- [1.a] You can deploy the provided solution from your continuous integration and delivery (CI/CD) pipeline. The pipeline includes a DAG deployed to the DAGs S3 bucket, which performs the backup of your Airflow metadata. This is the bucket where you host all of your DAGs for your environment.

- [1.b] The solution enables cross-Region replication of the DAGs bucket. Any new changes to the primary Region bucket, including DAG files, plugins, and requirements.txt files, are replicated to the secondary Region DAGs bucket. However, for existing objects, a one-time replication needs to be performed using S3 Batch Replication.

- [1.c] The DAG deployed to take the metadata backup runs periodically. The metadata backup doesn't include some of the auto-generated tables, and the list of tables to be backed up is configurable. By default, the solution backs up the variable, connection, slot pool, log, job, DAG run, trigger, task instance, and task fail tables. The backup interval is also configurable and should be based on the Recovery Point Objective (RPO), which is the amount of data loss, measured in time, that your business can sustain during a failure.

- [1.d] Similar to the DAGs bucket, the backup bucket is also synced using cross-Region replication, through which the metadata backup becomes available in the secondary Region.
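Steps 1.b and 1.d both rely on S3 cross-Region replication. The following is a minimal sketch of what such a replication rule might look like when expressed as the dictionary accepted by the boto3 `put_bucket_replication` call. It is not the solution's actual AWS CDK code; the function name, rule ID, role ARN, and bucket names are hypothetical placeholders.

```python
def build_replication_config(role_arn: str, destination_bucket: str) -> dict:
    """Build a ReplicationConfiguration dict for S3 put_bucket_replication.

    Assumption: a single rule replicates every object in the source bucket
    to one destination bucket in the secondary Region.
    """
    return {
        "Role": role_arn,  # IAM role that S3 assumes to replicate objects
        "Rules": [
            {
                "ID": "replicate-to-secondary-region",  # hypothetical rule name
                "Priority": 1,
                "Status": "Enabled",
                "Filter": {"Prefix": ""},  # empty prefix matches all objects
                "DeleteMarkerReplication": {"Status": "Disabled"},
                "Destination": {
                    "Bucket": f"arn:aws:s3:::{destination_bucket}",
                },
            }
        ],
    }


# Example usage (requires boto3 and versioning enabled on both buckets):
#   s3 = boto3.client("s3", region_name="us-east-1")
#   s3.put_bucket_replication(
#       Bucket="my-primary-dags-bucket",
#       ReplicationConfiguration=build_replication_config(
#           "arn:aws:iam::123456789012:role/my-replication-role",
#           "my-secondary-dags-bucket",
#       ),
#   )
```

A replication rule like this applies only to objects written after it is enabled, which is why existing objects need the one-time S3 Batch Replication mentioned in Step 1.b.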

Recovery workflow

The recovery workflow runs periodically in the secondary Region, monitoring the primary Amazon MWAA environment. It has two functions:

- Store the environment configuration of the primary Amazon MWAA environment in the secondary backup bucket, which is used to recreate an identical Amazon MWAA environment in the secondary Region during failure

- Perform the failover when a failure is detected

The following are the steps for when the primary Amazon MWAA environment is healthy (see Figure 1):

- [2.a] The Amazon EventBridge scheduler starts the AWS Step Functions workflow on a provided schedule.

- [2.b] The workflow, using AWS Lambda, checks Amazon CloudWatch in the primary Region for the SchedulerHeartbeat metrics of the primary Amazon MWAA environment. The environment in the primary Region sends heartbeats to CloudWatch every 5 seconds by default. However, to avoid invoking the recovery workflow spuriously, we use a default aggregation period of 5 minutes to check the heartbeat metrics. Therefore, it can take up to 5 minutes to detect a primary environment failure.

- [2.c] Assuming that the heartbeat was detected in 2.b, the workflow makes the cross-Region GetEnvironment call to the primary Amazon MWAA environment.

- [2.d] The response from the GetEnvironment call is stored in the secondary backup S3 bucket to be used in case of a failure in subsequent iterations of the workflow. This makes sure the latest configuration of your primary environment is used to recreate a new environment in the secondary Region. The workflow completes successfully after storing the configuration.
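The healthy-path steps 2.b through 2.d can be sketched in Python as follows. This is an illustrative sketch rather than the solution's Lambda code: the function names are hypothetical, the clients are passed in so that boto3 clients (or test doubles) can be supplied, and the `AmazonMWAA` namespace with the `Function` and `Environment` dimensions is an assumption based on the published Amazon MWAA metrics that you should verify for your environment.

```python
import json
from datetime import datetime, timedelta, timezone


def is_scheduler_healthy(cloudwatch, env_name, period_minutes=5, now=None):
    """Return True if any scheduler heartbeat was recorded in the last period.

    `cloudwatch` is a boto3 CloudWatch client for the primary Region.
    """
    now = now or datetime.now(timezone.utc)
    response = cloudwatch.get_metric_statistics(
        Namespace="AmazonMWAA",  # assumed namespace for MWAA metrics
        MetricName="SchedulerHeartbeat",
        Dimensions=[  # assumed dimensions; check them in the CloudWatch console
            {"Name": "Function", "Value": "Scheduler"},
            {"Name": "Environment", "Value": env_name},
        ],
        StartTime=now - timedelta(minutes=period_minutes),
        EndTime=now,
        Period=period_minutes * 60,  # aggregate over the whole window
        Statistics=["Sum"],
    )
    return any(dp.get("Sum", 0) > 0 for dp in response.get("Datapoints", []))


def store_environment_config(mwaa, s3, env_name, bucket, key):
    """Save the primary environment's GetEnvironment response to S3 as JSON."""
    environment = mwaa.get_environment(Name=env_name)["Environment"]
    # default=str converts datetime fields such as CreatedAt into strings
    body = json.dumps(environment, default=str)
    s3.put_object(Bucket=bucket, Key=key, Body=body.encode("utf-8"))
    return body
```

With real clients, `cloudwatch` and `mwaa` would be created for the primary Region and `s3` for the secondary Region, for example `boto3.client("cloudwatch", region_name=primary_region)`.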

The following are the steps for the case when the primary environment is unhealthy (see Figure 1):

- [2.a] The EventBridge scheduler starts the Step Functions workflow on a provided schedule.

- [2.b] The workflow, using Lambda, checks CloudWatch in the primary Region for the scheduler heartbeat metrics and detects failure. The scheduler heartbeat check using the CloudWatch API is the recommended way to detect failure. However, you can implement a custom strategy for failure detection in the Lambda function, such as deploying a DAG that periodically sends custom metrics to CloudWatch or other data stores as heartbeats and using the function to check those metrics. With the current CloudWatch-based strategy, the unavailability of the CloudWatch API may spuriously invoke the recovery flow.

- [2.c] Skipped

- [2.d] The workflow reads the previously stored environment details from the backup S3 bucket.

- [2.e] The environment details read in the previous step are used to recreate an identical environment in the secondary Region using the CreateEnvironment API call. The API also needs other secondary Region-specific configurations, such as the VPC, subnets, and security groups, which are read from the user-supplied configuration file or environment variables during the solution deployment. The workflow waits in a polling loop until the environment becomes available and then invokes the DAG to restore metadata from the backup S3 bucket. This DAG is deployed to the DAGs S3 bucket as a part of the solution deployment.

- [2.f] The DAG for restoring metadata completes hydrating the newly created environment and notifies the Step Functions workflow of completion using the task token integration. The new environment now starts running the active workflows and the recovery completes successfully.
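To illustrate Step 2.e, the following sketch shows one way the stored GetEnvironment response might be turned into a CreateEnvironment request and how a polling loop could wait for the new environment. It is not the solution's implementation, which runs these steps inside Step Functions. The function names, the `secondary` settings dictionary, and the deliberately incomplete allow-list of copied fields are assumptions for illustration; settings such as logging configuration are omitted.

```python
import time

# Settings copied as-is from the stored GetEnvironment response.
# Illustrative assumption: this allow-list is intentionally incomplete.
COPIED_FIELDS = (
    "AirflowVersion", "EnvironmentClass", "MaxWorkers", "MinWorkers",
    "Schedulers", "DagS3Path", "PluginsS3Path", "RequirementsS3Path",
    "AirflowConfigurationOptions", "WebserverAccessMode",
)


def build_create_request(stored_env, secondary):
    """Combine stored primary settings with secondary Region-specific values."""
    # Read-only fields such as Arn, Status, and CreatedAt are dropped because
    # only allow-listed keys are copied.
    request = {k: stored_env[k] for k in COPIED_FIELDS if k in stored_env}
    request.update(
        Name=secondary["name"],
        SourceBucketArn=secondary["dags_bucket_arn"],
        ExecutionRoleArn=secondary["execution_role_arn"],
        NetworkConfiguration={
            "SubnetIds": secondary["subnet_ids"],
            "SecurityGroupIds": secondary["security_group_ids"],
        },
    )
    return request


def wait_until_available(mwaa, name, poll_seconds=60, max_attempts=60,
                         sleep=time.sleep):
    """Poll GetEnvironment until the environment is AVAILABLE.

    Returns True when available, False if attempts run out, and raises
    RuntimeError if creation fails.
    """
    for _ in range(max_attempts):
        status = mwaa.get_environment(Name=name)["Environment"]["Status"]
        if status == "AVAILABLE":
            return True
        if status == "CREATE_FAILED":
            raise RuntimeError(f"Environment {name} failed to create")
        sleep(poll_seconds)
    return False


# Example usage with a boto3 MWAA client in the secondary Region:
#   mwaa.create_environment(**build_create_request(stored_env, secondary))
#   wait_until_available(mwaa, secondary["name"])
```

Using an allow-list rather than removing known read-only fields keeps the request valid even if the GetEnvironment response gains fields that CreateEnvironment doesn't accept.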

Considerations

Consider the following when using the backup and restore strategy:

- Recovery Time Objective – From failure detection to workflows running in the secondary Region, failover can take over 30 minutes. This includes new environment creation, Airflow startup, and metadata restore.

- Cost – This strategy avoids the overhead of running a passive environment in the secondary Region. Costs are limited to periodic backup storage, cross-Region data transfer charges, and minimal compute for the recovery workflow.

- Data loss – The RPO depends on the backup frequency. There is a design trade-off to consider here. Although shorter intervals between backups can minimize potential data loss, backups that are too frequent can adversely affect the performance of the metadata database and consequently the primary Airflow environment. Also, the solution can't recover an actively running workflow midway. All active workflows are started fresh in the secondary Region based on the provided schedule.

- Ongoing management – The Amazon MWAA environment and dependencies are automatically kept in sync across Regions in this architecture. As specified in Step 1.b of the backup workflow, the DAGs S3 bucket needs a one-time replication of the existing resources for the solution to work.

Warm standby architecture

The warm standby strategy involves deploying identical Amazon MWAA environments in two Regions. Periodic metadata backups from the primary Region are used to rehydrate the standby environment in case of failover.

The project uses the AWS CDK and is set up like a standard Python project. Refer to the detailed deployment steps in the README file to deploy it in your own accounts.

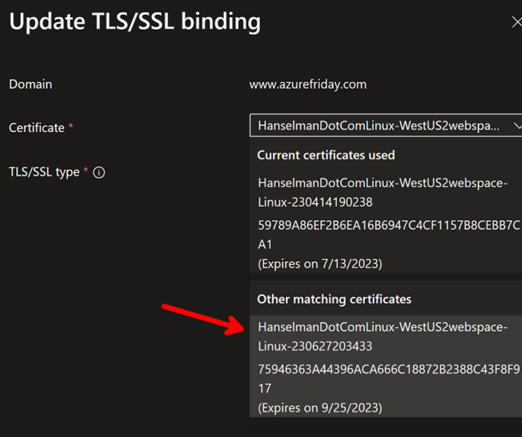

The preceding diagram (Figure 2) shows the architecture of the warm standby strategy and its key components:

- Primary Amazon MWAA environment – The environment in the primary Region hosts the workflows during normal operation

- Secondary Amazon MWAA environment – The environment in the secondary Region acts as a warm standby ready to take over at any time

- Metadata backup bucket – The bucket in the primary Region stores periodic backups of Airflow metadata tables

- Replicated backup bucket – The bucket in the secondary Region syncs metadata backups through Amazon S3 cross-Region replication

- Backup workflow – This workflow periodically backs up Airflow metadata to the S3 buckets in both Regions

- Recovery workflow – This workflow monitors the primary environment and initiates failover to the secondary environment when needed

Figure 2: The warm standby architecture

Similar to the backup and restore strategy, the backup workflow (Steps 1a–1d) periodically backs up critical Amazon MWAA metadata to S3 buckets in the primary Region, which are synced to the secondary Region.

The recovery workflow runs periodically in the secondary Region, monitoring the primary environment. On failure detection, it initiates the failover procedure. The steps are as follows (see Figure 2):

- [2.a] The EventBridge scheduler starts the Step Functions workflow on a provided schedule.

- [2.b] The workflow checks CloudWatch in the primary Region for the scheduler heartbeat metrics and detects failure. If the primary environment is healthy, the workflow completes without further actions.

- [2.c] The workflow invokes the DAG to restore metadata from the backup S3 bucket.

- [2.d] The DAG for restoring metadata completes hydrating the passive environment and notifies the Step Functions workflow of completion using the task token integration. The passive environment starts running the active workflows on the provided schedules.

Because the secondary environment is already warmed up, the failover is faster, with recovery times in minutes.
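The task token integration in Step 2.d can be sketched as follows. This is an illustrative example and not the solution's restore DAG: the function names and output payload are hypothetical, and how the task token reaches the DAG (for example, through the DAG run configuration) is an assumption. The `send_task_success` and `send_task_failure` calls are the boto3 Step Functions client operations used to resume a workflow that is waiting on a task token.

```python
import json


def notify_restore_complete(sfn, task_token, restored_tables):
    """Tell the waiting Step Functions workflow that the restore succeeded.

    `sfn` is a boto3 Step Functions client; `task_token` is the token the
    workflow handed over when it invoked the restore DAG.
    """
    # The payload shape below is a hypothetical example.
    output = json.dumps({"status": "RESTORED", "tables": list(restored_tables)})
    sfn.send_task_success(taskToken=task_token, output=output)
    return output


def notify_restore_failed(sfn, task_token, error):
    """Tell the waiting Step Functions workflow that the restore failed."""
    sfn.send_task_failure(
        taskToken=task_token,
        error="RestoreFailed",  # hypothetical error code
        cause=str(error),
    )


# Example usage inside the final task of a restore DAG:
#   sfn = boto3.client("stepfunctions")
#   notify_restore_complete(sfn, token, ["variable", "connection", "dag_run"])
```

Reporting failures as well as successes lets the workflow stop waiting immediately instead of relying on a timeout.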

Considerations

Consider the following when using the warm standby strategy:

- Recovery Time Objective – With a warm standby ready, the RTO can be as low as 5 minutes. This includes just the metadata restore and reenabling DAGs in the secondary Region.

- Cost – This strategy has the added cost of running similar environments in two Regions at all times. With auto scaling for workers, the warm instance can maintain a minimal footprint; however, the web server and scheduler components of Amazon MWAA remain active in the secondary environment at all times. The trade-off is a significantly lower RTO.

- Data loss – Similar to the backup and restore model, the RPO depends on the backup frequency. Faster backup cycles minimize potential data loss but can adversely affect the performance of the metadata database and consequently the primary Airflow environment.

- Ongoing management – This approach comes with some management overhead. Unlike the backup and restore strategy, any changes to the primary environment configurations need to be manually reapplied to the secondary environment to keep the two environments in sync. Automated synchronization of the secondary environment configurations is future work.

Shared considerations

Although the backup and restore and warm standby strategies differ in their implementation, they share some common considerations:

- Periodically test failover to validate recovery procedures, RTO, and RPO.

- Enable Amazon MWAA environment logging to help debug issues during failover.

- Use the AWS CDK or AWS CloudFormation to manage the infrastructure definition. For more details, see the following GitHub repo or Quick start tutorial for Amazon Managed Workflows for Apache Airflow, respectively.

- Automate deployments of environment configurations and disaster recovery workflows through CI/CD pipelines.

- Monitor key CloudWatch metrics like SchedulerHeartbeat to detect primary environment failures.

Conclusion

In this series, we discussed how backup and restore and warm standby strategies offer configurable data protection based on your RTO, RPO, and cost requirements. Both use periodic metadata replication and restoration to minimize the area of effect of Regional outages.

Which strategy resonates more with your use case? Feel free to try out our solution and share any feedback or questions in the comments section!

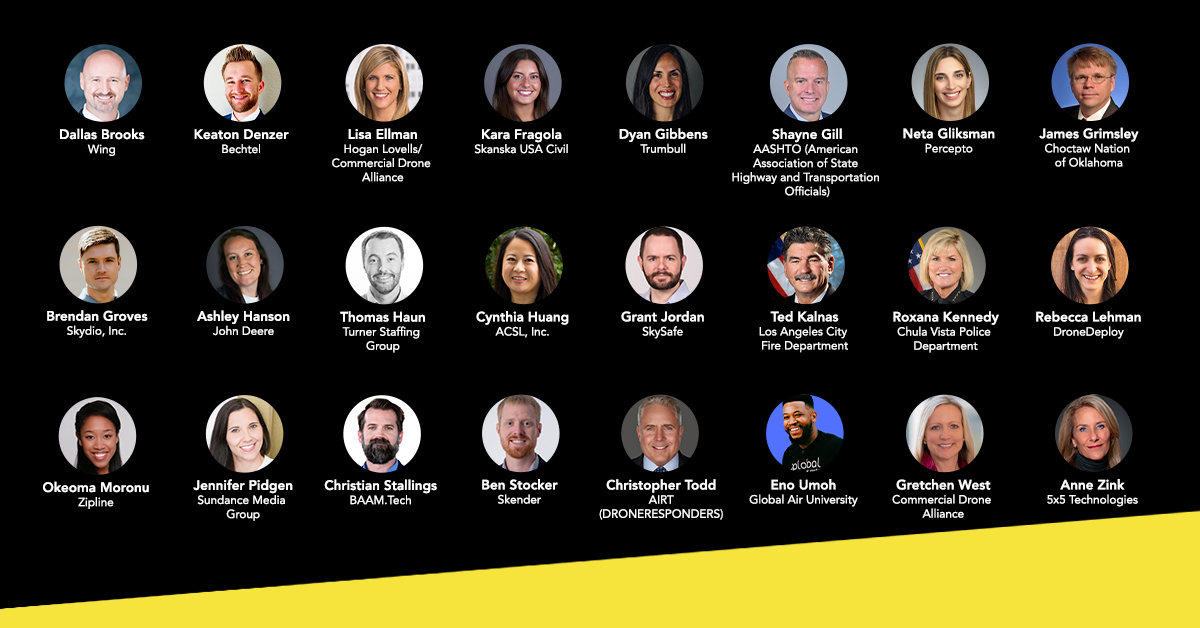

About the Authors

Chandan Rupakheti is a Senior Solutions Architect at AWS. His main focus at AWS lies in the intersection of Analytics, Serverless, and AdTech services. He is a passionate technical leader, researcher, and mentor with a knack for building innovative solutions in the cloud. Outside of his professional life, he loves spending time with his family and friends, as well as listening to and playing music.


Parnab Basak is a Senior Solutions Architect and a Serverless Specialist at AWS. He specializes in creating new cloud-native solutions using modern software development practices like serverless, DevOps, and analytics. Parnab works closely in the analytics and integration services space, helping customers adopt AWS services for their workflow orchestration needs.
