Key Takeaways

- YouTube Music Premium is competitively priced at just $9.99 per month for the basic plan, undercutting Spotify’s standard rate.

- YouTube Music Premium offers an extensive library of official songs, popular podcasts, and original content from your favorite artists and creators.

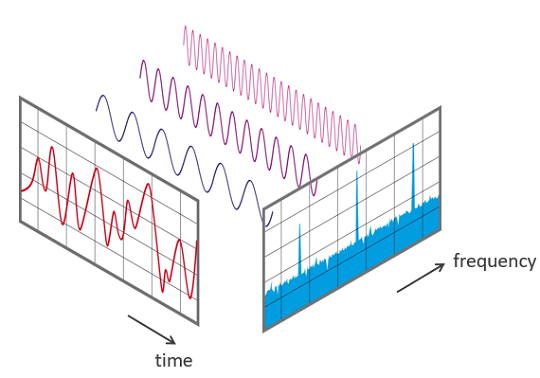

- YouTube Music Premium's recommendation algorithm can rival or even surpass Spotify's for some listeners, because it keeps learning and adapting to your preferences the more you use it.

The value a membership offers is a major factor in persuading people to join a streaming service, and it goes a long way toward explaining why the biggest services are as ubiquitous as they are. There are several alternatives, and you are probably already familiar with the best-known ones. However, another, perhaps lesser-known option is worth considering.

YouTube Music Premium is an often-underappreciated service that offers a compelling option for anyone who wants a high-quality listening experience. Loyalty to a particular service often comes from how well its algorithm has learned to surface music you enjoy. Still, if you're looking for a change of pace, YouTube Music Premium is worth exploring. Even a quick look shows that the service offers plenty of features, but you do need to spend some time training the algorithm to suit your tastes. Stick with it for even a month or so, and you may be surprised by how much it improves. Here are a few of the factors that convinced me to switch from Spotify to YouTube Music Premium; you may find them convincing as well.

It is cheaper than Spotify

At a surprisingly affordable price, YouTube Music Premium offers a compelling alternative to industry giant Spotify. A basic YouTube Music Premium subscription costs $9.99 per month, while the entry-level Spotify Premium plan starts at $12.99. The gap is only a few dollars a month, but it matters to many people: as streaming services keep raising their prices, even small savings can make a real difference to a budget.

You can also get YouTube Music Premium as part of a YouTube Premium subscription, but that raises the price to $13.99 a month. The main draw for many people is an ad-free experience while watching their favorite content on YouTube. YouTube Premium may not be worth it for casual viewers, but it is a nice perk for anyone who regularly watches YouTube and wants features like ad-free videos and offline playback. College students can subscribe for just $5.49 a month, and an annual subscription option is also available. In other words, there are ways to get the plan you want at a price that makes it worthwhile.

A little visual flair

While music remains the main attraction, YouTube Music Premium stands out from other streaming services by also giving you access to a vast library of videos. Given that YouTube is primarily a video platform, that makes sense, and if you're ever in the mood for more than audio, you can switch to watching the video instead. It's a small but meaningful reason to choose YouTube over other music streaming services.

Many songs on YouTube Music come paired with their official music videos, so there is plenty to explore. If you lose interest in a video, a single tap switches you back to audio only.

Tons and tons of music

Streaming services typically offer vast libraries of popular music, but YouTube's extensive catalog of user-generated content gives it a significant edge in overall availability.

This perk is especially valuable because it gives you access to live performances that the artists themselves may never have officially released or promoted. The audio quality is often lower, but it's still a pleasant experience.

Because the platform is open to creators of all skill levels, not everything you find in YouTube's vast library will be professionally produced.

On the other hand, that openness lets you discover lesser-known gems that may not be available on other streaming platforms because of copyright or licensing restrictions. Whatever their origin, you can listen to them on YouTube Music Premium. Songs uploaded without an artist's permission may be available now, but will they still be there in the future?

Build up your library

If you have old CDs lying around and want to bring their contents into your YouTube Music library, you can. Ripping the songs to your computer is the hardest part; once that's done, the remaining steps are simple:

- Go to

- Click on your profile icon.

- Which playlists do you want to curate?

This feature is especially valuable if you're an artist, since it lets you keep your own original music on the platform alongside everything else. Combined with the service's existing catalog, your uploads give you access to more music than other services can offer, and setting it up is surprisingly straightforward.

Your mileage may vary

Whether YouTube Music Premium's algorithm is better than Spotify's is a matter of debate. Some may argue that it is, but many factors go into a good algorithm. No service is likely to nail your tastes on the first attempt, so it's important to stick with one for a reasonable period; that gives it time to learn your musical preferences and deliver more tailored recommendations.

If you like YouTube's recommendations, there's a good chance you'll appreciate YouTube Music's as well, though it ultimately comes down to personal preference. A strong algorithm matters most to people who want to explore and discover new music. If you already know which artists you like, recommendation quality is less crucial than it is for listeners who are still exploring their tastes.

To keep your iPhone going strong for a longer period, consider using these strategies.