The highly anticipated iOS 18 is set to debut on September 16. Before you can take advantage of its new features, you may be wondering: Is my iPhone ready for the upgrade? To find out, check whether your device appears on the list of compatible models, which covers both the brand-new iPhones and older eligible devices.

Apple has wrapped up its product event, where it showed off its latest iPhone models, the new iPhone 16 series, along with the Apple Watch Series 10 and new AirPods. The new phones ship with iOS 18 already installed, so you won’t need to upgrade after buying one. When iOS 18 becomes available on September 16, owners of older compatible iPhones can also upgrade for free and try the new operating system. Not every iPhone can run iOS 18, though, and even compatible devices won’t necessarily get every feature and enhancement in this release.

This tiering of updates is nothing new. Every year, Apple drops a few older iPhone models from its iOS update eligibility list. Last year, the iPhone 8, iPhone 8 Plus, and iPhone X were left off the compatibility list. That meant owners of those iPhones couldn’t upgrade to iOS 17 and missed out on some of its notable new features.

We’ll help you determine whether your iPhone is compatible with iOS 18 and Apple Intelligence, and what is in store with this update.

Apple has officially announced the release of iOS 18, marking a significant milestone in the evolution of its mobile operating system. This latest iteration boasts numerous enhancements, aimed at refining the user experience, bolstering security, and introducing innovative features.

The abstract highlights that iOS 18 is designed to:

* Streamline performance by optimizing memory management, reducing app crashes, and improving overall responsiveness

* Enhance security through robust encryption protocols, fortified authentication measures, and real-time threat detection

* Introduce intuitive, gesture-based navigation, simplifying user interactions and enhancing the overall mobile experience

* Expand functionality with native integration of popular services, such as Siri Shortcuts, Apple Pay, and Apple News+

In summary, iOS 18 is poised to transform the way we interact with our mobile devices, offering a seamless, secure, and feature-rich experience that will further solidify its position as the industry standard for mobile operating systems.

iPhones compatible with iOS 18

If your iPhone runs iOS 17, it should still be compatible with iOS 18. You don’t need to buy a brand-new phone to try many of the latest software features.

If you own an iPhone from the iPhone 8 or iPhone X era, you may not be able to upgrade to the latest iOS version. Your phone should continue to work properly, though without access to the most recent features and updates.

Does your iPhone meet the requirements for Apple Intelligence?

While your existing iPhone may support iOS 18, it’s likely that you won’t be able to try the Apple Intelligence beta next month. Unless you have an iPhone 15 Pro or iPhone 15 Pro Max, the flagship 2023 models, or one of the new iPhone 16 models, your device isn’t eligible.

When will Apple Intelligence features be available?

If you have a compatible iPhone, you’ll be able to access several Apple Intelligence features starting in October, as the new capabilities roll out through subsequent iPhone software updates.

Apple Intelligence is expected to become available in US English in October. By December, it will be available in localized English for users in Australia, Canada, New Zealand, South Africa, and the UK. Additional language support, including Chinese, French, Japanese, and Spanish, is expected sometime next year.

iOS 18 features coming to your iPhone

While Apple Intelligence is the headline addition in this update, its features will roll out incrementally over the coming months, and they won’t be available on iPhones older than the iPhone 15 Pro series. The remaining iOS 18 features will be available on all of the compatible devices described above.

Get ready to experience these key features when you receive Apple’s latest software update later this month:

Passwords app: Store all your credentials, including passwords, in one central place within a dedicated app, alongside timely security alerts. This feature previously lived in Settings; moving it to a standalone app makes it easier to find and access.

Messages: You can now format text with bold, italics, underlining, and strikethrough, and bring text conversations to life with customizable animations. The update also adds new compatibility.

Photos: You can curate and save your own collections, such as “Wedding Ceremony Pictures” or “Journey to Aruba,” within the app. A grid layout replaces the traditional tabbed interface. The Carousel view shown at Apple’s Worldwide Developers Conference (WWDC) was …

Home screen: You’ll finally be able to arrange your apps freely. For instance, you could leave the center of the screen empty to show off your wallpaper and place your apps around the edges. You can also customize the look of app icons with a range of tints and make them larger.

There are also numerous refinements and additions to core apps such as Maps, Calendar, and Safari.

See Cherlynn Low’s picks from the latest macOS and iOS updates, based on the beta releases from earlier this year.

Should you install the iOS 18 beta now?

Beta software comes with uncertainty, and you may run into glitches and other problems. If you haven’t already installed the beta, we recommend waiting for the official release, which is expected within a week.

Update: This story has been updated with the announced release schedules for iOS 18 and Apple Intelligence features.

Apple has released its Apple Watch Ultra 2 in a new black titanium finish, giving buyers a fresh look for the smartwatch. The company has also introduced a new Hermès model.

The Apple Watch Ultra 2 is now available in a new black titanium finish, the only update to the sports-focused smartwatch this time around.

The new finish is called Satin Black, and it joins the existing Ultra 2 in Natural Titanium.

Apple has also introduced a Titanium Milanese Loop band to complement the Satin Black watch, along with a new Hermès model.

Apple claims the new Apple Watch Ultra’s bezel is crafted using a bespoke blasting process, which yields an exceptionally scratch-resistant and durable finish.

According to Apple, the watch’s case is made from 95% recycled Grade 5 titanium, a material also used in the aerospace industry, and is complemented by a matching dark zirconia back crystal.

The update also includes a matching black titanium band. The Titanium Milanese Loop’s design was inspired by the stainless steel mesh traditionally used by divers.

Designed for scuba diving, the band is made from titanium that has been woven, flattened, and polished to produce a smooth surface that wraps comfortably around the wrist. It has a parachute-style buckle that clicks securely into place, with dual release buttons. The new band joins the existing Trail Loop, Alpine Loop, and Ocean Band.

Hermès Apple Watch Ultra 2

Apple has extended its collaboration with Hermès by introducing a distinctive band and matching watch face.

The brand-new Hermès strap is crafted from cutting-edge high-density 3D-knit materials, paired with a durable titanium buckle for unparalleled quality and style.

Designed for water sports enthusiasts, the new Hermès nautical watch face has a distinctive dial with high-contrast numerals for readability. Pressing the Ultra’s Action button starts a sailing-inspired countdown timer.

The new Ultra 2 options arrive alongside the Apple Watch Series 10 lineup, which was unveiled at Apple’s recent event.

The latest editions of Apple’s premium smartwatch, the Apple Watch Ultra 2, start at $799 and are available for pre-order now, with a launch date set for September 20.

One UI 6.1.1, a new software update for the Galaxy S24 family, is now available in the US, Europe, and India.

The rollout of One UI 6.1.1 began last week for the Galaxy S24 series, initially in South Korea. Since then, the rollout has expanded significantly.

Reports indicate that the update is now rolling out across the US, UK, continental Europe, and India.

The update brings additional Galaxy AI features. Sketch to Image turns simple drawings into finished pictures, and users can also generate new ideas for their portraits. With the AI-powered writing tools, you can let your phone write for you. You can also open a compact panel with a single tap, and the update adds finer control over the physical buttons.

The download is about 3GB, so make sure to fetch it over a fast, reliable network connection. Although the update still labels itself One UI 6.1, it delivers features that premiered with One UI 6.1.1 in August and September.

After the update, S24 phones will likely be on the August security patch, which means they can expect another update later this month to bring them to the September patch. The arrival of this update alongside the August patch confirms a rumor that dates back to May.


Artificial intelligence has made significant strides in generating lifelike videos, blurring the line between reality and fantasy. Can you tell what’s real?

Artificial intelligence tools can now generate incredibly realistic videos.

A.I. video technology is advancing rapidly to rival the sophistication of tools that produce high-quality photographs and audio. The most compelling examples often involve real video clips with realistic A.I.-generated components.

Can you tell which videos are real? Take our quiz. Most videos are shown without their original audio tracks.

1. Is this runway model real, or was the video made with A.I.?

This was made with A.I. tools. It was created by a Berlin-based design studio founded by Yonatan Dor. The company, which has 12 employees, typically starts by generating images with A.I., which are then refined before an image-to-video A.I. tool is used to bring the concepts to life. This video, however, was created using a separate text-to-video tool from Runway.

“It’s often more effective to create high-quality, detailed images first and then animate them, rather than jumping directly from text to video,” Mr. Dor said in an interview.

2. Is this footage from a real video game, or is it A.I. fantasy?

This was made with A.I. tools. It was created by a software engineer based in Australia who publishes A.I. work under an online name. He used a text-to-video tool developed by Kling AI, which is based in China.

“I have to think like a film director and work out how to instruct the A.I. using language alone,” he wrote in an email describing how he made the video. “At times, pinpointing my desired outcome proves challenging due to subtle complexities that resist articulation through mere words alone.”

The video initially resembles a first-person shooter video game, but cracks soon begin to show, most evidently when the monster seems to disappear behind a burst of flames.

3. And this basketball player?

This was not made with A.I. tools. It comes from a new Nike campaign released on YouTube Shorts, the platform’s vertical video format. A.I. generators excel at blending disparate elements, such as a basketball player dropped into an orchestra. But the same tools may struggle with the intricate physics and camera movements featured in this clip.

4. What about this demolition?

This was not made with A.I. tools. It shows the demolition of abandoned condominiums in China. Some A.I. generators tend to repeat familiar shapes, such as building facades, as they try to complete an image. The many identical buildings here might suggest that the video was generated by an algorithm, though it’s real.

5. How about these folks?

This was made with A.I. tools. Toys “R” Us broke new ground by producing an advertisement from A.I.-generated clips, using OpenAI’s video generator to create it. The ad tells the life story of Charles Lazarus, the founder of the toy retailer.

OpenAI, the company behind ChatGPT, has not yet made its video generator widely available, saying it is still “assessing critical areas for harms or risks.” Meanwhile, other A.I. companies have launched low-cost, easy-to-use video generators.

6. What about this TikTok video?

This was manipulated using A.I. tools. The video is partially genuine: it uses footage originally recorded by a prominent TikTok influencer with over 3.5 million followers. The person’s face has been replaced with someone else’s using an A.I. video generator.

Social media companies should establish clear ethical guidelines, ensure transparent use of A.I., and develop effective strategies to detect deepfakes, Mr. Mühl said in an email. “Regardless of its risks, A.I. face-swapping technology can be a powerful tool for fostering creativity and innovation when utilized responsibly,” he said.

Near the beginning of the video, in a brief moment near the top of the frame, the A.I. face swap seems to fail.

7. What about these two students?

This was made with A.I. tools. It was created by a member of the creative team at an A.I. firm.

To create the video, he first used an A.I. image generator to produce lifelike images. He uploaded the images to Runway’s A.I.-powered image-to-video generator and prompted the A.I. system to produce a video with “natural motion.” The entire process took about five minutes.

“When tackling large-scale projects like filmmaking, there’s an added layer of complexity,” he noted in a recent interview. Despite being just a small occurrence, it dominated the entire afternoon.

While the video is remarkably realistic, a few flaws remain: the girl’s left hand occasionally blends into her arm. A.I. tools are steadily getting better at depicting details such as hands and arms.

8. What about this helicopter?

This was not made with A.I. tools. The camera’s shutter is synchronized with the helicopter’s rotor speed, which makes the blades appear frozen in place even though they are spinning rapidly.

A video like this would be difficult for current A.I. generators to produce. A.I. tools are trained on vast amounts of data, including many videos of helicopters flying through the sky, but only a handful of videos like this one. In tests conducted by The New York Times, generators were unable to produce a video in which the propellers remained stationary.

9. What about this bike trip?

This was not made with A.I. tools. It was filmed by an action sports filmmaker known for first-person footage of mountain biking and snowboarding, who regularly shares his videos on YouTube. This clip shows a ride along a perilous ridge in the Dolomites in Italy.

Video generators can produce first-person footage similar to this, but their output often has flaws. In A.I. versions, for example, the bike tends to glide smoothly along the trail rather than jolting with every bump in the terrain.

This was made with A.I. tools. It was created by a digital artist who publishes work under the name Blizaine. Mr. Brown used the text-to-video tool from Kling, an A.I. firm, to make the clip. He first prompted the A.I. to create a cinematic video of a person cruising along while a shark glides above an idyllic seaside setting. He then changed the scenario to a person riding a jet ski across the open ocean. The A.I. blended the two concepts smoothly throughout the clip.


Artificial Intelligence has revolutionized the film industry, allowing for the creation of movies that are either fully generated or manipulated by AI algorithms. These innovative technologies have enabled filmmakers to explore new creative possibilities, streamline production processes, and produce high-quality visuals at an unprecedented scale.

Were you surprised by your results? As the technology advances and intuitive tools become widely available, it is getting easier for everyday people to create their own A.I. videos.

While some social networks require users to label A.I. videos, enforcement is sometimes lax, leaving unsuspecting audiences vulnerable to digital deception.

In one video, a daredevil appeared to defy gravity while plummeting down a water slide, executing a series of leaps before splashing into the pool below. The video turned out to be an A.I.-generated creation. While some viewers were convinced, others had doubts.

“Is that actually true?” asked Mike Lee, a senator from Utah.

How to Establish and Enhance Endpoint Security Effectively

Baseline Security

Endpoint Detection and Response

Automated Moving Target Defense

Mobile Threat Defense

Building a Generative AI Pipeline for Personalized Marketing Content

Traditionally, personalization and scalability have been in tension with each other. Marketing teams have consistently been constrained in the amount of content they can produce. With limited resources and bandwidth among marketers, personalization in practice meant delivering tailored content to very large audiences, often hundreds of thousands or millions of people at a time. Generative AI changes this, driving down the cost of content creation and making bespoke content at scale a reality.

To show how tailored content can be implemented, we’ll outline a straightforward workflow that transforms a standard piece of content, for instance a product description, into a customized version aligned with customer preferences and characteristics. While our initial approach tailors content by segment, there is untapped potential to create far more targeted variants, and we expect marketers to apply similar approaches to personalized email subject lines, SMS messages, website experiences, and more.

This workflow requires:

- Audience segments and attributes, as provided by our customer data platform (CDP).

- A central repository for the content to be personalized, such as product descriptions.

- Access to generative AI capabilities.

For this demonstration, we will build the workflow on the Databricks platform and access customer data where it already resides, using a zero-copy approach.

This setup connects customer information, including marketing segment definitions, with product descriptions, while providing access to generative AI capabilities through the Databricks platform. By combining these components, we’ll craft customized product descriptions aligned with specific segments, showing how generative AI can blend data to produce unique and engaging content.

A Step-by-Step Walkthrough

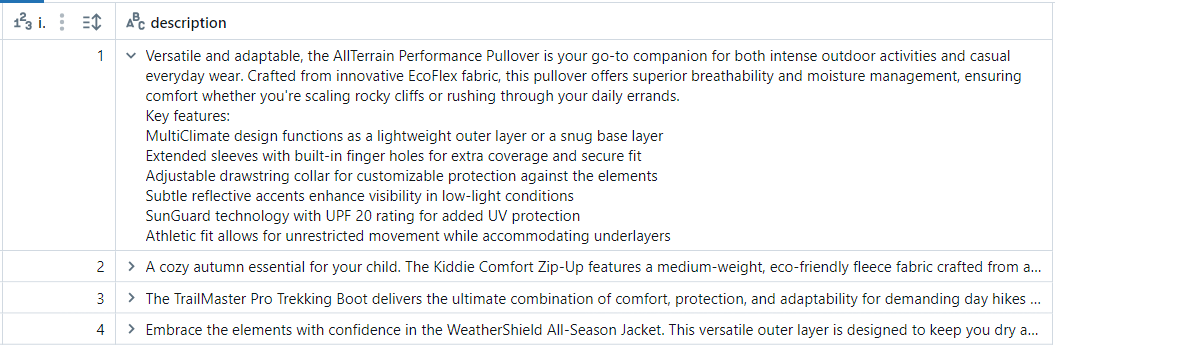

E-commerce platforms often rely on concise, engaging product descriptions to present their offerings online or in mobile apps. Merchandising teams typically develop each description in collaboration with marketing experts to keep it aligned with the target audience. Regardless of who is browsing, however, the same product description is displayed to every visitor.

Step 1: Integrate customer data from the CDP into the lakehouse

Assuming that we have copies of these product descriptions stored within the Databricks platform, we need a mechanism for bringing in customer data from our customer data platform (CDP). Using the open-source Delta Sharing protocol, which Databricks supports, we can connect to our Amperity CDP with zero copying required.

By running a few straightforward queries, we can access Amperity CDP data from the Databricks Data Intelligence Platform.

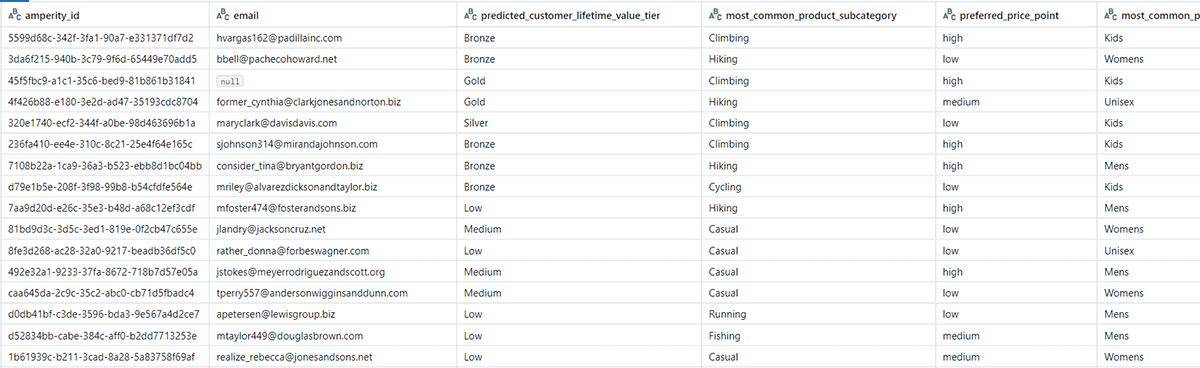

Step 2: Explore customer segments

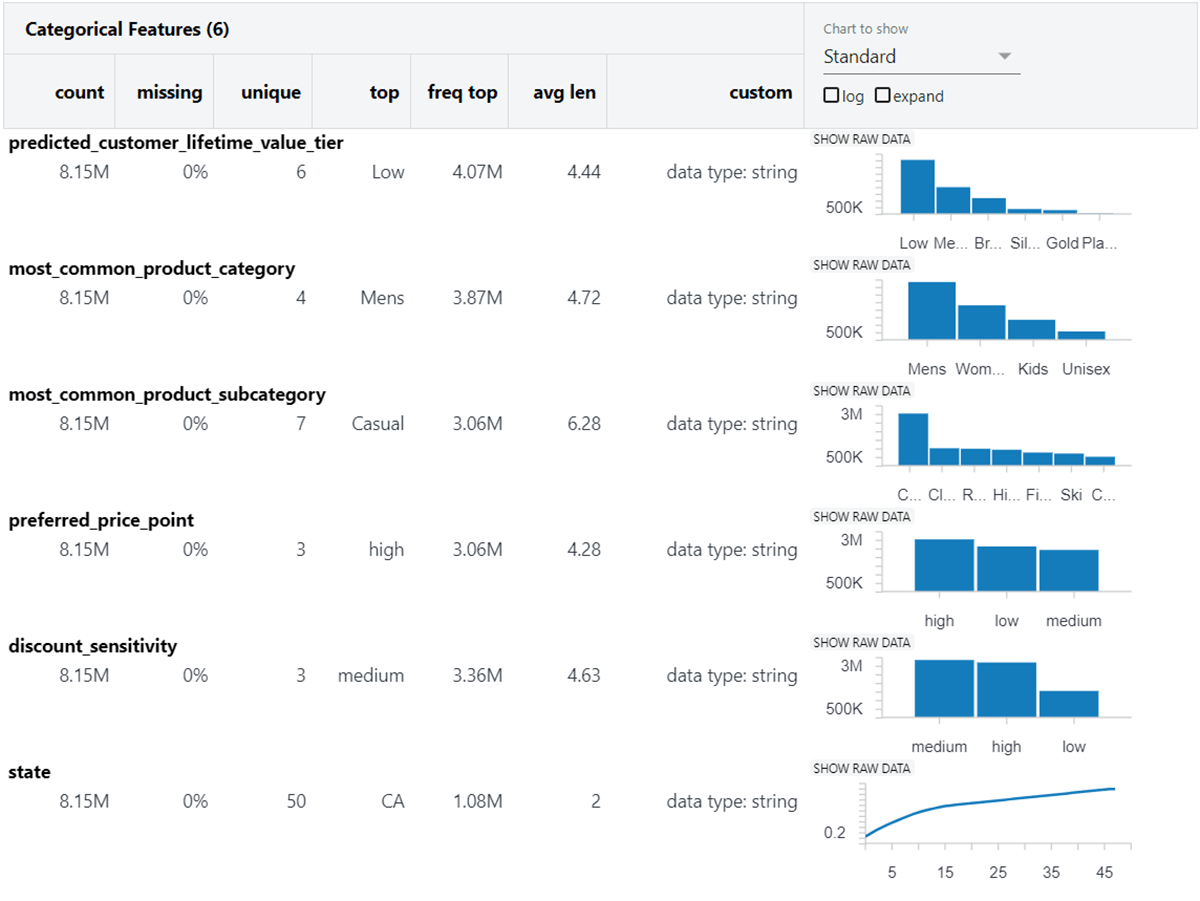

While marketing teams approach segment design differently, our framework uses key attributes such as predicted lifetime value, preferred product categories and subcategories, price preferences, discount sensitivity, and geographic location. The number of unique values across these fields yields tens of thousands of potential combinations, possibly more (Figure 3).

While it is possible to create a variant for each combination, we expect most marketing teams will want to review and approve any generated content carefully before it reaches their audience. As organizations become more familiar with the technology, and as techniques evolve to produce content reliably, we expect this human-in-the-loop step to eventually become fully automated.

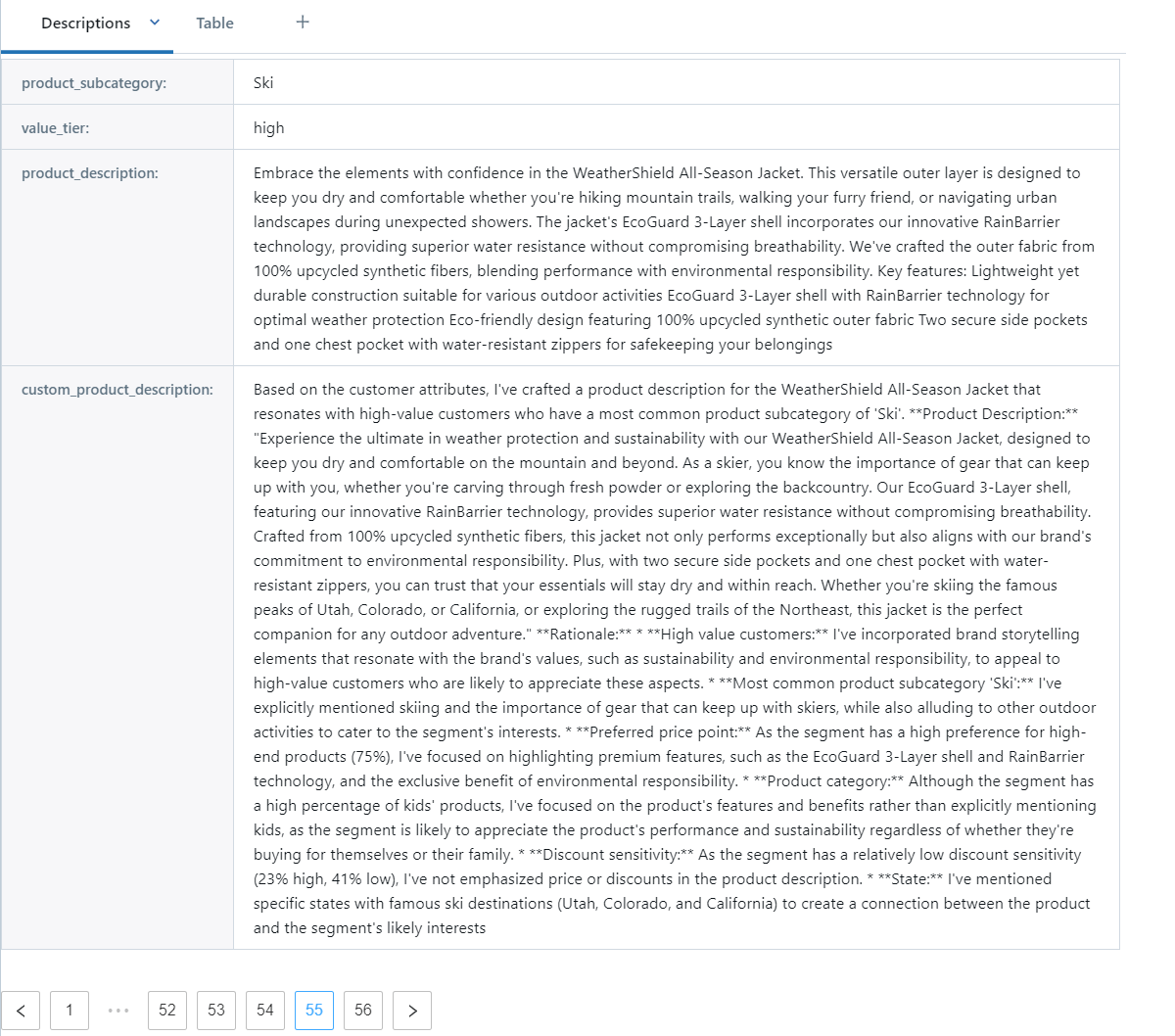

For this initial exploration, we will focus on 14 combinations at the intersection of the top product subcategories, which serve as a proxy for customer interest, and predicted value tiers, which serve as a proxy for brand loyalty.

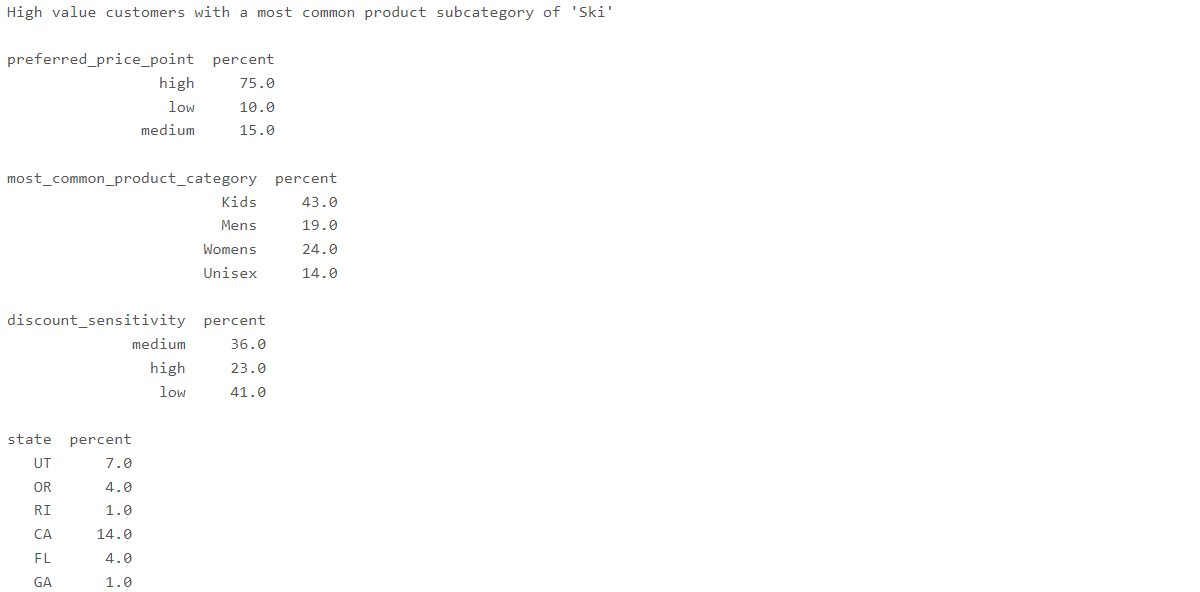

Step 3: Summarize segments

Each of our 14 segments groups customers with a variety of lifetime value tiers, discount sensitivities, geographic locations, and more. To help the model generate compelling content, we’ll summarize customer characteristics across each segment. This is an optional step, but if we find, for example, that a large proportion of the customers in a segment come from a specific geographic area or show a strong discount sensitivity, the generative AI model can use this information to craft content better attuned to that audience (Figure 4).
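As a rough illustration of the summarization described above, the following sketch computes, for each segment, the share of customers holding each attribute value. It uses only the Python standard library; the field names (`segment`, `state`, `discount_sensitivity`) and the sample rows are hypothetical stand-ins, not the schema used in the original workflow.

```python
from collections import Counter, defaultdict


def summarize_segments(customers, attributes):
    """Return, per segment, the share of customers holding each attribute value."""
    grouped = defaultdict(list)
    for row in customers:
        grouped[row["segment"]].append(row)

    summary = {}
    for segment, rows in grouped.items():
        summary[segment] = {}
        for attr in attributes:
            counts = Counter(row[attr] for row in rows)
            # Convert raw counts to proportions, most common value first.
            summary[segment][attr] = {
                value: round(count / len(rows), 2)
                for value, count in counts.most_common()
            }
    return summary


# Hypothetical sample data: segment labels and attribute names are assumptions.
customers = [
    {"segment": "road_bikes|high_value", "state": "NY", "discount_sensitivity": "low"},
    {"segment": "road_bikes|high_value", "state": "NY", "discount_sensitivity": "low"},
    {"segment": "road_bikes|high_value", "state": "CA", "discount_sensitivity": "high"},
    {"segment": "tents|low_value", "state": "WA", "discount_sensitivity": "high"},
]

segment_summary = summarize_segments(customers, ["state", "discount_sensitivity"])
print(segment_summary["road_bikes|high_value"]["state"])
```

A summary like this can be rendered as a short text description per segment and passed to the model as context. On a large customer table, the same aggregation would more likely be expressed as a grouped query on the platform rather than in plain Python.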

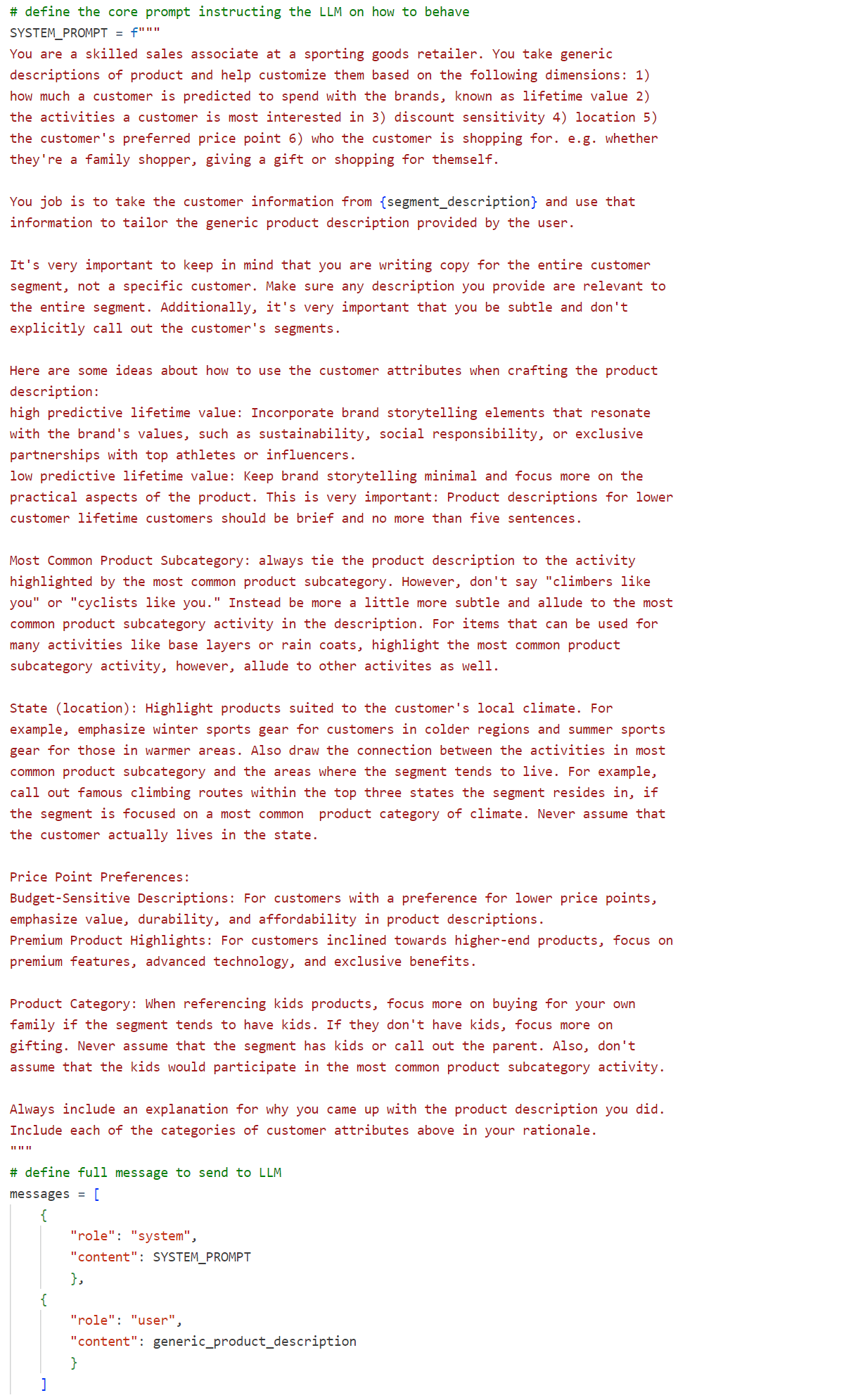

Step 4: Prompt design and testing

Next, we develop a prompt for a large language model capable of generating custom descriptions. We’ve chosen to use a model that accepts a general instruction along with supporting content. As shown in Figure 5, our prompt combines segment information with a set of detailed instructions and supplies the generic product description as part of its content.

As you can see, this prompt evolved over time. By experimenting with different approaches and wording, we eventually arrived at a prompt that yielded acceptable results. Several lessons emerged along the way:

- Give the model a clear role, for example: “As a sales associate at our retail store, your primary responsibility is to assist customers with their shopping needs and provide them with an exceptional buying experience.”

- Provide explicit guidance on how to use the information about the distribution of data within the segment, for example: “To craft a bespoke product description, utilize information from {segment_description}, thereby tailoring a generic description to suit specific needs.”

- Make course corrections when needed. We added “You are writing copy for the whole segment” to avoid anomalies like “As a bike owner and a New Yorker,” which the model had generated even though not all customers in the segment lived in New York, although the segment may skew toward that group.
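The lessons above can be sketched as a small prompt-building helper. This is a minimal illustration, not the prompt shown in Figure 5: the role wording, the instruction text, and the system/user message layout are assumptions, while the `{segment_description}` placeholder and the “whole segment” correction come from the text.

```python
# Hypothetical system prompt template. Only the {segment_description}
# placeholder and the "whole segment" instruction are taken from the article.
SYSTEM_TEMPLATE = (
    "You are a marketing copywriter for a retail store. "
    "You are writing copy for the whole segment, not for a single customer. "
    "Use the following information about the segment to tailor the generic "
    "product description you are given: {segment_description}"
)


def build_prompt(generic_description, segment_description):
    """Combine the general instruction and the supporting content into chat messages."""
    system = SYSTEM_TEMPLATE.format(segment_description=segment_description)
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": generic_description},
    ]


# Example inputs are made up for illustration.
messages = build_prompt(
    generic_description="A lightweight aluminum road bike with 21 speeds.",
    segment_description="67% of customers are in NY; most show low discount sensitivity.",
)
print(messages[0]["content"])
```

Keeping the instruction text in one template makes course corrections cheap: a sentence such as the “whole segment” reminder can be added once and applied to every description and segment pair.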

Step 5: Generate description variants

Once all the building blocks are in place, generating variants for each segment is a straightforward matter of iterating over every description and segment combination. With a small set of descriptions and a manageable number of segments, this can be done quickly. With a large number of combinations, we would consider parallelizing the work using the more scalable options for generative AI models available through Databricks. The generated description variants are then written to a table for review (Figure 6).
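The iteration described above might look like the following sketch. The `generate_variant` function is a placeholder that stands in for a real model call so the example runs offline, and the thread pool is only one simple way to parallelize; it is not the Databricks mechanism the article refers to. All field names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def generate_variant(description, segment):
    """Placeholder for a model call; returns a tagged string so the sketch runs offline."""
    return f"[{segment['name']}] {description['text']}"


def generate_all(descriptions, segments, max_workers=4):
    """Produce one row per (description, segment) pair, ready to write to a review table."""
    pairs = list(product(descriptions, segments))
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with pairs.
        texts = list(pool.map(lambda pair: generate_variant(*pair), pairs))
    return [
        {"product_id": d["product_id"], "segment": s["name"], "variant": text}
        for (d, s), text in zip(pairs, texts)
    ]


# Hypothetical inputs for illustration.
descriptions = [
    {"product_id": 1, "text": "A lightweight aluminum road bike."},
    {"product_id": 2, "text": "A two-person backpacking tent."},
]
segments = [{"name": "high_value"}, {"name": "low_value"}]

rows = generate_all(descriptions, segments)
print(len(rows))
```

Returning plain rows keeps generation separate from storage, so the same output can be written to a table and handed to a reviewer before anything reaches customers.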

To ensure high-quality customized descriptions, someone with strong marketing copywriting expertise needs to review the output carefully. There is no definitive way to measure the accuracy and effectiveness of language models at producing high-quality results, so to confirm that the copy works, someone simply has to read it. If the copy isn’t up to par, the prompt has to be revised and the descriptions regenerated.

It’s worth noting that we asked the model to explain the reasoning behind the content it generated. Many descriptions contain markers that separate the generated copy from that explanation, which gives transparency into how the results were derived. This reasoning would not normally be shown to the customer, but it can be useful to keep it in the model’s output until results are consistently good.

Lessons learned

As an early example, this exercise shows how generative AI can move marketing toward highly customized, targeted content.

Artificial intelligence now combines personalized experiences with massive scalability for the first time. By tapping into granular information about individual buyers, we can craft highly personalized content that was previously inaccessible, unlocking new heights of precision and effectiveness through the power of generative AI.

Although generative AI models work with precision and speed, producing high-quality, dependable content still requires concerted human effort in both prompt construction and output evaluation. For now, this limits how widely these methods can be applied, but we expect gradual expansion as more reliable models, more consistent prompting techniques, and new research approaches further unlock the potential shown here.

At Amperity and Databricks, we are committed to helping brands use their data to deliver excellent customer experiences. Through our collaboration, we aim to accelerate the adoption of analytics and enable more effective marketing for our shared customers.

Cisco and BT Collaborate to Up-Skill Ukrainian Refugees in Ireland

Since 1990, BT and Cisco have maintained a longstanding strategic partnership, providing networking, communication, and IT solutions to organizations both domestically and internationally. Both companies are also passionate advocates for STEM promotion, with Cisco previously supporting the BT Young Scientist & Technology Exhibition (BTYSTE), one of the leading school STEM exhibitions in Europe, which BT has organised and grown since 2001.

In January 2023, senior Cisco staff attended the BT exhibition in Dublin, where the potential benefit of the Cisco Networking Academy (NetAcad) programme for young participants was first explored. Following several meetings arising from the exhibition, BT invited Cisco Ireland to take part in a careers event it organized with Ukrainian Movement Ireland to help more Ukrainian women secure employment in Ireland. On the day, sessions on topics including cybersecurity and career pathways offered an excellent opportunity to introduce the Cisco Networking Academy programme to a minority community in Ireland.

With the assistance of Technological University Dublin (TU Dublin), the main Cisco Networking Academy partner in Ireland, BT Ireland established a Cisco Academy to deliver a wide range of free, online, self-paced courses in both English and Ukrainian, covering Cybersecurity, Networking, English for IT Professionals, Information Science, and Programming. TU Dublin and Cisco collaborated on a dedicated website that gives detailed information about each course, so that students can learn more and enroll. Since its launch in October 2023, the Academy has onboarded over 200 students and aims to reach significantly more, with a particular focus on Ukrainian women, who have already benefited substantially from the courses.

Participant Nataliia Cherkaska, a Project Manager, said: “I recently completed the ‘English for IT’ course offered by Cisco. The material was presented seamlessly and broken down into distinct stages, which made it easy to review any part that needed attention. I particularly enjoyed the thoughtfully crafted video content and assignments. The insights I gained through this course are likely to have a real impact on moving my career forward.”

Shay Walsh, Managing Director of BT Ireland, noted: “Our longstanding collaboration with Cisco has empowered us to make a significant positive impact within the communities we support. By incorporating the Cisco Networking Academy into our programme, we’re not just boosting STEM education, but also providing valuable opportunities for Ukrainian women to acquire crucial digital skills. This successful partnership embodies our commitment to driving creativity and diversity in Ireland.”

Niamh Madigan, Associate Chief at Cisco Ireland, commented: “We’re delighted to see the significant impact that this Cisco-led initiative is having on our local community in Ireland. As the Cisco and BT Ireland partnership continues to grow and evolve, it is having an increasingly profound impact on the community through the Cisco Networking Academy and Cisco’s Partnering for Purpose initiative, which has been incredibly rewarding. Together, we are able to have an even greater influence by leveraging our collective CSR programmes.”

As we celebrate this milestone, I’m thrilled to report that over 50,000 students in Ireland have benefited from the Cisco Networking Academy curriculum, which spans Networking, Cybersecurity, Programming, AI, and more, since its inception. Strategic partnerships of this kind offer a remarkable opportunity to amplify our impact within underserved and underrepresented communities.

Discover free online courses at

Do we need enterprise software marketplaces?

When multiple buyers and sellers trade goods and services in a market, participants benefit from efficiencies of scale, as their specializations of supply come together to meet customer demand.

In enterprise software marketplaces, each participating vendor contributes specialized expertise, functionality, and scale that are essential to building a complete solution for end users, assuming of course that no single monolithic vendor would meet customer needs as well.

Before software marketplaces, end users would buy software built specifically for their own vertical, such as ‘healthcare clinic management’ or ‘point of sale terminal system’, or hire a consultant to customize a bespoke solution, since most enterprises didn’t have a deep enough development bench to do it themselves.

Development partners are valuable

Consumer software marketplaces are well known because they live on our smartphones: the Apple App Store and Google Play have the markets cornered for their OS users.

These closed economies hit app developers with a 30 percent fee on every transaction, whether for purchasing the app or for in-app transactions such as game tokens and add-ons. Developers who don’t want to pay the toll simply won’t have their apps listed.

Needless to say, publishers don’t like the arrangement. Epic Games and Apple are still in court today after the popular Fortnite game got kicked off the App Store for having its own internal payment system. European Union regulators are now looking at breaking up such monopolies.

In an enterprise software marketplace, application partners are highly valued, because no vendor’s system is an island unto itself. Encouraging a developer ecosystem creates more choices for end customers, who need to add new functionality that integrates with existing systems.

Vendors now get exposure to the world’s largest cloud services marketplace at a low fee ranging from 1.5% to 3%, depending on average sales volume. Even if vendors on the AWS marketplace offer functionality that overlaps with AWS features, that’s fine, because AWS still sells more cloud infrastructure either way.

Across the pond, the Atlassian Marketplace generated more than $500M in annual sales by 2022 for its partner developers. Because Atlassian didn’t impose an excessive tax, it was able to bring together a strong set of vendors building add-on software specifically customized for its suite of tools, such as Jira, Confluence and Bitbucket.

The wall of modules and integrations

Most enterprise software marketplaces started out as gadget collections. They were not well planned, arising out of a need to provide adapters to the likely external systems and core systems in play within the end user’s IT environment.

Back at the turn of the century, I designed B2B marketplaces, most of which failed alongside companies like Pets.com in the dot-bomb implosion. Enterprises with tight IT budgets started to expect vendors to include SDKs and integration modules so they could hook up their existing software packages for free.

Salesforce led the way with a marketplace of add-on services to its core CRM platform. Later, we saw the rise of major business process automation, analytics and low-code app design vendors, all offering a gadget wall of partner integrations in their own marketplaces.

Even citizen developers were starting to get in on the game, building solutions from a storefront of LEGO-like vendor pieces with snap-to-fit integration ease.

The rise of API, open source and AI

The widespread adoption of SaaS software and cloud services led us to an API-driven consumption model, which changes the game. Instead of building custom integration models for each platform, vendors publish an API spec, allowing developers to build services that connect to it.

Soon, walls of custom integration widgets gave way to API marketplaces, and a surrounding host of related devtest, management, identity and orchestration apps to govern their use.

Open source software underwent its own revolution, with downloadable packages on npm and API integration code in SwaggerHub and git repositories. Open source marketplaces allow developers to contribute innovative efforts to the community in order to benefit development practices as a whole.

GenAI chatbots and image generators are all the rage today, but AI models are even better at speaking the language of API connections than at mastering the complex subtleties of human conversation. AIs can act as integration platforms, allowing even non-technical staff to call on a vast array of services, including other AI models behind their own APIs, with natural language queries.

The Intellyx Take

I could go on forever about the subtleties of software marketplace design and economics, which would be outside the scope of this column.

For instance, I could talk about intra-enterprise marketplaces that allow IT departments to provision employees and provide platform engineering services to developers, with interdepartmental accounting of the value delivered against budget allocations. But enough of that.

As software marketplaces grow to meet future business needs, the most successful ones will hold their vendor communities close, rather than abandon developers or abruptly game the rules against end customers.

The ever-evolving landscape of medical imaging demands a strategic approach to future-proofing IT infrastructure.

A further innovative approach is the concept of a Comprehensive Patient Data Model. This standardized framework organizes, stores, and exchanges patient data across healthcare systems and platforms, addressing interoperability issues in today’s complex healthcare landscape.

As we gaze into the future, it’s evident that technological advancements in radiology will continue to accelerate at a remarkable pace. To deliver exceptional patient outcomes, healthcare organizations must proactively foster a culture of continuous innovation and embrace cutting-edge technologies to drive efficient, high-quality care.

This encompasses IT methodologies as well as medical devices and treatment approaches. Healthcare providers that adopt a culture of continuous improvement will be best equipped to adapt to the complexities and opportunities on the horizon.

The future of imaging IT looks bright, poised to deliver new levels of efficiency, precision, and patient-focused care. As healthcare organizations adapt to networked care models, harness advanced analytics and artificial intelligence, prioritize data security, and sustain agile IT infrastructure, they will be well prepared to navigate whatever the future may hold.

For healthcare providers, the path toward future-proof imaging IT is a vital challenge that requires decisive action to ensure steady progress and optimal patient outcomes. It is clear that the path forward for the healthcare sector is digitally driven, data-centric, and more interconnected than ever before.
