This post is part of Rockset’s Making Sense of Real-Time Analytics on Streaming Data series. In part one, we presented an overview of real-time analytics on streaming data. This post examines the differences between real-time analytics databases and stream processing frameworks. Over the next few weeks, we will publish the next installment, which:

- Will explore methods for operationalizing streaming data, accompanied by several sample architectures.

This post assumes some familiarity with basic streaming concepts; if you need a refresher, start with part one. With that, let’s dive in.

Differing Paradigms

Stream processing systems and real-time analytics (RTA) databases are both rapidly gaining popularity. It is hard to compare them in terms of features, because either can be used for most of the same use cases. The real difference lies in how each approaches the problem.

This blog post clarifies the conceptual differences, provides an overview of popular tools, and offers a framework for choosing the right tools for specific technical requirements.

Stream Processing and RTA Databases: A Concise Overview

Streaming Platforms and Stream Processing Engines:

- Apache Kafka – distributed event streaming platform.

- Amazon Kinesis – managed service for collecting and processing real-time data streams.

- Google Cloud Pub/Sub – messaging service for event-driven architectures.

- Apache Flink – distributed stream processing engine.

- Apache Storm – open-source platform for distributed real-time computation.

RTA Databases:

- Apache Ignite – distributed in-memory database for real-time analytics.

- TimescaleDB – time-series database for IoT and sensor data.

- InfluxDB – open-source time-series database for metrics, events, and monitoring data.

- OpenTSDB – distributed, scalable time-series database.

- Apache Cassandra – distributed NoSQL database.

Stream processing systems let you join, filter, aggregate, and analyze streams of data in real time. In stream processing, “streams”, rather than the tables of a relational database, are the first-class citizens. Stream processing approximates a continuous query: each event that passes through the system is analyzed according to predefined criteria, and the results can be consumed by other applications. Stream processing systems are rarely used as persistent storage. They are a “process”, not a “store”, which brings us to…

Real-time analytics databases are frequently used for persistent storage (though there are exceptions), and they operate on a bounded context rather than an unbounded one. These databases can ingest streaming events, index the data instantly, and serve millisecond-latency analytical queries on that data. RTA databases overlap significantly with stream processing: both can join, filter, aggregate, and analyze large volumes of real-time data for applications such as anomaly detection and predictive modeling. The key differences are that RTA databases offer persistent storage, bounded queries, and indexing.

Do you need one, or both? Let’s get into the details.

Stream Processing…How Does It Work?

Stream processing tools process streaming data as it flows through a streaming data platform such as Kafka or an alternative. Processing happens incrementally, as the streaming data becomes available.

Stream processing systems typically use a directed acyclic graph (DAG), whose nodes are responsible for distinct operations such as aggregations, filters, and joins. The nodes are connected in a chain, passing data from one to the next. As data arrives at a node, it is processed and then passed on to the next node. The processing follows predefined rules, known as a topology. Nodes can run on separate servers connected over a network, which allows the system to scale out and process large volumes of data.

This is what we mean by a continuous query: data is continuously ingested, transformed, and made available. Once processing is complete, other applications or systems can subscribe to the resulting stream and use it for analytics or within an application or service. While some stream processing platforms support declarative languages such as SQL, they also support general-purpose languages such as Java, Scala, or Python, which suit sophisticated use cases like machine learning.
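To make the DAG idea concrete, here is a minimal single-process sketch, assuming a hypothetical three-node topology (filter, then map, then a per-key count) built from Python generators. The function names and event fields (`region`, `amount`) are illustrative only; a real framework would distribute these nodes across servers and manage the communication between them, which this sketch does not attempt.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, Iterator

Event = Dict[str, object]  # hypothetical event shape: field name -> value


def filter_node(events: Iterable[Event], predicate: Callable[[Event], bool]) -> Iterator[Event]:
    """Node: forward only the events that satisfy the predicate."""
    for event in events:
        if predicate(event):
            yield event


def map_node(events: Iterable[Event], fn: Callable[[Event], Event]) -> Iterator[Event]:
    """Node: transform each event on its own."""
    for event in events:
        yield fn(event)


def count_by_key_node(events: Iterable[Event], key: str) -> Iterator[Dict[str, int]]:
    """Node: keep a running count per key and emit the updated counts after each event."""
    counts: Dict[str, int] = defaultdict(int)
    for event in events:
        counts[str(event[key])] += 1
        yield dict(counts)  # a snapshot of the current result, pushed downstream


def topology(source: Iterable[Event]) -> Iterator[Dict[str, int]]:
    """Wire the nodes into a chain: source -> filter -> map -> aggregate."""
    valid = filter_node(source, lambda e: float(e["amount"]) > 0)
    normalized = map_node(valid, lambda e: {**e, "region": str(e["region"]).upper()})
    return count_by_key_node(normalized, "region")


if __name__ == "__main__":
    stream = [
        {"region": "us", "amount": 10},
        {"region": "eu", "amount": 0},  # dropped by the filter node
        {"region": "us", "amount": 5},
        {"region": "eu", "amount": 7},
    ]
    for result in topology(stream):
        print(result)
```

Because generators are lazy, each event travels through the whole chain before the next one is read, and the last node pushes an updated result after every event, which is the continuous-query behavior described above.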

Stateful Or Not?

Stream processing can be either stateless or stateful. Stateless stream processing is far simpler: a stateless process does not depend on the context of anything that came before it. Imagine an event containing purchase information. A stream processor that filters out purchases below $50 evaluates each event independently of all others, so the operation is stateless.
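The purchase filter can be sketched as a stateless operator. This assumes purchases arrive as dictionaries with a hypothetical `amount` field; the point is that the function keeps nothing between events.

```python
from typing import Dict, Iterable, Iterator

Purchase = Dict[str, float]  # hypothetical shape, e.g. {"amount": 72.5}


def drop_small_purchases(purchases: Iterable[Purchase], minimum: float = 50.0) -> Iterator[Purchase]:
    """Stateless filter: keep a purchase only if its own amount is at least `minimum`.

    No variables survive between iterations, so the decision for one event
    never depends on any event that came before it.
    """
    for purchase in purchases:
        if purchase["amount"] >= minimum:
            yield purchase
```

Because no state is carried between events, the operator can be split across partitions or fed events in any order and still keep exactly the same purchases.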

Stateful stream processing takes the history of the data into account. Each incoming item depends not only on its own content, but also on the content of previous items. State is required for running totals, as well as for more complex operations such as joining data from one stream to another.

For example, consider an application that processes a stream of sensor data and needs to compute the average temperature for each sensor over a specific time window. The stateful processing logic must maintain a running sum of the temperature readings for each sensor, along with a count of the readings processed for that sensor. Together, these two values give the average temperature for each sensor over the specified window.
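A minimal sketch of the sensor example, assuming hypothetical readings of `(sensor_id, timestamp, temperature)` and fixed, non-overlapping (tumbling) windows. The state is what the paragraph describes: a running sum and a count per sensor and window.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Tuple

WindowKey = Tuple[str, float]  # (sensor_id, window_start_seconds)


@dataclass
class Accumulator:
    """Per-sensor, per-window state: a running sum and a reading count."""
    total: float = 0.0
    count: int = 0


class WindowedAverage:
    """Stateful operator computing the average temperature per sensor per tumbling window."""

    def __init__(self, window_seconds: float) -> None:
        self.window_seconds = window_seconds
        self.state: Dict[WindowKey, Accumulator] = defaultdict(Accumulator)

    def process(self, sensor_id: str, timestamp: float, temperature: float) -> None:
        # Assign the reading to the fixed-size window that contains its timestamp.
        window_start = (timestamp // self.window_seconds) * self.window_seconds
        acc = self.state[(sensor_id, window_start)]
        acc.total += temperature
        acc.count += 1

    def averages(self) -> Dict[WindowKey, float]:
        # The average is derived from the state on demand; raw readings are never kept.
        return {key: acc.total / acc.count for key, acc in self.state.items()}
```

This sketch never evicts finished windows. Real stream processors typically use watermarks to decide when a window is complete, so its result can be emitted and its state discarded.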

These state designations relate to the “continuous query” concept introduced earlier. When you query a database, you are querying the current state of its contents. In stream processing, a continuous, stateful query requires maintaining state separately from the DAG, which is done by querying a state store, i.e., an embedded database within the framework. State stores can reside in memory, on disk, or in deep storage, and each option has its own latency-cost tradeoff.
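The idea of keeping state outside the DAG can be sketched with a small key-value interface. This is an illustration under assumptions, not any framework’s state-store API: `InMemoryStore` stands in for memory-resident state, and `SqliteStore` uses Python’s built-in `sqlite3` module as an embedded database that can live on disk. Deep storage is not modeled.

```python
import sqlite3
from typing import Dict, Iterable, Optional, Protocol


class StateStore(Protocol):
    """Minimal key-value interface a stateful node uses instead of holding state itself."""

    def get(self, key: str) -> Optional[int]: ...

    def put(self, key: str, value: int) -> None: ...


class InMemoryStore:
    """Lowest latency; state is lost if the process dies."""

    def __init__(self) -> None:
        self._data: Dict[str, int] = {}

    def get(self, key: str) -> Optional[int]:
        return self._data.get(key)

    def put(self, key: str, value: int) -> None:
        self._data[key] = value


class SqliteStore:
    """Embedded database; pass a file path instead of ":memory:" to keep state on disk."""

    def __init__(self, path: str = ":memory:") -> None:
        self._conn = sqlite3.connect(path)
        self._conn.execute("CREATE TABLE IF NOT EXISTS state (k TEXT PRIMARY KEY, v INTEGER NOT NULL)")

    def get(self, key: str) -> Optional[int]:
        row = self._conn.execute("SELECT v FROM state WHERE k = ?", (key,)).fetchone()
        return None if row is None else row[0]

    def put(self, key: str, value: int) -> None:
        self._conn.execute("INSERT OR REPLACE INTO state (k, v) VALUES (?, ?)", (key, value))
        self._conn.commit()  # durable when the connection is backed by a file


def count_events(keys: Iterable[str], store: StateStore) -> None:
    """A stateful node: every update is a read-modify-write against the external store."""
    for key in keys:
        store.put(key, (store.get(key) or 0) + 1)
```

The node code is identical for both stores; only the latency and durability tradeoff changes.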

Stateful stream processing is complex. Architectural details are beyond the scope of this blog, but four challenges inherent in stateful stream processing are worth noting:

- Maintaining and updating state requires significant processing resources. State must be updated with every incoming event, which can be difficult for high-volume data streams.

- Guaranteeing exactly-once processing is critical for all stateful stream processing. When data arrives out of order, the state must be maintained and updated to keep results accurate, which adds processing overhead.

- Steps must be taken to ensure that data is not lost or corrupted in the event of a failure. Robust systems require careful implementations of checkpointing, state replication, and recovery.

- The complexity of the processing logic and the stateful context can make errors difficult to reproduce and diagnose. Because stream processing systems are distributed, with many components and data sources, root cause analysis can be very challenging.
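The failure-recovery challenge can be illustrated with a simplified checkpointing sketch. It assumes a hypothetical replayable input log (standing in for a Kafka partition) and snapshots the read offset and the state together. This shows how consistent checkpoints give exactly-once effects on internal state; it is not how any particular framework implements checkpointing.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Checkpoint:
    offset: int            # next position in the input log to read
    state: Dict[str, int]  # snapshot of the state as of that position


@dataclass
class CountingProcessor:
    log: List[str]  # durable, replayable input (hypothetical stand-in for a topic partition)
    checkpoint_every: int = 2
    offset: int = 0
    state: Dict[str, int] = field(default_factory=dict)
    checkpoint: Checkpoint = field(default_factory=lambda: Checkpoint(0, {}))

    def run(self, crash_at: Optional[int] = None) -> None:
        while self.offset < len(self.log):
            if self.offset == crash_at:
                raise RuntimeError("simulated node failure")
            key = self.log[self.offset]
            self.state[key] = self.state.get(key, 0) + 1
            self.offset += 1
            if self.offset % self.checkpoint_every == 0:
                # Offset and state are snapshotted together, so they always agree.
                self.checkpoint = Checkpoint(self.offset, copy.deepcopy(self.state))

    def recover(self) -> None:
        # Throw away un-checkpointed work and rewind the input and the state together.
        self.offset = self.checkpoint.offset
        self.state = copy.deepcopy(self.checkpoint.state)


if __name__ == "__main__":
    p = CountingProcessor(["a", "b", "a", "c", "a"])
    try:
        p.run(crash_at=3)
    except RuntimeError:
        p.recover()
    p.run()
    print(p.state)
```

Had the processor rewound to the checkpointed offset but kept its in-flight state, the third event would have been counted twice (`a` = 4). Exactly-once delivery to external systems additionally requires idempotent or transactional sinks, which this sketch leaves out.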

Stateless stream processing is valuable, but many of the more compelling use cases require state. Working with state makes stream processing tools more complex and harder to use than RTA databases.

Where to Start with Stream Processing Tools?

Over the past few years, the range of available stream processing tools has grown significantly. This post covers some of the major players, both open-source and fully managed, to give you a sense of the options available.

Apache Flink

Apache Flink is an open-source, distributed framework designed for real-time stream processing. It is developed under the Apache Software Foundation and written in Java and Scala. Flink is one of the most popular stream processing frameworks thanks to its flexibility, performance, and active open-source community; companies such as Lyft, Uber, and Alibaba use it. Flink supports a wide variety of data sources and programming languages, as well as stateful stream processing applications.

Flink uses a streaming dataflow model that processes data as it arrives, rather than in batches. It relies on checkpoints so that processing can recover correctly even if individual nodes fail. However, Flink is complex, so users need significant expertise and ongoing operational effort to tune, monitor, and troubleshoot it.

Apache Spark Streaming

Apache Spark Streaming is another popular open-source stream processing framework capable of handling complex, high-throughput workloads.

Unlike Flink, Spark Streaming uses a micro-batch processing model, in which incoming data is processed in small batches at fixed intervals. This results in higher end-to-end latency. For fault tolerance, Spark Streaming uses checkpointing to recover from failures, and recovery can cause latency spikes. SQL is supported through the Spark SQL library, but that support is more limited than in other stream processing libraries, so it suits only a subset of use cases. On the other hand, Spark Streaming has been around longer than most alternatives, which makes it easier to find best practices and freely available open-source code for common use cases.
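The latency cost of micro-batching can be shown with a small model, assuming events are grouped by arrival time into fixed intervals and each batch runs when its interval closes. This is a simplified illustration with hypothetical function names, not Spark’s API or scheduler.

```python
import math
from typing import Dict, List, Tuple

Event = Tuple[float, str]  # (arrival_time_seconds, payload)


def batch_end(arrival: float, interval: float) -> float:
    """End of the fixed-size interval containing `arrival`; the batch is processed then."""
    return (math.floor(arrival / interval) + 1) * interval


def micro_batches(events: List[Event], interval: float) -> Dict[float, List[str]]:
    """Group events into batches keyed by the time each batch becomes eligible to run."""
    batches: Dict[float, List[str]] = {}
    for arrival, payload in events:
        batches.setdefault(batch_end(arrival, interval), []).append(payload)
    return batches


def waiting_time(events: List[Event], interval: float) -> List[float]:
    """Extra delay each event incurs by waiting for its batch (processing time excluded)."""
    return [batch_end(arrival, interval) - arrival for arrival, _ in events]
```

In a record-at-a-time model such as Flink’s, that waiting time is close to zero; with micro-batches it can approach the full batch interval.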

Confluent Cloud and ksqlDB

ksqlDB is Confluent Cloud’s primary offering for real-time stream processing. It combines the familiar SQL-like syntax of KSQL with additional features such as connectors, a persistent query engine, windowing, and aggregation.

One important aspect of ksqlDB on Confluent Cloud is that it is fully managed, which simplifies deployment and scaling. By contrast, Flink can be deployed in various configurations, including as a standalone cluster, on YARN, or on Kubernetes, although fully managed versions of Flink are also available. ksqlDB provides a SQL-like query language with a range of built-in functions and operators, which can be extended with user-defined functions (UDFs). It is natively integrated with the Kafka ecosystem and works directly with Kafka streams, topics, and brokers.

Where Will My Data Ultimately Rest?

RTA databases are fundamentally different from stream processing systems. Both categories are growing quickly, and their functionality overlaps somewhat. Here is what we mean by an “RTA database”.

In the context of streaming data, RTA databases serve as a sink for storing data. Stream processors and RTA databases are both useful for real-time analytics and data applications, but they serve data differently: an RTA database returns results on demand when queried, whereas a stream processor serves data continuously. When ingesting data into an RTA database, you can configure ingest transformations that filter, aggregate, or in some cases join data as it arrives. The data then resides in a table, which you cannot subscribe to the way you can subscribe to a stream.
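The sink-plus-query pattern can be sketched with Python’s built-in `sqlite3` standing in for an RTA database, an assumption made purely to keep the example self-contained. The `purchases` table and its columns are hypothetical. Ingest applies a filter and a projection before rows land in the table, and results are produced only when a query asks for them.

```python
import sqlite3
from typing import Dict, Iterable


def ingest(conn: sqlite3.Connection, events: Iterable[Dict[str, object]]) -> None:
    """Apply an ingest-time transformation (filter + projection), then write rows to a table."""
    conn.execute("CREATE TABLE IF NOT EXISTS purchases (user_id TEXT, amount REAL)")
    rows = [
        (str(e["user_id"]), float(e["amount"]))  # keep only the columns we will query
        for e in events
        if float(e["amount"]) >= 50.0  # ingest-time filter
    ]
    conn.executemany("INSERT INTO purchases (user_id, amount) VALUES (?, ?)", rows)
    conn.commit()


def totals_by_user(conn: sqlite3.Connection) -> Dict[str, float]:
    """Pull-based: the answer is computed from the stored table only when someone asks."""
    cursor = conn.execute(
        "SELECT user_id, SUM(amount) FROM purchases GROUP BY user_id ORDER BY user_id"
    )
    return {user_id: total for user_id, total in cursor.fetchall()}
```

Nothing is pushed to subscribers here; a consumer calls `totals_by_user` whenever it wants the current answer.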

Apart from the table vs. stream distinction, RTA databases differ from stream processing frameworks in how they index data: stream processing frameworks index data only narrowly, while RTA databases offer a wide range of indexing options. Indexes are what allow RTA databases to serve millisecond-latency queries, and different index types suit different query patterns. Choosing the right RTA database often comes down to its indexing approach. If you need fast aggregations on historical data, you will likely choose a column-oriented database with a primary index. Looking up data for a single transaction? Choose a database with an inverted index. Every RTA database makes different indexing decisions; the best choice depends on your query patterns and user needs.
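The two index types mentioned above can be sketched as plain Python data structures. This is a conceptual illustration under assumptions (hypothetical `order_id`, `customer`, and `amount` fields), not how any specific RTA database lays out its indexes.

```python
from collections import defaultdict
from typing import Dict, List, Set

Row = Dict[str, object]


def build_inverted_index(rows: List[Row], field: str) -> Dict[object, Set[int]]:
    """Map each value of `field` to the positions of the rows that contain it."""
    index: Dict[object, Set[int]] = defaultdict(set)
    for position, row in enumerate(rows):
        index[row[field]].add(position)
    return dict(index)


def lookup(rows: List[Row], index: Dict[object, Set[int]], value: object) -> List[Row]:
    """Point lookup: jump straight to the matching rows instead of scanning every row."""
    return [rows[i] for i in sorted(index.get(value, set()))]


def to_columns(rows: List[Row]) -> Dict[str, List[object]]:
    """Column-oriented layout: one list per field (assumes every row has the same fields)."""
    columns: Dict[str, List[object]] = defaultdict(list)
    for row in rows:
        for name, value in row.items():
            columns[name].append(value)
    return dict(columns)


def column_sum(columns: Dict[str, List[object]], name: str) -> float:
    """Aggregation reads a single column and never touches the other fields."""
    return sum(float(v) for v in columns[name])
```

The inverted index answers a point lookup by touching only the matching rows, while the columnar layout lets an aggregation scan one field without reading the rest of each row.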

One final point of comparison: enrichment. You can enrich streaming data with additional data in a stream processing framework, for example by joining two streams in real time. Stream processing supports inner joins, left and right joins, and full outer joins. Depending on the system, you may also be able to join streaming data with historical data. Enrichment in a stream processor involves tradeoffs between simplicity and speed that can be hard to balance. RTA databases often use simpler methods to enrich and join data. A common approach is denormalization, which flattens and combines multiple tables into a single table, removing the need for joins at query time. There are alternatives, however: Rockset, for example, supports inner joins on streaming data at ingest time, as well as joins at query time.
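Stream enrichment can be sketched as a join between an event stream and a lookup table, assuming hypothetical order events and a dictionary of customer records. It shows inner versus left join semantics. Each merged dictionary is also what a denormalized record looks like: the customer fields are copied into every order before it is stored.

```python
from typing import Dict, Iterable, Iterator

Record = Dict[str, object]


def enrich_inner(orders: Iterable[Record], customers: Dict[str, Record]) -> Iterator[Record]:
    """Inner join: emit an enriched order only when its customer is known."""
    for order in orders:
        customer = customers.get(str(order["customer_id"]))
        if customer is not None:
            yield {**order, **customer}


def enrich_left(orders: Iterable[Record], customers: Dict[str, Record], missing: Record) -> Iterator[Record]:
    """Left join: keep every order, filling in default values when the customer is unknown."""
    for order in orders:
        yield {**order, **customers.get(str(order["customer_id"]), missing)}
```

Denormalized records let an RTA database filter orders by a customer field such as `tier` without a join, at the cost of rewriting many records whenever customer data changes.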

The upshot of RTA databases is that users can run complex, low-latency queries against data that is only 1-2 seconds old. Both stream processing frameworks and RTA databases let users transform and serve data, and both can join, filter, and analyze data streams in real time.

What Are the Popular RTA Databases?

Elasticsearch

Elasticsearch is a scalable, open-source search and analytics engine that stores, searches, and analyzes large volumes of data in near real time. It is primarily used for log analytics, full-text search, and real-time analytics.

Using streaming data with Elasticsearch generally requires joining and flattening (denormalizing) the data before ingestion, a step most stream processing tools do not require. Elasticsearch typically performs very well for real-time analytics and queries on text data. Although it handles updates efficiently in normal scenarios, its performance can degrade significantly under a high volume of updates: when an upstream update or insert occurs, Elasticsearch must reindex the affected data on all of its replicas, which consumes compute resources and hurts overall performance. Some streaming data use cases are append-only, but many are not, so consider your update frequency and denormalization needs before choosing Elasticsearch.

Apache Druid

Apache Druid is a high-performance, column-oriented data store built for sub-second analytical queries and real-time data ingestion. It is traditionally described as a time-series database and excels at filtering and aggregation. Druid is a distributed system typically used in large-scale data applications. It is known for its performance, but also for being challenging to operate.

Druid handles transformations and enrichment much as Elasticsearch does. If you rely on your RTA database to join multiple streams, consider handling those operations elsewhere, because denormalization can be cumbersome. Updates present a similar problem. When Druid ingests an update from a stream, it must reindex all of the data in the affected segment, a time-based slice of the data. This adds latency and compute cost. If you are dealing with a high volume of updates, consider a different RTA database for streaming data. Finally, certain SQL features, such as subqueries, correlated queries, and full outer joins, are not supported in Druid’s query language.
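The update cost described above can be illustrated with a toy time-partitioned store. This is a simplified model under stated assumptions (hourly segments, a hypothetical in-place `update` method), not Druid’s actual ingestion path or segment format; it only shows why changing one row forces work proportional to its whole segment.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Row = Tuple[float, str, float]  # (timestamp_seconds, key, value)


class TimeSegmentedStore:
    """Toy store that partitions rows into fixed time segments (hourly, by assumption)."""

    def __init__(self, segment_seconds: float = 3600.0) -> None:
        self.segment_seconds = segment_seconds
        self.segments: Dict[float, List[Row]] = defaultdict(list)
        self.rows_reindexed = 0  # crude stand-in for the compute spent on updates

    def _segment_start(self, timestamp: float) -> float:
        return (timestamp // self.segment_seconds) * self.segment_seconds

    def append(self, row: Row) -> None:
        # Appends only touch the segment that covers the new row's timestamp.
        self.segments[self._segment_start(row[0])].append(row)

    def update(self, timestamp: float, key: str, new_value: float) -> None:
        # Segments are treated as immutable: changing one row means rebuilding
        # (and, in a real system, re-indexing) every row in its segment.
        start = self._segment_start(timestamp)
        rebuilt = [
            (ts, k, new_value if (ts == timestamp and k == key) else v)
            for ts, k, v in self.segments[start]
        ]
        self.rows_reindexed += len(rebuilt)
        self.segments[start] = rebuilt
```

Updating a single row in a segment that holds three rows costs three rows of rebuilding; with millions of rows per segment, frequent updates become expensive.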

Rockset

Rockset is a fully managed, cloud-native real-time analytics database that requires no infrastructure management or tuning. It serves complex analytical queries with millisecond latency using full-featured SQL. Because it combines a column index, a row index, and a search index, Rockset is well suited to many different query patterns. Rockset’s custom-built SQL query optimizer analyzes each query and chooses the fastest indexing approach based on the optimal execution plan. Its architecture also fully isolates the compute used to ingest data from the compute used to query it.

Rockset offers many of the same transformation and enrichment capabilities as stream processing frameworks. In addition to inner joins on streaming data at ingest time, it supports joins at query time, so you can enrich streaming data with historical data without denormalizing it. Rockset can also ingest and index schemaless data, including deeply nested objects and arrays, and it handles updates without a performance penalty. If ease of use, cost, and performance are your top priorities, Rockset is a strong choice of RTA database for streaming data. To delve further into this topic, consider exploring…

Wrapping Up

Stream processing frameworks are well suited to enriching, filtering, aggregating, and otherwise transforming streaming data in real time, including for applications such as image recognition and natural language processing. However, they are typically not used for long-term storage and offer limited support for indexing, so they often need an RTA database for storing and querying data. They also require significant expertise to set up, tune, maintain, and debug. Stream processing tools are powerful, but they are also high-maintenance.

RTA databases are ideal stream processing sinks. They support high-volume ingest and indexing, enabling sub-second analytical queries on real-time data. Connectors to many other data sources, including data lakes, warehouses, and databases, enable a wide range of enrichment. Some RTA databases, such as Rockset, also support streaming joins, filtering, and aggregations at ingest time.

The next installment in this series will explain how to operationalize RTA databases for advanced analytics on streaming data.

Get started with Rockset’s real-time analytics database today. We provide $300 in credits and don’t require a credit card. We have also built many sample datasets that mimic streaming data, so you can take it for a spin.

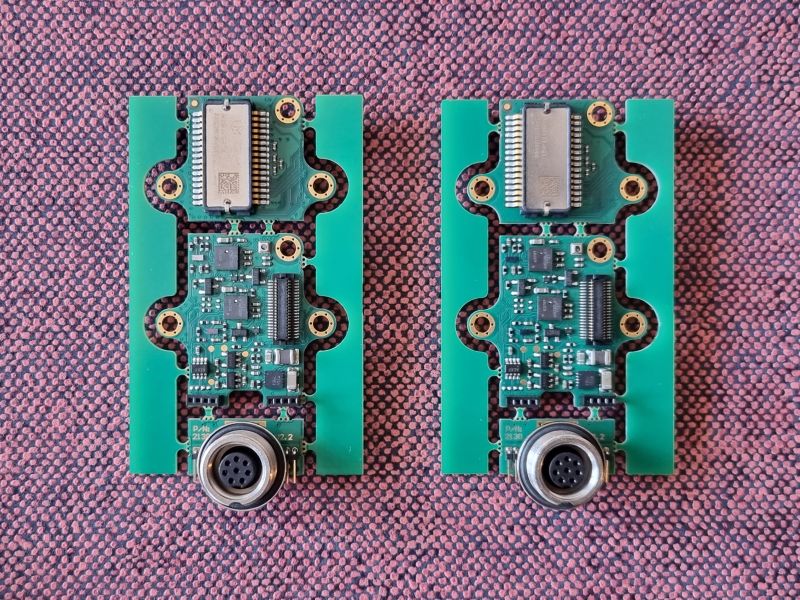

One Palletrone replaces the battery in another Palletrone.Seoul Tech
