Authored by Lakshya Mathur and Abhishek Karnik

As we gear up for the 2024 Paris Olympics, excitement is building, and so is the potential for scams. From fake ticket sales to counterfeit merchandise, scammers are on the prowl, leveraging big events to trick unsuspecting fans. Recently, McAfee researchers uncovered a particularly malicious scam that aims not only to deceive but also to portray the International Olympic Committee (IOC) as corrupt.

This scam relies on sophisticated social engineering techniques, which have become more accessible than ever thanks to advancements in Artificial Intelligence (AI). Tools like audio cloning enable scammers to create convincing fake audio messages at a low cost. These technologies were highlighted in McAfee’s AI Impersonator report last year, which showcased the growing threat of such tech in the hands of fraudsters.

The latest scheme involves a fictitious Amazon Prime series titled “Olympics has Fallen II: The End of Thomas Bach,” narrated by a deepfake version of Elon Musk’s voice. This fake series was reported to have been released on a Telegram channel on June 24th, 2024. It’s a stark reminder of the lengths to which scammers will go to spread misinformation and exploit public figures to create believable narratives.

As we approach the Olympic Games, it’s crucial to stay vigilant and question the authenticity of sensational claims, especially those found on less regulated platforms like Telegram. Always verify information through official channels to avoid falling victim to these sophisticated scams.

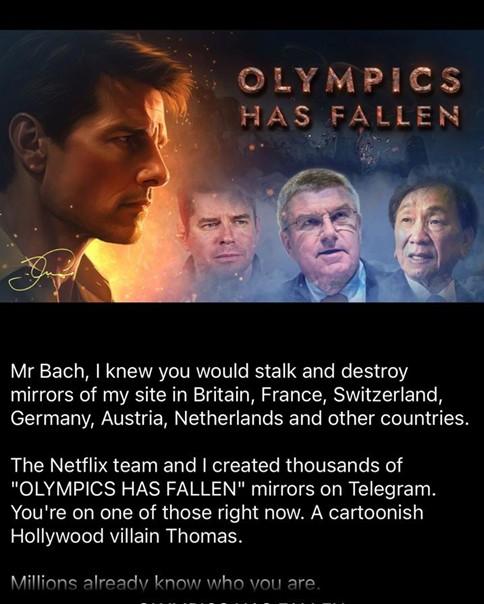

Cover image of the series

This series seems to be the work of the same creator who, a year ago, put out a similar short series titled “Olympics has Fallen,” falsely presented as a Netflix series featuring a deepfake voice of Tom Cruise. With the Olympics less than a month away, this new release appears to be a sequel to last year’s fabrication.

Image and description of last year’s released series

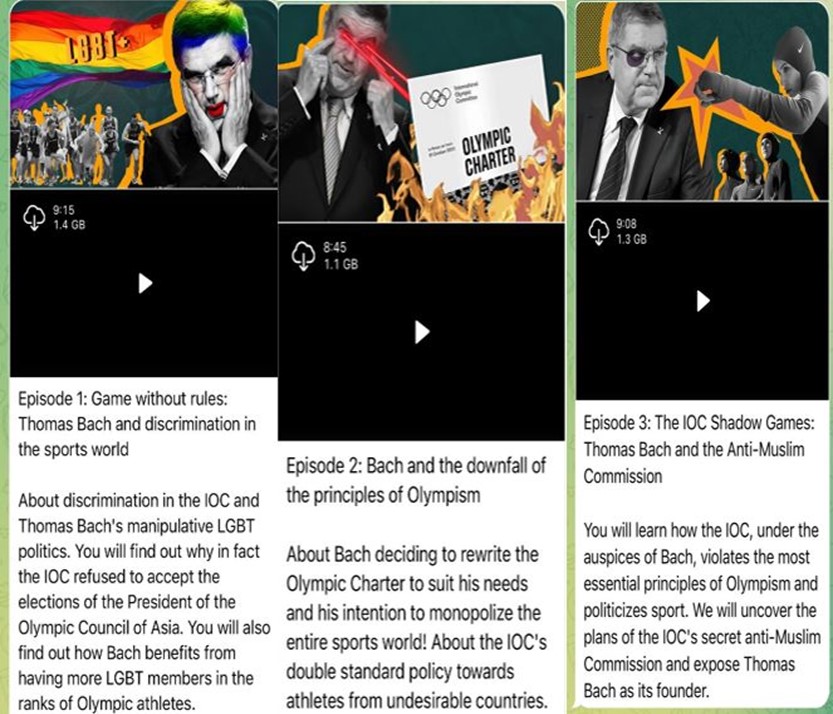

These so-called documentaries are currently being distributed via Telegram channels. The primary aim of this series is to target the Olympics and discredit its leadership. Within just a week of its release, the series has already attracted over 150,000 viewers, and the numbers continue to climb.

In addition to claiming to be an Amazon Prime story, the creators of this content have also circulated images of what seem to be fabricated endorsements and reviews from reputable publishers, strengthening their attempt at social engineering.

Fake endorsements from well-known publishers

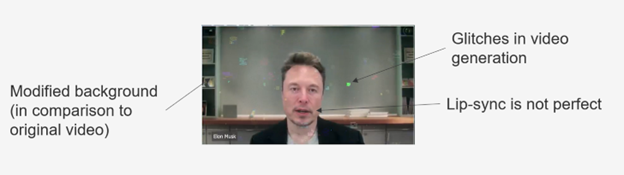

This 3-part series consists of episodes that use AI voice cloning, image diffusion and lip-sync to piece together a fake narration. A lot of effort has been expended to make the video look like a professionally created series. However, there are certain hints in the video that give it away, such as the picture-in-picture overlay that appears at various points in the series. On close observation, certain glitches are visible.

Overlay video within the series with some discrepancies

The original video appears to be from a Wall Street Journal (WSJ) interview that has then been altered and modified (notice the background). The audio clone is almost indiscernible by human inspection.

Original video snapshot from the WSJ interview

Modified and altered screenshot from part 3 of the fake series

Episode thumbnails and their descriptions captured from the Telegram channel

Elon Musk’s voice has been a target for impersonation before. In fact, McAfee’s 2023 Hacker Celebrity Hot List placed him at number six, highlighting his status as one of the most frequently mimicked public figures in cryptocurrency scams.

As the prevalence of deepfakes and related scams continues to grow, along with campaigns of misinformation and disinformation, McAfee has developed deepfake audio detection technology. Showcased on Intel’s AI PCs at RSA in May, McAfee’s Deepfake Detector (formerly known as Project Mockingbird) helps people discern truth from fiction. It defends consumers against cybercriminals who use fabricated, AI-generated audio to carry out scams that rob people of money and personal information, enable cyberbullying, and manipulate the public image of prominent figures.

With the 2024 Olympics on the horizon, McAfee predicts a surge in scams involving AI tools. Whether you’re planning to travel to the Summer Olympics or just following the excitement from home, it’s crucial to remain alert. Be wary of unsolicited text messages offering deals, avoid unfamiliar websites, and be skeptical of the information shared on various social platforms. It’s important to maintain a critical eye and use tools that enhance your online safety.

McAfee is committed to empowering consumers to make informed decisions by providing tools that identify AI-generated content and by raising awareness about their application where necessary.

AI-generated content is becoming increasingly believable. Some key recommendations when viewing content online:

- Be skeptical of content from untrusted sources – Always question the motive. In this case, the content is accessible on Telegram channels and posted to uncommon public cloud storage.

- Be vigilant while viewing the content – Most AI fabrications will have some flaws, although it’s becoming increasingly difficult to spot such discrepancies at a glance. In this video, we noted some obvious signs of forgery in the visuals, but the audio is somewhat harder to judge.

- Cross-verify information – Looking up the title on popular search engines, or searching Amazon Prime’s content, would very quickly lead consumers to realize that something is amiss.