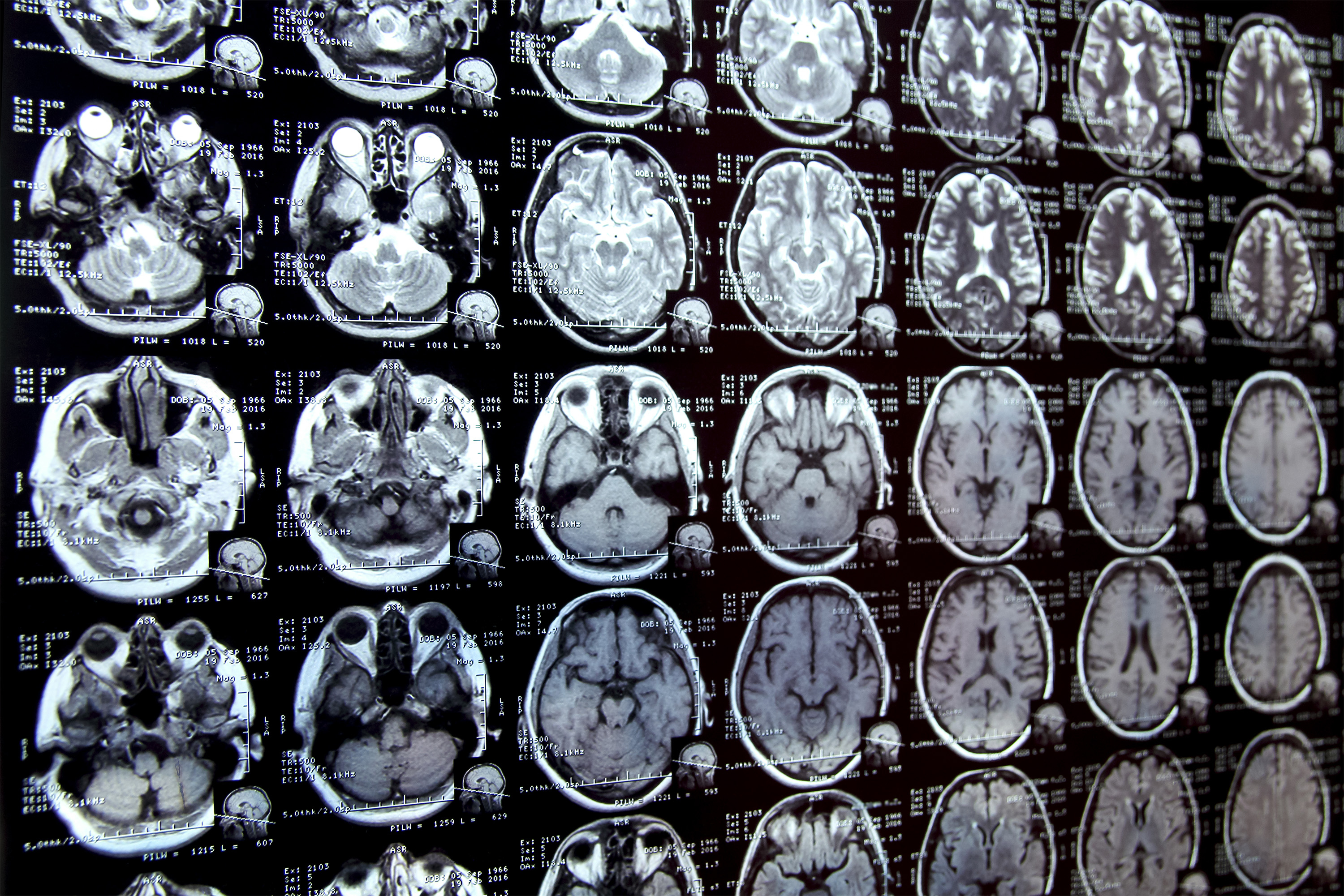

Annotating regions of interest in medical images, a process known as segmentation, is often one of the first steps clinical researchers take when running a new study involving biomedical images.

For instance, to determine how the size of the brain’s hippocampus changes as patients age, the scientist first outlines each hippocampus in a series of brain scans. For many structures and image types, this is often a manual process that can be extremely time-consuming, especially if the regions being studied are challenging to delineate.

To streamline the process, MIT researchers developed an artificial intelligence-based system that enables a researcher to rapidly segment new biomedical imaging datasets by clicking, scribbling, and drawing boxes on the images. This new AI model uses these interactions to predict the segmentation.

As the user marks additional images, the number of interactions they need to perform decreases, eventually dropping to zero. The model can then segment each new image accurately without user input.

It can do this because the model’s architecture has been specially designed to use information from images it has already segmented to make new predictions.

Unlike other medical image segmentation models, this system allows the user to segment an entire dataset without repeating their work for each image.

In addition, the interactive tool does not require a presegmented image dataset for training, so users don’t need machine-learning expertise or extensive computational resources. They can use the system for a new segmentation task without retraining the model.

In the long run, this tool could accelerate studies of new treatment methods and reduce the cost of clinical trials and medical research. It could also be used by physicians to improve the efficiency of clinical applications, such as radiation treatment planning.

“Many scientists might only have time to segment a few images per day for their research because manual image segmentation is so time-consuming. Our hope is that this system will enable new science by allowing clinical researchers to conduct studies they were prohibited from doing before because of the lack of an efficient tool,” says Hallee Wong, an electrical engineering and computer science graduate student and lead author of a paper on this new tool.

She is joined on the paper by Jose Javier Gonzalez Ortiz PhD ’24; John Guttag, the Dugald C. Jackson Professor of Computer Science and Electrical Engineering; and senior author Adrian Dalca, an assistant professor at Harvard Medical School and MGH, and a research scientist in the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL). The research will be presented at the International Conference on Computer Vision.

Streamlining segmentation

There are primarily two methods researchers use to segment new sets of medical images. With interactive segmentation, they input an image into an AI system and use an interface to mark areas of interest. The model predicts the segmentation based on those interactions.

A tool previously developed by the MIT researchers, ScribblePrompt, allows users to do this, but they must repeat the process for each new image.

Another approach is to develop a task-specific AI model to automatically segment the images. This approach requires the user to manually segment hundreds of images to create a dataset, and then train a machine-learning model. That model predicts the segmentation for a new image. But the user must start the complex, machine-learning-based process from scratch for each new task, and there is no way to correct the model if it makes a mistake.

This new system, MultiverSeg, combines the best of each approach. It predicts a segmentation for a new image based on user interactions, like scribbles, but also keeps each segmented image in a context set that it refers to later.

When the user uploads a new image and marks areas of interest, the model draws on the examples in its context set to make a more accurate prediction, with less user input.
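
The workflow described above can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions, not MultiverSeg's code or API: `ContextSession`, `toy_predict`, and the `(row, col, label)` click format are hypothetical names invented here, and a simple intensity threshold stands in for the neural network. The point is the data flow: each accepted segmentation joins a context set, and later predictions draw on that set as well as on any new clicks.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence, Tuple

Image = List[List[float]]        # toy stand-in for a 2D scan
Mask = List[List[int]]           # binary mask with the same shape as the image
Click = Tuple[int, int, int]     # (row, col, label); label 1 marks the structure
Example = Tuple[Image, Mask]     # one previously segmented image


def toy_predict(image: Image, clicks: Sequence[Click], context: Sequence[Example]) -> Mask:
    """Placeholder for the real model (an assumption): thresholds on intensity."""
    # Evidence from the user's positive clicks on the current image.
    candidates = [image[r][c] for r, c, label in clicks if label == 1]
    # Evidence from foreground pixels of every example in the context set.
    for ctx_image, ctx_mask in context:
        for img_row, mask_row in zip(ctx_image, ctx_mask):
            candidates += [v for v, m in zip(img_row, mask_row) if m == 1]
    if not candidates:  # no clicks and no context: nothing to go on
        return [[0 for _ in row] for row in image]
    threshold = min(candidates)
    return [[1 if v >= threshold else 0 for v in row] for row in image]


@dataclass
class ContextSession:
    """Hypothetical session object that owns the growing context set."""
    predict: Callable[[Image, Sequence[Click], Sequence[Example]], Mask]
    context: List[Example] = field(default_factory=list)

    def segment(self, image: Image, clicks: Sequence[Click] = ()) -> Mask:
        return self.predict(image, clicks, self.context)

    def accept(self, image: Image, mask: Mask) -> None:
        # Once the user is satisfied, the pair becomes context for later images.
        self.context.append((image, mask))


session = ContextSession(predict=toy_predict)

first = [[0.1, 0.9], [0.8, 0.2]]
first_mask = session.segment(first, clicks=[(0, 1, 1), (1, 0, 1)])  # two clicks
session.accept(first, first_mask)

second = [[0.85, 0.1], [0.3, 0.95]]
second_mask = session.segment(second)  # no clicks: context alone drives the prediction
```

In this toy run, the second image is segmented with no clicks at all because the accepted first example already sits in the context set, which mirrors the drop in required interactions the article describes.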

The researchers designed the model’s architecture to use a context set of any size, so the user doesn’t need to have a certain number of images. This gives MultiverSeg the flexibility to be used in a range of applications.

“At some point, for many tasks, you shouldn’t need to provide any interactions. If you have enough examples in the context set, the model can accurately predict the segmentation on its own,” Wong says.

The researchers carefully engineered and trained the model on a diverse collection of biomedical imaging data to ensure it had the ability to incrementally improve its predictions based on user input.

The user doesn’t need to retrain or customize the model for their data. To use MultiverSeg for a new task, one can upload a new medical image and start marking it.

When the researchers compared MultiverSeg to state-of-the-art tools for in-context and interactive image segmentation, it outperformed each baseline.

Fewer clicks, better results

Unlike these other tools, MultiverSeg requires less user input with each image. By the ninth new image, it needed only two clicks from the user to generate a segmentation more accurate than a model designed specifically for the task.

For some image types, like X-rays, the user might only need to segment one or two images manually before the model becomes accurate enough to make predictions on its own.

The tool’s interactivity also enables the user to make corrections to the model’s prediction, iterating until it reaches the desired level of accuracy. Compared to the researchers’ previous system, MultiverSeg reached 90 percent accuracy with roughly 2/3 the number of scribbles and 3/4 the number of clicks.

“With MultiverSeg, users can always provide more interactions to refine the AI predictions. This still dramatically accelerates the process because it is usually faster to correct something that exists than to start from scratch,” Wong says.

Moving forward, the researchers want to test this tool in real-world situations with clinical collaborators and improve it based on user feedback. They also want to enable MultiverSeg to segment 3D biomedical images.

This work is supported, in part, by Quanta Computer, Inc. and the National Institutes of Health, with hardware support from the Massachusetts Life Sciences Center.