LangChain and LlamaIndex are robust frameworks for building applications on top of large language models. Both are strong in their own right, but each has distinct strengths and areas of focus that make it suitable for different NLP application requirements.

This blog post compares LangChain and LlamaIndex to help you decide when to use one framework over the other.

Learning Objectives

- Understand how LangChain and LlamaIndex differ in design, functionality, and application focus.

- Recognize the appropriate use cases for each framework, such as LangChain for conversational AI and LlamaIndex for data retrieval.

- Gain an understanding of the key components of both frameworks, including indexing, retrieval algorithms, workflows, and context retention.

- Assess the performance and lifecycle management tools available in each framework.

- Select the right framework, or combination of frameworks, for the specific requirements of a project.

What is LangChain?

You can think of LangChain as a framework rather than just a tool. It provides a wide range of tools out of the box for interacting with large language models (LLMs). LangChain’s key feature is the chain, which links individual components together. For example, you could combine a prompt template and an LLM in a chain to build a prompt and query the LLM. This modular structure makes it easy to combine components flexibly to perform complex tasks.
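The chaining idea can be illustrated without any library. The following is a minimal sketch in plain Python, not LangChain’s actual API: the `Step` class, the `fake_llm` stand-in, and the template text are all hypothetical names chosen for illustration.

```python
from typing import Any, Callable


class Step:
    """Wraps a function so that steps can be linked with the | operator."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # Run this step first, then feed its output to the next step.
        return Step(lambda x: other.fn(self.fn(x)))

    def invoke(self, x: Any) -> Any:
        return self.fn(x)


# Hypothetical prompt template: fills a dictionary into a string.
prompt = Step(lambda d: "Translate from English into {language}: {text}".format(**d))

# Stand-in for an LLM call; a real chain would call a model here.
fake_llm = Step(lambda p: f"[model reply to: {p}]")

chain = prompt | fake_llm
result = chain.invoke({"language": "Italian", "text": "hi!"})
print(result)
```

Because every step exposes the same `invoke` interface, steps can be swapped or reordered without changing the code that runs the chain.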

LangChain simplifies every stage of the LLM application lifecycle:

- Development: Build your applications with LangChain’s open-source components and third-party integrations. Use LangGraph to build stateful agents with first-class streaming and human-in-the-loop support.

- Productionization: Use LangSmith to inspect, monitor, and evaluate your applications so that you can continuously optimize and deploy with confidence.

- Deployment: Turn your LangGraph applications into production-ready APIs and assistants.

LangChain Ecosystem

- langchain-core: Base abstractions and the LangChain Expression Language (LCEL). The abstractions hide implementation details behind simple interfaces, and LCEL builds on them so that developers can compose components declaratively.

- Integration packages (for example, langchain-openai): Important integrations have been split into lightweight packages that are co-maintained by the LangChain team and the integration developers.

- langchain: Chains, agents, and retrieval strategies that make up an application’s cognitive architecture.

- langchain-community: Third-party integrations that are maintained by the community.

- LangGraph: Build robust, stateful multi-actor applications with LLMs by modeling steps as nodes and edges in a graph. It integrates smoothly with LangChain but can also be used independently.

- LangGraph Platform: Deploy LLM applications built with LangGraph to production.

- LangSmith: A developer platform for debugging, testing, evaluating, and monitoring LLM applications.
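To make the “steps as nodes and edges” idea concrete, here is a minimal sketch in plain Python. It does not use LangGraph; the node names, the `run_graph` helper, and the state keys are assumptions made up for illustration.

```python
from typing import Callable, Dict, Optional

State = Dict[str, str]


# Nodes: each step reads the shared state and returns an updated copy.
def draft(state: State) -> State:
    return {**state, "draft": f"draft answer to '{state['question']}'"}


def review(state: State) -> State:
    return {**state, "final": state["draft"].upper()}


nodes: Dict[str, Callable[[State], State]] = {"draft": draft, "review": review}

# Edges: which node runs after which; None marks the end of the graph.
edges: Dict[str, Optional[str]] = {"draft": "review", "review": None}


def run_graph(start: str, state: State) -> State:
    current: Optional[str] = start
    while current is not None:
        state = nodes[current](state)
        current = edges[current]
    return state


final_state = run_graph("draft", {"question": "hi"})
print(final_state["final"])
```

Keeping the state in one dictionary that every node receives is what makes such a graph stateful: later nodes can read whatever earlier nodes wrote.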

Building Your First LLM Application with LangChain and OpenAI

Let’s build a simple LLM application with LangChain and OpenAI and see how it works.

We’ll start by installing the required packages.

    !pip install langchain-core "langgraph>0.2.27"
    !pip install -qU langchain-openai

Setting up OpenAI as the LLM

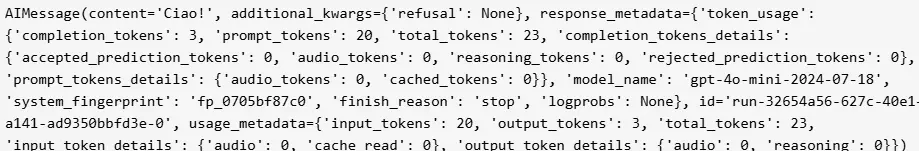

    import getpass
    import os

    from langchain_openai import ChatOpenAI

    # Prompt for the key only if it is not already set in the environment.
    if "OPENAI_API_KEY" not in os.environ:
        os.environ["OPENAI_API_KEY"] = getpass.getpass()

    model = ChatOpenAI(model="gpt-4o-mini")

To call the model, we can pass a list of messages to the `.invoke` method.

    from langchain_core.messages import HumanMessage, SystemMessage

    messages = [
        SystemMessage("Translate the following from English into Italian"),
        HumanMessage("hi!"),
    ]

    model.invoke(messages)

Now let’s create a prompt template. Prompt templates are a LangChain concept designed to help with this transformation: they take in raw user input and return data (a prompt) that is ready to pass to a language model.

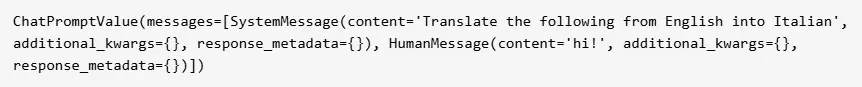

    from langchain_core.prompts import ChatPromptTemplate

    system_template = "Translate the following from English into {language}"
    prompt_template = ChatPromptTemplate.from_messages(
        [("system", system_template), ("user", "{text}")]
    )

This template takes two variables: `language` and `text`. The `language` variable is formatted into the system message, and the user’s `text` becomes the user message. The input to the prompt template is a dictionary. We can try the template on its own:

    prompt = prompt_template.invoke({"language": "Italian", "text": "hi!"})

This returns a ChatPromptValue containing two messages. If you want to access the messages directly, you can call:

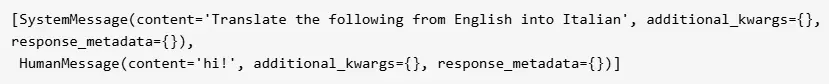

    prompt.to_messages()

Finally, we can invoke the chat model on the formatted prompt.

    response = model.invoke(prompt)
    print(response.content)

LangChain is versatile and adaptable, offering a wide range of tools for NLP applications, from simple queries to complex workflows. The LangChain documentation covers its components in more depth.

What is LlamaIndex?

LlamaIndex, previously known as GPT Index, is a framework for building context-augmented generative AI applications by combining LLMs with your own data sources and domain knowledge. Its primary goal is to ingest, structure, and access private or domain-specific data efficiently. LlamaIndex handles large datasets well and provides fast, accurate information access, which makes it a strong choice for search and retrieval applications.

It provides a set of tools that make it easier to integrate custom data into LLMs, with a focus on tasks that demand strong search capabilities.

LlamaIndex is highly effective for data indexing and querying. In my experience with LlamaIndex, it is an ideal solution for working with vector embeddings and retrieval-augmented generation (RAG).

LlamaIndex places no restrictions on how you use LLMs. You can use LLMs for auto-completion, chatbots, agents, and more; LlamaIndex simply makes them easier to use.

It provides tools such as:

- Data connectors ingest your existing data from its native source and format. These sources could be APIs, PDFs, SQL databases, and many others.

- Data indexes structure your data in intermediate representations that are easy and performant for LLMs to consume.

- Engines provide natural language access to your data. For example:

  - Query engines are powerful interfaces for question answering (for example, a RAG flow).

  - Chat engines are conversational interfaces for multi-message, back-and-forth interactions with your data.

- Agents are LLM-powered knowledge workers augmented by tools, ranging from simple helper functions to API integrations and more.

- Observability/Evaluation integrations let you rigorously experiment with, evaluate, and monitor your application in a virtuous cycle.

- Workflows let you combine all of the above into an event-driven system that is more flexible than graph-based approaches.
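The event-driven style mentioned in the last item can be sketched in plain Python. This is an illustration of the general pattern only, not LlamaIndex’s Workflow API; the event classes, the handler functions, and the `run` loop are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StartEvent:
    query: str


@dataclass
class RetrievedEvent:
    query: str
    context: str


@dataclass
class StopEvent:
    result: str


def retrieve(ev: StartEvent) -> RetrievedEvent:
    # Placeholder retrieval; a real step would query an index here.
    return RetrievedEvent(query=ev.query, context="stub context")


def synthesize(ev: RetrievedEvent) -> StopEvent:
    # Placeholder synthesis; a real step would call an LLM here.
    return StopEvent(result=f"answer to '{ev.query}' using '{ev.context}'")


# Each handler is selected by the type of event it consumes.
handlers = {StartEvent: retrieve, RetrievedEvent: synthesize}


def run(event):
    # Keep dispatching until some handler emits a StopEvent.
    while not isinstance(event, StopEvent):
        event = handlers[type(event)](event)
    return event.result


workflow_result = run(StartEvent(query="What is the essay about?"))
print(workflow_result)
```

In this style no step needs to know which step comes next; the type of the event it returns decides where control goes.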

LlamaIndex Ecosystem

Like LangChain, LlamaIndex has its own ecosystem.

- llama_deploy: Deploy your agentic workflows as production microservices.

- LlamaHub: A large and growing collection of custom data connectors.

- SEC Insights: A LlamaIndex-powered application for financial research.

- create-llama: A CLI tool for quickly scaffolding LlamaIndex projects.

Building Your First LLM Application with LlamaIndex and OpenAI

Let’s build a simple LLM application with LlamaIndex and OpenAI and see how it works.

Let’s install the library:

    !pip install llama-index

Set up the OpenAI key:

LlamaIndex uses OpenAI’s gpt-3.5-turbo by default. Don’t put your API key directly in your code; instead, store it as an environment variable. On macOS and Linux, this is the command:

    export OPENAI_API_KEY=XXXXX

and on Windows it is:

    set OPENAI_API_KEY=XXXXX

This example uses the text of an essay by Paul Graham.

Download the data and save it in a folder called “data”.

    from llama_index.core import SimpleDirectoryReader, VectorStoreIndex

    # Load every file in the "data" folder as a document.
    documents = SimpleDirectoryReader("data").load_data()

    # Build a vector index over the documents.
    index = VectorStoreIndex.from_documents(documents)

    # Ask a question against the index.
    query_engine = index.as_query_engine()
    response = query_engine.query("What is this essay all about?")
    print(response)

LlamaIndex abstracts the query process. In essence, it compares the query against the vectorized data (the index), selects the most relevant pieces, and passes them to the LLM as context.
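The retrieval step can be sketched with a toy example. The code below is an illustration under simplifying assumptions, not how LlamaIndex is implemented: it uses word counts in place of learned embeddings, and the sample chunks, `embed`, `cosine`, and `retrieve` are made-up names and data.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": word counts. Real systems use learned dense vectors.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Made-up sample chunks standing in for the loaded documents.
chunks = [
    "llamas live in the andes mountains",
    "python is a popular programming language",
    "vector indexes support fast similarity search",
]

# The "index": each chunk stored next to its vector.
index = [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(query: str, k: int = 1) -> list:
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


question = "what do vector indexes support"
top = retrieve(question, k=1)

# The retrieved chunk is placed in the prompt as context for the LLM.
prompt = f"Context: {top[0]}\nQuestion: {question}"
print(prompt)
```

Only the top-ranked chunks reach the model, which keeps the prompt short even when the underlying dataset is large.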

LangChain vs LlamaIndex: A Comparative Analysis

LangChain and LlamaIndex have different strengths and serve different use cases among NLP applications powered by large language models (LLMs). Here is a direct comparison:

| Feature | LlamaIndex | LangChain |
| --- | --- | --- |
| Data indexing | Converts diverse data types, including unstructured text and database records, into semantic embeddings. Optimized for creating searchable vector indexes. | Enables modular and customizable data indexing. Uses chains for complex operations, combining multiple tools and LLM calls. |
| Retrieval algorithms | Specializes in ranking documents by semantic similarity. Excels at efficient and accurate query performance. | Combines retrieval algorithms with LLMs to generate context-aware responses. Well suited to interactive applications that need dynamic information retrieval. |
| Customization | Limited customization, tailored to indexing and retrieval tasks. Focused on speed and accuracy within its specialized domain. | Highly customizable for diverse applications, from chatbots to workflow automation. Supports intricate workflows and tailored outputs. |
| Context retention | Basic capabilities for retaining query context. Suited to straightforward search and retrieval tasks. | Advanced context retention for coherent, long-running interactions. Essential for chatbots and customer support applications. |
| Use cases | Best for internal search systems, knowledge management, and enterprise solutions that need precise information retrieval. | Well suited to interactive applications such as customer support, content generation, and complex NLP tasks. |
| Performance | Optimized for quick and accurate data retrieval. Handles large datasets efficiently. | Handles complex workflows and integrates diverse tools. Balances performance with sophisticated task requirements. |
| Lifecycle management | Offers debugging and monitoring tools for tracking performance and reliability. Supports application lifecycle management from development to deployment. | Provides the LangSmith evaluation suite for testing, debugging, and optimization. Helps ensure robust performance under real-world conditions. |

Both frameworks offer powerful capabilities, and the choice between them depends on a project’s specific needs and goals. In some cases, combining the strengths of LlamaIndex and LangChain may give the best results.
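One way to picture such a combination is a retrieval step feeding a conversational step that keeps history. The sketch below is plain Python with made-up data and hypothetical names (`DOCS`, `retrieve`, `fake_llm`, `chat`); it uses neither library and only illustrates the division of roles.

```python
from typing import List, Tuple

# Made-up sample passages standing in for an indexed knowledge base.
DOCS = {
    "returns": "Items can be returned within 30 days.",
    "shipping": "Orders ship within 2 business days.",
}


def retrieve(question: str) -> str:
    # Retrieval role: a keyword lookup stands in for a vector index query.
    for keyword, passage in DOCS.items():
        if keyword in question.lower():
            return passage
    return ""


def fake_llm(prompt: str) -> str:
    # Stand-in for a model call: reports how many prompt lines it received.
    return f"(reply based on {len(prompt.splitlines())} prompt lines)"


# Conversation memory: earlier (question, answer) pairs.
history: List[Tuple[str, str]] = []


def chat(question: str) -> str:
    context = retrieve(question)
    past = "\n".join(f"User: {q}\nAssistant: {a}" for q, a in history)
    prompt = f"{past}\nContext: {context}\nUser: {question}".strip()
    answer = fake_llm(prompt)
    history.append((question, answer))
    return answer


first = chat("What is your returns policy?")
second = chat("And how fast is shipping?")
print(first)
print(second)
```

The second prompt is longer than the first because it carries the earlier turn, which is the context retention that the retrieval step alone does not provide.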

Conclusion

LangChain and LlamaIndex are both effective frameworks, but they serve distinct purposes. LangChain is highly modular and designed for sophisticated workflows that combine chains, prompts, models, memory, and agents. It excels in applications that require careful context retention and interaction management, such as chatbots, customer support systems, and content creation tools. Its integration with tools like LangSmith for evaluation and LangServe for deployment supports the development and optimization lifecycle, making it a strong choice for complex, long-term applications.

LlamaIndex concentrates on data retrieval and search. It transforms large datasets into semantic embeddings for fast and accurate retrieval, making it a strong choice for RAG-based applications, knowledge management, and enterprise solutions. LlamaHub extends its capabilities with data loaders that integrate a wide range of data sources.

Ultimately, choose LangChain if you need a flexible, context-aware framework for sophisticated workflows and interaction-heavy applications, and choose LlamaIndex for applications focused on fast, precise information retrieval from large datasets.

Key Takeaways

- LangChain excels in crafting modular, context-aware workflows for interactive applications such as chatbots and customer support systems, streamlining complex conversations.

- LlamaIndex specializes in efficient data indexing and retrieval, and is particularly well suited to data-heavy applications and large-scale dataset management.

- LangChain’s ecosystem supports lifecycle management with tools like LangSmith and LangGraph, which help with debugging and deployment.

- LlamaIndex offers robust tools such as vector embeddings and LlamaHub, enabling semantic search and efficient data integration.

- The two frameworks can be combined in applications that need both efficient data retrieval and complex workflow orchestration.

- Choose LangChain for dynamic, long-term applications and LlamaIndex for precise, large-scale information retrieval tasks.

Frequently Asked Questions

Q1. What is the primary difference between LangChain and LlamaIndex?

A. LangChain focuses on building complex workflows and interactive applications, such as chatbots and task automation. LlamaIndex focuses on efficient search and retrieval over large datasets using vector embeddings.

Q2. Can LangChain and LlamaIndex be used together?

A. Yes. The two can be used together to take advantage of their complementary strengths. For example, you can use LlamaIndex for efficient data retrieval and then feed the retrieved data into LangChain workflows for further processing and interaction.

Q3. Which framework is better suited to conversational AI applications?

A. LangChain is better suited to conversational AI because of its context retention, its memory management, and its modular chains, which support context-aware interactions.

Q4. How does LlamaIndex handle large datasets for information retrieval?

A. LlamaIndex uses vector embeddings to represent data semantically. This enables efficient top-k similarity search and fast, accurate query responses, even on very large datasets.