Human-in-the-loop (HITL) is a way to build machine learning models with people involved at the right moments. In human-in-the-loop machine learning, experts label data, review edge cases, and give feedback on outputs. Their input shapes objectives, sets quality bars, and teaches models how to handle gray areas. The result is human-AI collaboration that keeps systems useful and safe in real use. Many teams treat HITL as a last-minute manual fix. That view misses the point.

HITL works best as planned oversight inside the workflow. People guide data collection, annotation rules, model training checks, evaluation, deployment gates, and live monitoring. Automation handles the routine. Humans step in where context, ethics, and judgment matter. This balance turns human feedback in ML training into steady improvements, not one-off patches.

Here’s what this article covers next.

We define HITL in plain terms and map where it fits in the ML pipeline. We outline how to design a practical HITL system and explain why it lifts the quality of AI training data. We pair HITL with intelligent annotation, show how to scale without losing accuracy, and flag common pitfalls. We close with what HITL means as AI systems grow more autonomous.

What is Human-in-the-Loop (HITL)?

Human-in-the-Loop (HITL) is a model development approach in which human expertise guides, validates, and improves AI/ML systems. Instead of leaving data processing, training, and decision-making entirely to algorithms, HITL brings people into the process to improve accuracy, reliability, and safety.

In practice, HITL can involve:

- Data labeling and annotation: Humans provide the ground-truth data that trains AI models.

- Reviewing edge cases: Experts validate or correct outputs where the model is uncertain.

- Continuous feedback: Human corrections refine the system over time, improving adaptability.

This collaboration helps keep AI systems transparent, fair, and aligned with real-world needs, especially in complex or sensitive domains like healthcare, finance, or real estate. In essence, HITL combines the efficiency of automation with human judgment to build smarter, safer, and more trustworthy AI solutions.

What is Human-in-the-Loop Machine Learning?

Human-in-the-loop machine learning is an ML workflow that keeps people involved at key steps. It is more than manual fixes: think of planned human oversight in data work, model checks, and live operations.

Automation has grown fast. We moved from rule-based scripts to statistical methods, then to deep learning and today’s generative models. Systems now learn patterns at scale. Even so, models still miss rare cases and degrade as incoming data shifts. Labels age. Context changes by region, season, or policy. That is why edge cases, data drift, and domain quirks keep showing up.

The cost of errors is real. Facial recognition can show bias by skin tone and gender. Vision models in autonomous vehicles can misclassify the side of a truck as open space. In healthcare, a triage score can skew against a subgroup if the training data lacked adequate coverage. These errors erode trust.

HITL helps close that gap.

A simple human-in-the-loop architecture adds people to model training and review so decisions stay grounded in context.

- Experts write labeling rules, pull hard examples, and settle disputes.

- They set thresholds, review risky outputs, and document rare cases so the model learns from them.

- After launch, reviewers audit alerts, fix labels, and feed those changes into the next training cycle.
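The post-launch feedback loop in the last bullet can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: `FeedbackLoop`, `record_correction`, and `next_training_set` are hypothetical names, and labels are assumed to be stored as a simple mapping from item ID to class name.

```python
from dataclasses import dataclass, field


# Hypothetical sketch: class and method names are illustrative, not from any library.
@dataclass
class FeedbackLoop:
    """Folds reviewer corrections into the next training cycle."""

    labels: dict[str, str]  # item_id -> label used in the current training set
    corrections: dict[str, str] = field(default_factory=dict)
    # Audit trail of (item_id, old_label, new_label, reviewer) for traceability.
    audit_log: list[tuple[str, str, str, str]] = field(default_factory=list)

    def record_correction(self, item_id: str, new_label: str, reviewer: str) -> None:
        """Log a reviewer's fix; a 'correction' equal to the current label is ignored."""
        current = self.corrections.get(item_id, self.labels.get(item_id, "<missing>"))
        if current != new_label:
            self.corrections[item_id] = new_label
            self.audit_log.append((item_id, current, new_label, reviewer))

    def next_training_set(self) -> dict[str, str]:
        """Corrections override original labels; untouched items carry over unchanged."""
        return {**self.labels, **self.corrections}


# Example: a reviewer fixes a truck that was labeled as open space.
loop = FeedbackLoop(labels={"img_001": "car", "img_002": "open_space"})
loop.record_correction("img_002", "truck", reviewer="alice")
print(loop.next_training_set())  # {'img_001': 'car', 'img_002': 'truck'}
```

Keeping corrections separate from the original labels, rather than overwriting them in place, preserves the audit trail that later bias and quality reviews depend on.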

The model takes the routine work. People handle judgment, risk, and ethics. This steady loop improves accuracy, reduces bias, and keeps systems aligned with real use.

Why HITL is essential for high-quality training data

Human-in-the-Loop (HITL) is essential for high-quality training data and effective data preparation for machine learning because AI models are only as good as the data they learn from. Without human expertise, training datasets risk being inaccurate, incomplete, or biased. Automated labeling hits a ceiling when data is noisy or ambiguous: accuracy plateaus, and errors spread into training and evaluation.

Rechecks of popular benchmarks found label error rates of around 3 to 6 percent, enough to flip model rankings. This is where trained annotators come into the picture. HITL brings:

- Domain expertise. Radiologists for medical imaging, linguists for NLP. They set rules, spot edge cases, and fix subtle misreadings that scripts miss.

- Clear escalation. Tiered review with adjudication keeps single-pass errors from becoming ground truth.

- Targeted effort. Active learning routes only uncertain items to people, which raises signal without bloating cost.
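The "targeted effort" point relies on uncertainty sampling, one common active learning strategy. The sketch below assumes the model outputs a class-probability vector for each item and ranks items by prediction entropy; `select_for_review` and its `budget` parameter are illustrative names, and other uncertainty measures (least confidence, margin) could fill the same slot.

```python
import math


# Hypothetical sketch: function names are illustrative, not from a specific library.
def entropy(probs: list[float]) -> float:
    """Shannon entropy (in nats) of a predicted class distribution; higher means less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_review(predictions: dict[str, list[float]], budget: int) -> list[str]:
    """Return up to `budget` item IDs whose predictions are most uncertain.

    Items not selected keep the model's label; only the selected ones go to people.
    """
    ranked = sorted(predictions, key=lambda item_id: entropy(predictions[item_id]), reverse=True)
    return ranked[:budget]


preds = {
    "doc_a": [0.98, 0.02],  # confident
    "doc_b": [0.55, 0.45],  # close to a coin flip
    "doc_c": [0.70, 0.30],
}
print(select_for_review(preds, budget=2))  # ['doc_b', 'doc_c']
```

The review budget, not the dataset size, caps human effort, which is what keeps cost from growing with volume.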

Quality box: garbage in, garbage out (GIGO) in ML

- Better labels lead to better models.

- Human feedback in ML training breaks error propagation and keeps datasets aligned with real-world meaning.

Here’s evidence that it works:

- Re-labeled ImageNet. When researchers replaced single labels with human-verified label sets, reported gains shrank and some model rankings changed. Cleaner labels produced a more faithful test of real performance.

- Benchmark audits. Systematic reviews show that small fractions of mislabeled examples can distort both evaluation and deployment decisions, reinforcing the need for a human in the loop on high-impact data.

Human-in-the-loop machine learning provides planned oversight that upgrades training data quality, reduces bias, and stabilizes model behavior where it counts.

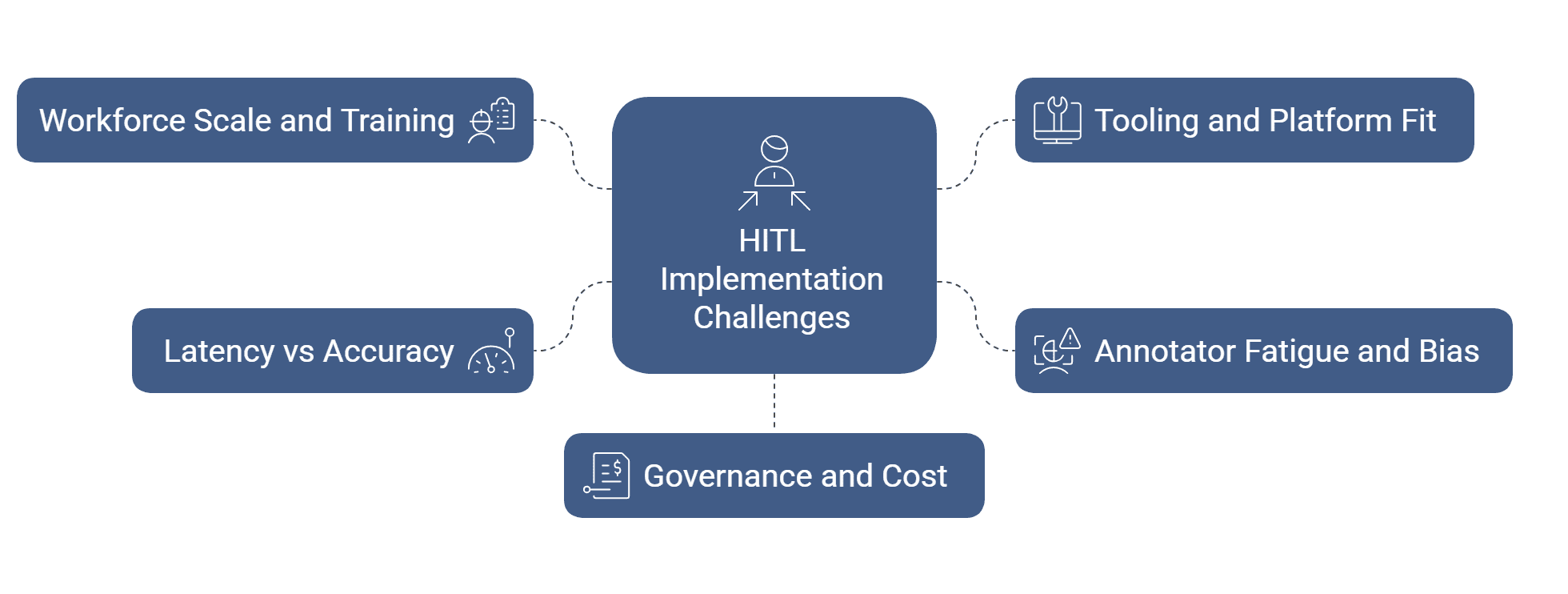

Challenges and considerations in implementing HITL

Implementing Human-in-the-Loop (HITL) comes with challenges such as scaling human involvement, ensuring consistent data labeling, managing costs, and integrating feedback efficiently. Organizations must balance automation with human oversight, address potential biases, and maintain data privacy, all while designing workflows that keep the ML pipeline both accurate and efficient.

- Workforce scale and training: You need enough trained annotators at the right time. Create clear guides, short training videos, and quick quizzes. Track agreement rates and give fast feedback so quality improves week by week.

- Tooling and platform fit: Check that your labeling tool works with your stack. Support for versioned schemas, audit trails, role-based access control (RBAC), and APIs keeps data moving. If you build custom tools, budget for operations, uptime, and user support.

- Annotator fatigue and bias: Long queues and repetitive items lower accuracy. Rotate tasks, cap session length, and mix easy examples with hard ones. Use blind review and conflict resolution to reduce personal bias and groupthink.

- Latency vs. accuracy in real time: Some use cases need instant results. Others can wait for review. Triage by risk. Route only high-risk or low-confidence items to humans. Cache decisions and reuse them to cut delay.

- Governance and cost: Human-in-the-loop machine learning needs clear ownership. Define acceptance criteria, escalation paths, and budget alerts. Measure label quality, throughput, and unit cost so leaders can trade speed for accuracy with eyes open.
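The workforce bullet above recommends tracking agreement rates. Raw percent agreement overstates quality when one class dominates, so a chance-corrected score is a common choice. The sketch below computes Cohen's kappa for two annotators who labeled the same items, using kappa = (p_o - p_e) / (1 - p_e), where p_o is observed agreement and p_e is the agreement expected by chance. The function name is illustrative; scikit-learn's `cohen_kappa_score` computes the same statistic if that library is already in your stack.

```python
from collections import Counter


# Hypothetical helper name; implements standard Cohen's kappa for two annotators.
def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # p_o: fraction of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # p_e: chance agreement, from each annotator's own label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label everywhere, so kappa is undefined;
        # this sketch reports 1.0 as a convention.
        return 1.0
    return (observed - expected) / (1 - expected)


annotator_1 = ["spam", "spam", "ham", "ham"]
annotator_2 = ["spam", "ham", "ham", "ham"]
print(cohens_kappa(annotator_1, annotator_2))  # 0.5
```

Here the annotators agree on 75 percent of items, but half of that is expected by chance, so kappa lands at 0.5, a more honest signal for the weekly quality review.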

How to design an effective human-in-the-loop system

Start with decisions, not tools.

List the points where judgment shapes outcomes. Write the rules for those moments, agree on quality targets, and fit human-in-the-loop machine learning into that path. Keep the loop simple to run and easy to measure.

Use the right types of data labeling

Use expert-only labeling for risky or rare classes. Add model-assist, where the system pre-fills labels and people confirm or edit them. For hard items, collect two or three opinions and let a senior reviewer decide. Bring in lightweight programmatic rules for obvious cases, but keep people in charge of edge cases.
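The multi-opinion step for hard items can be sketched as a small adjudication function. This version is deliberately conservative, which is an assumption rather than a rule from the text: unanimous opinions are accepted, and any disagreement goes to a senior reviewer, represented here by a hypothetical `senior_review` callback. A team could instead accept a simple majority and escalate only ties.

```python
from collections import Counter
from typing import Callable


# Hypothetical sketch: `adjudicate` and the `senior_review` callback are illustrative names.
def adjudicate(
    opinions: list[str],
    senior_review: Callable[[list[str]], str],
) -> tuple[str, str]:
    """Resolve one hard item from two or three independent annotator labels.

    Returns (final_label, how_it_was_decided).
    """
    if not opinions:
        raise ValueError("at least one opinion is required")
    label, votes = Counter(opinions).most_common(1)[0]
    if votes == len(opinions):
        return label, "consensus"
    # Disagreement: the senior reviewer sees every opinion and makes the final call.
    return senior_review(opinions), "adjudicated"


# Stub standing in for a human decision, only so the example runs.
def senior(opinions: list[str]) -> str:
    return "defect"


print(adjudicate(["ok", "ok"], senior))            # ('ok', 'consensus')
print(adjudicate(["ok", "defect", "ok"], senior))  # ('defect', 'adjudicated')
```

Recording how each label was decided makes it easy to measure later how often hard items actually needed a senior reviewer.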

Implementing HITL in your company

- Pick one high-value use case and run a short pilot.

- Write guidelines with clear examples and counter-examples.

- Set acceptance checks, escalation steps, and a service level for turnaround.

- Wire up active learning so low-confidence items reach reviewers first.

- Track agreement, latency, unit cost, and error themes.

- When the loop holds steady, expand to the next dataset using the same HITL architecture.
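For the tracking step, a per-batch summary is often enough during a pilot. The sketch assumes each review produces a record with a few fields; `ReviewRecord` and `pilot_summary` are hypothetical names, and "agreement" here means the reviewer kept the model's pre-filled label, which is only one of several agreement measures a team might choose.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import median
from typing import Optional


# Hypothetical record shape for one completed review.
@dataclass
class ReviewRecord:
    item_id: str
    accepted_prefill: bool  # reviewer kept the model's pre-filled label
    latency_s: float  # seconds from queueing to final decision
    cost: float  # labor cost attributed to this item
    error_theme: Optional[str] = None  # reviewer's tag for what went wrong, if anything


def pilot_summary(records: list[ReviewRecord]) -> dict:
    """Agreement, latency, unit cost, and top error themes for one batch of reviews."""
    if not records:
        raise ValueError("no review records")
    n = len(records)
    themes = Counter(r.error_theme for r in records if r.error_theme)
    return {
        "agreement_rate": sum(r.accepted_prefill for r in records) / n,
        "median_latency_s": median(r.latency_s for r in records),
        "unit_cost": sum(r.cost for r in records) / n,
        "top_error_themes": themes.most_common(3),
    }


batch = [
    ReviewRecord("a", True, 10.0, 0.5),
    ReviewRecord("b", False, 30.0, 1.5, "wrong class"),
    ReviewRecord("c", True, 20.0, 1.0),
]
print(pilot_summary(batch))
```

Median latency is used rather than the mean so a few stalled items do not hide an otherwise healthy turnaround time.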

Is a human-in-the-loop system scalable?

Yes, if you route by confidence and risk. Here’s how to make the system scalable:

- Auto-accept clear cases.

- Send medium-confidence cases to trained reviewers.

- Escalate only the few that are high impact or unclear.

- Use label templates, ontology checks, and periodic audits to keep labels consistent as volume grows.
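The three-tier routing above fits in one function. The thresholds (`accept_at=0.95`, `escalate_below=0.60`) are placeholder values, not recommendations; in practice they would be tuned per use case against audit results. The sketch also assumes a separate risk flag, so high-impact items are never auto-accepted, whatever the model's confidence.

```python
from enum import Enum


# Hypothetical sketch: tier names and default thresholds are illustrative placeholders.
class Route(Enum):
    AUTO_ACCEPT = "auto_accept"
    REVIEWER = "trained_reviewer"
    ESCALATE = "senior_escalation"


def route(confidence: float, high_risk: bool,
          accept_at: float = 0.95, escalate_below: float = 0.60) -> Route:
    """Pick a review tier from model confidence and a risk flag."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if high_risk or confidence < escalate_below:
        return Route.ESCALATE  # high impact or unclear: the few that need senior eyes
    if confidence >= accept_at:
        return Route.AUTO_ACCEPT  # clear case: accepted without human review
    return Route.REVIEWER  # medium case: a trained reviewer confirms or edits


for conf, risky in [(0.99, False), (0.80, False), (0.40, False), (0.99, True)]:
    print(conf, risky, route(conf, risky).value)
```

Checking risk before confidence is the key design choice: a confident model is not a reason to skip review on a high-impact decision.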

In the near term, better uncertainty scores should target reviews more precisely. Model-assist should speed up video and 3D labeling. Synthetic data can help cover rare events, but people will still need to screen it. RLHF (reinforcement learning from human feedback) is likely to extend beyond text to policy-heavy outputs in other domains.

For ethics and fairness checks, start by writing bias-aware rules. Sample by subgroup and review those slices on a schedule. Use diverse annotator pools and occasional blind reviews. Keep audit trails, privacy controls, and consent records tight.
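Sampling by subgroup amounts to a stratified draw: instead of sampling the whole dataset uniformly, where small groups can vanish from the audit set, take a fixed number of items from each slice. The sketch assumes each item carries a subgroup attribute reachable through a `group_of` function; the function name and the fixed seed (for reproducible audit batches) are illustrative choices.

```python
import random
from collections import defaultdict
from typing import Callable, Hashable, TypeVar

T = TypeVar("T")


# Hypothetical helper: draws an equal-sized audit sample from every subgroup.
def sample_by_subgroup(
    items: list[T],
    group_of: Callable[[T], Hashable],
    per_group: int,
    seed: int = 0,
) -> dict[Hashable, list[T]]:
    """Draw up to `per_group` items from every subgroup for a scheduled slice review."""
    rng = random.Random(seed)  # fixed seed makes each audit batch reproducible
    groups: dict[Hashable, list[T]] = defaultdict(list)
    for item in items:
        groups[group_of(item)].append(item)
    # Small slices get the same review budget as large ones, capped at their size.
    return {g: rng.sample(members, min(per_group, len(members))) for g, members in groups.items()}


records = [{"id": i, "region": "north" if i < 95 else "south"} for i in range(100)]
audit = sample_by_subgroup(records, lambda r: r["region"], per_group=10)
print({region: len(batch) for region, batch in audit.items()})  # {'north': 10, 'south': 5}
```

A uniform sample of 10 from these records would contain a "south" item only about 40 percent of the time; the stratified draw guarantees the small slice is always reviewed.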

These steps keep human-AI collaboration safe, traceable, and fit for real use.

Looking ahead: HITL in a future of autonomous AI

Models are getting better at checking and correcting themselves. They will still need guardrails. High-stakes calls, long-tail patterns, and shifting policies call for human judgment.

Human input will change shape: more prompt design and policy setting up front, more feedback curation and dataset governance, and ethical review as a scheduled practice, not an afterthought. In reinforcement learning from human feedback, reviewers will focus on disputed cases and safety boundaries while tools handle routine rankings.

HITL is not a fallback. It is a strategic partner in ML operations: it sets standards, tunes thresholds, and audits outcomes so systems stay aligned with real use.

Expect deeper integrations with labeling and MLOps tools, richer analytics for slice-level quality, and a workforce specialized by domain and task type. The aim is simple: keep automation fast, keep oversight sharp, and keep models useful as the world changes.

Conclusion

Human-in-the-loop is the foundation of trustworthy AI because it keeps judgment in the workflow where it matters. It turns raw data into reliable signals. With planned reviews, clear rules, and active learning, models learn faster and fail more safely.

Quality holds as you scale because people handle edge cases, bias checks, and policy shifts while automation does the routine work. That is how data becomes intelligence without trading quality for scale.

If you are choosing a partner, pick one that embeds HITL across data collection, annotation, QA, and monitoring. Ask for measurable targets, slice-level dashboards, and real escalation paths. That’s our model at HitechDigital. We build and run HITL loops end to end so your systems stay accurate, accountable, and ready for real use.