Delivering on the promise of real-time agentic automation requires a fast, reliable, and scalable data foundation. At UiPath, we needed a modern streaming architecture to underpin products like Maestro and Insights, enabling near real-time visibility into agentic automation metrics as they unfold. That journey led us to unify batch and streaming on Azure Databricks using Apache Spark™ Structured Streaming, enabling cost-efficient, low-latency analytics that support agentic decision-making across the enterprise.

This blog details the technical approach, trade-offs, and impact of these improvements.

With Databricks-based streaming, we have achieved sub-minute event-to-warehouse latency while delivering a simplified architecture and future-proof scalability, setting the new standard for event-driven data processing across UiPath.

Why Streaming Matters for UiPath Maestro and UiPath Insights

At UiPath, products like Maestro and Insights rely heavily on timely, reliable data. Maestro acts as the orchestration layer for our agentic automation platform, coordinating AI agents, robots, and humans based on real-time events. Whether it’s reacting to a system trigger, executing a long-running workflow, or including a human-in-the-loop step, Maestro depends on fast, accurate signal processing to make the right decisions.

UiPath Insights, which powers monitoring and analytics across these automations, adds another layer of demand: capturing key metrics and behavioral signals in near real time to surface trends, calculate ROI, and support issue detection.

Delivering these kinds of outcomes (reactive orchestration and real-time observability) requires a data pipeline architecture that’s not only low-latency, but also scalable, reliable, and maintainable. That need is what led us to rethink our streaming architecture on Azure Databricks.

Building the Streaming Data Foundation

Delivering on the promise of powerful analytics and real-time monitoring requires a foundation of scalable, reliable data pipelines. Over the past few years, we have developed and expanded multiple pipelines to support new product features and respond to evolving business requirements. Now, we have the opportunity to assess how we can optimize these pipelines to not only save costs, but also gain better scalability and an at-least-once delivery guarantee to support data from new services like Maestro.

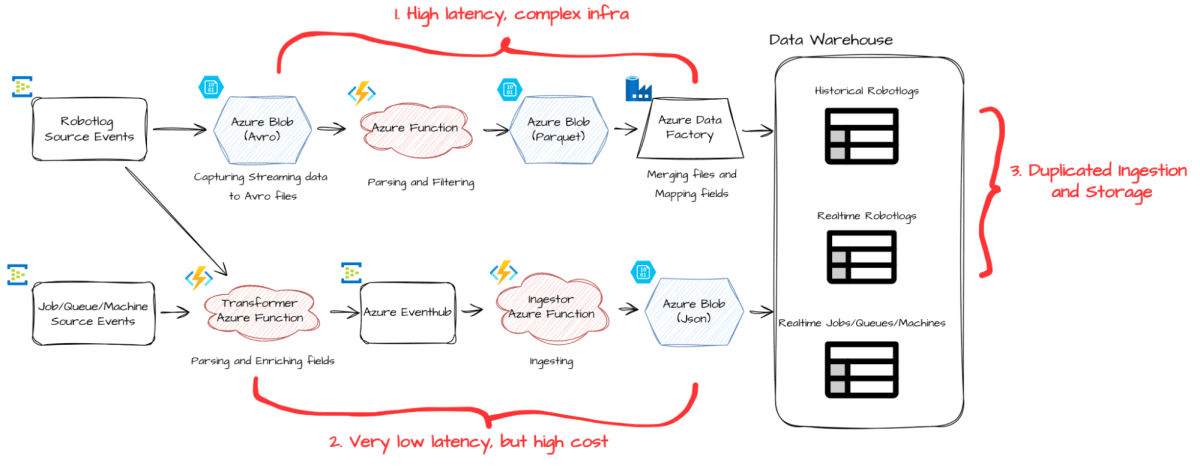

While our previous setup (shown above) worked well for our customers, it also revealed areas for improvement:

- The batching pipeline introduced up to 30 minutes of latency and relied on a complex infrastructure.

- The real-time pipeline delivered faster data but came with higher cost.

- For Robotlogs, our largest dataset, we maintained separate ingestion and storage paths for both historical and real-time processing, resulting in duplication and inefficiency.

- To support the new ETL pipeline for UiPath Maestro, a new UiPath product, we would need to achieve an at-least-once delivery guarantee.

To address these challenges, we undertook a major architectural overhaul. We merged the batching and real-time ingestion processes for Robotlogs into a single pipeline, and re-architected the real-time ingestion pipeline to be more cost-efficient and scalable.

Why Spark Structured Streaming on Databricks?

As we set out to simplify and modernize our pipeline architecture, we needed a framework that could handle both high-throughput batch workloads and low-latency real-time data without introducing operational overhead. Spark Structured Streaming (SSS) on Azure Databricks was a natural fit.

Built on top of Spark SQL and Spark Core, Structured Streaming treats real-time data as an unbounded table, allowing us to reuse familiar Spark batch constructs while gaining the benefits of a fault-tolerant, scalable streaming engine. This unified programming model reduced complexity and accelerated development.

We had already leveraged Spark Structured Streaming to develop our Real-time Alert feature, which uses stateful stream processing in Databricks. Now, we’re expanding its capabilities to build our next generation of real-time ingestion pipelines, enabling us to achieve low latency, scalability, cost efficiency, and at-least-once delivery guarantees.

The Next Generation of Real-time Ingestion

Our new architecture, shown below, dramatically simplifies the data ingestion process by consolidating previously separate components into a unified, scalable pipeline using Spark Structured Streaming on Databricks:

At the core of this new design is a set of streaming jobs that read directly from event sources. These jobs perform parsing, filtering, flattening, and, most critically, joining each event with reference data to enrich it before writing to our data warehouse.
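The parse, filter, flatten, and join steps can be illustrated with a minimal plain-Python sketch. This is not the production Spark job: the event shape, the `jobCompleted` type, the `tenantId` join key, and the `TENANTS` lookup are all hypothetical names chosen for illustration, and dropping unparseable payloads is an assumed policy. In a real Structured Streaming job the same steps would be expressed as DataFrame operations rather than a Python loop.

```python
import json

# Hypothetical reference data keyed by tenant id (an assumption for illustration).
TENANTS = {"t1": {"tenantName": "Acme", "region": "eu"}}


def flatten(obj, prefix=""):
    """Flatten nested dicts into a single level with dotted keys."""
    flat = {}
    for key, value in obj.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}."))
        else:
            flat[name] = value
    return flat


def enrich(raw_messages, reference):
    """Parse, filter, flatten, and join each event with reference data."""
    rows = []
    for raw in raw_messages:
        try:
            event = json.loads(raw)  # parse
        except json.JSONDecodeError:
            continue  # assumed policy: skip payloads that are not valid JSON
        if not isinstance(event, dict) or event.get("type") != "jobCompleted":
            continue  # filter: keep only the (hypothetical) event type of interest
        row = flatten(event)  # flatten nested fields into columns
        # Left join: keep the event even when no reference row matches.
        row.update(reference.get(row.get("tenantId"), {}))
        rows.append(row)
    return rows
```

Calling `enrich(messages, TENANTS)` on a list of JSON strings returns one flat dictionary per retained event, with the reference columns merged in where the join key matches.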

We orchestrate these jobs using Databricks Lakeflow Jobs, which helps manage retries and job recovery in case of transient failures. This streamlined setup improves both developer productivity and system reliability.

The benefits of this new architecture include:

- Cost efficiency: Saves COGS by reducing infrastructure complexity and compute usage

- Low latency: Ingestion latency averages around one minute, with the flexibility to reduce this further

- Future-proof scalability: Throughput is proportional to the number of cores, so we can scale out by adding cores

- No data lost: Spark does the heavy lifting of failure recovery, supporting at-least-once delivery.

- With downstream sink deduplication planned for future development, the pipeline will be able to achieve exactly-once delivery

- Fast development cycle thanks to the Spark DataFrame API

- Simple and unified architecture

Low-Latency

Our streaming job currently runs in micro-batch mode with a one-minute trigger interval. This means that from the moment an event is published to our Event Bus, it typically lands in our data warehouse in around 27 seconds at the median, with 95% of records arriving within 51 seconds and 99% within 72 seconds.

Structured Streaming provides configurable trigger settings, which can even bring the latency down to a few seconds. For now, we’ve chosen the one-minute trigger as the right balance between cost and performance, with the flexibility to lower it in the future if requirements change.

Scalability

Spark divides big data work into partitions, which fully utilize the Worker/Executor CPU cores. Each Structured Streaming job is split into stages, which are further divided into tasks, each of which runs on a single core. This level of parallelization allows us to fully utilize our Spark cluster and scale efficiently with growing data volumes.

Thanks to optimizations like in-memory processing, Catalyst query planning, whole-stage code generation, and vectorized execution, we processed around 40,000 events per second in scalability validation. If traffic increases, we can scale out simply by increasing partition counts on the source Event Bus and adding more worker nodes, ensuring future-proof scalability with minimal engineering effort.
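Why both partition counts and worker nodes need to grow together can be seen with a little arithmetic. The sketch below assumes one task per source partition and one core per task; the per-core rate of 2,500 events per second is an invented figure for illustration only, not a measured number from our validation.

```python
import math


def parallel_tasks(source_partitions: int, total_cores: int) -> int:
    """Tasks that can run at once, assuming one task per source partition
    and one core per task."""
    return min(source_partitions, total_cores)


def cores_needed(target_events_per_sec: float, events_per_sec_per_core: float) -> int:
    """Cores required to sustain a target rate at an assumed per-core rate."""
    return math.ceil(target_events_per_sec / events_per_sec_per_core)


# Hypothetical per-core rate; substitute a value measured on your own workload.
ASSUMED_RATE_PER_CORE = 2_500

cores = cores_needed(40_000, ASSUMED_RATE_PER_CORE)
# With only 8 source partitions, extra cores beyond 8 would sit idle.
usable = parallel_tasks(source_partitions=8, total_cores=cores)
```

Under these assumptions, adding worker nodes without also raising the partition count on the source leaves the extra cores unused, which is why the two are scaled together.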

Delivery Guarantee

Spark Structured Streaming provides exactly-once delivery by default, thanks to its checkpointing system. After each micro-batch, Spark persists the progress (or “epoch”) of each source partition as write-ahead logs and the job’s application state in the state store. In the event of a failure, the job resumes from the last checkpoint, ensuring no data is lost or skipped.

This is discussed in the original Spark Structured Streaming research paper, which states that achieving exactly-once delivery requires:

- The input source to be replayable

- The output sink to support idempotent writes

But there’s also an implicit third requirement that often goes unstated: the system must be able to detect and handle failures gracefully.

This is where Spark works well: its robust failure recovery mechanisms can detect job failures, executor crashes, and driver issues, and automatically take corrective actions such as retries or restarts.

Note that we’re currently operating with at-least-once delivery, as our output sink is not idempotent yet. If we have further requirements for exactly-once delivery in the future, we should be able to achieve it by putting additional engineering effort into idempotency.
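The difference between at-least-once and exactly-once delivery can be made concrete with a small plain-Python simulation. It is a toy model, not Spark's checkpointing implementation: `run_with_crash`, the batch size, the crash point, and the `id` key are all hypothetical. The job crashes once after writing a micro-batch but before committing its offset, so the replayable source delivers that batch again on restart.

```python
def run_with_crash(events, sink_write, batch_size=2, crash_after_batch=1):
    """Process events in micro-batches, crashing once after a batch is
    written but before its offset is committed, then replaying it."""
    committed = 0      # checkpointed offset: where the next batch starts
    batch_no = 0
    crashed = False
    while committed < len(events):
        batch = events[committed:committed + batch_size]
        for event in batch:
            sink_write(event)
        if not crashed and batch_no == crash_after_batch:
            crashed = True   # failure before commit: the offset is not advanced
            continue         # restart from the checkpoint, replaying the batch
        committed += len(batch)
        batch_no += 1


events = [{"id": i, "value": f"v{i}"} for i in range(5)]

# Non-idempotent sink: a plain append keeps every write, including replays.
append_sink = []
run_with_crash(events, append_sink.append)

# Idempotent sink: writes keyed by event id overwrite earlier copies.
keyed_sink = {}


def upsert(event):
    keyed_sink[event["id"]] = event


run_with_crash(events, upsert)

print(len(append_sink))  # 7: events 2 and 3 were written twice
print(len(keyed_sink))   # 5: replayed writes collapse onto the same keys
```

In both runs no event is lost, which is the at-least-once guarantee. Only the keyed sink ends up with each event exactly once, which is why sink idempotency is the missing piece for exactly-once delivery.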

Raw Data is Better

We have also made some other improvements. We now include and persist a common rawMessage field across all tables. This column stores the original event payload as a raw string. To borrow the sushi principle (although we mean a slightly different thing here): raw data is better.

Raw data significantly simplifies troubleshooting. When something goes wrong, like a missing field or unexpected value, we can directly refer to the original message and trace the issue, without chasing down logs or upstream systems. Without this raw payload, diagnosing data issues becomes much harder and slower.

The downside is a small increase in storage. But thanks to cheap cloud storage and the columnar format of our warehouse, this has minimal cost and no impact on query performance.
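As a rough illustration of the idea, the sketch below builds a row that always carries the original payload next to the parsed columns. Only the `rawMessage` name comes from our tables; `to_row`, `eventId`, and `status` are hypothetical and stand in for whatever columns a given table extracts.

```python
import json


def to_row(raw: str) -> dict:
    """Build a warehouse row that always carries the original payload."""
    row = {"rawMessage": raw, "eventId": None, "status": None}
    try:
        event = json.loads(raw)
    except json.JSONDecodeError:
        return row  # parsing failed, but the payload is still preserved
    if isinstance(event, dict):
        row["eventId"] = event.get("eventId")
        row["status"] = event.get("status")
    return row


# A producer that (hypothetically) renamed "status" to "state":
row = to_row('{"eventId": "e1", "state": "done"}')
```

Here `row["status"]` comes back empty, and `row["rawMessage"]` shows immediately that the producer sent `state` instead, without a trip to upstream logs.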

Simple and Powerful API

The new implementation takes us less development time. This is largely thanks to the DataFrame API in Spark, which provides a high-level, declarative abstraction over distributed data processing. In the past, using RDDs meant manually reasoning about execution plans, understanding DAGs, and optimizing the order of operations like joins and filters. DataFrames allow us to focus on the logic of what we want to compute, rather than how to compute it. This significantly simplifies the development process.

This has also improved operations. We no longer have to manually rerun failed jobs or trace errors across multiple pipeline components. With a simplified architecture and fewer moving parts, both development and debugging are significantly easier.

Driving Real-Time Analytics Across UiPath

The success of this new architecture has not gone unnoticed. It has quickly become the new standard for real-time event ingestion across UiPath. Beyond its initial implementation for UiPath Maestro and Insights, the pattern has been widely adopted by multiple new teams and projects for their real-time analytics needs, including those working on cutting-edge initiatives. This widespread adoption is a testament to the architecture’s scalability, efficiency, and extensibility, making it easy for new teams to onboard and enabling a new generation of products with powerful real-time analytics capabilities.

If you’re looking to scale your real-time analytics workloads without the operational burden, the architecture outlined here offers a proven path, powered by Databricks and Spark Structured Streaming and ready to support the next generation of AI and agentic systems.

About UiPath

UiPath (NYSE: PATH) is a global leader in agentic automation, empowering enterprises to harness the full potential of AI agents to autonomously execute and optimize complex business processes. The UiPath Platform™ uniquely combines controlled agency, developer flexibility, and seamless integration to help organizations scale agentic automation safely and confidently. Committed to security, governance, and interoperability, UiPath supports enterprises as they transition into a future where automation delivers on the full potential of AI to transform industries.