Introduction

Gender detection from facial images is one of the many fascinating applications of computer vision. In this project, we combine OpenCV for face detection and the Roboflow API for gender classification, creating a tool that detects faces, crops them, and predicts their gender. We write and run the code in Python, using Google Colab. This guide provides a clear and concise walkthrough of the code, explaining each step so that readers can easily understand it and adapt it to their own projects.

Learning Objectives

- Understand how to implement face detection using OpenCV’s Haar Cascade classifier.

- Learn how to integrate the Roboflow API for gender classification.

- Explore methods for processing and manipulating images in Python.

- Visualize detection results using Matplotlib.

- Develop practical skills in combining AI and computer vision for real-world applications.

How to Detect Gender Using OpenCV and Roboflow in Python

Let us walk through how to combine OpenCV and Roboflow in Python for gender detection:

Step 1: Importing Libraries and Uploading the Image

The first step is to import the necessary libraries. We use OpenCV for image processing, NumPy for array handling, and Matplotlib for visualizing the results. We also upload an image containing the faces we want to analyze.

    from google.colab import files
    import cv2
    import numpy as np
    from matplotlib import pyplot as plt
    from inference_sdk import InferenceHTTPClient

    # Upload the image
    uploaded = files.upload()

    # Get the path of the uploaded image
    for filename in uploaded.keys():
        img_path = filename

In Google Colab, the files.upload() function lets users upload files, such as images, from their local machine into the Colab environment. Once uploaded, the image is stored in a dictionary named uploaded, whose keys are the file names. A for loop then iterates over those keys to extract the file path. For the image processing itself, OpenCV detects faces and draws rectangles around them, while Matplotlib visualizes the results, including the annotated image and the cropped faces.

Step 2: Loading the Haar Cascade Model for Face Detection

Next, we load OpenCV’s Haar Cascade model, which is pre-trained to detect faces. The model scans the image for patterns that resemble human faces and returns their coordinates.

Here, the Haar Cascade classifier for frontal face detection is loaded from the default cascade file bundled with OpenCV. Haar cascades are a widely used method for object detection: they identify edges, textures, and patterns associated with the object, in this case faces. OpenCV provides a pre-trained face detection model, which is loaded using `CascadeClassifier`.

Step 3: Detecting Faces in the Image

We load the uploaded image and convert it to grayscale, which simplifies processing and helps improve detection accuracy. We then run the face detector to locate the faces in the image.

    # Load the image and convert it to grayscale
    img = cv2.imread(img_path)
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    # Detect faces in the grayscale image
    faces = face_cascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5, minSize=(30, 30))

- Use cv2.imread() to load the uploaded image.

- Convert the image to grayscale with cv2.cvtColor(img, cv2.COLOR_BGR2GRAY). Discarding color information simplifies the image and makes the following detection step cheaper.

- Use `detectMultiScale()` to find faces in the grayscale image.

- The function rescales the image and scans different regions for face patterns.

- The scaleFactor and minNeighbors parameters control the trade-off between detection sensitivity and accuracy: scaleFactor sets how much the image is shrunk at each scale step, and minNeighbors sets how many overlapping candidate detections are required before a face is kept.

Step 4: Setting Up the Gender Detection API

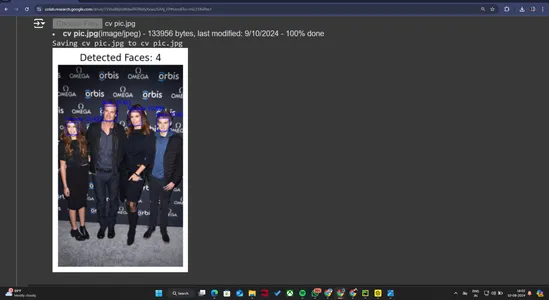

Now that the faces have been detected, we initialize the Roboflow API with InferenceHTTPClient so that we can predict the gender of each detected face.

    # Initialize InferenceHTTPClient for gender detection
    CLIENT = InferenceHTTPClient(
        api_url="https://detect.roboflow.com",
        api_key="USE_YOUR_API"
    )

InferenceHTTPClient simplifies interaction with Roboflow’s pre-trained models by configuring a client with the Roboflow API URL and an API key. This setup allows requests to be sent to the gender detection model hosted on Roboflow. The API key acts as a unique identifier that authenticates access to the Roboflow API.
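Because the API key authenticates your account, it is usually safer not to paste it into a shared notebook. One possible approach, sketched below, is to read it from an environment variable. The variable name `ROBOFLOW_API_KEY` and both helper functions are assumptions made for illustration; they are not part of the original code or of the Roboflow SDK.

```python
import os

def get_roboflow_api_key(env_var="ROBOFLOW_API_KEY"):
    """Read the API key from an environment variable (name is an assumption)."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your Roboflow API key"
        )
    return key

def make_client():
    """Build the same client as in the walkthrough, with the key from the environment."""
    # Imported here so the key helper works even without inference_sdk installed.
    from inference_sdk import InferenceHTTPClient
    return InferenceHTTPClient(
        api_url="https://detect.roboflow.com",
        api_key=get_roboflow_api_key(),
    )
```

With this in place, `CLIENT = make_client()` would replace the hard-coded initialization.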

Step 5: Processing Each Detected Face

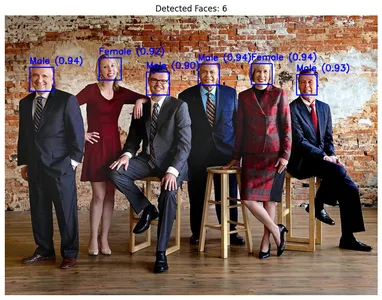

We loop through each detected face, draw a bounding box around it, and crop the face region for further processing. Each cropped face is temporarily saved to disk and sent to the Roboflow API, where a pre-trained gender detection model predicts the gender.

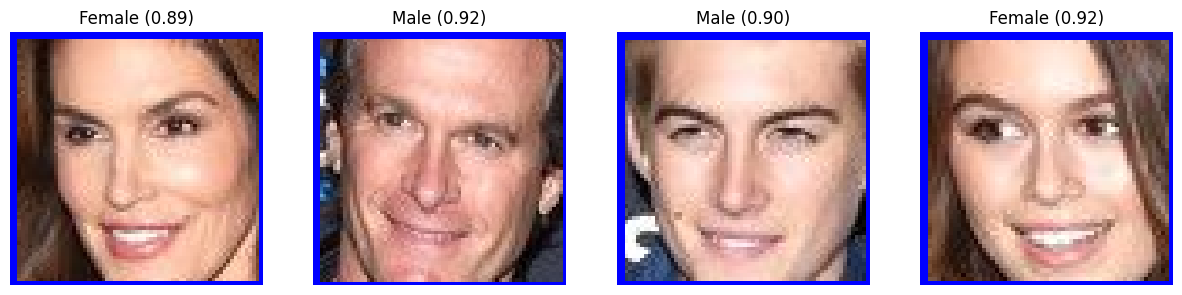

The gender-detection-qiyyg/2 model is a pre-trained deep learning model that classifies gender as male or female based on facial features. Trained on a robust dataset, it is designed to classify faces across a wide range of photographs. For each detected face, the API returns the predicted gender together with a confidence score that indicates how certain the model is about the classification.

    # Initialize the face counter and the list of cropped faces
    face_count = 0
    cropped_faces = []

    for (x, y, w, h) in faces:
        face_count += 1

        # Draw a bounding box around the face
        cv2.rectangle(img, (x, y), (x + w, y + h), (255, 0, 0), 2)

        # Crop the face region and save it temporarily
        face_img = img[y:y+h, x:x+w]
        face_img_path = "temp_face.jpg"
        cv2.imwrite(face_img_path, face_img)

        # Send the cropped face to the Roboflow gender detection model
        result = CLIENT.infer(face_img_path, model_id="gender-detection-qiyyg/2")

        if 'predictions' in result and result['predictions']:
            prediction = result['predictions'][0]
            gender = prediction['class']
            confidence = prediction['confidence']

            # Write the predicted gender and confidence above the face
            label = f"{gender} ({confidence:.2f})"
            cv2.putText(img, label, (x, y - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.8, (255, 0, 0), 2)
            cropped_faces.append((face_img, label))

For each detected face, the code draws a bounding box with cv2.rectangle() to highlight the face in the image. It then crops the face region using array slicing (face_img = img[y:y+h, x:x+w]) to isolate it for further processing. After temporarily saving the cropped face, the code sends the file to the Roboflow model via CLIENT.infer(), which returns the predicted gender along with its confidence score. Finally, cv2.putText() writes these results as a text label above each face, giving a clear and informative overlay.
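The label-building logic can also be pulled out into a small function that is easy to test without calling the API. The sketch below assumes only the response fields the loop already reads (`predictions`, `class`, `confidence`). Note that it picks the most confident prediction instead of the first list entry, which is a deliberate variation on the original code, and that the function name and sample response are hypothetical.

```python
def label_from_result(result, min_confidence=0.0):
    """Turn an inference response into a 'class (confidence)' label, or None."""
    predictions = result.get("predictions") or []
    if not predictions:
        return None
    # Pick the most confident prediction rather than relying on list order.
    best = max(predictions, key=lambda p: p["confidence"])
    if best["confidence"] < min_confidence:
        return None
    return f"{best['class']} ({best['confidence']:.2f})"

# Hypothetical response, shaped like the fields the walkthrough reads.
sample = {
    "predictions": [
        {"class": "female", "confidence": 0.91},
        {"class": "male", "confidence": 0.07},
    ]
}
print(label_from_result(sample))                # female (0.91)
print(label_from_result({"predictions": []}))   # None
```

Passing a `min_confidence` such as 0.6 would skip labelling faces the model is unsure about.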

Step 6: Displaying the Results

Finally, we visualize the output. We first convert the image from BGR, which is OpenCV’s default channel order, to RGB, and then display the detected faces together with their gender predictions. After that, we show the individual cropped faces with their respective labels.

    # Convert the image from BGR to RGB and display it
    plt.figure(figsize=(10, 10))
    plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
    plt.axis('off')
    plt.title(f"Detected Faces: {face_count}")
    plt.show()

    # Display each cropped face with its label, side by side
    fig, axs = plt.subplots(1, len(cropped_faces), figsize=(15, 5))
    for i, (face_img, label) in enumerate(cropped_faces):
        axs[i].imshow(cv2.cvtColor(face_img, cv2.COLOR_BGR2RGB))
        axs[i].axis('off')
        axs[i].set_title(label)
    plt.show()

- OpenCV stores images in BGR order, so we use cv2.cvtColor() to convert them to RGB for correct color display in Matplotlib.

- Using Matplotlib, we display the image with the detected faces and the gender labels drawn on top of them.

- We also show each cropped face with its predicted gender label in a separate subplot.
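One detail to watch in the subplot step: when only one face is found, `plt.subplots(1, 1)` returns a single Axes object instead of an array, so indexing it with `axs[i]` fails, and with zero faces the call itself raises. A possible way to handle both cases is sketched below. The function name is hypothetical, and the channel reversal with NumPy slicing stands in for `cv2.cvtColor(..., cv2.COLOR_BGR2RGB)` on 3-channel images.

```python
import numpy as np
from matplotlib import pyplot as plt

def plot_cropped_faces(cropped_faces):
    """Plot (BGR image, label) pairs side by side; return the figure or None."""
    if not cropped_faces:
        return None  # plt.subplots(1, 0) would raise, so skip plotting
    # squeeze=False keeps `axs` two-dimensional even when there is one face.
    fig, axs = plt.subplots(1, len(cropped_faces), figsize=(15, 5), squeeze=False)
    for ax, (face_img, label) in zip(axs[0], cropped_faces):
        ax.imshow(face_img[:, :, ::-1])  # reverse channel order: BGR -> RGB
        ax.axis("off")
        ax.set_title(label)
    return fig

# Synthetic stand-in for one cropped face (a solid blue patch in BGR order).
fake_face = np.zeros((40, 40, 3), dtype=np.uint8)
fake_face[:, :, 0] = 255
fig = plot_cropped_faces([(fake_face, "female (0.91)")])
```

In the notebook, calling `plot_cropped_faces(cropped_faces)` followed by `plt.show()` would take the place of the second plotting block.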

Original Data

Output Result Data

Conclusion

In this guide, we developed a gender detection system using OpenCV and Roboflow in Python. By combining OpenCV’s face detection with Roboflow’s hosted gender classification model, we built a system that identifies faces in an image and classifies the gender of each one. Matplotlib complemented the project by providing clear and informative visualizations of the results. The project shows how these technologies can be combined into a practical solution for gender detection tasks in real-world applications.

Key Takeaways

- The project demonstrates an effective approach to detecting and classifying gender from images using a pre-trained model. In the demonstration, the model classifies gender with high confidence.

- The project combines Roboflow for AI inference, OpenCV for image processing, and Matplotlib for visualization to achieve its objectives.

- The system can detect and classify the gender of multiple individuals in a single image, which makes it suitable for a range of applications.

- Using a pre-trained model gives high-accuracy predictions, as indicated by the confidence scores reported in the results. Accuracy of this kind is essential for reliable gender classification applications.

- The project uses visualization techniques to annotate images with the detected faces and predicted genders, which makes the results easier to interpret and more useful for further analysis.

Frequently Asked Questions

A. The goal of this project is to detect and classify gender from images using AI. It uses pre-trained models to identify faces in an image and classify each individual’s gender.

A. The project uses Roboflow’s gender detection model for AI inference, OpenCV for image processing, and Matplotlib for visualization. Python is used for scripting and data handling.

A. The system analyzes an image to detect faces and then classifies each detected face as male or female using the pre-trained model. It outputs a confidence score for each prediction.

A. The model shows high accuracy with reliable confidence scores. In our results, the confidence scores were consistently above 80%, indicating strong performance.