Google has just launched a new agent-based browser-control model from Google DeepMind, powered by Gemini 2.5 Pro. Built on the Gemini API, it can “see” and interact with web and app interfaces: clicking, typing, and scrolling just like a human. This new AI web automation model bridges the gap between understanding and action. In this article, we’ll explore the key features of Gemini Computer Use, its capabilities, and how to integrate it into your agentic AI workflows.

What is Gemini 2.5 Computer Use?

Gemini 2.5 Computer Use is an AI assistant that can control a browser using natural language. You describe a goal, and it performs the steps needed to complete it. Built on the new computer_use tool in the Gemini API, it analyzes screenshots of a webpage or app, then generates actions like “click,” “type,” or “scroll.” A client such as Playwright executes these actions and returns the next screen until the task is done.

The model interprets buttons, text fields, and other interface elements to decide how to act. Because it is built on Gemini 2.5 Pro, it inherits strong visual reasoning, enabling it to complete complex on-screen tasks with minimal human input. Currently, it is focused on browser environments and does not control desktop apps outside the browser.

Key Capabilities

- Automate data entry and form filling on websites. The agent can find fields, enter text, and submit forms where appropriate.

- Test web applications and user flows by clicking through pages, triggering events, and checking that elements display correctly.

- Conduct research across multiple websites. For example, it can collect information about products, pricing, or reviews across several e-commerce pages and summarize the results.

How to Access Gemini 2.5 Computer Use?

The experimental capabilities of Gemini 2.5 Computer Use are now publicly available for any developer to try. Developers just need to sign up for the Gemini API via AI Studio or Vertex AI, and then request access to the computer-use model. Google provides documentation, runnable code samples, and a reference implementation that you can try out. For example, the Gemini API docs present the four-step agent loop, complete with Python examples using the Google GenAI SDK and Playwright.

You’ll also need a browser automation environment, such as Playwright. To get started, follow these steps:

- Sign up for the Gemini API through AI Studio or Vertex AI.

- Request access to the computer-use model.

- Review Google’s documentation, runnable code samples, and reference implementations.

Google’s sample agent loop, for instance, uses Python with the GenAI SDK for model calls and Playwright to automate the browser.


Setting Up the Environment

Here is a general example of what the setup looks like in code:

Initial Configuration and Gemini Client

```python
# Load environment variables
from dotenv import load_dotenv
load_dotenv()

# Initialize Gemini API client
from google import genai
client = genai.Client()

# Set screen dimensions (recommended by Google)
SCREEN_WIDTH = 1440
SCREEN_HEIGHT = 900
```

We begin by loading the environment variables that hold the API credentials and initializing the Gemini client. The screen dimensions recommended by Google are defined here and later used to convert normalized coordinates into the actual pixel values needed for UI actions.
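Because `genai.Client()` is called without arguments, the credentials have to come from the environment. The following is a minimal sketch of a pre-flight check, assuming the key is stored under `GEMINI_API_KEY` or `GOOGLE_API_KEY` (the variable names the Google GenAI SDK is assumed to read). `find_api_key_var` is a hypothetical helper for illustration, not part of the SDK:

```python
import os

# Environment variable names the Google GenAI SDK is assumed to read.
API_KEY_VARS = ("GEMINI_API_KEY", "GOOGLE_API_KEY")


def find_api_key_var(env=None):
    """Return the name of the first API-key variable that is set and non-empty, else None."""
    env = os.environ if env is None else env
    for name in API_KEY_VARS:
        if env.get(name, "").strip():
            return name
    return None


if __name__ == "__main__":
    found = find_api_key_var()
    if found is None:
        print("No API key found; add one to your .env file before creating the client.")
    else:
        print(f"Using credentials from {found}")
```

Running a check like this right after `load_dotenv()` surfaces a missing key before any browser is launched.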

Browser Automation with Playwright

Next, the code sets up Playwright for browser automation:

```python
from playwright.sync_api import sync_playwright

playwright = sync_playwright().start()
browser = playwright.chromium.launch(
    headless=False,
    args=[
        '--disable-blink-features=AutomationControlled',
        '--disable-dev-shm-usage',
        '--no-sandbox',
    ]
)
context = browser.new_context(
    viewport={"width": SCREEN_WIDTH, "height": SCREEN_HEIGHT},
    user_agent="Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 Chrome/131.0.0.0 Safari/537.36",
)
page = context.new_page()
```

Here we launch a Chromium browser with flags intended to keep webpages from recognizing automation. We then define a realistic viewport and user agent to emulate a normal user, and create a new page to navigate and automate.

Defining the Task and Model Configuration

Once the browser is set up and ready to go, the model is given the user’s goal and the initial screenshot:

```python
from google.genai.types import Content, Part

USER_PROMPT = "Go to BBC News and find today's top technology headlines"

initial_screenshot = page.screenshot(type="png")

contents = [
    Content(role="user", parts=[
        Part(text=USER_PROMPT),
        Part.from_bytes(data=initial_screenshot, mime_type="image/png")
    ])
]
```

USER_PROMPT defines the natural-language task the agent will undertake. The code captures an initial screenshot of the browser page, which is sent to the model together with the prompt. Both are wrapped in a Content object that is later passed to the Gemini model.

Finally, the model is configured with the computer_use tool. The agent loop then sends the current state to the model and executes the actions it returns:

```python
from google.genai import types

config = types.GenerateContentConfig(
    tools=[types.Tool(
        computer_use=types.ComputerUse(
            environment=types.Environment.ENVIRONMENT_BROWSER
        )
    )],
    thinking_config=types.ThinkingConfig(include_thoughts=True),
)
```

The computer_use tool prompts the model to produce function calls, which are then executed in the browser environment. Setting include_thoughts=True in thinking_config asks the model to return its intermediate reasoning, which gives the user transparency and can help with later debugging or understanding the agent’s decision-making process.

How It Works


Gemini 2.5 Computer Use runs as a closed-loop agent. You give it a goal and a screenshot, it predicts the next action, the client executes it, and the model then reviews the updated screen to decide what to do next. This feedback loop lets Gemini see, reason, and act much like a human navigating a browser. The entire process is powered by the computer_use tool in the Gemini API.

The Core Feedback Loop

The agent operates in a continuous cycle until the task is complete:

- Input goal and screenshot: The model receives the user’s instruction (e.g., “find top tech headlines”) and a screenshot of the current state of the browser.

- Generate actions: The model proposes one or more function calls that correspond to UI actions using the computer_use tool.

- Execute actions: The client program carries out these function calls in the browser.

- Capture feedback: A new screenshot and URL are captured and sent back to the model.

What the Model Sees in Each Iteration

With every iteration, the model receives three key pieces of information:

- User request: The natural-language goal or instruction (example: “find the top news headlines”)

- Current screenshot: An image of your browser or app window in its current state

- Action history: A record of the actions taken so far (for context)

The model analyzes the screenshot and the user’s goal, then generates one or more function calls, each representing a UI action. For example:

```json
{"name": "click_at", "args": {"x": 400, "y": 600}}
```

This would instruct the agent to click at those coordinates.

Executing Actions in the Browser

The client program (using Playwright’s mouse and keyboard APIs) executes these actions in the browser. Here’s an example of how function calls are executed:

```python
def execute_function_calls(candidate, page, screen_width, screen_height):
    for part in candidate.content.parts:
        if part.function_call:
            fname = part.function_call.name
            args = part.function_call.args

            if fname == "click_at":
                # Convert normalized (0-1000) coordinates to pixels
                actual_x = int(args["x"] / 1000 * screen_width)
                actual_y = int(args["y"] / 1000 * screen_height)
                page.mouse.click(actual_x, actual_y)

            elif fname == "type_text_at":
                actual_x = int(args["x"] / 1000 * screen_width)
                actual_y = int(args["y"] / 1000 * screen_height)
                # Focus the target field first, then type into it
                page.mouse.click(actual_x, actual_y)
                page.keyboard.type(args["text"])

            # ...other supported actions...
```

The function parses the FunctionCall entries returned by the model and executes each action in the browser. It converts normalized coordinates (0–1000) into actual pixel values based on the screen size.
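To make the coordinate conversion concrete, here is a minimal sketch that applies it to the `click_at` example from earlier on the 1440×900 viewport. The `denormalize` helper is hypothetical; it uses integer arithmetic and clamps to the viewport, which is a design choice of this sketch rather than something the sample code does:

```python
def denormalize(value, size, scale=1000):
    """Map a normalized coordinate (0..scale) to a pixel index in [0, size - 1]."""
    if not 0 <= value <= scale:
        raise ValueError(f"normalized coordinate {value} is outside 0..{scale}")
    pixel = int(value) * size // scale  # integer arithmetic avoids float rounding
    return min(pixel, size - 1)         # keep the far edge inside the viewport


SCREEN_WIDTH, SCREEN_HEIGHT = 1440, 900

# The click_at example: x=400, y=600 on the normalized grid.
x = denormalize(400, SCREEN_WIDTH)
y = denormalize(600, SCREEN_HEIGHT)
print(x, y)  # 576 540
```

Clamping matters at the edges: a normalized value of 1000 would otherwise map to pixel 1440, one past the last column of a 1440-pixel-wide viewport.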

Capturing Feedback for the Next Cycle

After executing actions, the system captures the new state and sends it back to the model:

```python
from google.genai import types


def get_function_responses(page, results):
    # Capture the state of the browser after the actions have run
    screenshot_bytes = page.screenshot(type="png")
    current_url = page.url

    function_responses = []
    for name, result, extra_fields in results:
        response_data = {"url": current_url}
        response_data.update(result)
        response_data.update(extra_fields)

        function_responses.append(
            types.FunctionResponse(
                name=name,
                response=response_data,
                parts=[types.FunctionResponsePart(
                    inline_data=types.FunctionResponseBlob(
                        mime_type="image/png",
                        data=screenshot_bytes
                    )
                )]
            )
        )
    return function_responses
```

The new state of the browser is wrapped in FunctionResponse objects, which the model uses to reason about what to do next. The loop continues until the model no longer returns any function calls, which signals that the task is complete.


Agent Loop Steps

After loading the computer_use tool, a typical agent loop follows these steps:

- Send a request to the model: Include the user’s goal and a screenshot of the current browser state in the API call.

- Receive the model response: The model returns a response containing text and/or one or more FunctionCall entries.

- Execute the actions: The client code parses each function call and performs the action in the browser.

- Capture and send feedback: After executing, the client takes a new screenshot and notes the current URL. It wraps these in a FunctionResponse and sends them back to the model as the next user message. This tells the model the result of its action so it can plan the next step.

This process runs automatically in a loop. When the model stops producing new function calls, it signals that the task is complete. At that point, it returns any final text output, such as a summary of what it accomplished. Typically, the agent goes through several cycles before either completing the goal or reaching the configured turn limit.
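The control flow of these steps, including the turn limit, can be sketched without a browser or an API key. In this minimal sketch, `run_agent_loop` and the `fake_*` stand-ins are hypothetical names for illustration; a real client would call the Gemini API in `ask_model` and Playwright in `execute_action` and `capture_state`:

```python
def run_agent_loop(ask_model, execute_action, capture_state, goal, max_turns=10):
    """Drive the observe -> decide -> act cycle until the model stops calling functions.

    ask_model(history) -> (text, calls), where calls is a list of {"name", "args"} dicts.
    execute_action(call) -> result dict for one call.
    capture_state() -> dict describing the current state (e.g. screenshot and URL).
    """
    history = [{"role": "user", "goal": goal, "state": capture_state()}]
    for turn in range(1, max_turns + 1):
        text, calls = ask_model(history)
        history.append({"role": "model", "text": text, "calls": calls})
        if not calls:  # no function calls means the model considers the task done
            return {"done": True, "turns": turn, "final_text": text}
        results = [execute_action(call) for call in calls]
        history.append({"role": "user", "results": results, "state": capture_state()})
    return {"done": False, "turns": max_turns, "final_text": None}


# Stand-ins so the sketch runs on its own.
scripted = iter([
    ("", [{"name": "navigate", "args": {"url": "https://example.com"}}]),
    ("", [{"name": "click_at", "args": {"x": 400, "y": 600}}]),
    ("Found the headlines.", []),
])
executed = []


def fake_model(history):
    return next(scripted)


def fake_execute(call):
    executed.append(call["name"])
    return {"ok": True}


def fake_state():
    return {"url": "about:blank", "screenshot": b""}


outcome = run_agent_loop(fake_model, fake_execute, fake_state, "find top tech headlines")
print(outcome)  # {'done': True, 'turns': 3, 'final_text': 'Found the headlines.'}
```

Returning `done: False` when the turn limit is hit lets the caller distinguish a finished task from one that was cut off.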

More Supported Actions

Gemini’s Computer Use tool comes with a set of built-in UI actions. The basic set covers actions that are typical for web-based applications, including:

- open_web_browser: Initializes the browser before the agent loop starts (typically handled by the client).

- click_at: Clicks on a specific (x, y) coordinate on the page.

- type_text_at: Clicks at a point and types a given string, optionally pressing Enter.

- navigate: Opens a new URL in the browser.

- go_back / go_forward: Moves backward or forward in the browser history.

- hover_at: Moves the mouse to a specific point to trigger hover effects.

- scroll_document / scroll_at: Scrolls the entire page or a specific section.

- key_combination: Simulates pressing keyboard shortcuts.

- wait_5_seconds: Pauses execution, useful for waiting on animations or page loads.

- drag_and_drop: Clicks and drags an element to another location on the page.

Google’s documentation mentions that the sample implementation includes the three most common actions: open_web_browser, click_at, and type_text_at. You can extend this by adding any other actions you need or excluding ones that aren’t relevant to your workflow.
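One way to add or exclude actions on the client side is a small dispatch table. The sketch below is an illustration, not Google’s reference implementation: the registry, the `dispatch` helper, and the `RecordingPage` test double are hypothetical, and the `url` argument name for `navigate` is an assumption. The handlers call Playwright’s `page.goto`, `page.go_back`, and `page.wait_for_timeout`:

```python
ACTION_HANDLERS = {}


def action(name):
    """Register a handler for one Computer Use action name."""
    def register(func):
        ACTION_HANDLERS[name] = func
        return func
    return register


@action("navigate")
def navigate(page, args):
    page.goto(args["url"])  # assumes the model supplies a "url" argument


@action("go_back")
def go_back(page, args):
    page.go_back()


@action("wait_5_seconds")
def wait_5_seconds(page, args):
    page.wait_for_timeout(5000)  # Playwright expects milliseconds


def dispatch(page, name, args, excluded=()):
    """Run one action; report unknown or excluded names instead of raising."""
    if name in excluded or name not in ACTION_HANDLERS:
        return {"error": f"unsupported action: {name}"}
    ACTION_HANDLERS[name](page, args)
    return {}


class RecordingPage:
    """Test double that records calls instead of driving a real browser."""

    def __init__(self):
        self.calls = []

    def goto(self, url):
        self.calls.append(("goto", url))

    def go_back(self):
        self.calls.append(("go_back",))

    def wait_for_timeout(self, ms):
        self.calls.append(("wait_for_timeout", ms))


page = RecordingPage()
dispatch(page, "navigate", {"url": "https://example.com"})
dispatch(page, "go_back", {})
print(page.calls)
print(dispatch(page, "drag_and_drop", {}))
```

Returning an error dictionary for unsupported actions, rather than raising, lets the client pass the failure back to the model in the next function response so it can try a different action.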

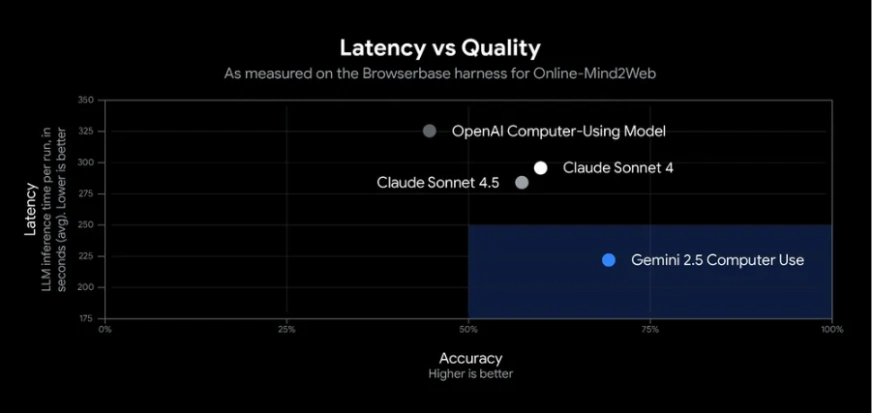

Performance and Benchmarks

Gemini 2.5 Computer Use performs strongly in UI control tasks. In Google’s tests, it achieved over 70% accuracy with a latency of around 225 seconds, outperforming other models on web and mobile control benchmarks that involve browsing sites and completing app workflows.

In practice, the agent can reliably handle tasks like filling forms and retrieving data. Independent benchmarks rank it as the most accurate and fastest public AI model for simple browser automation. Its strong performance comes from Gemini 2.5 Pro’s visual reasoning and an optimized API pipeline. Since it is still in preview, you should monitor its actions, as occasional errors may occur.


Conclusion

Gemini 2.5 Computer Use is a significant development in AI-supported automation, allowing agents to interact effectively and efficiently with real interfaces. With it, developers can automate tasks like web browsing, data entry, or data extraction with high accuracy and speed.

In its publicly available preview, it offers developers a way to safely experiment with the capabilities of Gemini 2.5 Computer Use and adapt it to their own workflows. Overall, it provides a flexible framework for building next-generation smart assistants or powerful automation workflows across a variety of uses and domains.
