A remarkably common situation in large, established enterprises is that there
are systems nobody wants to touch but everybody depends on. They run payroll,
handle logistics, reconcile inventory, or process customer orders. They have
been in place and evolving slowly for decades, built on stacks no one teaches
anymore, and maintained by a shrinking pool of experts. It is hard to find a
person (or a team) who can confidently say that they know the system well and
are ready to provide the functional specs. This leads to a very long analysis
cycle, and many programs are long delayed or stopped midway because of
analysis paralysis.

These systems often live inside frozen environments: outdated databases,
legacy operating systems, brittle VMs. Documentation is either missing or
hopelessly out of sync with reality. The people who wrote the code have long
since moved on. Yet the business logic they embody is still critical to the
daily operations of thousands of users. The result is what we call a black
box: a system whose outputs we can observe, but whose inner workings remain
opaque. For CXOs and technology leaders, these black boxes create a
modernization deadlock:

- Too risky to replace without fully understanding them
- Too costly to maintain on life support
- Too critical to ignore

This is where AI-assisted reverse engineering becomes not just a technical
curiosity, but a strategic enabler. By reconstructing the functional intent of
a system, even when its source code is missing, we can turn fear and opacity
into clarity. And with clarity comes the confidence to modernize.

The System we Encountered

The system itself was vast in both scale and complexity. Its databases, spread
across multiple platforms, contained more than 650 tables and 1,200 stored
procedures, reflecting decades of evolving business rules. Functionality
extended across 24 business domains and was presented through nearly 350 user
screens. Behind the scenes, the application tier consisted of 45 compiled
DLLs, each with thousands of functions and virtually no surviving
documentation. This intricate mesh of data, logic, and user workflows, tightly
integrated with multiple enterprise systems and databases, made the
application extremely challenging to modernize.

Our task was to carry out an experiment to see whether we could use AI to
create a functional specification of the existing system with sufficient
detail to drive the implementation of a replacement system. We completed the
experiment phase for an end-to-end thin slice with reverse and forward
engineering. Our confidence in the result is high because we did multiple
levels of cross-checking and verification. We walked through the
reverse-engineered functional spec with sys-admins and users to confirm the
intended functionality, and also verified that the spec we generated is
sufficient for forward engineering.

The client issued an RFP for this work, which we estimated would take 6 months
for a team peaking at 20 people. Sadly for us, they decided to work with one
of their existing preferred partners, so we won't be able to see how our
experiment scales to the full system in practice. We do, however, think we
learned enough from the exercise to be worth sharing with our professional
colleagues.

Key Challenges

- Missing Source Code: understanding a legacy system is already complex when
  you have source code and an SME (in some form) to put everything together.
  When the source code is missing and there are no experts, it is an even
  greater challenge. What is left are some compiled binaries. These are not
  the recent binaries that are easy to decompile thanks to rich metadata (like
  .NET assemblies or JARs); these are even older binaries, the kind you might
  see in old Windows XP under C:\Windows\system32. Even when the database is
  accessible, it does not tell the whole story. Stored procedures and triggers
  encode decades of accumulated business rules. The schema reflects
  compromises made in a context that is now unknown.
- Outdated Infrastructure: the OS and DB had reached end of life, long past
  their LTS. The application had been kept in a frozen state as a VM, which
  creates significant risk not only to business continuity but also in the
  form of increased security vulnerability, non-compliance, and liability.
- Institutional Knowledge Lost: while thousands of end users use the system
  continuously, hardly any business knowledge is available beyond occasional
  support activities. The live system is the best source of knowledge. The
  only reliable view of functionality is what users see on screen. But the UI
  captures only the "last mile" of execution. Behind each screen lies a
  tangled web of logic deeply integrated with multiple other core systems.
  This is a common challenge, and this system was no exception, having a
  history of multiple failed attempts to modernize.

Our Aim

The objective is to create a rich, comprehensive functional specification of
the legacy system without needing its original code, but with high
confidence. This specification then serves as the blueprint for building a
modern replacement application from a clean slate.

- Understand the overall picture of the system boundary and the integration
  patterns
- Build a detailed understanding of each functional area
- Identify the common and exceptional scenarios

To make sense of a black-box system, we needed a structured way to pull
together fragments from different sources. Our principle was simple: don't try
to recover the code; reconstruct the functional intent.

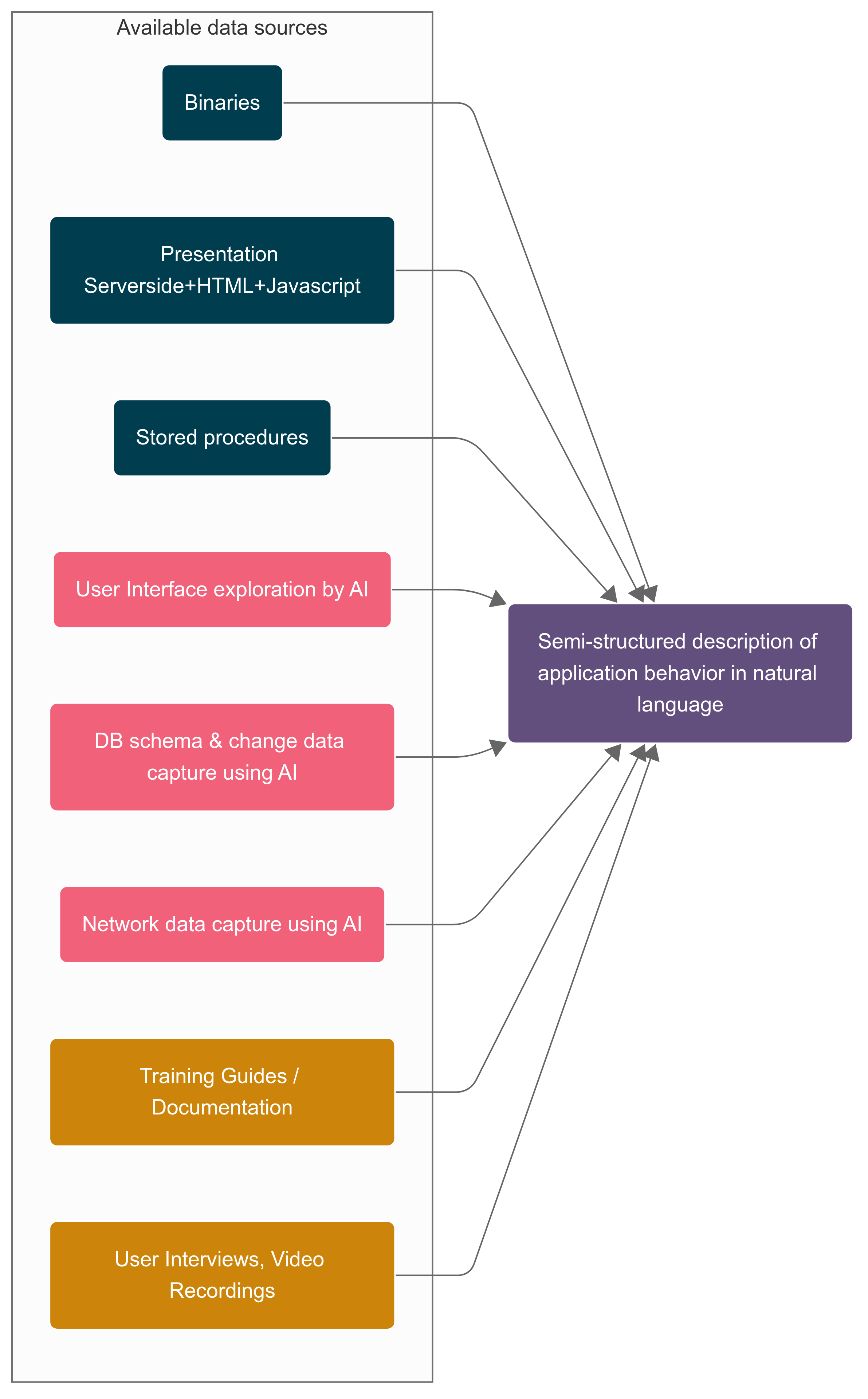

Our Multi Lens Strategy

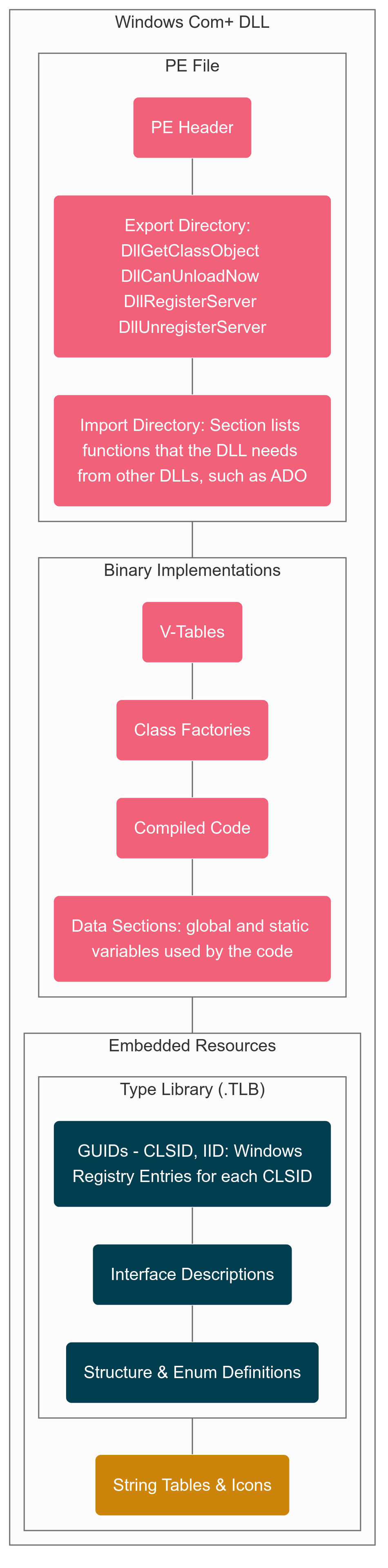

It was a three-tier architecture: Web Tier (ASP), App Tier (DLL), and
Persistence (SQL). This architecture pattern gave us a jump start even without
a source repo. We extracted the ASP files, the DB schema, and the stored
procedures from the production system. For the App Tier we only had the native
binaries. With all this information available, we planned to create a
semi-structured description of application behavior in natural language for
the business users to validate against their understanding and expectations,
and then to use the validated functional spec for accelerated forward
engineering. For the semi-structured description, our approach had broadly two
parts:

- Using AI to connect dots across different data sources
- AI-assisted binary archaeology to uncover the hidden functionality in the
  native DLL files

Connecting dots across different data sources

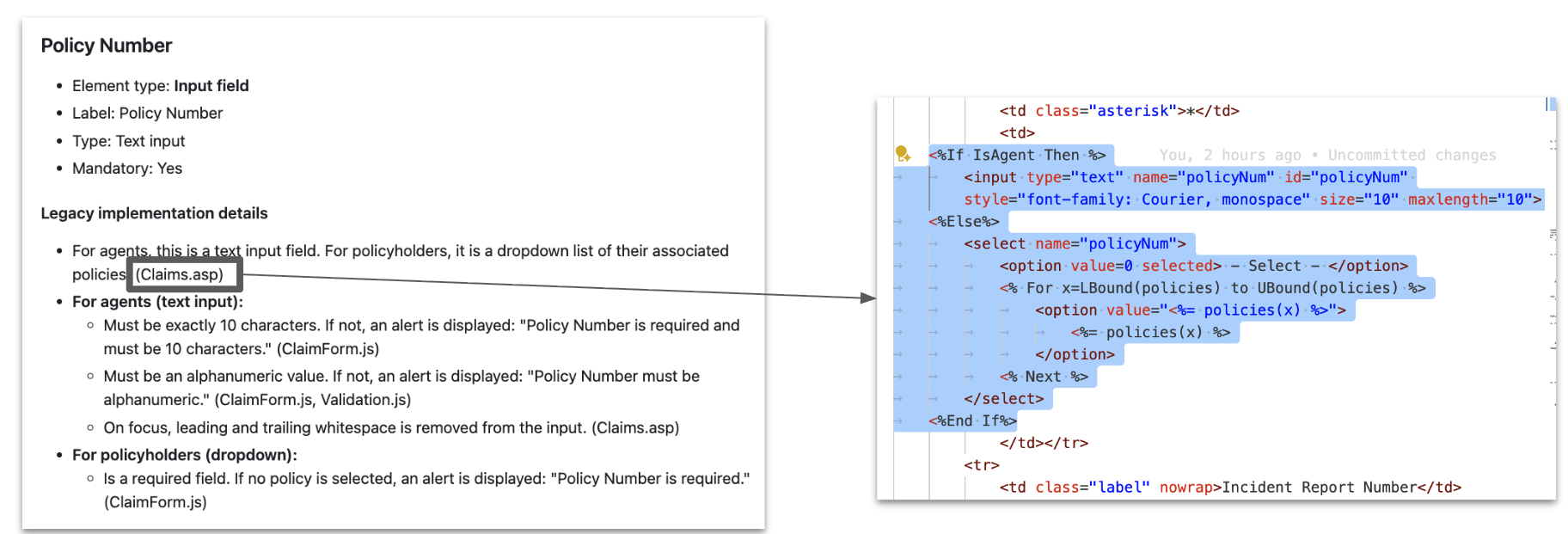

UI Layer Reconstruction

Browsing the existing live application and screenshots, we identified the UI
elements. Using the ASP and JS content, the dynamic behaviour associated with
each UI element could be added. This gave us a UI spec like the one below:

What we looked for: validation rules, navigation paths, hidden fields. One of
the key challenges we faced from the early stage was hallucination, so at
every step we added detailed lineage to make sure we could cross-check and
confirm. In the above example we had the lineage of where it comes from.
Following this pattern, for every key piece of information we added the
lineage along with the context. Here the LLM really sped up summarizing large
numbers of screen definitions and consolidating logic from ASP and JS sources
with the already identified UI layouts and field descriptions, work that would
otherwise take weeks to create and consolidate.
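As an illustration of the lineage idea, here is a minimal Python sketch of a spec statement that carries its own evidence. The class and field names (`Lineage`, `SpecStatement`, `source_kind`, and so on) are our own hypothetical choices for this example, not the format used on the project.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Lineage:
    """Where a piece of the spec came from (all names are illustrative)."""
    source_kind: str  # e.g. "asp", "js", "screenshot"
    location: str     # file name plus a line range or element id
    excerpt: str      # the raw text the statement was derived from


@dataclass
class SpecStatement:
    """One claim in the UI spec together with its supporting evidence."""
    text: str
    lineage: list[Lineage] = field(default_factory=list)

    def is_traceable(self) -> bool:
        # A statement without lineage cannot be cross-checked, so it is
        # treated as a possible hallucination until someone verifies it.
        return len(self.lineage) > 0


def untraceable(statements: list[SpecStatement]) -> list[SpecStatement]:
    """Return the statements a reviewer must verify by hand or discard."""
    return [s for s in statements if not s.is_traceable()]
```

A reviewer could run `untraceable(...)` over a generated spec and refuse to accept any statement that comes back, which keeps unsupported LLM output from entering the spec unnoticed.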

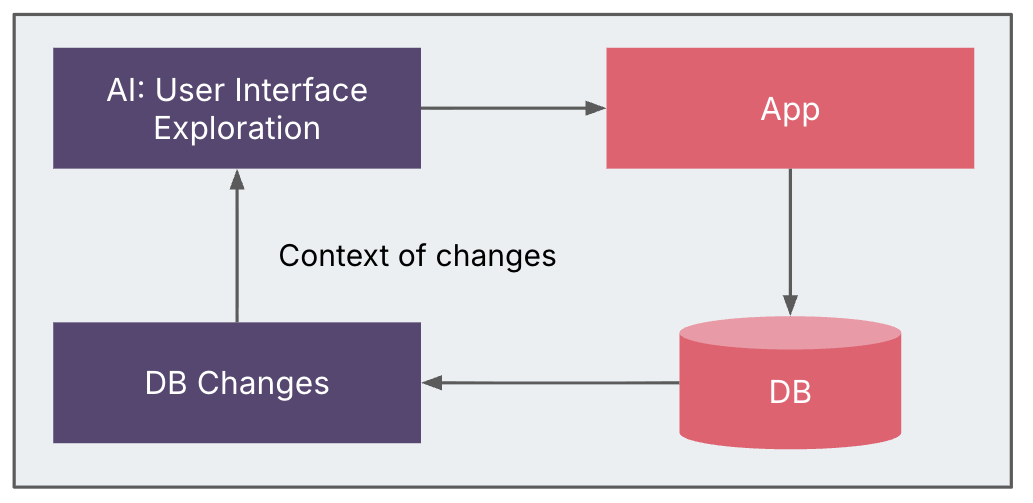

Discovery with Change Data Capture (CDC)

We planned to use Change Data Capture (CDC) to trace how UI actions mapped to
database activity, retrieving change logs from MCP servers to track the
workflows. Environment constraints meant CDC could only be enabled partially,
limiting the breadth of captured data.

Other potential sources, such as front-end/back-end network traffic,
filesystem changes, additional persistence layers, or even debugger
breakpoints, remain viable options for finer-grained discovery. Even with
partial CDC, the insights proved valuable in linking UI behavior to underlying
data changes and enriching the system blueprint.
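To make the UI-to-database linking concrete, the following Python sketch attributes change-log rows to the UI action that most plausibly caused them, using a simple time window. The input shapes, the `correlate` name, and the two-second window are assumptions made for this example; a real change log would need its own parsing.

```python
from datetime import datetime, timedelta


def correlate(ui_actions, change_rows, window_seconds=2.0):
    """Group change rows under the UI action that most plausibly caused them.

    ui_actions:  list of (timestamp, action_name) recorded while driving the UI.
    change_rows: list of (timestamp, table, operation) read from the change log.
    A change is attributed to the latest action that happened at most
    `window_seconds` before it; other changes are returned as unassigned.
    """
    window = timedelta(seconds=window_seconds)
    actions = sorted(ui_actions)
    result = {name: [] for _, name in actions}
    unassigned = []
    for ts, table, op in sorted(change_rows):
        candidates = [(a_ts, name) for a_ts, name in actions
                      if a_ts <= ts <= a_ts + window]
        if candidates:
            _, name = max(candidates)  # the most recent qualifying action
            result[name].append((table, op))
        else:
            unassigned.append((table, op))
    return result, unassigned
```

Unassigned rows are worth keeping visible: they may point at background jobs or integrations that no screen triggers directly.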

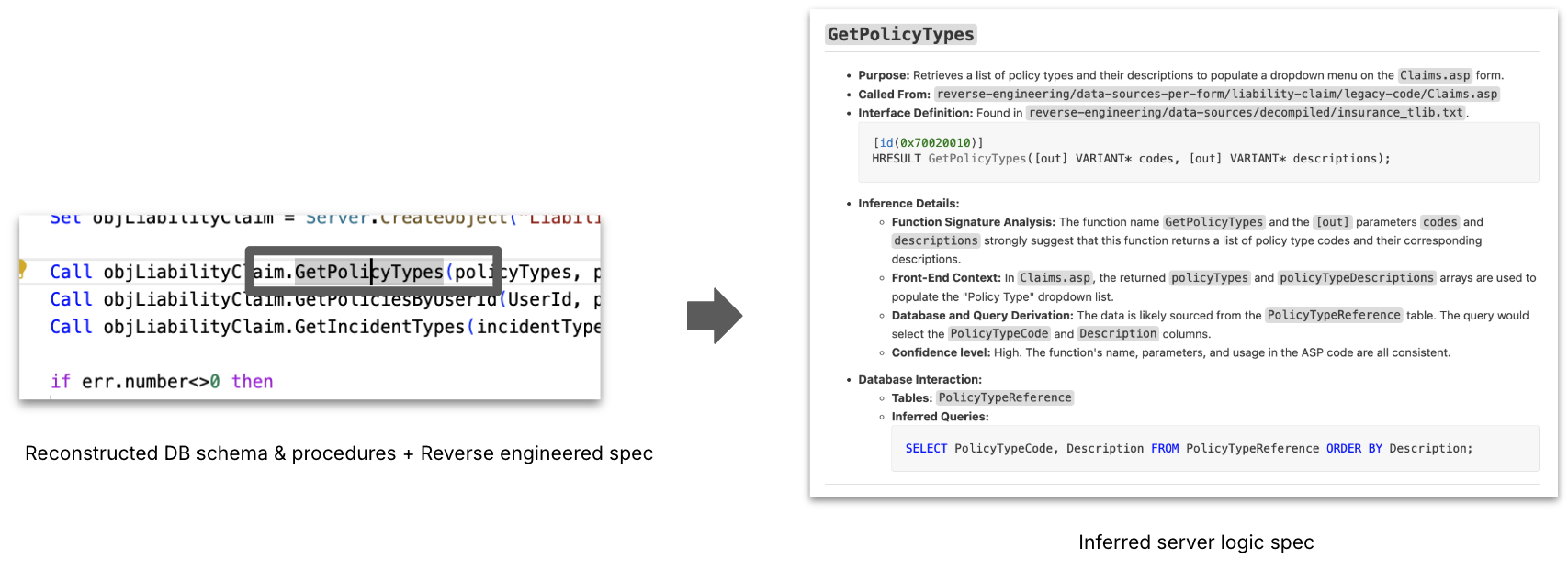

Server Logic Inference

We then added more context by supplying typelibs extracted from the native
binaries, along with the stored procedures and schema extracted from the
database. At this point, with information about layout, presentation logic,
and DB changes, the server logic can be inferred: which stored procedures are
likely called, and which tables are involved, for most methods and interfaces
defined in the native binaries. This process leads to an Inferred Server Logic
Spec. The LLM helped by proposing likely relationships between App tier code
and procedures / tables, which we then validated against observed data flows.
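The validation step can be pictured as a set comparison between what the LLM proposed and what was actually observed. The sketch below is only an illustration under assumed inputs: the method and table names in the usage are hypothetical, and `validate_links` is not a tool from the project.

```python
def validate_links(proposed, observed):
    """Compare LLM-proposed method-to-table links with observed data changes.

    proposed: dict mapping a method name to the set of tables the LLM suggested.
    observed: dict mapping a method name to the set of tables seen changing.
    """
    report = {}
    for method, tables in proposed.items():
        seen = observed.get(method, set())
        report[method] = {
            "confirmed": sorted(tables & seen),    # proposed and observed
            "unconfirmed": sorted(tables - seen),  # proposed, never observed
            "unexpected": sorted(seen - tables),   # observed, never proposed
        }
    return report
```

For example, `validate_links({"SaveOrder": {"ORDER_HDR"}}, {"SaveOrder": {"ORDER_HDR", "AUDIT_LOG"}})` would report `ORDER_HDR` as confirmed and `AUDIT_LOG` as unexpected, which is a prompt to go back and look for the logic that writes it.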

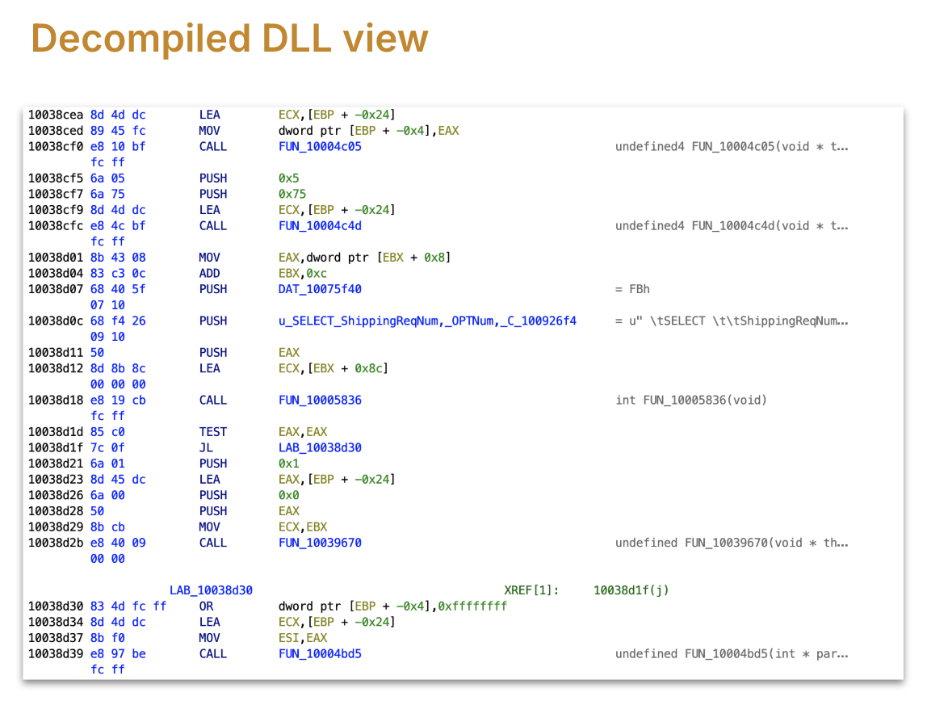

AI assisted Binary Archaeology

The most opaque layer was the compiled binaries (DLLs, executables). Here, we
treated binaries as artifacts to be decoded rather than rebuilt. What we
looked for: call trees, recurring assembly patterns, candidate entry points.
AI assisted in bulk-summarizing disassembled code into human-readable
hypotheses and flagging likely function roles, always validated by human
experts.

The impact of not having good deployment practices was evident: the production
machine held multiple versions of the same file, with file names used to
distinguish versions, and confusing names at that. Timestamps provided some
clues. We also used the Windows registry to locate the binaries. There were
also proxies for each binary that passed calls to the actual binary, allowing
the App tier to run on a different machine than the web tier. The fact that
proxy binaries had the same name as their target binaries added to the
confusion.

We did not have to read the raw binary code of the DLLs directly. Tools like
Ghidra help to disassemble a binary into a large set of ASM functions. Some of
these tools also have the option to convert ASM into C code, but we found that
those conversions are not always accurate. In our case decompilation to C
missed a crucial lead.

Each DLL had thousands of assembly functions, and we settled on an approach
where we identify the relevant functions for a functional area and decode what
that subtree of relevant functions does.

Prior Attempts

Before we arrived at this approach, we tried:

- Brute-force method: we added all assembly functions into a workspace and
  used the LLM agent to turn them into human-readable pseudocode. We faced
  multiple challenges with this. We ran out of the 1 million token context
  window as the LLM tried to eventually load all functions because of
  dependencies (references it encountered, e.g. function calls and other
  functions referencing the current one).
- We split the set of functions into multiple batches, a file each with
  hundreds of functions, and then used the LLM to analyze each batch in
  isolation. We faced a lot of hallucination issues, and file size issues
  while streaming to the model. A few functions were converted meaningfully,
  but many others did not make any sense at all; they all looked like similar
  functions, and on cross-checking we realised this was a hallucination
  effect.
- The next attempt was to convert the functions one by one, to make sure the
  LLM is given a fresh, narrow window of context to limit hallucination. We
  faced multiple challenges (API usage limits, rate limits). We could not
  verify whether the LLM's translation of business logic was right or wrong,
  and we could not connect the dots between these functions. Interesting note:
  we even found some C++ standard library functions like
  `std::vector::insert` this way. We found that a lot were actually unwind
  functions, used purely to call destructors when an exception happens (stack
  unwinding), as well as destructors and catch block functions. Clearly we
  needed to focus on business logic and ignore the compiled library functions
  that are also mixed into the binary.
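One cheap way to separate library noise from candidate business logic is to filter on the labels the disassembler assigns. The Python sketch below assumes, purely for illustration, that library and exception-handling functions carry recognisable name prefixes such as `std::`, `Unwind@`, or `Catch@`; the actual labels depend on the tool and on the symbols it manages to recover.

```python
# Prefixes treated as "not business logic". These are assumptions for the
# example and should be adjusted to the labels your disassembler produces.
LIBRARY_PREFIXES = ("std::", "Unwind@", "Catch@")


def split_functions(names, library_prefixes=LIBRARY_PREFIXES):
    """Split function names into (business candidates, library/runtime noise)."""
    business, library = [], []
    for name in names:
        # str.startswith accepts a tuple and matches any of its entries.
        if name.startswith(library_prefixes):
            library.append(name)
        else:
            business.append(name)
    return business, library
```

A filter like this only removes the obvious cases. Unlabelled library code still has to be recognised by reading it, so the result is a shortlist rather than a classification.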

After these attempts we decided to change our approach and slice the DLL by
functional area/workflow rather than consider the whole assembly code.

Finding the relevant function

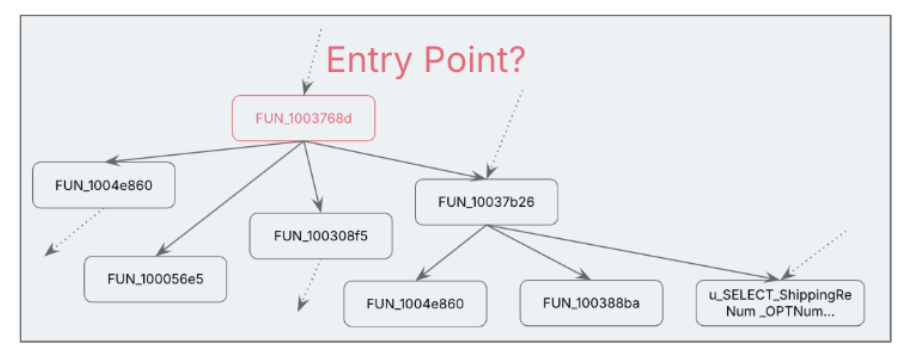

The first challenge in the functional area / workflow approach is to find a
link or entry point among the thousands of functions.

One of the available options was to look carefully at the constants and
strings in the DLL. We used the historical context: in the late 1990s and
early 2000s, the common architectural patterns for inserting data into the DB
were "select for insert", "insert/update handled by a stored procedure", or
going through ADO (ActiveX Data Objects, Microsoft's data access library).
Interestingly, we found all of these patterns in different parts of the
system.

Our functionality was about inserting or updating the DB at the end of the
process, but we could not find any insert or update queries in the strings,
nor any stored procedure to perform the operation. The functionality we were
looking for turned out to use a SELECT through SQL and then update via ADO.

We got our break from a table name mentioned in the strings/constants, which
led us to the function that uses that SQL statement. An initial look at that
function did not reveal much; it could be in the same functional area but part
of a different workflow.
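The string-based search can be sketched in a few lines of Python. The example assumes the disassembler's string table and cross-references have been exported into two plain dictionaries. That export format, the function name, and the table name used in the usage note are all hypothetical.

```python
import re


def functions_touching_table(strings, xrefs, table_name):
    """Find functions that reference a string mentioning `table_name`.

    strings: dict mapping a string's address to its text.
    xrefs:   dict mapping a string's address to the functions referencing it.
    Returns a dict mapping each function name to the matching strings.
    """
    # Whole-word, case-insensitive match, so ORDER_HDR does not also match
    # ORDER_HDR_ARCHIVE (the underscore counts as a word character).
    pattern = re.compile(r"\b" + re.escape(table_name) + r"\b", re.IGNORECASE)
    hits = {}
    for addr, text in strings.items():
        if pattern.search(text):
            for fn in xrefs.get(addr, []):
                hits.setdefault(fn, []).append(text)
    return hits
```

Called with a table name such as `"ORDER_HDR"`, this returns only the functions whose referenced strings mention that table, which is the kind of shortlist that served as a starting point for walking the call tree.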

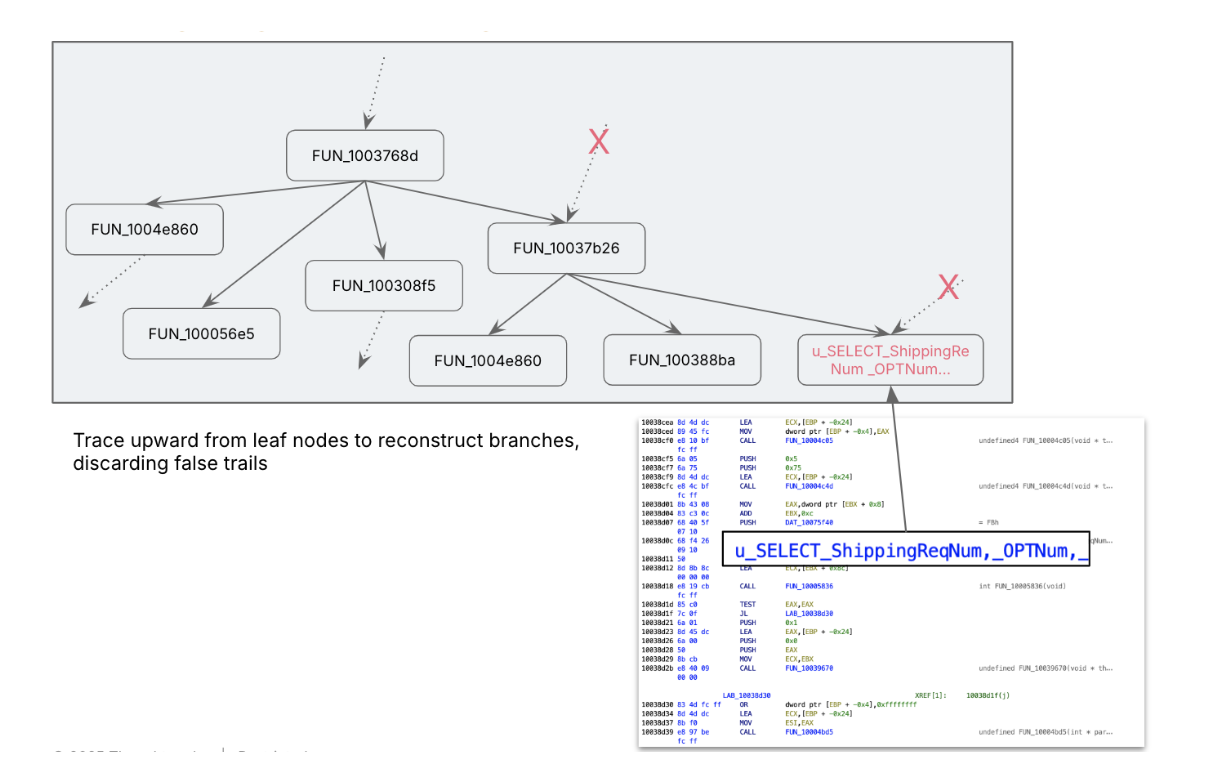

Building the relevant subtree

The ASM code and our disassembly tool gave us the function call reference
data. Using it, we walked up the tree: assuming the statement execution is one
of the leaf functions, we navigated to the parent that called it to understand
its context. At each step we converted ASM into pseudocode to build context.
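Walking up the tree amounts to a breadth-first search over the reverse call graph. The sketch below assumes the call references have been exported as a dictionary from each function to its callers; the function names in the usage note are made up for the example.

```python
from collections import deque


def ancestors(callers, leaf, max_depth=10):
    """Walk up the call graph from `leaf`, breadth first.

    callers: dict mapping a function name to the functions that call it.
    Returns a dict mapping each reachable function to its distance from the
    leaf. The distance check doubles as a visited set, so cycles terminate.
    """
    depth = {leaf: 0}
    queue = deque([leaf])
    while queue:
        fn = queue.popleft()
        if depth[fn] == max_depth:
            continue  # do not climb any further from this node
        for parent in callers.get(fn, []):
            if parent not in depth:
                depth[parent] = depth[fn] + 1
                queue.append(parent)
    return depth
```

With `callers = {"exec_sql": ["save_row"], "save_row": ["submit"]}`, calling `ancestors(callers, "exec_sql")` yields the leaf at depth 0, `save_row` at depth 1, and `submit` at depth 2. Functions with no recorded callers at the top of the result are the candidate entry points.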

Earlier, when we converted ASM to pseudocode by brute force, we could not
cross-verify whether the result was true. This time we were better prepared,
because we knew what to expect could happen before a SQL execution, and we
could use the context gathered from earlier steps.

We mapped out the relevant functions using this call tree navigation, and
sometimes we had to back out of wrong paths. We learned about context
poisoning the hard way: inadvertently, we passed what we were looking for into
the LLM. From that moment the LLM started colouring its output towards what we
were looking for, leading us down wrong paths and eroding trust. We had to
recreate a clean room for the AI to work in during this stage.

We got a high-level outline of what the different functions were and what they
could be doing. For a given workflow, we narrowed down from 4000+ functions to
40+ functions to deal with.

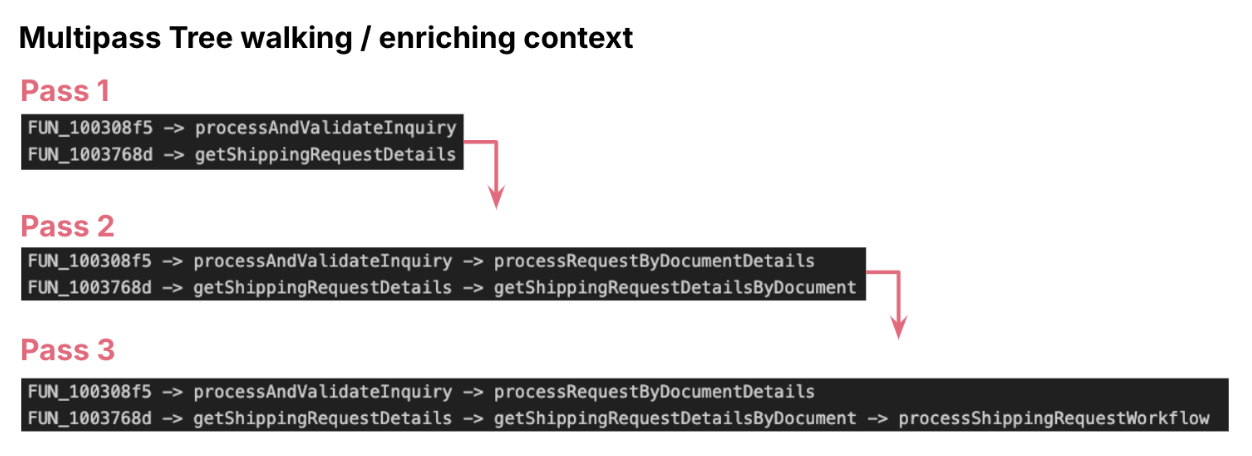

Multi-Pass Enrichment

AI accelerated the assembly archaeology layer by layer, pass by pass, through
multi-pass enrichment. In each pass, we navigated either from the leaf nodes
to the top of the tree or the reverse, and at each step we enriched the
context of a function using either its parent's context or its children's
context. This helped us turn the technical pseudocode conversion into a
functional specification. We followed simple techniques like asking the LLM to
give meaningful method names based on known context. After multiple passes we
built out the entire functional context.
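The alternating passes can be sketched as a traversal that rewrites a note per function. In this Python example the `describe` callback stands in for whatever produces the improved description (in practice an LLM call plus human review); its signature, and the assumption that the call structure is a tree, are ours.

```python
def enrich(children, root, describe, passes=2):
    """Alternate bottom-up and top-down passes over a call tree.

    children: dict mapping a function to its callees (assumed to be a tree).
    describe: callback (fn, own_note, neighbour_notes) -> new note for fn.
    Even passes run leaf-to-root using the callees' notes; odd passes run
    root-to-leaf using the caller's note.
    """
    # Pre-order walk, then reverse it so every callee precedes its caller.
    order, stack = [], [root]
    while stack:
        fn = stack.pop()
        order.append(fn)
        stack.extend(children.get(fn, []))
    order.reverse()

    parent = {c: p for p, cs in children.items() for c in cs}
    notes = {fn: "" for fn in order}
    for i in range(passes):
        if i % 2 == 0:  # bottom-up: children inform the parent
            for fn in order:
                ctx = [notes[c] for c in children.get(fn, [])]
                notes[fn] = describe(fn, notes[fn], ctx)
        else:           # top-down: the parent informs its children
            for fn in reversed(order):
                ctx = [notes[parent[fn]]] if fn in parent else []
                notes[fn] = describe(fn, notes[fn], ctx)
    return notes
```

Keeping the traversal separate from `describe` also makes it easier to control exactly what context each call receives, which matters given the context poisoning described earlier.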

Validating the entry point

The last and critical challenge was to confirm the entry function. As is
typical in C++, virtual functions made it harder to link entry functions to
their class definitions. While the functionality looked complete starting from
the root node, we were not sure whether any additional operation was happening
in a parent function or a wrapper. Life would have been easier if we had a
debugger enabled: a simple breakpoint and a review of the call stack would
have confirmed it.

However, we could still build confidence with triangulation techniques such
as:

- Call stack analysis
- Validating argument signatures and the return signature on the stack
- Cross-checking with UI layer calls (e.g., associating the method signature
  with the "submit" call from the Web tier, checking parameter types and
  usage, and validating against that context)

Building the Spec from Fragments to Functionality

By integrating the reconstructed elements from the previous stages (UI Layer
Reconstruction, Discovery with CDC, Server Logic Inference, and binary
analysis of the App tier), a complete functional summary of the system is
recreated with high confidence. This comprehensive specification forms a
traceable and reliable foundation for business review and for
modernization/forward engineering efforts.

From our work, a set of repeatable practices emerged. These are not
step-by-step recipes, since every system is different, but guiding patterns
that shape how to approach the unknown.

- Start Where Visibility is Highest: begin with what you can see and trust:
  screens, data schemas, logs. These give a foundation of observable behavior
  before diving into opaque binaries. This avoids analysis paralysis by
  anchoring early progress in artifacts users already understand.
- Enrich in Passes: don't overload AI or humans with the whole system at once.
  Break artifacts into manageable chunks, extract partial insights, and
  progressively build context. This reduces hallucination risk, reduces
  assumptions, and scales better with large legacy estates.
- Triangulate Everything: never rely on a single artifact. Confirm every
  hypothesis across at least two independent sources, e.g., a screen flow
  matched against a stored procedure, then validated in a binary call tree.
  This creates confidence in conclusions and exposes hidden contradictions.
- Preserve Lineage: track where each piece of inferred knowledge comes from:
  UI screen, schema field, binary function. This "audit trail" prevents false
  assumptions from propagating unnoticed. When questions arise later, you can
  trace back to the original evidence.
- Keep Humans in the Loop: AI can accelerate analysis, but it cannot replace
  domain understanding. Always pair AI hypotheses with expert validation,
  especially for business-critical rules. This helps to avoid embedding AI
  errors directly into future modernization designs.
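The "at least two independent sources" rule from the practices above is simple enough to check mechanically. A minimal Python sketch, assuming each hypothesis carries a list of `(source_kind, reference)` pairs, where the kind labels are whatever categories a team chooses:

```python
def is_triangulated(evidence, minimum_sources=2):
    """Return True if a hypothesis is backed by enough independent source kinds.

    evidence: list of (source_kind, reference) pairs, for example
              ("ui", "order screen") or ("stored_procedure", "a proc name").
    Two references of the same kind count as a single source.
    """
    independent_kinds = {kind for kind, _ in evidence}
    return len(independent_kinds) >= minimum_sources
```

Counting kinds rather than references is the point of the check: ten screens that agree with each other are still one lens on the system.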

Conclusion and Key Takeaways

Blackbox reverse engineering, especially when supercharged with AI, offers
significant advantages for legacy system modernization:

- Accelerated Understanding: AI speeds up legacy system understanding from
  months to weeks, transforming complex tasks like converting assembly code
  into pseudocode and classifying functions into business or utility
  categories.
- Reduced Fear of Undocumented Systems: organizations no longer need to fear
  undocumented legacy systems.
- Reliable First Step for Modernization: reverse engineering becomes a
  reliable and responsible first step toward modernization.

This approach unlocks clear functional specifications even without source
code, better-informed decisions for modernization and cloud migration, and
insight-driven forward engineering that moves away from guesswork.

The future holds much faster legacy modernization thanks to the impact of AI
tools, drastically reducing steep costs and risky long-term commitments.
Modernization is expected to happen in "leaps and bounds". In the next 2-3
years we could expect more systems to be retired than in the last 20 years. It
is advisable to start small, as even a sandboxed reverse engineering effort
can uncover surprising insights.