Recent advances in software and hardware have made it possible to run large language models (LLMs) on a personal computer. One tool that simplifies this process is LM Studio. In this article, we look at how to run LLMs locally with LM Studio: we walk through the key steps, identify potential obstacles, and highlight the benefits of having an LLM on your own system. Whether you are a tech enthusiast or simply curious about AI, you should find practical advice here. Let’s get started!

Overview

- Understand the prerequisites for running an LLM locally.

- Set up LM Studio on your computer.

- Interact with an LLM using LM Studio’s interface.

- Understand the benefits of running an LLM locally, such as data privacy, cost savings, and customization, as well as its limitations, such as hardware demands and setup complexity.

What is LM Studio?

LM Studio simplifies the task of running and managing LLMs on personal computers, and it is designed to be accessible to a wide range of users. With LM Studio, you can download, organize, and run different large language models locally instead of relying on cloud services.

Key Features of LM Studio

LM Studio offers the following features:

- Model management: download, organize, and configure models and their settings in one place.

- Support for multiple LLMs: work with different large language models from the same interface.

- Hardware-aware configuration: tune settings to make the most of your hardware.

- Interactive console: chat with the LLM in real time through a built-in console.

- Offline use: run models without an internet connection, keeping your data private and under your control.


Setting Up LM Studio

Here’s how to set up LM Studio:

System Requirements

Before installing LM Studio, make sure your computer meets the following minimum requirements:

- CPU: a processor with at least four cores.

- Operating system: Windows 10 or 11, macOS 10.15 or later, or a modern Linux distribution.

- RAM: at least 16 GB.

- Storage: a solid-state drive (SSD) with at least 50 GB of free space.

- GPU: an NVIDIA graphics card with CUDA support is recommended for better performance but is not required.
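The requirements above can be checked with a short script. This is an illustrative sketch, not an official LM Studio tool: the thresholds are copied from the list, RAM detection uses POSIX `os.sysconf` (so it works on Linux and macOS but reports nothing on Windows), sizes are in decimal gigabytes, and the GPU is not checked.

```python
import os
import shutil

# Thresholds copied from the requirements list; sizes are decimal GB (10**9 bytes).
MIN_CORES = 4
MIN_RAM_GB = 16
MIN_FREE_DISK_GB = 50


def total_ram_gb():
    """Total physical RAM in GB, or None where os.sysconf is unavailable (e.g. Windows)."""
    try:
        return os.sysconf("SC_PHYS_PAGES") * os.sysconf("SC_PAGE_SIZE") / 10**9
    except (AttributeError, ValueError, OSError):
        return None


def free_disk_gb(path="~"):
    """Free space on the drive that holds `path`, in GB."""
    return shutil.disk_usage(os.path.expanduser(path)).free / 10**9


def check_requirements(cores, ram_gb, disk_gb):
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    if cores is None or cores < MIN_CORES:
        problems.append(f"CPU cores: {cores} (need at least {MIN_CORES})")
    if ram_gb is None:
        problems.append("RAM: could not be determined on this platform")
    elif ram_gb < MIN_RAM_GB:
        problems.append(f"RAM: {ram_gb:.1f} GB (need at least {MIN_RAM_GB} GB)")
    if disk_gb < MIN_FREE_DISK_GB:
        problems.append(f"Free disk: {disk_gb:.1f} GB (need at least {MIN_FREE_DISK_GB} GB)")
    return problems


if __name__ == "__main__":
    issues = check_requirements(os.cpu_count(), total_ram_gb(), free_disk_gb())
    print("All checks passed." if not issues else "\n".join(issues))
```

Keeping the checks in a pure function (`check_requirements`) separate from the system queries makes the logic easy to reuse with values you read some other way, for example from Task Manager on Windows.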

Installation Steps

- Download the installer for your operating system from the official LM Studio website.

- Run the installer and follow the on-screen instructions.

- Launch LM Studio and complete the initial setup wizard to configure the basic settings.

Downloading and Configuring a Model

To download and set up a model, follow these steps:

- Go to the ‘Models’ section of the LM Studio interface and browse the available language models. Choose one that meets your requirements and click ‘Download.’

- After the download finishes, adjust the model parameters, such as batch size, memory usage, and processing power, so that they match your hardware.

- Once the settings are configured, click ‘Load Model’ to load it. Depending on the model’s size and your hardware, this can take a few minutes.
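Whether a model loads comfortably depends mostly on its size and numeric precision. A common rule of thumb is that the weights take about parameters × bits per weight ÷ 8 bytes, so a 2-billion-parameter model stored at 16 bits needs roughly 4 GB for its weights alone. The sketch below applies that rule. The 20% overhead for the context cache and runtime buffers is an assumed figure rather than something LM Studio reports, and actual usage varies with the quantization format and context length.

```python
def estimate_model_memory_gb(params_billion, bits_per_weight, overhead=0.2):
    """Rough memory needed to load a model, in decimal GB.

    params_billion:  parameter count in billions (2 for a 2B model)
    bits_per_weight: 16 for fp16/bf16 weights, fewer for quantized files
    overhead:        assumed extra fraction for context cache and runtime buffers
    """
    # billions of parameters * bits / 8 = billions of bytes = GB
    weights_gb = params_billion * bits_per_weight / 8
    return weights_gb * (1 + overhead)


def fits_in_ram(params_billion, bits_per_weight, available_gb, overhead=0.2):
    """True if the rough estimate fits within available_gb."""
    return estimate_model_memory_gb(params_billion, bits_per_weight, overhead) <= available_gb


if __name__ == "__main__":
    # Compare precisions for a hypothetical 2B-parameter model.
    for bits in (16, 8, 4):
        print(f"2B model at {bits}-bit: about {estimate_model_memory_gb(2, bits):.1f} GB")
```

The comparison shows why lower-precision (quantized) model files are popular for local use: halving the bits per weight roughly halves the memory the weights need.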

Working with the LLM

Using the Interactive Console

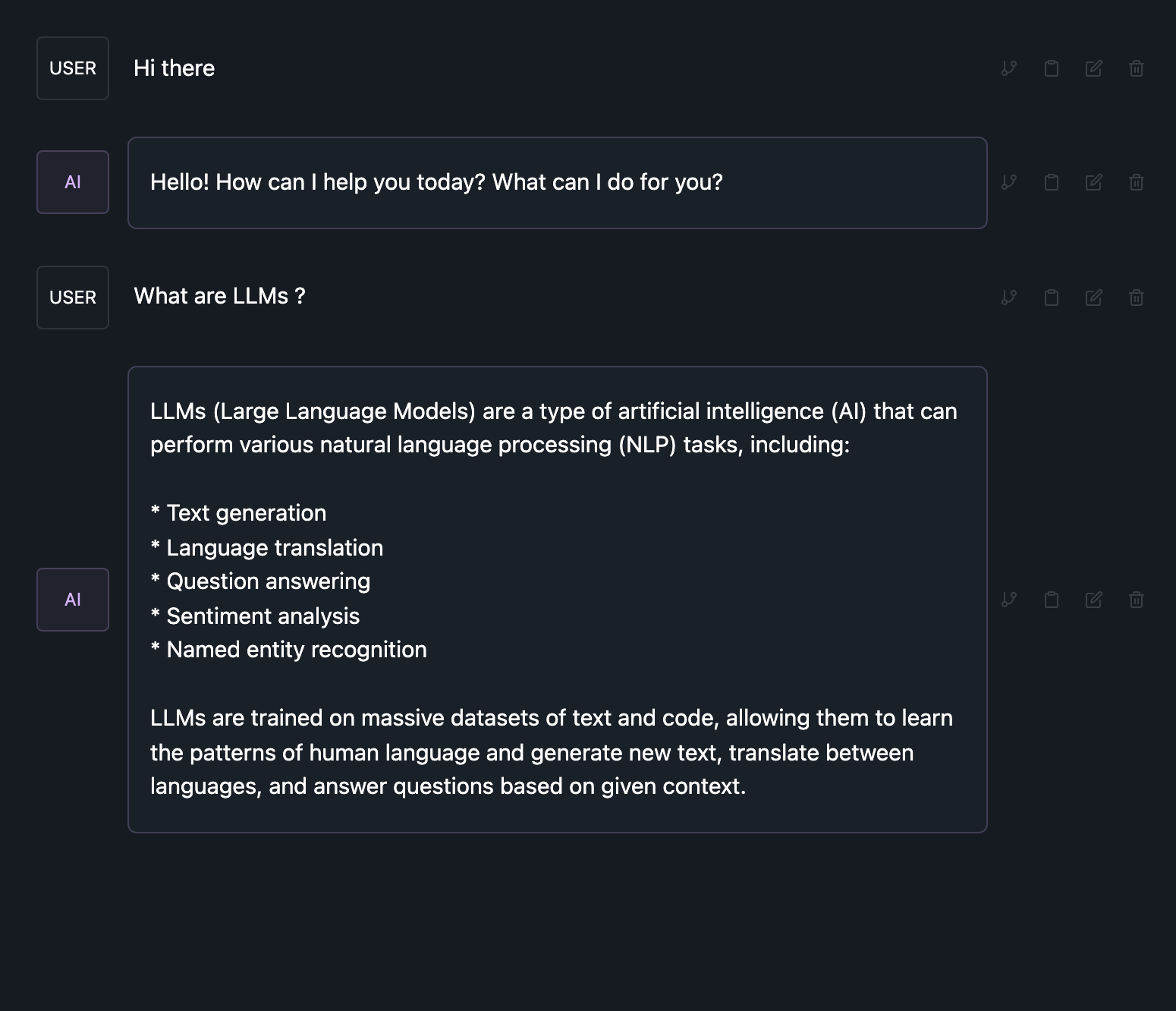

The interactive console lets you hold a conversation with the loaded LLM: you enter text, and the model generates a response. It is a convenient place to test the model’s capabilities and experiment with different prompts.

- In the LM Studio interface, go to the ‘Console’ section.

- Type your prompt or question into the input field.

- The LLM processes your input and displays its response in the console.

Integrating with Applications

LM Studio also supports API integration, so you can use the LLM in your own projects. This is particularly useful for building chatbots, content-generation tools, or any software that needs to understand and generate natural language.
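LM Studio can run a local server that exposes an OpenAI-compatible API, by default at `http://localhost:1234/v1`. The sketch below sends a single chat request using only the Python standard library. It assumes the server has been started in LM Studio with a model loaded; the model identifier `gemma-2b-it` is a placeholder, so replace it with the name LM Studio shows for your model.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running at its default address.
BASE_URL = "http://localhost:1234/v1"
# Placeholder: use the identifier LM Studio shows for the model you loaded.
MODEL = "gemma-2b-it"


def build_chat_request(prompt, system_prompt="You are a helpful assistant.",
                       model=MODEL, temperature=0.7):
    """Build an OpenAI-style chat-completions payload."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": prompt},
        ],
        "temperature": temperature,
    }


def extract_reply(response):
    """Return the assistant's text from an OpenAI-style response dict."""
    return response["choices"][0]["message"]["content"]


def ask(prompt, base_url=BASE_URL, timeout=120):
    """POST one prompt to the local server and return the model's reply."""
    body = json.dumps(build_chat_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(json.load(response))


if __name__ == "__main__":
    try:
        print(ask("Explain in one sentence what a large language model is."))
    except OSError as exc:  # URLError (e.g. connection refused) and timeouts are OSErrors
        print(f"Could not reach the LM Studio server at {BASE_URL}: {exc}")
```

Because the endpoint follows the OpenAI request format, the official `openai` Python client pointed at the same base URL should also work; the standard-library version keeps the example free of dependencies.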

Demo: Running Google’s Gemma 2B in LM Studio

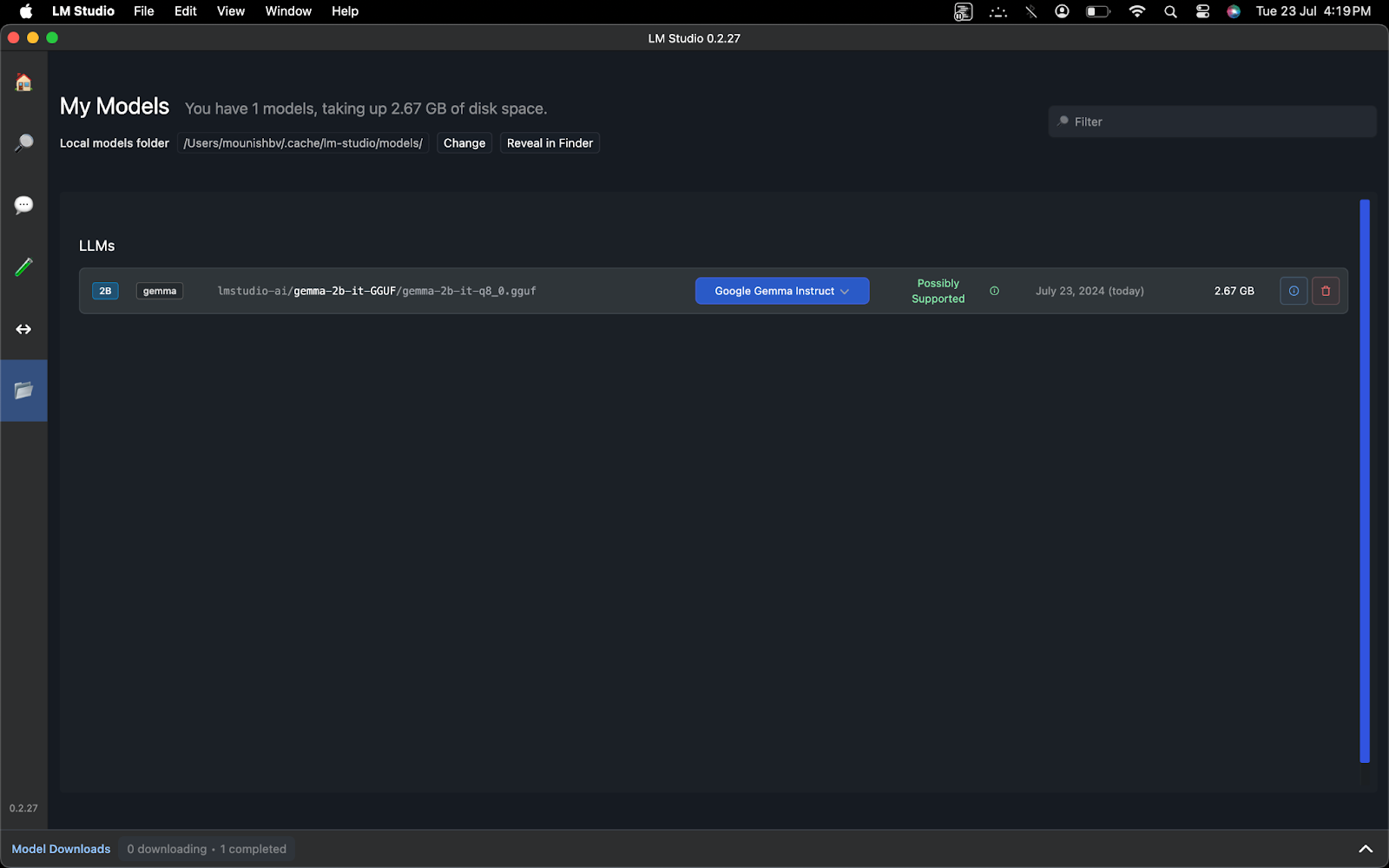

I downloaded Gemma 2B Instruct, a small and efficient LLM, from the LM Studio home page. You can download any of the featured models from the home page or search for a specific model. Your downloaded models are listed under “My Models”.
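Besides the “My Models” view, the local server’s OpenAI-compatible `GET /v1/models` endpoint returns model identifiers that you can pass in API requests. This sketch assumes the default server address; depending on the LM Studio version and settings, the list may contain only the currently loaded models or all downloaded ones.

```python
import json
import urllib.request

BASE_URL = "http://localhost:1234/v1"  # assumed default LM Studio server address


def parse_model_ids(payload):
    """Extract model identifiers from an OpenAI-style /v1/models response."""
    return [entry["id"] for entry in payload.get("data", [])]


def list_models(base_url=BASE_URL, timeout=10):
    """Fetch the model list from the local server."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=timeout) as response:
        return parse_model_ids(json.load(response))


if __name__ == "__main__":
    try:
        for model_id in list_models():
            print(model_id)
    except OSError as exc:  # server not running or unreachable
        print(f"Could not reach the LM Studio server at {BASE_URL}: {exc}")
```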

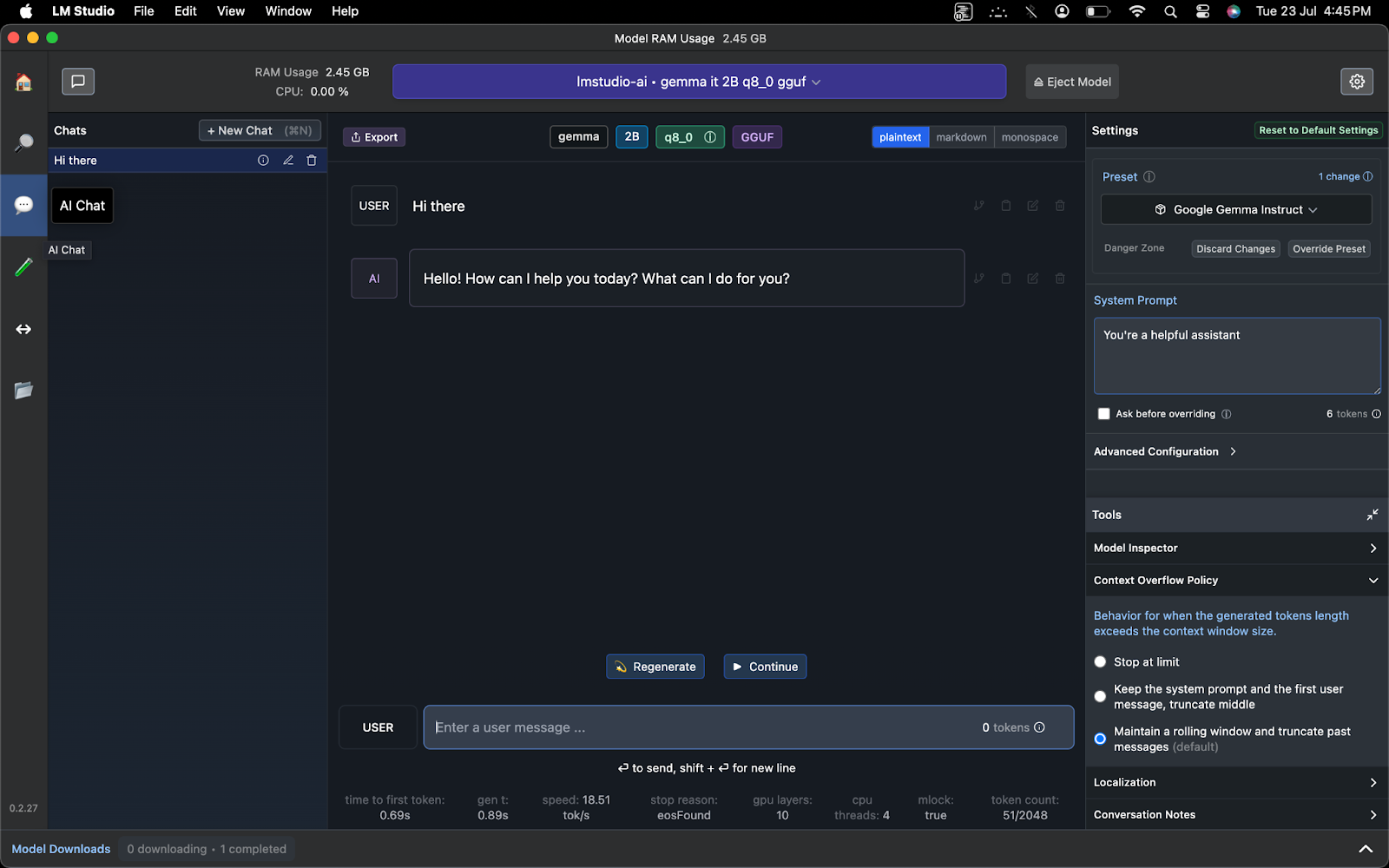

Select the model at the top of the page; here I am using Gemma 2B Instruct. Keep an eye on memory usage while the model is loaded. You can check RAM utilization in Task Manager (Windows) or Activity Monitor (macOS).
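On Linux, the same information is available in `/proc/meminfo`. The sketch below reads it with hypothetical helper names; it treats total minus `MemAvailable` (falling back to `MemFree` on very old kernels) as memory in use and reports values in GiB, since the kernel lists them in kibibytes.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into {field: value in KiB}."""
    info = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue
        parts = value.split()
        if parts and parts[0].isdigit():
            info[key.strip()] = int(parts[0])
    return info


def memory_usage_gib(meminfo):
    """Return (used_gib, total_gib) from parsed meminfo."""
    total_kib = meminfo["MemTotal"]
    available_kib = meminfo.get("MemAvailable", meminfo.get("MemFree", 0))
    used_kib = total_kib - available_kib
    return used_kib / 1024**2, total_kib / 1024**2


if __name__ == "__main__":
    try:
        with open("/proc/meminfo") as f:
            used, total = memory_usage_gib(parse_meminfo(f.read()))
        print(f"RAM in use: {used:.1f} of {total:.1f} GiB")
    except FileNotFoundError:
        print("/proc/meminfo not found; use Task Manager or Activity Monitor instead.")
```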

I set the system prompt to “You are a helpful assistant.” You can leave it at the default or change it to suit your needs.
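In the OpenAI-style message format that LM Studio’s local server accepts, a system prompt is sent as the first message of the conversation, followed by alternating user and assistant turns. Assuming the chat follows the same structure, the sketch below models it as plain Python lists; the helper names are hypothetical.

```python
import json

DEFAULT_SYSTEM_PROMPT = "You are a helpful assistant."


def new_conversation(system_prompt=DEFAULT_SYSTEM_PROMPT):
    """Start a chat history whose first message is the system prompt."""
    return [{"role": "system", "content": system_prompt}]


def set_system_prompt(history, system_prompt):
    """Return a copy of history with the system prompt replaced."""
    return [{"role": "system", "content": system_prompt}] + history[1:]


def add_exchange(history, user_text, assistant_text):
    """Return a copy of history with one user/assistant exchange appended."""
    return history + [
        {"role": "user", "content": user_text},
        {"role": "assistant", "content": assistant_text},
    ]


if __name__ == "__main__":
    history = new_conversation()
    history = add_exchange(history, "What is LM Studio?", "A desktop app for running LLMs locally.")
    history = set_system_prompt(history, "You are a concise technical assistant.")
    print(json.dumps(history, indent=2))
```

A list built this way can be sent as the `messages` field of a chat-completions request, so changing the system prompt does not discard the earlier turns.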

The model responds to my prompts and answers my questions effectively. Discovering and experimenting with different LLMs on your own computer has become very accessible.

Benefits of Running an LLM Locally

Here are the advantages:

- Data privacy: hosting an LLM locally keeps your data confidential, because no sensitive information has to be sent to external servers.

- Cost savings: using your existing hardware avoids the recurring costs of cloud-based LLM services.

- Customization: you can configure the model to suit your requirements and hardware specifications.

- Offline access: the model works without an internet connection, which is useful in settings where connectivity is limited or unavailable.
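The privacy benefit only holds if your application actually sends requests to the local server. A small guard like the sketch below can refuse to send data anywhere else. The function name is hypothetical, and the endpoint assumes LM Studio’s default port 1234.

```python
import ipaddress
from urllib.parse import urlparse


def is_local_endpoint(url):
    """Return True if url points at this machine (localhost or a loopback IP)."""
    host = urlparse(url).hostname  # lowercased, IPv6 brackets removed
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        return False  # a regular hostname such as api.example.com


if __name__ == "__main__":
    endpoint = "http://localhost:1234/v1/chat/completions"  # assumed LM Studio default
    if not is_local_endpoint(endpoint):
        raise SystemExit(f"Refusing to send private data to a remote host: {endpoint}")
    print("Endpoint is on this machine.")
```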

Limitations and Challenges

Running LLMs locally also comes with some challenges:

- LLMs require significant computing resources, particularly larger models.

- Initial setup and configuration can be challenging for users with limited technical expertise.

- Local deployment may not match the performance and scalability of cloud-based solutions, especially for large-scale, real-time applications.

Conclusion

Running an LLM on a personal computer with LM Studio offers several advantages, including better data privacy, lower costs, and more room for customization. Despite the hardware demands and setup effort, these benefits make it an attractive option for anyone working with large language models.

Frequently Asked Questions

Q1. What is LM Studio?

Ans. LM Studio simplifies the setup and management of large language models on your own computer, offering an intuitive interface and robust features.

Q2. Can I use LM Studio without an internet connection?

Ans. Yes. LM Studio lets you run models offline, which keeps your data private and lets you use them even in remote settings.

Q3. What are the benefits of running an LLM locally?

Ans. The main benefits are data privacy, cost savings, customization to your needs, and access to the model even when you are offline.

Q4. What are the challenges of running an LLM locally?

Ans. The main challenges are high hardware requirements, a more complex setup, and performance limitations compared with cloud-based solutions.