Introduction

In this tutorial, we will build a deep learning model to classify spoken words. We will use tfdatasets to handle data input/output (IO) and preprocessing, and Keras to build and train the model.

The dataset consists of approximately 65,000 one-second audio files of people saying 30 different words. Each file contains a single spoken English word. The dataset was released by Google under a Creative Commons license.

Our model is a Keras port of an earlier TensorFlow-based implementation, which was itself inspired by prior work. Other approaches to the speech recognition task exist, such as acoustic modelling with Hidden Markov Models (HMMs) or Gaussian Mixture Models (GMMs).

The model we implement here is not the state of the art in audio recognition, but it is relatively simple and fast to train. We also show how to use tfdatasets efficiently to preprocess and serve data.

Audio representation

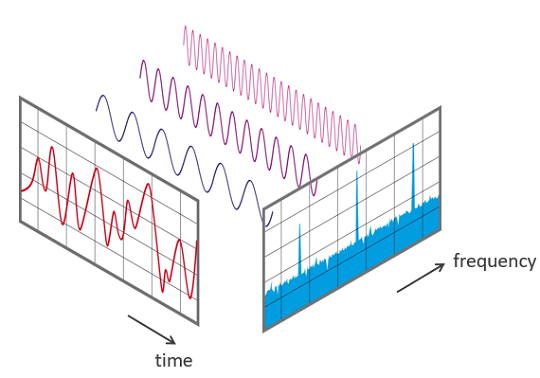

Many deep learning models are end-to-end: the model learns useful representations directly from raw data, without intermediate processing or feature engineering. However, audio data grows very quickly: 16,000 samples per second, with a rich structure at many time scales. To avoid dealing with raw waveform data, researchers usually apply some signal processing first.

Every sound wave can be represented by its spectrum, which can be computed digitally using the Fast Fourier Transform (FFT).

A common way to represent audio data is to break it into small chunks, which usually overlap. For each chunk, we use the FFT to compute the magnitude of each frequency component. The resulting spectra are then combined, side by side, to form what we call a spectrogram.
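As a rough illustration of the chunk-and-FFT procedure described above, here is a minimal NumPy sketch, written in Python rather than with the R packages this tutorial relies on. The function name `spectrogram`, the Hann window, and the window, stride, and FFT sizes are all assumptions chosen for illustration; they are not taken from the tutorial's own code.

```python
import numpy as np

def spectrogram(signal, window_size, stride, fft_length):
    """Magnitude spectrogram: one row per chunk, one column per frequency bin."""
    n_chunks = (len(signal) - window_size) // stride + 1
    # Cut the waveform into chunks; they overlap whenever stride < window_size.
    frames = np.stack([signal[i * stride : i * stride + window_size]
                       for i in range(n_chunks)])
    # Taper each chunk to reduce spectral leakage (an assumed choice of window).
    frames = frames * np.hanning(window_size)
    # FFT of each chunk, zero-padded to fft_length; keep only the magnitude.
    return np.abs(np.fft.rfft(frames, n=fft_length, axis=1))

# One second of a 1 kHz tone sampled at 16,000 samples per second.
sample_rate = 16_000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 1000 * t)

spec = spectrogram(tone, window_size=480, stride=160, fft_length=512)
peak_bin = int(spec.mean(axis=0).argmax())   # strongest frequency bin on average
peak_hz = peak_bin * sample_rate / 512       # convert the bin index back to Hz
```

Each row of `spec` is the spectrum of one chunk, so stacking the rows yields the image-like array that the rest of the tutorial treats as the model input. With these assumed sizes the array has 98 rows and 257 columns.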

It’s also common for speech recognition systems to further transform the spectrum and compute Mel-Frequency Cepstral Coefficients (MFCCs).

This transformation takes into account the human ear’s limited ability to distinguish between closely spaced frequencies, and creates bins along the frequency axis accordingly.

After this procedure, we have an image for each audio sample, and we can use convolutional neural networks, the standard architecture type for image classification.

Downloading

First, let’s download the data to a directory in our project. You can either download the archive manually (~1 GB) or fetch it from within R.

Inside the data directory, we will have a folder called speech_commands_v0.01. The WAV audio files in this directory are organized into subfolders named after the labels. For example, all one-second audio files of people saying the word “bed” are stored in the bed directory. There are 30 such directories, plus a special one called _background_noise_, which contains various patterns that can be mixed in to simulate background noise.

Importing

In this step, we will list all audio .wav files into a tibble with 3 columns:

– fname: the file name;

– class: the label for each audio file;

– class_id: a unique integer, starting from zero, for each class, used to one-hot encode the classes.
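To make the three columns concrete, the sketch below builds the same kind of table in plain Python from a list of file paths. It is an illustration only: the function name `build_index` and the example file names are hypothetical, and skipping folders whose name starts with an underscore (such as _background_noise_) is an assumption about how the labels are selected.

```python
from pathlib import PurePosixPath

def build_index(paths):
    """Return one record per file with fname, class, and class_id."""
    # Assumption: folders starting with "_" (e.g. _background_noise_) are not labels.
    labelled = [p for p in paths
                if not PurePosixPath(p).parent.name.startswith("_")]
    # The label of a file is the name of the folder that contains it.
    classes = sorted({PurePosixPath(p).parent.name for p in labelled})
    class_ids = {name: i for i, name in enumerate(classes)}  # ids start at zero
    return [
        {"fname": p,
         "class": PurePosixPath(p).parent.name,
         "class_id": class_ids[PurePosixPath(p).parent.name]}
        for p in labelled
    ]

# Hypothetical file names, for illustration only.
example_paths = [
    "speech_commands_v0.01/bed/a.wav",
    "speech_commands_v0.01/tree/b.wav",
    "speech_commands_v0.01/bed/c.wav",
    "speech_commands_v0.01/_background_noise_/d.wav",
]
index = build_index(example_paths)
```

Assigning ids from the sorted label names keeps the mapping stable between runs, which matters because the same ids must be used for training and validation.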

This table will be useful in the next step, when we create a generator using the tfdatasets package.

Generator

We will now create our Dataset, which, in the context of tfdatasets, adds operations to the TensorFlow graph in order to read and pre-process data. Because they are TensorFlow ops, they are executed in C++ and in parallel with model training.

The generator we create will be responsible for reading the audio files from disk, creating the spectrogram for each one, and batching the outputs.

Let’s start by creating the dataset from slices of the data.frame of audio file names and classes that we just created.

Now, let’s define the parameters for spectrogram creation. We need to define window_size_ms, the size in milliseconds of each chunk into which we break the audio wave, and window_stride_ms, the distance in milliseconds between the centers of adjacent chunks.

Now we’ll convert the window size and stride from milliseconds to samples. We assume that our audio files have 16,000 samples per second (1,000 ms), that is, one sample every 0.0625 milliseconds.

We will also obtain other quantities that are useful for spectrogram creation, such as the number of chunks and the FFT size, that is, the number of bins on the frequency axis. The function we use to compute the spectrogram does not allow us to change the FFT size; by default, it uses the first power of two greater than the window size.
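The conversions described above are simple integer arithmetic, sketched here in Python. The values of 30 ms for the window and 10 ms for the stride are assumptions; they were chosen because they give 98 chunks and 257 frequency bins, which agrees with the batch shape [32, 98, 257, 1] printed later in this tutorial. The sketch also assumes that the FFT length is the smallest power of two not below the window size, and that a real-valued FFT of that length yields `fft_length / 2 + 1` bins.

```python
SAMPLE_RATE = 16_000  # samples per second, as stated in the text

def spectrogram_dims(window_size_ms, window_stride_ms, n_samples=SAMPLE_RATE):
    """Return (window_size, stride, n_chunks, n_bins) for a clip of n_samples."""
    # Milliseconds to samples: 16 samples per millisecond at 16 kHz.
    window_size = SAMPLE_RATE * window_size_ms // 1000
    stride = SAMPLE_RATE * window_stride_ms // 1000
    # Number of full windows that fit when sliding by `stride` samples.
    n_chunks = (n_samples - window_size) // stride + 1
    # Smallest power of two that is >= window_size (assumed FFT length).
    fft_length = 1 << (window_size - 1).bit_length()
    # A real-valued FFT of that length has fft_length / 2 + 1 distinct bins.
    n_bins = fft_length // 2 + 1
    return window_size, stride, n_chunks, n_bins

dims = spectrogram_dims(window_size_ms=30, window_stride_ms=10)
```

Here `dims` is `(480, 160, 98, 257)`: a 480-sample window moved in steps of 160 samples fits 98 times into a one-second clip, and the 512-point FFT gives 257 bins.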

We will now use dataset_map, which allows us to specify a pre-processing function for each observation (line) of our dataset. It is in this step that we read the raw audio file from disk, create its spectrogram, and build the one-hot encoded response vector.
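The one-hot encoded response vector mentioned above can be sketched in a few lines of Python. This is an illustration of the encoding only, not the TensorFlow op used inside the pre-processing function; the helper name `one_hot` is hypothetical.

```python
def one_hot(class_id, n_classes=30):
    """Vector of zeros with a single 1.0 at the position of class_id."""
    if not 0 <= class_id < n_classes:
        raise ValueError(f"class_id must be in [0, {n_classes}), got {class_id}")
    vector = [0.0] * n_classes
    vector[class_id] = 1.0
    return vector

response = one_hot(3)  # the class with id 3 out of 30
```

Because class ids start at zero, the id can be used directly as the index of the single non-zero entry.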

Now, we will specify how we want to batch observations from the dataset. We use dataset_shuffle because we want to shuffle the observations; otherwise, the dataset would follow the order of the df object. Then we use dataset_repeat to tell TensorFlow that we want to keep taking observations from the dataset even after all of them have been used. Most importantly, we use dataset_padded_batch to specify that we want batches of size 32 and that they should be padded: if an observation has a different size, we pad it with zeros. The padded shape is passed to dataset_padded_batch through the padded_shapes argument, and we use NULL to state that a dimension does not need to be padded.
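The combined effect of shuffling, repeating, and padded batching can be imitated with a plain Python generator. The sketch below is a simplified stand-in for the tfdatasets calls, not their implementation: it shuffles a whole pass at once instead of using a shuffle buffer, and the helper names and toy data are hypothetical.

```python
import itertools
import random

def repeat_shuffled(observations, rng):
    """Yield observations forever, reshuffling before each pass."""
    while True:
        order = list(observations)
        rng.shuffle(order)
        yield from order

def pad_with_zeros(values, length):
    """Append zeros so that a shorter observation reaches the common length."""
    return list(values) + [0] * (length - len(values))

def padded_batches(observations, batch_size, length, seed=0):
    """Endless stream of batches whose observations all have the same length."""
    stream = repeat_shuffled(observations, random.Random(seed))
    while True:
        chunk = itertools.islice(stream, batch_size)
        yield [pad_with_zeros(obs, length) for obs in chunk]

# Ten toy observations of uneven length (hypothetical data).
toy = [[i] * (1 + i % 3) for i in range(10)]
first_three = list(itertools.islice(padded_batches(toy, batch_size=4, length=3), 3))
```

Because the stream never ends, the third batch already contains observations from a second pass over the data, which is the behaviour that repeating is meant to provide.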

This is our dataset specification, but we would need to rewrite all of the code for the validation data, so it is good practice to wrap it in a function of the data and other important parameters such as window_size_ms and window_stride_ms. Below, we define a function called data_generator that creates the generator from those inputs.

Now we can define the training and validation data generators. It’s worth noting that executing this code won’t actually compute any spectrogram or read any file. It only defines, in the TensorFlow graph, how the data should be read and pre-processed.

To actually get a batch from the generator, we can create a TensorFlow session and ask it to run the generator. For example:

    List of 2
     $ : num [1:32, 1:98, 1:257, 1] -4.6 -4.6 -4.6 ...
     $ : num [1:32, 1:30] 0 0 0 0 0 0 0 0 0 0 ...

Each time you run sess$run(batch), you should see a different batch of observations.

Model definition

Now that we know how we will feed our data, we can focus on the model definition. A spectrogram can be treated like an image, so architectures commonly used for image classification tasks should work well with spectrograms too.

We’ll build a convolutional neural network similar to one typically used for the MNIST dataset.

The input size is defined by the number of chunks and the FFT size. As explained earlier, both can be obtained from the window_size_ms and window_stride_ms used to generate the spectrogram.

We will now define our model using the Keras sequential API:

We used 4 convolutional layers combined with max-pooling layers to extract features from the spectrogram images, followed by 2 dense layers at the top. Our network is comparatively simple next to more advanced architectures such as ResNet and DenseNet, which perform very well on image recognition tasks.
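To get a feel for how four convolution and max-pooling stages shrink a spectrogram, the Python sketch below traces the tensor shape through such a stack. It is shape arithmetic only, not the model itself, and every hyperparameter in it is an assumption: 3×3 kernels without padding, stride 1, 2×2 pooling, and filter counts of 32, 64, 128, and 256. The input size of 98 × 257 matches the batch shape printed earlier in this tutorial.

```python
def conv_valid(size, kernel=3):
    """Output length of an unpadded convolution with stride 1."""
    return size - kernel + 1

def max_pool(size, pool=2):
    """Output length of non-overlapping max pooling (remainder is dropped)."""
    return size // pool

def trace_shapes(height, width, filters=(32, 64, 128, 256)):
    """Shape after each conv + pool stage, starting from a 1-channel image."""
    shapes = [(height, width, 1)]
    for n_filters in filters:
        height, width = conv_valid(height), conv_valid(width)
        height, width = max_pool(height), max_pool(width)
        shapes.append((height, width, n_filters))
    return shapes

shapes = trace_shapes(98, 257)
# Number of values handed to the first dense layer after flattening.
flattened = shapes[-1][0] * shapes[-1][1] * shapes[-1][2]
```

Under these assumptions the 98 × 257 input is reduced to a 4 × 14 grid with 256 channels, so the dense layers at the top receive 14,336 features per spectrogram.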

Now let’s compile our model. We will use categorical cross-entropy as the loss function and the Adadelta optimizer. This is also where we specify that we want to track the accuracy metric during training.
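For readers unfamiliar with the loss, categorical cross-entropy for a single observation is the negative log of the probability that the model assigns to the true class. The Python sketch below computes it for a one-hot target; the function name and the small `eps` guard against `log(0)` are choices made for this illustration, not part of the Keras API.

```python
import math

def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """Cross-entropy between a one-hot target and predicted probabilities."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))

n_classes = 30
target = [0.0] * n_classes
target[7] = 1.0  # arbitrary true class, for illustration

# A model that spreads its probability evenly over all 30 classes.
uniform = [1.0 / n_classes] * n_classes
baseline = categorical_cross_entropy(target, uniform)

# A model that is almost certain of the correct class.
confident = [0.001 / (n_classes - 1)] * n_classes
confident[7] = 0.999
low_loss = categorical_cross_entropy(target, confident)
```

The uniform guess gives a loss of log(30), roughly 3.40, which is a useful reference point when reading the per-epoch losses in the training log.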

Model fitting

Now, we’ll fit our model. In Keras, we can use TensorFlow Datasets as inputs to the fit_generator function, and we will do so here.

    Epoch 1/10 | Time taken: 87s, Loss: 2.0225, Accuracy: 41.84%, Val Loss: 0.7855, Val Acc: 79.07%
    Epoch 2/10 | Time taken: 75s, Loss: 0.8781, Accuracy: 74.32%, Val Loss: 0.4522, Val Acc: 87.04%
    Epoch 3/10 | Time taken: 75s, Loss: 0.6196, Accuracy: 81.90%, Val Loss: 0.3513, Val Acc: 90.06%
    Epoch 4/10 | Time taken: 75s, Loss: 0.4958, Accuracy: 85.43%, Val Loss: 0.3130, Val Acc: 91.17%
    Epoch 5/10 | Time taken: 75s, Loss: 0.4282, Accuracy: 87.54%, Val Loss: 0.2866, Val Acc: 92.13%
    Epoch 6/10 | Time taken: 76s, Loss: 0.3852, Accuracy: 88.85%, Val Loss: 0.2732, Val Acc: 92.52%
    Epoch 7/10 | Time taken: 75s, Loss: 0.3566, Accuracy: 89.91%, Val Loss: 0.2700, Val Acc: 92.69%
    Epoch 8/10 | Time taken: 76s, Loss: 0.3364, Accuracy: 90.45%, Val Loss: 0.2573, Val Acc: 92.84%
    Epoch 9/10 | Time taken: 76s, Loss: 0.3220, Accuracy: 90.87%, Val Loss: 0.2537, Val Acc: 93.23%
    Epoch 10/10 | Time taken: 76s, Loss: 0.2997, Accuracy: 91.50%, Val Loss: 0.2582, Val Acc: 93.23%

The model’s validation accuracy is 93.23%. Let’s learn how to make predictions and take a look at the confusion matrix.

Making predictions

We can use the predict_generator function to make predictions on a new dataset. Let’s make predictions for our validation dataset.

The predict_generator function needs a steps argument, which is the number of times the generator will be called.

We can calculate the number of steps from the batch size and the size of the validation dataset.
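The number of steps is the number of batches needed to cover every validation observation at least once, that is, the validation size divided by the batch size and rounded up. The Python sketch below shows the arithmetic. The validation size of 19,400 is a hypothetical value used only for illustration; the batch size of 32 comes from the text.

```python
import math

def prediction_steps(n_observations, batch_size):
    """Number of generator calls needed to see every observation at least once."""
    return math.ceil(n_observations / batch_size)

batch_size = 32
n_validation = 19_400  # hypothetical validation set size, for illustration only

n_steps = prediction_steps(n_validation, batch_size)
n_rows = n_steps * batch_size          # rows in the prediction matrix
n_repeated = n_rows - n_validation     # rows that come from repeating the dataset
```

Rounding up means that the last batch is completed with repeated observations, so the prediction matrix can have slightly more rows than the validation set and the surplus rows should be dropped before scoring.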

We can then use the predict_generator function:

    num [1:19424, 1:30] 1.22e-13 7.30e-19 5.29e-10 6.66e-22 1.12e-17 ...

This outputs a matrix with 30 columns, one for each word, and n_steps*batch_size rows. Note that the generator starts repeating the dataset towards the end in order to form a complete batch.

We can compute the predicted class by taking the column with the highest probability.
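Taking the column with the highest probability is an argmax over each row of the prediction matrix. The NumPy sketch below does that, drops the surplus rows produced by the repeated final batch, and tallies a confusion matrix. The function names and the tiny three-class example are hypothetical and serve only to illustrate the steps.

```python
import numpy as np

def predicted_classes(probabilities, n_observations):
    """Index of the most probable class per row, ignoring repeated padding rows."""
    probabilities = np.asarray(probabilities)[:n_observations]
    return probabilities.argmax(axis=1)

def confusion_matrix(true_ids, predicted_ids, n_classes):
    """counts[i, j] = number of observations of class i predicted as class j."""
    counts = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(counts, (true_ids, predicted_ids), 1)
    return counts

# Toy prediction matrix: 3 real observations plus 1 repeated row at the end.
probs = np.array([
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.3, 0.3, 0.4],
    [0.7, 0.2, 0.1],  # repeat of the first observation, to fill the batch
])
true_ids = np.array([0, 2, 2])

pred_ids = predicted_classes(probs, n_observations=len(true_ids))
cm = confusion_matrix(true_ids, pred_ids, n_classes=3)
accuracy = np.trace(cm) / cm.sum()  # correct predictions lie on the diagonal
```

Off-diagonal cells of `cm` are the misclassifications, which is the information a confusion-matrix plot visualizes.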

A nice way to visualize the confusion matrix is an alluvial diagram.

As the diagram shows, the most relevant mistake our model makes is classifying “tree” as “three”. Other common errors include classifying “go” as “no” and “up” as “off”. At 93% accuracy across 30 classes, and considering the kinds of errors it makes, we can say that this model is pretty reasonable.

The saved model occupies approximately 25 MB of disk space, which is reasonable for a desktop computer but may not be for small devices. We could train a smaller model, with fewer layers, and see how much the performance drops.

In speech recognition tasks, it is also common to apply data augmentation by mixing background noise into the spoken audio, which makes the model more useful in real applications where other, irrelevant sounds are often present in the environment.
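A minimal version of this kind of augmentation is sketched below in NumPy: a random segment of a longer noise recording is scaled and added to the speech clip. The function name `mix_background`, the volume of 0.1, the clipping to the range [-1, 1], and the synthetic signals are all assumptions made for illustration; in practice the noise would come from recordings such as those in _background_noise_.

```python
import numpy as np

def mix_background(speech, noise, volume, rng):
    """Add a randomly chosen, scaled segment of `noise` to `speech`."""
    # Pick a random start so that the segment fits inside the noise recording.
    start = rng.integers(0, len(noise) - len(speech) + 1)
    segment = noise[start : start + len(speech)]
    # Keep the result inside the usual [-1, 1] range of floating-point audio.
    return np.clip(speech + volume * segment, -1.0, 1.0)

rng = np.random.default_rng(0)
sample_rate = 16_000

# Synthetic stand-ins for a one-second word and a five-second noise recording.
speech = 0.5 * np.sin(2 * np.pi * 440 * np.arange(sample_rate) / sample_rate)
noise = rng.uniform(-1.0, 1.0, size=5 * sample_rate)

augmented = mix_background(speech, noise, volume=0.1, rng=rng)
```

Drawing a new start position and, optionally, a new volume for each training example means that the model rarely sees exactly the same noisy clip twice.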

The full code needed to reproduce this tutorial is available.