Migrating data warehouse workloads is one of the most challenging yet essential tasks an organization can take on. Whether the driver is the need to scale or the desire to cut the licensing and hardware costs of legacy systems, a migration involves far more than moving files. At Databricks, our Professional Services (PS) team has worked with numerous customers and partners on migration projects and has a strong track record of successful migrations. This post covers the best practices and lessons learned that data professionals should consider when planning and executing a migration.

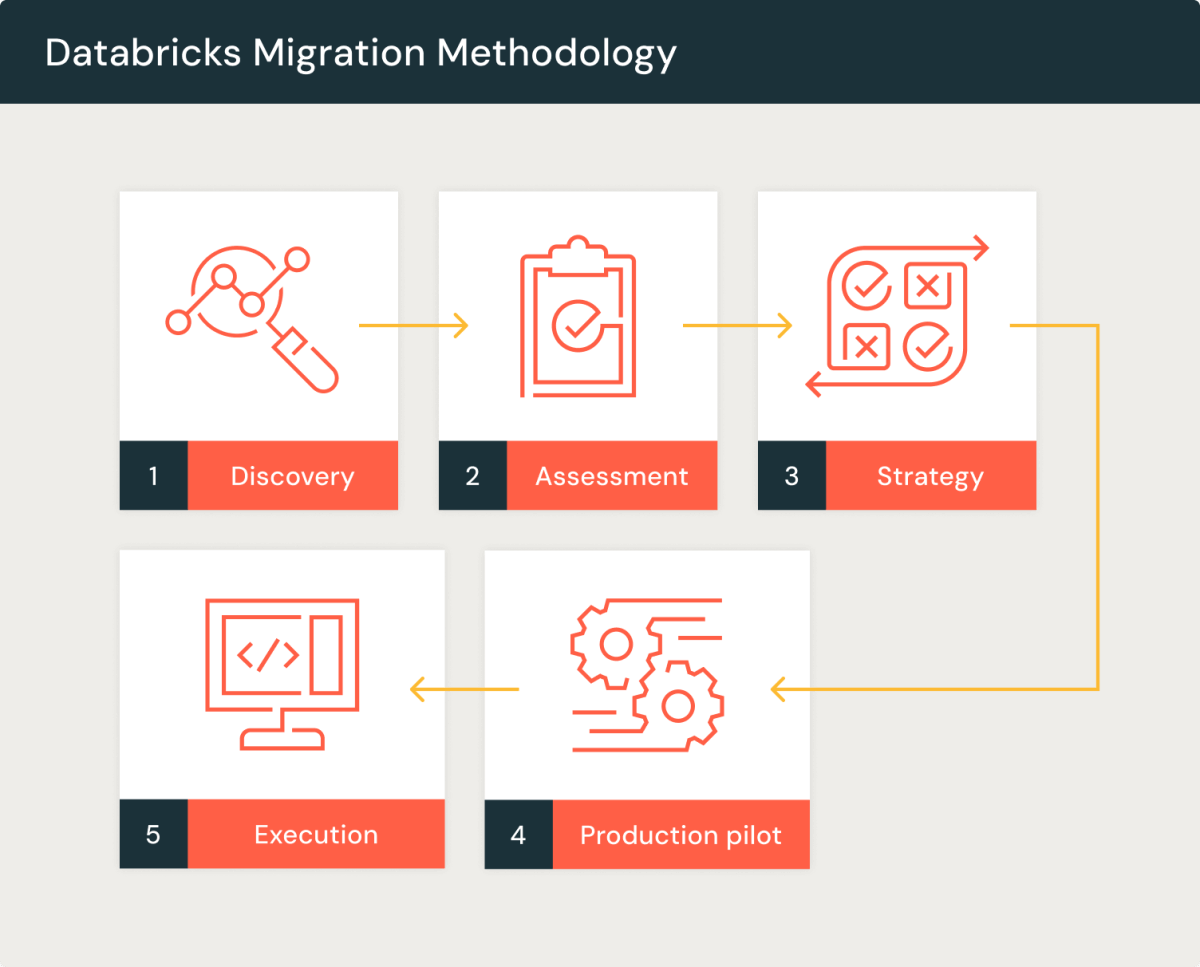

At Databricks, we have developed a five-phase process for migration projects, based on our experience and expertise.

Before starting any migration project, we begin with the discovery phase. In this phase, we aim to understand the reasons behind the migration and the challenges posed by the existing legacy system. We also highlight the benefits of moving workloads to the Databricks Data Intelligence Platform. The discovery phase involves collaborative Q&A sessions and architectural discussions with key stakeholders from the customer and Databricks. In addition, we use an automated discovery profiler to gain insight into the legacy workloads and to estimate consumption costs on the Databricks Platform, from which we calculate the reduction in Total Cost of Ownership (TCO).

After completing the discovery phase, we move on to a more in-depth assessment. During this stage, we use automated analyzers to evaluate the complexity of the existing code and to obtain a high-level estimate of the effort and resources the migration will require. This process provides valuable insight into the architecture of the current data platform and the applications it supports. It also helps us refine the scope of the migration, eliminate obsolete assets, and begin thinking about the target architecture.

In the migration strategy and design phase, we finalize the details of the target architecture and produce the detailed design for data migration, extract-transform-load (ETL), stored procedure code translation, and report and business intelligence (BI) modernization. At this stage, we also map the source assets to their counterparts on the target platform. Once the migration strategy is finalized, including the target architecture, migration patterns, tooling, and selected delivery partners, Databricks PS, together with the chosen Systems Integrator (SI) partner, prepares a migration Statement of Work (SOW) covering the Pilot (Phase I) or multiple phases of the project. Databricks has several certified partners who provide automated tooling to help ensure successful migrations. In addition, Databricks PS can provide Migration Assurance services alongside an SI partner.

Once the Statement of Work (SOW) is signed, Databricks Professional Services (PS) or the chosen delivery partner carries out the production pilot phase. In this phase, a clearly defined end-to-end use case is migrated from the legacy platform to Databricks. The data, code, and reports are migrated using automated tools and code converters. Best practices are documented, and a sprint retrospective captures the lessons learned and identifies areas for improvement. A Databricks onboarding guide is created to serve as the blueprint for the remaining phases, which are typically executed in parallel sprints by agile Scrum teams.

Finally, we move on to the full-scale migration execution phase. We repeat the pilot approach, incorporating all the lessons learned. This helps establish a data engineering Center of Excellence (CoE) within the organization and allows the work to scale through collaboration between customer teams, certified SI partners, and our Professional Services team, bringing the migration expertise needed for success.

Lessons learned

During the strategy phase, it is crucial to fully understand your business's data landscape. It is equally important to test a few specific end-to-end use cases during the production pilot phase. However well you plan, some issues only surface during implementation, and facing them early gives you time to find solutions. A good way to choose a pilot use case is to start from the end goal: pick a reporting dashboard that is important to your business, work out the data and processes needed to produce it, and then try to build the same dashboard on your target platform as a test. This gives you a good preview of what the full migration will involve.
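Working backwards from a dashboard amounts to walking its dependencies upstream. The following is a minimal sketch of that idea, assuming the lineage is available as a simple mapping from each asset to the assets it reads from. The asset names and the `pilot_scope` helper are hypothetical and only illustrate the approach.

```python
from collections import deque

# Hypothetical lineage: each asset maps to the assets it reads from.
LINEAGE = {
    "sales_dashboard": ["rpt_daily_sales"],
    "rpt_daily_sales": ["fact_orders", "dim_customer"],
    "fact_orders": ["stg_orders"],
    "dim_customer": ["stg_customers"],
    "stg_orders": [],
    "stg_customers": [],
    "hr_dashboard": ["dim_employee"],
    "dim_employee": [],
}


def pilot_scope(target, lineage):
    """Return every asset the target depends on, directly or indirectly."""
    seen = set()
    queue = deque([target])
    while queue:
        asset = queue.popleft()
        for upstream in lineage.get(asset, []):
            if upstream not in seen:
                seen.add(upstream)
                queue.append(upstream)
    return sorted(seen)


scope = pilot_scope("sales_dashboard", LINEAGE)
print(scope)
```

Everything in `scope` has to be migrated for the pilot dashboard to work, while unrelated assets such as `dim_employee` can wait for a later phase.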

To understand the scope of the migration, we begin with questionnaires and interviews with the database administrators. In addition, our automated platform profilers scan database data dictionaries and Hadoop system metadata to give us data-driven figures on CPU utilization, the share of ETL versus BI usage, and usage patterns by different users and service principals. This information is used to estimate the cost of running the workload on Databricks. Code complexity analyzers are also valuable: they report the number of Data Definition Language (DDL) statements, Data Manipulation Language (DML) statements, stored procedures, and other ETL jobs to be migrated, along with a complexity classification for each. This helps us determine migration costs and timelines.
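To make the idea of a complexity inventory concrete, here is a toy sketch that labels each statement by type and assigns it a complexity tier. It is not how any Databricks analyzer works: the keyword lists, scoring weights, and thresholds are assumptions chosen for illustration, and a real analyzer would parse the source SQL dialect properly.

```python
import re
from collections import Counter

# Assumed keyword groups for illustration only.
DDL = ("CREATE TABLE", "ALTER TABLE", "DROP TABLE", "CREATE VIEW")
DML = ("INSERT", "UPDATE", "DELETE", "MERGE")
PROC = ("CREATE PROCEDURE", "CREATE OR REPLACE PROCEDURE")


def object_type(sql):
    """Classify a statement by how it starts, after normalizing whitespace."""
    text = " ".join(sql.upper().split())
    if text.startswith(PROC):
        return "stored_procedure"
    if text.startswith(DDL):
        return "ddl"
    if text.startswith(DML):
        return "dml"
    return "other"


def complexity(sql):
    """Toy score: line count plus constructs that tend to need manual rework."""
    text = sql.upper()
    score = len(text.splitlines())
    score += 5 * len(re.findall(r"\b(CURSOR|LOOP|WHILE|EXECUTE IMMEDIATE)\b", text))
    score += 2 * len(re.findall(r"\bJOIN\b", text))
    if score < 10:
        return "low"
    if score < 30:
        return "medium"
    return "high"


def inventory(statements):
    """Count statements per (type, complexity tier)."""
    return Counter((object_type(s), complexity(s)) for s in statements)


PROC_SQL = "\n".join([
    "CREATE PROCEDURE load_orders AS",
    "BEGIN",
    "  DECLARE c CURSOR FOR SELECT id FROM stg_orders;",
    "  WHILE 1 = 1 LOOP",
    "    EXECUTE IMMEDIATE 'DELETE FROM tmp';",
    "  END LOOP;",
    "END",
])

samples = [
    "CREATE TABLE orders (id INT, amount DECIMAL(10, 2))",
    "INSERT INTO orders SELECT * FROM stg_orders o JOIN dim_customer c ON o.cid = c.id",
    PROC_SQL,
]

summary = inventory(samples)
for (kind, tier), count in sorted(summary.items()):
    print(kind, tier, count)
```

Counts per type and tier like these are the kind of input that effort and timeline estimates can be built on.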

Using automated code conversion tools is essential to speed up the migration and reduce costs. These tools help convert legacy code, such as stored procedures or ETL, to Databricks SQL. This helps ensure that no business rules or functions implemented in the legacy code are overlooked because of missing documentation. The conversion process also typically saves developers up to 80% of development time, so they can promptly review the converted code, make the necessary adjustments, and focus on unit testing. It is important to confirm that the automated tooling can convert not only database code but also ETL code from legacy GUI-based platforms.

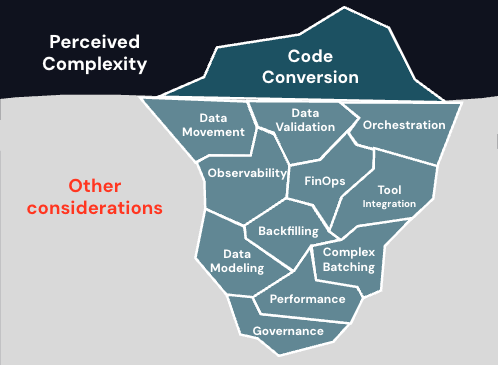

Migrations often give the misleading impression of being a clearly defined project. When we think about a migration, we usually focus on converting code from the source engine to the target. However, it is important not to overlook the other details needed to make the new platform usable.

For example, it is crucial to finalize the approach for data migration, just as for code migration and conversion. Data migration can be done with Databricks itself, connecting to the relevant data sources where applicable, or with one of the other available tooling options. During the development phase, it may be necessary to run historical and catch-up loads from the legacy enterprise data warehouse (EDW) while simultaneously building the data ingestion from the actual sources to Databricks. You also need a well-defined orchestration strategy for scheduling and coordinating your pipelines. Finally, your migrated data platform should be aligned with your software development practices before the migration is considered complete.

Governance and security are other components that are often overlooked when scoping and designing a migration. Regardless of your existing governance practices, we recommend using Unity Catalog on Databricks as the single place for centralized access control, auditing, lineage, and data discovery. Keep in mind that migrating to and enabling Unity Catalog adds to the effort required for the overall migration, so plan for it. It is also worth exploring the unique capabilities that some of our partners provide.

Proper data validation and active participation from business subject matter experts (SMEs) during User Acceptance Testing (UAT) are crucial to the project's success. The Databricks migration team and our certified System Integrators (SIs) use parallel testing and data reconciliation tools to verify that the migrated data meets data quality standards without discrepancies. Strong alignment with executives ensures timely and focused participation from business SMEs during UAT, which enables a quick transition to production and agreement on decommissioning older systems once the new platform is operational.
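As an illustration of what data reconciliation checks for, the sketch below compares a legacy extract against its migrated counterpart by key and by row hash. It is a simplified, in-memory example rather than any specific reconciliation tool: the `table_fingerprint` and `reconcile` helpers and the sample rows are hypothetical, and production tooling would typically push this comparison down to both engines.

```python
import hashlib


def table_fingerprint(rows, key_index=0):
    """Map each row's key to a hash of its full contents."""
    fingerprint = {}
    for row in rows:
        # Toy serialization: None and empty string are treated the same here.
        payload = "|".join("" if v is None else str(v) for v in row)
        digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()
        fingerprint[row[key_index]] = digest
    return fingerprint


def reconcile(legacy_rows, target_rows, key_index=0):
    """Report keys that are missing, unexpected, or differ between the two sides."""
    legacy = table_fingerprint(legacy_rows, key_index)
    target = table_fingerprint(target_rows, key_index)
    shared = legacy.keys() & target.keys()
    return {
        "missing_in_target": sorted(legacy.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - legacy.keys()),
        "mismatched": sorted(k for k in shared if legacy[k] != target[k]),
    }


legacy = [(1, "alice", 10.0), (2, "bob", 20.0), (3, "carol", 30.0)]
target = [(1, "alice", 10.0), (2, "bob", 25.0), (4, "dave", 40.0)]

report = reconcile(legacy, target)
print(report)
```

An empty report across all three categories is the signal that a table is ready for SME sign-off. Any non-empty category points to rows worth investigating during parallel testing.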

Implement sound operational practices, such as data quality frameworks, exception handling, reprocessing, and data pipeline observability controls, to capture and report process metrics. This helps you identify and report deviations or delays so that corrective action can be taken immediately. Databricks provides features such as monitoring metrics and system tables to support observability and Financial Operations (FinOps) tracking.
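The combination of exception handling, reprocessing, and metric capture can be sketched in a few lines. The example below is a generic illustration, not a Databricks API: `run_with_metrics`, the metric fields, and the `flaky_load` step are hypothetical names, and a real pipeline would write these records to a table or monitoring system instead of a list.

```python
import time


def run_with_metrics(name, step, max_attempts=3, metrics=None):
    """Run a pipeline step, retrying on failure and recording each attempt."""
    metrics = metrics if metrics is not None else []
    for attempt in range(1, max_attempts + 1):
        started = time.perf_counter()
        try:
            rows = step()
            status, error = "succeeded", None
        except Exception as exc:  # record the failure instead of crashing the run
            rows, status, error = 0, "failed", str(exc)
        metrics.append({
            "step": name,
            "attempt": attempt,
            "status": status,
            "rows": rows,
            "seconds": time.perf_counter() - started,
            "error": error,
        })
        if status == "succeeded":
            break
    return metrics


# Hypothetical step that fails once before succeeding.
calls = {"n": 0}


def flaky_load():
    calls["n"] += 1
    if calls["n"] == 1:
        raise RuntimeError("source not ready")
    return 1200


log = run_with_metrics("load_orders", flaky_load)
for record in log:
    print(record["step"], record["attempt"], record["status"], record["rows"])
```

Because every attempt is recorded, including failed ones, the same log can drive alerts on deviations and delays as well as later reporting.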

Migrations can be challenging. There will always be trade-offs to balance and unexpected issues and delays to manage. You need proven partners and solutions for the people, process, and technology aspects of the migration. We recommend trusting the experts at Databricks Professional Services and our certified migration partners, who have extensive experience delivering high-quality migration solutions on time. Reach out to get your migration assessment started.