Neural networks have had a major impact on how engineers design controllers for robots, producing more adaptive and efficient machines. Still, these brain-like machine-learning systems are a double-edged sword: the complexity that makes them powerful also makes it difficult to guarantee that a robot driven by a neural network will complete its task safely.

The traditional way to verify safety and stability is through Lyapunov functions. If you can find a Lyapunov function whose value consistently decreases as the system evolves, you can conclude that the unsafe or unstable situations associated with higher values will never occur. For robots controlled by neural networks, however, prior approaches to verifying Lyapunov conditions did not scale well to complex machines.
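For readers who want the underlying condition, the standard textbook form for a discrete-time system is sketched below. The notation is ours and is an assumption, not taken from the paper: the state evolves as $x_{t+1} = f(x_t, u_t)$ under a controller $u_t = \pi(x_t)$, $x^{*}$ is the target equilibrium (for example, the hover state), and $\mathcal{S}$ is the region of states being certified. The researchers' formulation may use a stronger decrease condition than the one shown.

$$
\begin{aligned}
&V(x^{*}) = 0, \\
&V(x) > 0 \quad \text{for all } x \in \mathcal{S},\ x \neq x^{*}, \\
&V\big(f(x, \pi(x))\big) - V(x) < 0 \quad \text{for all } x \in \mathcal{S},\ x \neq x^{*}.
\end{aligned}
$$

Because $V$ strictly decreases at every step, a trajectory that starts inside a sublevel set $\{x : V(x) \le c\}$ lying within $\mathcal{S}$ can never climb to a higher value of $V$, so it stays in that set and, under the usual continuity assumptions, approaches $x^{*}$.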

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and elsewhere have now developed techniques that rigorously certify Lyapunov calculations in more elaborate systems. Their algorithm efficiently searches for and verifies a Lyapunov function, providing a stability guarantee for the system. The approach could enable safer deployment of robots and autonomous vehicles, including aircraft and spacecraft.

To outperform previous algorithms, the researchers found a frugal shortcut in the training and verification process. They generated cheaper counterexamples, such as adversarial sensor data that could have thrown off the controller, and then optimized the robotic system to account for them. Understanding these edge cases helped the machines learn to handle challenging circumstances, which let them operate safely in a wider range of conditions than was previously possible. The researchers then developed a new verification formulation that makes it possible to use a scalable neural network verifier, α,β-CROWN, to provide rigorous worst-case guarantees that go beyond the counterexamples.
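The train-on-counterexamples, then-verify loop can be illustrated on a toy problem. The sketch below is ours, not the researchers' code, and every name in it is hypothetical: a two-parameter quadratic `V(x) = x1^2 + p2 * x2^2` stands in for the neural Lyapunov function, random sampling stands in for the adversarial search that produces cheap counterexamples, and an exact matrix test (valid only because the made-up closed-loop system `A` is linear and `V` is quadratic) stands in for α,β-CROWN.

```python
import random

# Hypothetical closed-loop dynamics x_{k+1} = A x_k (controller already folded in).
# A is upper triangular with both eigenvalues 0.5, so the system is stable.
A = [[0.5, 1.0],
     [0.0, 0.5]]


def step(x):
    """Apply the closed-loop dynamics once."""
    return (A[0][0] * x[0] + A[0][1] * x[1],
            A[1][0] * x[0] + A[1][1] * x[1])


def V(x, p2):
    """Candidate Lyapunov function V(x) = x1^2 + p2 * x2^2 (coefficient of x1^2 fixed to 1)."""
    return x[0] ** 2 + p2 * x[1] ** 2


def find_counterexamples(p2, n=500, margin=0.01):
    """Cheap falsifier: random states where V fails to decrease by at least margin * |x|^2."""
    bad = []
    for _ in range(n):
        x = (random.uniform(-1, 1), random.uniform(-1, 1))
        y = step(x)
        norm2 = x[0] ** 2 + x[1] ** 2
        if V(y, p2) - V(x, p2) > -margin * norm2:
            bad.append((x, y))
    return bad


def verify(p2):
    """Exact check for this toy case: M = A^T P A - P must be negative definite."""
    (a, b), (c, d) = A
    m11 = a * a + c * c * p2 - 1.0
    m22 = b * b + d * d * p2 - p2
    m12 = a * b + c * d * p2
    # A symmetric 2x2 matrix is negative definite iff m11 < 0 and det > 0.
    return m11 < 0 and m11 * m22 - m12 * m12 > 0


def train(lr=0.5, max_iters=100, seed=0):
    """Adjust p2 on sampled counterexamples until none are found, then verify."""
    random.seed(seed)
    p2 = 1.0  # initial guess V(x) = |x|^2, which is NOT a Lyapunov function for this A
    for _ in range(max_iters):
        bad = find_counterexamples(p2)
        if not bad:
            break
        # Gradient of the mean hinge violation with respect to p2 is mean(y2^2 - x2^2).
        grad = sum(y[1] ** 2 - x[1] ** 2 for x, y in bad) / len(bad)
        p2 -= lr * grad
    return p2, verify(p2)


if __name__ == "__main__":
    print(train())
```

Fixing the coefficient of `x1^2` at 1 is harmless, since any positive multiple of a Lyapunov function is still one. For this `A`, the exact test passes only when `p2 > 16/9`, so the initial guess fails and the loop has to raise `p2`. The sampling margin (0.01, an arbitrary choice) pushes training slightly past that boundary, so the final verification step does not land just short of it.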

AI-controlled machines such as humanoids and robotic dogs have shown impressive empirical performance, but these controllers lack the formal guarantees that are crucial for safety-critical systems, notes Lujie Yang, a PhD student in MIT’s Department of Electrical Engineering and Computer Science and a CSAIL affiliate. “Our work bridges the gap between that level of performance from neural network controllers and the safety guarantees needed to deploy more complex neural network controllers in the real world,” says Yang.

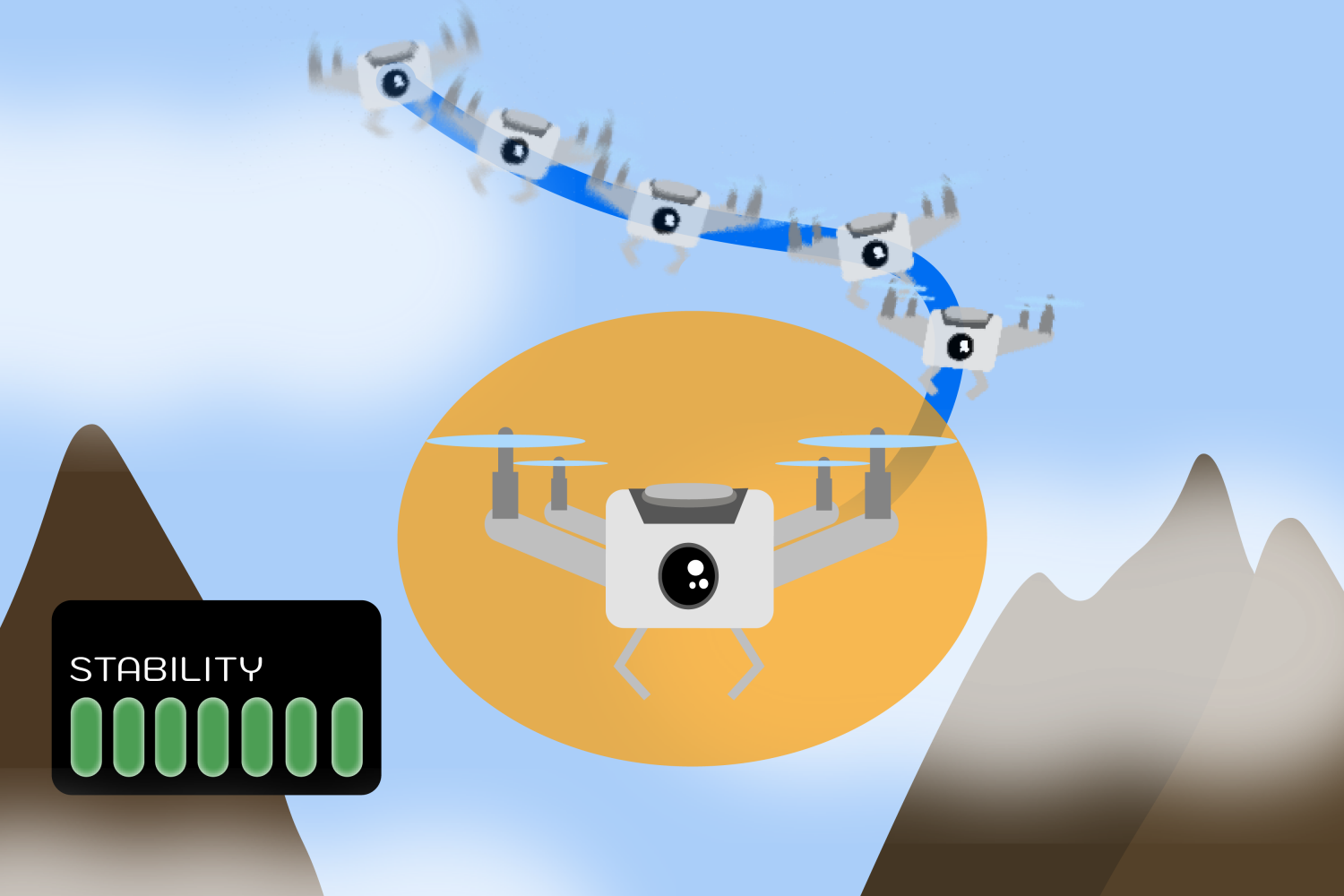

For a digital demonstration, the team simulated how a quadrotor drone with lidar sensors would stabilize in a two-dimensional environment. Their algorithm guided the drone to a stable hover using only the limited environmental information provided by the lidar sensors. In two other experiments, the approach enabled stable operation of two simulated robotic systems, an inverted pendulum and a path-tracking vehicle, over a wider range of conditions. Though modest, these experiments are more complex than what the neural network verification community could previously handle, especially because they include sensor models.

“Unlike common machine learning problems, the rigorous use of neural networks as Lyapunov functions requires solving hard global optimization problems, and thus scalability is the key bottleneck,” says Sicun Gao, associate professor of computer science and engineering at the University of California at San Diego. According to Gao, the work makes an important contribution by developing algorithms tailored to the particular use of neural networks as Lyapunov functions in control problems, and it substantially improves scalability and solution quality over existing approaches. He adds that it opens up new directions for optimization algorithms in neural Lyapunov methods and, more broadly, for the rigorous use of deep learning in control and robotics.

The researchers’ stability approach has potential applications wherever guaranteeing safety is crucial. It could help ensure a smoother ride for autonomous vehicles such as aircraft and spacecraft. Likewise, drones that deliver items or map terrain could benefit from such safety guarantees.

The techniques are very general and not specific to robotics; in the future, they could also assist with applications in other fields, such as biomedicine and industrial processing.

While the technique scales better than prior work, the researchers are exploring how to make it perform better in systems with higher dimensions. They would also like to account for data beyond lidar readings, such as images and point clouds.

As a future research direction, the team would like to provide the same stability guarantees for systems in uncertain environments that are subject to disturbances. For instance, if a drone faces a strong gust of wind, Yang and her colleagues want to ensure it still flies steadily and completes its task.

They also intend to apply their method to optimization problems, where the goal is to minimize the time and distance a robot needs to complete a task while remaining stable. In addition, they plan to extend their technique to humanoids and other real-world machines, where a robot needs to stay stable while making contact with its surroundings.

Russ Tedrake, the Toyota Professor of Electrical Engineering and Computer Science, Aeronautics and Astronautics, and Mechanical Engineering at MIT, vice president of robotics research at the Toyota Research Institute (TRI), and a CSAIL member, is a senior author of the research. The paper also credits Zhouxing Shi, a PhD student at the University of California at Los Angeles, and Cho-Jui Hsieh, an associate professor at the same institution, as well as Huan Zhang, an assistant professor at the University of Illinois Urbana-Champaign. The work was supported, in part, by Amazon, the National Science Foundation, the Office of Naval Research, and the AI2050 program at Schmidt Sciences. The researchers’ paper will be presented at the 2024 International Conference on Machine Learning.