We’ve all seen AI write essays, compose music, and even paint jaw-dropping portraits. But there may be one other frontier that’s far more thrilling – AI-generated movies. Think about stepping right into a film scene, sending an animated greeting, or witnessing a historic reenactment, all crafted by AI. Till now, most of us had been simply curious spectators, giving directions and hoping for the best output. However what if you happen to might transcend that and construct your personal video technology webapp?

That’s precisely what I did with Time Capsule. Right here is the way it works: you add a photograph, choose a time interval, choose a occupation, and similar to that, you might be transported into the previous with a customized picture and a brief video. Easy, proper? However the true magic occurred after I took this concept to the Knowledge Hack Summit, essentially the most futuristic AI convention in India.

We turned Time Capsule right into a GenAI playground sales space designed purely for enjoyable and engagement. It grew to become the favorite sales space not only for attendees, however for audio system and GenAI leaders too. Watching individuals’s faces gentle up as they noticed themselves as astronauts, kings, or Victorian-era students jogged my memory why constructing with AI is so thrilling.

So I believed, why not share this concept with the beautiful viewers of AnalyticsVidhya. Buckle up, as I take you behind the scenes of how Time Capsule went from an concept to an interactive video technology webapp.

The Idea of a Video Technology WebApp (With Time Capsule Instance)

At its core, a video technology webapp is any utility that takes consumer enter and transforms it into a brief, AI-created video. The enter might be a selfie, textual content, or a number of easy selections. The AI then turns them into shifting visuals that really feel distinctive and private.

Each video technology app works by means of three foremost blocks:

- Enter: What the consumer gives – this might be a photograph, textual content, or picks.

- Transformation: The AI interprets the enter and creates visuals.

- Output: The ultimate outcome, delivered as a video (and typically a picture too).

The actual energy lies in personalization: Generic AI movies on-line might be enjoyable, however movies starring you immediately develop into extra partaking and memorable.

Ideas like Time Capsule thrive as a result of they don’t simply generate random clips, they generate your story, or on this case, your journey by means of time.

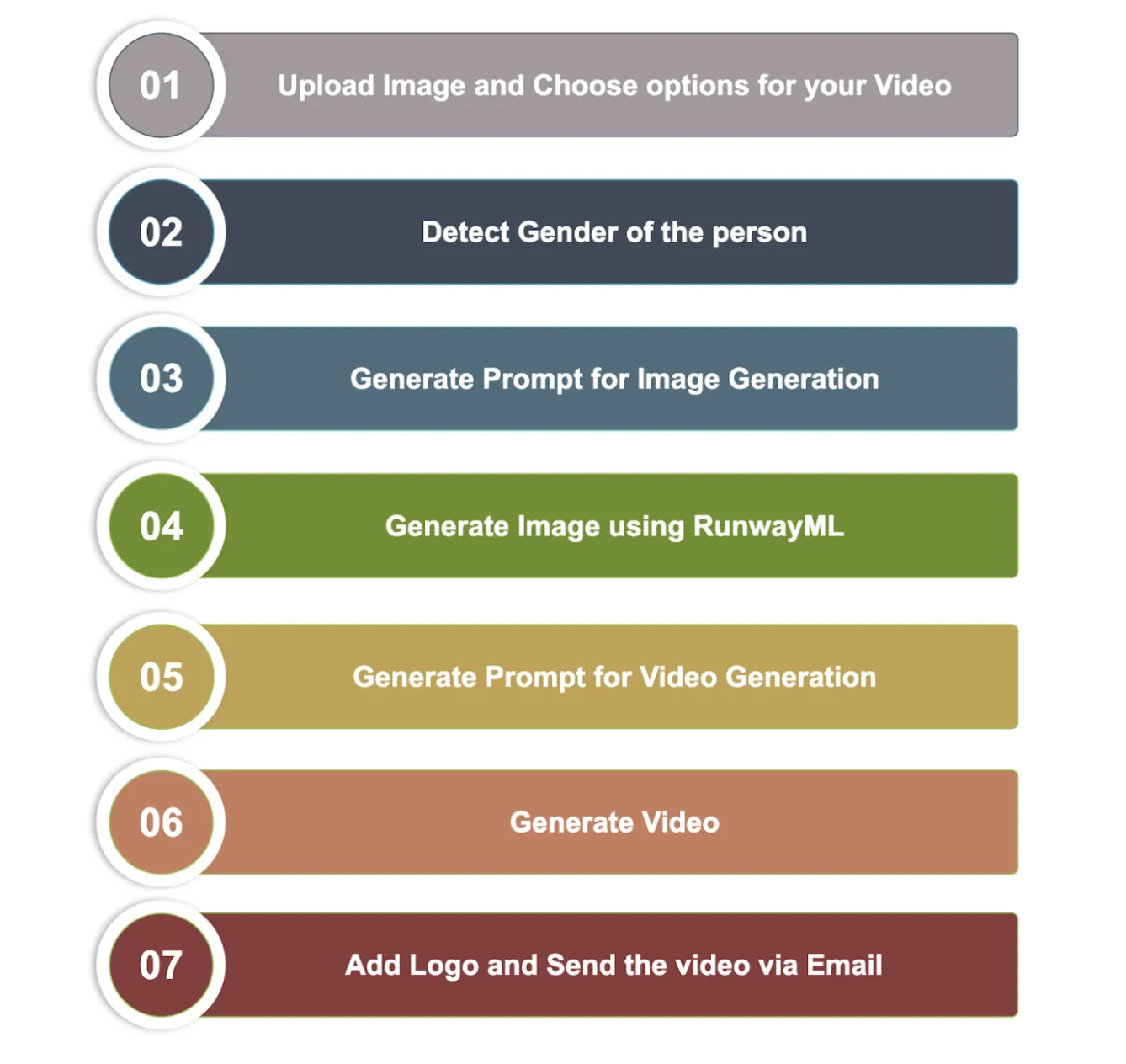

How Time Capsule Works

Right here is the brief and simple means during which Time Capsule, our video technology webapp, works.

- Add a photograph of your self.

- Choose ethnicity, time interval, occupation, and motion.

- AI generates a customized portrait and brief video.

As soon as achieved, you obtain your personal time-travel expertise, whether or not as a Roman gladiator, a Renaissance artist, or perhaps a futuristic explorer.

Now that you simply’ve seen how the method works, it’s time to begin constructing your personal ‘Time Capsule’.

Applied sciences Utilized in TimeCapsule

Listed below are all of the applied sciences utilized in constructing our very personal video technology webapp – TimeCapsule.

Programming Language

- Python: Core language for scripting the applying and integrating AI providers.

AI & Generative Fashions

- OpenAI API: For enhancing prompts and producing text-based steerage for photographs and movies.

- Google Gemini (genai): For picture evaluation (e.g., gender detection) and generative duties.

- RunwayML: AI picture technology from prompts and reference photographs.

- fal_client (FAL AI): Accessing Seeddance professional mannequin for video technology from a single picture and motion immediate.

Pc Imaginative and prescient

- OpenCV (cv2): Capturing photographs from a webcam and processing video frames.

- Pillow (PIL): Dealing with photographs, overlays, and including a emblem to movies.

- NumPy: Array manipulation for photographs and frames throughout video processing.

E-mail Integration

- Yagmail: Sending emails with attachments (generated picture and video).

Utilities & System

- Requests: Downloading generated photographs and movies through HTTP requests.

- uuid: Producing distinctive identifiers for information.

- os: Listing creation, file administration, and atmosphere entry.

- dotenv: Loading API keys and credentials from .env information.

- datetime: Timestamping generated information.

- base64: Encoding photographs for API uploads.

- enum: Defining structured choices for ethnicity, time interval, occupation, and actions.

- re: Sanitizing and cleansing textual content prompts for AI enter.

The best way to Make Your Personal Time Capsule

Now that you already know all the weather that make the Time Capsule attainable, right here is the precise, step-by-step blueprint to make your personal video-generation webapp.

1. Import All Libraries

You’ll first must import all crucial libraries for the challenge.

import cv2 import os import uuid import base64 import requests import yagmail import fal_client import numpy as np from PIL import Picture import google.generativeai as genai from enum import Enum from dotenv import load_dotenv from openai import OpenAI from datetime import datetime import time import re from runwayml import RunwayML # Load atmosphere variables load_dotenv()2. Enter from Consumer

The method of the net app begins with the consumer importing a private photograph. This photograph types the muse of the AI-generated character. Customers then choose ethnicity, time interval, occupation, and motion, offering structured enter that guides the AI. This ensures the generated picture and video are customized, contextually correct, and visually partaking.

Seize Picture

The capture_image methodology makes use of OpenCV to take a photograph from the consumer’s digital camera. Customers can press SPACE to seize or ESC to cancel. It consists of fallbacks for instances when the digital camera GUI isn’t out there, routinely capturing a picture if wanted. Every photograph is saved with a novel filename to keep away from overwriting.

1. Initialize Digicam

Listed below are the steps to initialize the digital camera.

cap = cv2.VideoCapture(0)- Opens the default digital camera (gadget 0).

- Checks if the digital camera is accessible; if not, prints an error and exits.

2. Begin Seize Loop

whereas True: ret, body = cap.learn()- Constantly reads frames from the digital camera.

- ret is True if a body is efficiently captured.

- The body incorporates the precise picture knowledge.

3. Show the Digicam Feed

Strive: cv2.imshow('Digicam - Press SPACE to seize, ESC to exit', body) key = cv2.waitKey(1) & 0xFF besides cv2.error as e: # If GUI show fails, use automated seize after delay print("GUI show not out there. Utilizing automated seize...") print("Capturing picture in 3 seconds...") time.sleep(3) key = 32 # Simulate SPACE key press- Reveals the reside digital camera feed in a window.

- Waits for consumer enter:

– SPACE (32) → Seize the picture.

– ESC (27) → Cancel seize. - Fallback: If the GUI show fails (e.g., working in a headless atmosphere), the code waits 3 seconds and routinely captures the picture.

4. Save the Picture

unique_id = str(uuid.uuid4()) timestamp = datetime.now().strftime("%Ypercentmpercentd_percentHpercentMpercentS") filename = f"captured_{timestamp}_{unique_id}.jpg" filepath = os.path.be part of('captured_images', filename) # Save the picture cv2.imwrite(filepath, body)Right here is the whole code to seize the picture, in a single piece:

def capture_image(self): """Seize picture utilizing OpenCV with fallback strategies""" print("Initializing digital camera...") cap = cv2.VideoCapture(0) if not cap.isOpened(): print("Error: Couldn't open digital camera") return None attempt: print("Digicam prepared! Press SPACE to seize picture, ESC to exit") whereas True: ret, body = cap.learn() if not ret: print("Error: Couldn't learn body") break # Attempt to show the body attempt: cv2.imshow('Digicam - Press SPACE to seize, ESC to exit', body) key = cv2.waitKey(1) & 0xFF besides cv2.error as e: # If GUI show fails, use automated seize after delay print("GUI show not out there. Utilizing automated seize...") print("Capturing picture in 3 seconds...") # import time time.sleep(3) key = 32 # Simulate SPACE key press if key == 32: # SPACE key # Generate UUID for distinctive filename unique_id = str(uuid.uuid4()) timestamp = datetime.now().strftime("%Ypercentmpercentd_percentHpercentMpercentS") filename = f"captured_{timestamp}_{unique_id}.jpg" filepath = os.path.be part of('captured_images', filename) # Save the picture cv2.imwrite(filepath, body) print(f"Picture captured and saved as: {filepath}") break elif key == 27: # ESC key print("Seize cancelled") filepath = None break besides Exception as e: print(f"Error throughout picture seize: {e}") # Fallback: seize with out GUI print("Making an attempt fallback seize...") attempt: ret, body = cap.learn() if ret: unique_id = str(uuid.uuid4()) timestamp = datetime.now().strftime("%Ypercentmpercentd_percentHpercentMpercentS") filename = f"captured_{timestamp}_{unique_id}.jpg" filepath = os.path.be part of('captured_images', filename) cv2.imwrite(filepath, body) print(f"Fallback picture captured and saved as: {filepath}") else: filepath = None besides Exception as e2: print(f"Fallback seize additionally failed: {e2}") filepath = None lastly: cap.launch() attempt: cv2.destroyAllWindows() besides: cross # Ignore if GUI cleanup fails return filepathOutput:

Select ethnicity, Time interval, Career, and Motion

The get_user_selections methodology permits customers to customise their character by selecting from the next choices: ethnicity, time interval, occupation, and motion. Choices are displayed with numbers, and the consumer inputs their selection. The picks are returned and used to create a customized picture and video.

Listed below are all of the choices out there to select from:

class EthnicityOptions(Enum): CAUCASIAN = "Caucasian" AFRICAN = "African" ASIAN = "Asian" HISPANIC = "Hispanic" MIDDLE_EASTERN = "Center Japanese" MIXED = "Blended Heritage" class TimePeriodOptions(Enum): Jurassic = "Jurassic Interval (200-145 million in the past)" ANCIENT = "Historical Occasions (Earlier than 500 AD)" MEDIEVAL = "Medieval (500-1500 AD)" RENAISSANCE = "Renaissance (1400-1600)" COLONIAL = "Colonial Period (1600-1800)" VICTORIAN = "Victorian Period (1800-1900)" EARLY_20TH = "Early twentieth Century (1900-1950)" MID_20TH = "Mid twentieth Century (1950-1990)" MODERN = "Fashionable Period (1990-Current)" FUTURISTIC = "Futuristic (Close to Future)" class ProfessionOptions(Enum): WARRIOR = "Warrior/Soldier" SCHOLAR = "Scholar/Trainer" MERCHANT = "Service provider/Dealer" ARTISAN = "Artisan/Craftsperson" FARMER = "Farmer/Agricultural Employee" HEALER = "Healer/Medical Skilled" ENTERTAINER = "Entertainer/Performer" NOBLE = "Noble/Aristocrat" EXPLORER = "Explorer/Adventurer" SPIRITUAL = "Religious Chief/Clergy" class ActionOptions(Enum): SELFIE = "Taking a selfie from digital camera view" DANCING = "Dancing to music" WORK_ACTION = "Performing work/skilled motion" WALKING = "Easy strolling" COMBAT = "Fight/preventing motion" CRAFTING = "Crafting/creating one thing" SPEAKING = "Talking/giving a speech" CELEBRATION = "Celebrating/cheering"Right here is the code block to seize the choice:

def get_user_selections(self): """Get consumer picks for character customization""" print("n=== Character Customization ===") # Ethnicity choice print("nSelect Ethnicity:") for i, choice in enumerate(EthnicityOptions, 1): print(f"{i}. {choice.worth}") ethnicity_choice = int(enter("Enter selection (1-6): ")) - 1 ethnicity = checklist(EthnicityOptions)[ethnicity_choice] # Time Interval choice print("nSelect Time Interval:") for i, choice in enumerate(TimePeriodOptions, 1): print(f"{i}. {choice.worth}") period_choice = int(enter("Enter selection (1-9): ")) - 1 time_period = checklist(TimePeriodOptions)[period_choice] # Career choice print("nSelect Career:") for i, choice in enumerate(ProfessionOptions, 1): print(f"{i}. {choice.worth}") profession_choice = int(enter("Enter selection (1-10): ")) - 1 occupation = checklist(ProfessionOptions)[profession_choice] # Motion Choice print("n=== Video Motion Choice ===") for i, motion in enumerate(ActionOptions, 1): print(f"{i}. {motion.worth}") action_choice = int(enter("Choose motion (1-8): ")) - 1 action_choice = checklist(ActionOptions)[action_choice] return ethnicity, time_period, occupation,action_choiceDetect Gender from the Picture

The detect_gender_from_image perform makes use of Google Gemini 2.0 Flash to determine the gender from an uploaded picture. It handles errors gracefully, returning ‘particular person’ if detection fails. This helps personalize the generated video, making certain the mannequin precisely represents the consumer and avoids producing a male picture for a feminine or vice versa.

def detect_gender_from_image(self, image_path): """Detect gender from captured picture utilizing Google Gemini 2.0 Flash""" attempt: print("Analyzing picture to detect gender...") # Add picture to Gemini uploaded_file = genai.upload_file(image_path) # Anticipate the file to be processed # import time whereas uploaded_file.state.title == "PROCESSING": print("Processing picture...") time.sleep(2) uploaded_file = genai.get_file(uploaded_file.title) if uploaded_file.state.title == "FAILED": print("Didn't course of picture") return 'particular person' # Generate response response = self.gemini_model.generate_content([ uploaded_file, "Look at this image and determine if the person appears to be male or female. Respond with only one word: 'male' or 'female'." ]) # Clear up the uploaded file genai.delete_file(uploaded_file.title) gender = response.textual content.strip().decrease() if gender in ['male', 'female']: return gender else: return 'particular person' # fallback besides Exception as e: print(f"Error detecting gender with Gemini: {e}") return 'particular person' # fallback 3. Generate an Picture from the Inputs

Now that now we have the enter from the consumer for all of the parameters, we will proceed to creating an AI picture utilizing the identical. Listed below are the steps for that:

Generate a Immediate for Picture Technology

After accumulating the consumer’s picks, we use the enhance_image_prompt_with_openai perform to create an in depth and interesting immediate for the picture technology mannequin. It transforms the essential inputs like gender, ethnicity, occupation, time interval, and motion right into a inventive, skilled, and age-appropriate immediate, making certain the generated photographs are correct, visually interesting, and customized.

For this, we’re utilizing the “gpt-4.1-mini” mannequin with a temperature of 0.5 to introduce some randomness and creativity. If the OpenAI service encounters an error, the perform falls again to a easy default immediate, holding the video technology course of clean and uninterrupted.

def enhance_image_prompt_with_openai(self, ethnicity, time_period, occupation, gender,motion): """Use OpenAI to reinforce the picture immediate based mostly on consumer picks""" base_prompt = f""" Create a easy, clear immediate for AI picture technology: - Gender: {gender} - Ethnicity: {ethnicity.worth} - Career: {occupation.worth} - Time interval: {time_period.worth} - Performing Motion: {motion.worth} - Present applicable clothes and setting - Make the background a bit distinctive within the immediate - Preserve it applicable for all ages - Most 30 phrases """ attempt: response = self.openai_client.chat.completions.create( mannequin="gpt-4.1-mini", messages=[{"role": "user", "content": base_prompt}], max_tokens=80, temperature=0.5 ) enhanced_prompt = response.selections[0].message.content material.strip() return enhanced_prompt besides Exception as e: print(f"Error with OpenAI: {e}") # Fallback immediate return f"{gender} {ethnicity.worth} {occupation.worth} from {time_period.worth} performing {motion.worth}, skilled portrait"After producing a immediate, we have to clear and sanitize it for API compatibility. Right here is the perform for sanitizing the immediate.

def sanitize_prompt(self, immediate): """Sanitize and restrict immediate for API compatibility""" # Take away problematic characters and restrict size import re # Take away further whitespace and newlines immediate = re.sub(r's+', ' ', immediate.strip()) # Take away particular characters which may trigger points immediate = re.sub(r'[^ws,.-]', '', immediate) # Restrict to 100 phrases most phrases = immediate.cut up() if len(phrases) > 100: immediate=" ".be part of(phrases[100]) # Guarantee it is not empty if not immediate: immediate = "Skilled portrait {photograph}" return immediateGenerate Knowledge URI for the Picture

The image_to_data_uri perform converts a picture right into a Knowledge URI, permitting it to be despatched instantly in API requests or embedded in HTML. It encodes the file as Base64, detects its sort (JPEG, PNG, or GIF), and creates a compact string format for seamless integration.

def image_to_data_uri(self, filepath): """Convert picture file to knowledge URI for API""" with open(filepath, "rb") as image_file: encoded_string = base64.b64encode(image_file.learn()).decode('utf-8') mime_type = "picture/jpeg" if filepath.decrease().endswith(".png"): mime_type = "picture/png" elif filepath.decrease().endswith(".gif"): mime_type = "picture/gif" return f"knowledge:{mime_type};base64,{encoded_string}"Generate Picture utilizing RunwayML

As soon as now we have generated the Immediate and Knowledge URI of the Picture. Now its time for the AI to do its magic. We are going to use runwayML to generate Picture. You need to use completely different picture technology device out there out there.

The perform generate_image_with_runway is answerable for producing a picture utilizing RunwayML.

Import and initialize RunwayML

from runwayml import RunwayML runway_client = RunwayML()It hundreds the RunwayML library and creates a consumer to work together with the API.

Put together the immediate

print(f"Utilizing immediate: {immediate}") print(f"Immediate size: {len(immediate)} characters") # Sanitize immediate yet another time immediate = self.sanitize_prompt(immediate)The immediate supplied by the consumer is printed and cleaned (sanitized) to make sure it doesn’t break the mannequin.

Convert reference picture

data_uri = self.image_to_data_uri(image_path)The enter picture is transformed right into a Knowledge URI (a Base64 string) so it may be handed to RunwayML as a reference.

Generate picture with RunwayML

process = runway_client.text_to_image.create( mannequin="gen4_image", prompt_text=immediate, ratio='1360:768', reference_images=[{ "uri": data_uri}] ).wait_for_task_output()It sends the sanitized immediate + reference picture to RunwayML’s gen4_image mannequin to generate a brand new picture.

Obtain and save the generated picture

image_url = process.output[0] response = requests.get(image_url) unique_id = str(uuid.uuid4()) timestamp = datetime.now().strftime("%Ypercentmpercentd_percentHpercentMpercentS") filename = f"generated_{timestamp}_{unique_id}.png" filepath = os.path.be part of('intermediate_images', filename)As soon as RunwayML returns a URL, it downloads the picture. A novel filename (based mostly on time and UUID) is created, and the picture is saved within the intermediate_images folder.

Error dealing with & fallback

- If one thing goes flawed with the principle immediate, the perform retries with an easier immediate (simply ethnicity + occupation).

- If even that fails, it returns None.

Right here the entire Code Block for Picture Technology:

def generate_image_with_runway(self, image_path, immediate, ethnicity, time_period, occupation): """Generate picture utilizing RunwayML""" attempt: runway_client = RunwayML() print("Producing picture with RunwayML...") print(f"Utilizing immediate: {immediate}") print(f"Immediate size: {len(immediate)} characters") # Sanitize immediate yet another time immediate = self.sanitize_prompt(immediate) print(f"Sanitized immediate: {immediate}") data_uri = self.image_to_data_uri(image_path) process = runway_client.text_to_image.create( mannequin="gen4_image", prompt_text=immediate, ratio='1360:768', reference_images=[{ "uri": data_uri }] ).wait_for_task_output() # Obtain the generated picture image_url = process.output[0] response = requests.get(image_url) if response.status_code == 200: # Generate distinctive filename unique_id = str(uuid.uuid4()) timestamp = datetime.now().strftime("%Ypercentmpercentd_percentHpercentMpercentS") filename = f"generated_{timestamp}_{unique_id}.png" filepath = os.path.be part of('intermediate_images', filename) with open(filepath, 'wb') as f: f.write(response.content material) print(f"Generated picture saved as: {filepath}") return filepath else: print(f"Didn't obtain picture. Standing code: {response.status_code}") return None besides Exception as e: print(f"Error producing picture: {e}") print("Attempting with an easier immediate...") # Fallback with quite simple immediate simple_prompt = f"{ethnicity.worth} {occupation.worth} portrait" attempt: data_uri = self.image_to_data_uri(image_path) process = runway_client.text_to_image.create( mannequin="gen4_image", prompt_text=simple_prompt, ratio='1360:768', reference_images=[{ "uri": data_uri }] ).wait_for_task_output() # Obtain the generated picture image_url = process.output[0] response = requests.get(image_url) if response.status_code == 200: # Generate distinctive filename unique_id = str(uuid.uuid4()) timestamp = datetime.now().strftime("%Ypercentmpercentd_percentHpercentMpercentS") filename = f"generated_{timestamp}_{unique_id}.png" filepath = os.path.be part of('intermediate_images', filename) with open(filepath, 'wb') as f: f.write(response.content material) print(f"Generated picture saved as: {filepath}") return filepath else: print(f"Didn't obtain picture. Standing code: {response.status_code}") return None besides Exception as e2: print(f"Fallback additionally failed: {e2}") return NoneOutput:

4. Generate Video from the Picture

Now that now we have generated a picture based mostly precisely on the consumer enter, right here is the method to transform this picture to a video.

Generate a Immediate for Video Technology

The enhance_video_prompt_with_openai perform turns consumer selections into protected, inventive video prompts utilizing GPT-4.1-mini. It adapts tone based mostly on occupation i.e., critical for warriors, gentle and humorous for others, whereas holding content material family-friendly.

To take care of consistency, it additionally ensures the character’s face stays the identical throughout the video. Together with consumer picks, the picture technology immediate is handed too, so the video has full context of the character and background. If OpenAI fails, a fallback immediate retains issues working easily.

def enhance_video_prompt_with_openai(self, motion, image_prompt, ethnicity, time_period, occupation, gender): """Enhanced video immediate technology - simplified and protected""" if occupation == "WARRIOR": video_prompt_base = f""" Context from picture immediate : {image_prompt} Get Context from picture immediate and generate an in depth and protected video immediate: - Character: A {gender} {ethnicity.worth} {occupation.worth} - Motion: {motion.worth} - Time Interval: {time_period.worth} - Focus fully on the motion. - Preserve the language easy and applicable. - Scene needs to be life like. - Keep away from controversial subjects or violence. - Video needs to be applicable for all ages. """ else: video_prompt_base = f""" Context from picture immediate : {image_prompt} Get Context from picture immediate and generate a easy, protected and humorous video immediate: - Character: A {gender} {ethnicity.worth} {occupation.worth} - Motion: {motion.worth} - Time Interval: {time_period.worth} - Focus fully on the motion. - Preserve the language easy and applicable. - Make it little bit humorous. - Scene needs to be life like and humorous. - Keep away from controversial subjects or violence. - Video needs to be applicable for all ages """ attempt: response = self.openai_client.chat.completions.create( mannequin="gpt-4.1-mini", messages=[{"role": "user", "content": video_prompt_base}], max_tokens=60, temperature=0.5 ) enhanced_video_prompt = response.selections[0].message.content material.strip() enhanced_video_prompt = enhanced_video_prompt+" Preserve face of the @particular person constant in entire video." return enhanced_video_prompt besides Exception as e: print(f"Error enhancing video immediate: {e}") # Fallback immediate - quite simple return f"{gender} {ethnicity.worth} {occupation.worth} from {time_period.worth} performing {motion.worth}, skilled video"Generate Video with Seedance V1 Professional

For video technology, we’re utilizing the Seedance V1 Professional mannequin. To entry this mannequin, we’re utilizing fal.ai. Fal AI gives entry to Seedance Professional at a less expensive value. I’ve examined many video technology fashions like Veo3, Kling AI, and Hailuo. I discover Seedance finest for this objective because it has a lot better face consistency and is less expensive. The one downside is that it doesn’t present audio/music within the video.

def generate_video_with_fal(self, image_path, video_prompt): """Generate video utilizing fal_client API with error dealing with""" attempt: print("Producing video with fal_client...") print(f"Utilizing video immediate: {video_prompt}") # Add the generated picture to fal_client print("Importing picture to fal_client...") image_url = fal_client.upload_file(image_path) print(f"Picture uploaded efficiently: {image_url}") # Name the mannequin with the uploaded picture URL print("Beginning video technology...") outcome = fal_client.subscribe( "fal-ai/bytedance/seedance/v1/professional/image-to-video", arguments={ "immediate": video_prompt, "image_url": image_url, "decision": "720p", "length": 10 }, with_logs=True, on_queue_update=self.on_queue_update, ) print("Video technology accomplished!") print("Outcome:", outcome) # Extract video URL from outcome if outcome and 'video' in outcome and 'url' in outcome['video']: video_url = outcome['video']['url'] print(f"Video URL: {video_url}") # Obtain the video print("Downloading generated video...") response = requests.get(video_url) if response.status_code == 200: # Generate distinctive filename for video unique_id = str(uuid.uuid4()) timestamp = datetime.now().strftime("%Ypercentmpercentd_percentHpercentMpercentS") video_filename = f"generated_video_{timestamp}_{unique_id}.mp4" video_filepath = os.path.be part of('final_videos', video_filename) # Save the video with open(video_filepath, "wb") as file: file.write(response.content material) print(f"Video generated efficiently: {video_filepath}") return video_filepath else: print(f"Didn't obtain video. Standing code: {response.status_code}") return None else: print("No video URL present in outcome") print("Full outcome construction:", outcome) return None besides Exception as e: print(f"Error producing video with fal_client: {e}") if "delicate" in str(e).decrease(): print("Content material flagged as delicate. Attempting with an easier immediate...") # Strive with very fundamental immediate basic_prompt = "particular person shifting" attempt: image_url = fal_client.upload_file(image_path) outcome = fal_client.subscribe( "fal-ai/bytedance/seedance/v1/professional/image-to-video", arguments={ "immediate": basic_prompt, "image_url": image_url, "decision": "720p", "length": 10 }, with_logs=True, on_queue_update=self.on_queue_update, ) if outcome and 'video' in outcome and 'url' in outcome['video']: video_url = outcome['video']['url'] response = requests.get(video_url) if response.status_code == 200: unique_id = str(uuid.uuid4()) timestamp = datetime.now().strftime("%Ypercentmpercentd_percentHpercentMpercentS") video_filename = f"generated_video_{timestamp}_{unique_id}.mp4" video_filepath = os.path.be part of('final_videos', video_filename) with open(video_filepath, "wb") as file: file.write(response.content material) print(f"Video generated with fundamental immediate: {video_filepath}") return video_filepath besides Exception as e2: print(f"Even fundamental immediate failed: {e2}") return None return NoneOutput:

Add your emblem within the video (optionally available):

In case you’re making a video to your group, you may simply add a watermark to it. This helps defend your content material by stopping others from utilizing the video for industrial functions.

The add_logo_to_video perform provides a emblem watermark to a video. It checks if the brand exists, resizes it, and locations it within the bottom-right nook of each body. The processed frames are saved as a brand new video with a novel title. If one thing goes flawed, it skips the overlay and retains the unique video.

def add_logo_to_video(self, video_path, logo_width=200): """Add emblem overlay to video earlier than emailing""" attempt: print("Including emblem overlay to video...") # Verify if emblem file exists if not os.path.exists(self.logo_path): print(f"Emblem file not discovered at {self.logo_path}. Skipping emblem overlay.") return video_path # Load emblem with transparency utilizing Pillow emblem = Picture.open(self.logo_path).convert("RGBA") # Open the enter video cap = cv2.VideoCapture(video_path) # Get video properties width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH)) peak = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT)) fps = cap.get(cv2.CAP_PROP_FPS) fourcc = cv2.VideoWriter_fourcc(*'mp4v') # Create output filename unique_id = str(uuid.uuid4()) timestamp = datetime.now().strftime("%Ypercentmpercentd_percentHpercentMpercentS") output_filename = f"video_with_logo_{timestamp}_{unique_id}.mp4" output_path = os.path.be part of('final_videos', output_filename) out = cv2.VideoWriter(output_path, fourcc, fps, (width, peak)) # Resize emblem to specified width logo_ratio = logo_width / emblem.width logo_height = int(emblem.peak * logo_ratio) emblem = emblem.resize((logo_width, logo_height)) frame_count = 0 total_frames = int(cap.get(cv2.CAP_PROP_FRAME_COUNT)) whereas True: ret, body = cap.learn() if not ret: break # Present progress frame_count += 1 if frame_count % 10 == 0: progress = (frame_count / total_frames) * 100 print(f"Processing body {frame_count}/{total_frames} ({progress:.1f}%)") # Convert body to PIL Picture frame_pil = Picture.fromarray(cv2.cvtColor(body, cv2.COLOR_BGR2RGB)).convert("RGBA") # Calculate place (backside proper nook with padding) pos = (frame_pil.width - emblem.width - 10, frame_pil.peak - emblem.peak - 10) # Paste the brand onto the body frame_pil.alpha_composite(emblem, dest=pos) # Convert again to OpenCV BGR format frame_bgr = cv2.cvtColor(np.array(frame_pil.convert("RGB")), cv2.COLOR_RGB2BGR) out.write(frame_bgr) cap.launch() out.launch() print(f"Emblem overlay accomplished: {output_path}") return output_path besides Exception as e: print(f"Error including emblem to video: {e}") print("Persevering with with unique video...") return video_pathShip this Video through E-mail

As soon as the video is generated, customers will wish to view and obtain it. This perform makes it attainable by sending the video on to the e-mail deal with they supplied.

def send_email_with_attachments(self, recipient_email, image_path, video_path): """Ship e-mail with generated content material utilizing yagmail""" attempt: # Get e-mail credentials from atmosphere variables sender_email = os.getenv('SENDER_EMAIL') sender_password = os.getenv('SENDER_PASSWORD') if not sender_email or not sender_password: print("E-mail credentials not present in atmosphere variables") return False yag = yagmail.SMTP(sender_email, sender_password) topic = "Your AI Generated Character Picture and Video" physique = f""" Whats up! Your AI-generated character content material is prepared! Connected you will discover: - Your generated character picture - Your generated character video (with emblem overlay) Thanks for utilizing our AI Picture-to-Video Generator! Greatest regards, AI Generator Crew """ attachments = [] if image_path: attachments.append(image_path) if video_path: attachments.append(video_path) yag.ship( to=recipient_email, topic=topic, contents=physique, attachments=attachments ) print(f"E-mail despatched efficiently to {recipient_email}") return True besides Exception as e: print(f"Error sending e-mail: {e}") return FalseAt this stage, you’ve constructed the core engine of your internet app, producing a picture, making a video, including a emblem, and sending it to the consumer through e-mail. The subsequent step is to attach all of it collectively by growing the frontend and backend for the net app.

Challenges

Constructing a customized video technology internet app comes with a number of technical and operational challenges:

1. Dealing with AI Failures and API Errors

- AI fashions for picture and video technology can fail unexpectedly.

- APIs could return errors or produce undesired outputs.

- Fallback methods had been important to make sure clean operation, reminiscent of utilizing simplified prompts or different technology strategies.

2. Managing Delicate Content material

- AI-generated content material can inadvertently produce inappropriate outcomes.

- Implementing checks and protected prompts ensured that each one outputs remained family-friendly.

3. Consumer Expectations for Personalization

- Customers count on extremely correct and customized outcomes.

- Making certain gender, ethnicity, occupation, and different particulars had been appropriately mirrored required cautious immediate design and validation.

4. Finalizing the Video Technology Mannequin

- Discovering a mannequin that maintained face consistency at an inexpensive price was difficult.

- After testing a number of choices, Seedance V1 Professional through Fal.ai supplied the very best stability of high quality, consistency, and value.

5. Faux or Unreliable Aggregators

- Selecting a dependable mannequin supplier was tough. Fal.ai labored nicely, however earlier I experimented with others:

- Replicate.com: Quick however restricted customization choices, and later confronted fee points.

- Pollo.ai: Good interface, however their API service was unreliable; it generated no movies.

- The important thing takeaway: keep away from pretend or unreliable suppliers; all the time take a look at completely earlier than committing.

6. Time Administration and Efficiency

- Video technology is time-consuming, particularly for real-time demos.

- Optimizations like LRU caching and a number of API situations helped cut back latency and enhance efficiency throughout occasions.

Past the Time Capsule: What Else Might You Construct?

The Time Capsule is only one instance of a customized video technology app. The core engine might be tailored to create quite a lot of modern purposes:

- Customized Greetings: Generate birthday or pageant movies that includes family and friends in historic or fantasy settings.

- Advertising & Branding: Produce promotional movies for companies, including logos and customised characters to showcase services or products.

- Academic Content material: Carry historic figures, scientific ideas, or literature scenes to life in a visually partaking means.

- Interactive Storytelling: Permit customers to create mini-movies the place characters evolve based mostly on consumer enter, selections, or actions.

- Gaming Avatars & Animations: Generate customized in-game characters, motion sequences, or brief cutscenes for sport storytelling.

The probabilities are countless, any situation the place you need customized, visible, and interactive content material, this engine may also help carry concepts to life.

Conclusion

The Time Capsule internet app reveals simply how far AI has come, from producing textual content and pictures to creating customized movies that really really feel like your personal. You begin with a easy photograph, choose a time interval, occupation, and motion, and in moments, the AI brings your historic or fantasy self to life. Alongside the best way, we deal with challenges like AI errors, delicate content material, and time-consuming video technology with good fallbacks and optimizations. What makes this thrilling isn’t simply the know-how, it’s the countless potentialities.

From enjoyable customized greetings to instructional storytelling, advertising movies, or interactive mini-movies, this engine might be tailored to carry numerous inventive concepts to life. With slightly creativeness, your Time Capsule might be the beginning of one thing actually magical.

Login to proceed studying and luxuriate in expert-curated content material.