(metamorworks/Shutterstock)

The emergence of agentic AI is putting new stress on the infrastructure layer. If Nvidia CEO Jensen Huang is right in his assumptions, demand for accelerated compute will increase by 100x as enterprises deploy AI agents based on reasoning models. Where will customers get the GPUs and servers they need to run these inference workloads? The cloud is one obvious place, but some warn it could be too expensive.

When ChatGPT landed on the scene in late 2022 and early 2023, there was a Gold Rush mentality, and companies opened up the purse strings to explore different approaches. Much of that exploration was done in the cloud, where the costs for sporadic workloads can be lower. But now, as companies zero in on what type of AI they want to run on a long-term basis (which in many cases will be agentic AI), the cloud doesn’t look like as good an option.

One of the companies helping enterprises move AI from proof of concept to deployed reality is H2O.ai, a San Francisco-based provider of predictive and generative AI solutions. According to H2O’s founder and CEO, Sri Ambati, its partnership with Dell to deploy on-prem AI factories at customer sites is gaining steam.

“People threw a lot of things at the exploratory phase, and there were unlimited budgets a couple of years ago to do that,” Ambati told BigDATAwire in an interview at GTC 2025 in San Jose this week. “People just starting out have that mindset of unlimited budget. But when they go from demos to production, from pilots to production…it’s a long journey.”

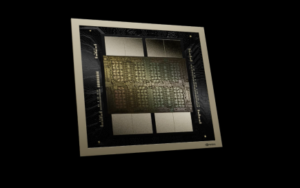

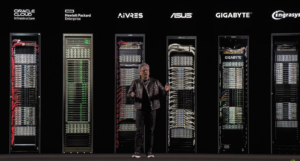

These 16 OEMs are currently shipping systems with Nvidia’s latest Blackwell GPUs, as Huang showed during his keynote at GTC 2025 this week.

In many cases, that journey involves analyzing the cost of turning words into tokens that are processed by large language models (LLMs), and then turning the output back into words that are presented to the user. Reasoning models change the token math, largely because the chain-of-thought reasoning they perform involves many more steps, which requires many more tokens. Ambati said he doesn’t believe it’s a 100x difference, because reasoning models will be more efficient than Huang claimed. But efficiency demands better bang for the buck, and for many that means moving on-prem, he said.
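To make the token math concrete, here is a minimal Python sketch under hypothetical assumptions. The per-million-token prices and token counts are illustrative values, not figures from Ambati, Nvidia, or any provider. The sketch also assumes that hidden reasoning tokens are billed at the output rate. It shows how a chain-of-thought step inflates the cost of a single request even when the visible answer stays the same length.

```python
# Hypothetical prices in dollars per million tokens (illustrative only).
PRICE_IN_PER_M = 1.0
PRICE_OUT_PER_M = 4.0


def request_cost(prompt_tokens, answer_tokens, reasoning_tokens=0):
    """Dollar cost of one LLM call.

    Assumption: reasoning (chain-of-thought) tokens are billed like output tokens.
    """
    billed_output = answer_tokens + reasoning_tokens
    return (prompt_tokens * PRICE_IN_PER_M + billed_output * PRICE_OUT_PER_M) / 1_000_000


def agent_task_cost(steps, prompt_tokens, answer_tokens, reasoning_tokens=0):
    """An agent that calls the model `steps` times pays for every call."""
    return steps * request_cost(prompt_tokens, answer_tokens, reasoning_tokens)


if __name__ == "__main__":
    direct = request_cost(500, 300)                               # plain answer
    reasoning = request_cost(500, 300, reasoning_tokens=5_000)    # same answer plus chain of thought
    print(f"direct:    ${direct:.4f}")
    print(f"reasoning: ${reasoning:.4f}")
    print(f"ratio:     {reasoning / direct:.1f}x")
```

With these made-up numbers, the reasoning request costs roughly 13 times as much as the direct one, well short of 100x. The real multiplier depends on how many reasoning tokens a model emits and how many calls an agent makes per task, which `agent_task_cost` multiplies in.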

“On-prem GPUs are about a third of the cost of cloud GPUs,” Ambati said. “I think the efficient AI frontier has arrived.”

On-line and On-Prem

Another company seeing a resurgence of on-prem processing in the agentic AI era is Cloudera. According to Priyank Patel, the company’s corporate VP of enterprise AI, some Cloudera customers are already starting down the road to adopting agentic AI and reasoning models, including Mastercard and OCBC Bank in Singapore.

“We definitely see a lot of our customers experimenting with agents and reasoning models,” he said. “The market is moving there, not just because infrastructure providers want to go there, but also because the value is being seen by the end users as well.”

However, if inference is going to drive a 100x increase in workload, as Nvidia’s Huang said during his keynote address at GTC 2025 this week, then it doesn’t make a lot of sense to run those workloads in the public cloud, Patel told BigDATAwire at the conference.

“It feels like for the last 10 years, the world has been going to the cloud,” he said. “Now they’re taking a second hard look.”

Cloudera designed its data platform as a hybrid cloud offering, so customers can easily move workloads where they need to. Customers don’t have to retool their applications to run them somewhere else, Patel said. The fact that Cloudera customers are looking to run agentic AI applications on-prem indicates that the financials of doing it in the cloud don’t make much sense, he said.

“The cost of ownership of doing training, tuning, even large-scale inferencing like the ones that Nvidia talks about for agentic AI in the future, is a much better TCO argument driven on prem, or driven with owned infrastructure within data centers, versus just on rented-by-the-hour instances in the cloud,” Patel said. “On the data center side, you’re paying for it once, and then you have it. Theoretically, you’re not fundamentally adding to cost if you’re using more of it.”
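Patel’s point that owned hardware is paid for once, while rented instances bill by the hour, can be sketched as a simple break-even calculation. The Python below is a back-of-the-envelope model under hypothetical assumptions. The hourly rental rate, purchase price, 36-month amortization period, and monthly power-and-operations figure are made-up inputs. Real TCO analyses also account for staff, networking, facilities, and negotiated discounts.

```python
HOURS_PER_MONTH = 730  # average hours in a month (8,760 / 12)


def cloud_monthly_cost(gpus, hourly_rate, utilization):
    """Rented GPUs: pay only for the hours actually used (utilization is 0.0-1.0)."""
    return gpus * hourly_rate * HOURS_PER_MONTH * utilization


def owned_monthly_cost(gpus, price_per_gpu, amortization_months, opex_per_gpu_month):
    """Owned GPUs: purchase price spread over its useful life, plus running costs.

    This cost is the same whether the GPUs sit idle or run flat out.
    """
    return gpus * (price_per_gpu / amortization_months + opex_per_gpu_month)


def breakeven_utilization(hourly_rate, price_per_gpu, amortization_months, opex_per_gpu_month):
    """Fraction of the month a GPU must be busy before owning beats renting."""
    owned_per_gpu = owned_monthly_cost(1, price_per_gpu, amortization_months, opex_per_gpu_month)
    return owned_per_gpu / (hourly_rate * HOURS_PER_MONTH)


if __name__ == "__main__":
    # Hypothetical inputs: $4 per GPU-hour rented vs. $36,000 per GPU bought,
    # amortized over 36 months, plus $200 per GPU-month for power and operations.
    rate, price, months, opex = 4.0, 36_000, 36, 200
    owned = owned_monthly_cost(8, price, months, opex)
    for u in (0.25, 0.5, 1.0):
        cloud = cloud_monthly_cost(8, rate, u)
        print(f"utilization {u:.0%}: cloud ${cloud:,.0f} vs owned ${owned:,.0f}")
    print(f"break-even utilization: {breakeven_utilization(rate, price, months, opex):.0%}")
```

With these assumed inputs, owning comes out ahead once the GPUs are busy more than about 41% of the month. An always-on inference fleet would cost roughly 40% as much to own as to rent. The ratio swings widely with the inputs, but the shape of the argument holds: the owned cost is flat, while the rented cost grows with every hour of use.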

Get Thee to the FinOps-ery

The three big public clouds, AWS, Microsoft Azure, and Google Cloud, have seen tremendous growth over the years in the amount of data they store and process for customers. The growth has been so great that people have grown a bit complacent about aligning the cost of the services with the value they get out of them.

According to Akash Tayal, the cloud engineering offering lead at Deloitte, the amount of money enterprises waste in the cloud typically ranges from 20% to 40%.

“There’s a lot of waste in the cloud,” Tayal told BigDATAwire. “It’s not that people haven’t thought about it. It’s that as you start getting into the cloud, you get new ideas, the technology evolves on you, there are new services available.”

Customers who simply lift-and-shift their existing applications into the cloud and don’t change how they consume resources are the most likely to waste money, Tayal said. That is also the easiest 10% of the total waste to recoup, he said. Eliminating the rest of the waste requires more careful monitoring and reengineering of applications, which is harder to do, he said. It’s also the focus of his FinOps practice at Deloitte, which has been growing strongly over the past few years.

Tayal defended the public clouds’ record when it comes to innovation. Those who use the cloud to try out new technology and develop new applications are more likely to be getting better value out of it, he said. Training or fine-tuning a model doesn’t require always-on resources running 24x7x365, so spinning up rented GPUs in the cloud can make sense.

Agentic AI is still a nascent technology, so there’s a lot of innovation happening there that could be done in the cloud. But as that innovation turns into production use cases that need to be always on, enterprises will need to take a hard look at what they’re running and how they’re running it. That’s where FinOps comes into play, Tayal said.

“If I actually had a workload that was persistent, taking an example of an ERP or something like that, and I started using those on-demand services for it, running the meter 24 by seven for the whole month is not advisable,” Tayal said.
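Tayal’s warning about running the on-demand meter around the clock comes down to simple arithmetic. The Python sketch below compares an on-demand hourly rate against a hypothetical commitment that bills every hour of the month at a discount, similar in spirit to the reserved or committed-use pricing the big clouds offer. The $2 rate and 40% discount are illustrative assumptions, not prices from any provider or from Deloitte.

```python
HOURS_PER_MONTH = 730  # average hours in a month


def on_demand_cost(hourly_rate, hours_used):
    """Pay-as-you-go: billed only for the hours the workload actually runs."""
    return hourly_rate * hours_used


def committed_cost(hourly_rate, discount):
    """Hypothetical commitment: discounted rate, but every hour of the month is billed."""
    return hourly_rate * (1 - discount) * HOURS_PER_MONTH


def cheaper_option(hourly_rate, hours_used, discount):
    """Return the cheaper pricing model and its monthly cost."""
    od = on_demand_cost(hourly_rate, hours_used)
    cm = committed_cost(hourly_rate, discount)
    return ("on-demand", od) if od <= cm else ("committed", cm)


if __name__ == "__main__":
    rate, discount = 2.0, 0.40  # illustrative values only
    for label, hours in (("persistent, ERP-style", HOURS_PER_MONTH), ("bursty experiment", 100)):
        option, cost = cheaper_option(rate, hours, discount)
        print(f"{label}: {option} at ${cost:,.2f}/month")
```

Under these assumptions, the commitment pays off once a workload runs more than 60% of the month (438 of 730 hours). Past that point, keeping an always-on workload on the on-demand meter wastes money, while short-lived experiments still belong on pay-as-you-go.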

We’re still a long way from agentic AI becoming as critical a workload as an ERP system (or as predictable a workload, for that matter). Still, the costs of running agentic AI are potentially much larger than those of a well-established and efficient ERP system, which should force customers to analyze their costs and apply emerging FinOps principles much sooner.

Alternative Clouds

The truth is the public clouds have developed a reputation for overcharging customers. Sometimes those accusations are fair, but other times they are not. Regardless of whether the public clouds are intentionally gouging customers, dozens of alternative cloud companies have popped up that are more than happy to provide data storage and compute resources at a fraction of the cost of the big guys.

One of those alternative clouds is Vultr. The company was at GTC 2025 this week to let folks know that it has Nvidia’s latest and greatest GPUs, the HGX B200, ready to start doing AI.

“Basically it’s a play against the cloud giants. So it’s an alternative option,” said Kevin Cochrane, the company’s chief marketing officer. “We’re going to save you between 50% and 90% on the cost of cloud compute, and it’s going to free up enough capital to basically invest in your GPUs and your new AI initiative.”

Vultr isn’t trying to completely displace AWS at enterprises, for instance. But running exclusively on one cloud for all your workloads may not make financial sense, Cochrane said.

“You’re just trying to deploy a customer service agent. My God, are you really going to spend $10 million on AWS when you can do it on us for a fraction of the cost?” he said. “If we can get you up and running in half the time and at half the cost, wouldn’t that be helpful for you?”

Vultr was launched in 2014 by a group of engineers who wanted to offer solid infrastructure at a fair price. The company bought a bunch of servers, storage arrays, and networking gear, and installed it in colocation facilities around the country. The company gradually expanded, and today it is in hundreds of data centers around the world. It hired its first marketing person (Cochrane) three years ago, and recently passed the $100 million revenue mark. The company is “immensely profitable,” Cochrane said, and is entirely self-bootstrapped, meaning it hasn’t taken any venture or equity funding.

If Amazon is a luxury yacht with an abundance of amenities, then Vultr is a speedboat with a cooler full of drinks and sandwiches bouncing around in the back. It will get you where you need to go quickly, but without the costly comfort and padding.

“You’re funding a really capital-intensive business. Every penny matters, right?” Cochrane said. “The reality is Amazon has all these wonderful services, and all these wonderful services have very large product engineering teams and very large marketing budgets. They don’t make money. So how do you fund all this other stuff that doesn’t actually make you money? The cost of EC2, S3, and bandwidth is wildly inflated to cover the cost of everything else.”

While Vultr offers a range of compute services, it’s embracing its reputation as a cloud GPU specialist. As the agentic AI era dawns and drives up demand for compute, Vultr wants customers to rethink how they architect their systems.

“When you actually build something and then deploy it, you have to scale it across hundreds or thousands of different nodes, so the runtime demand always dwarfs the build demand 100% of the time by an order of magnitude or two,” Cochrane said. “We believe that we’re at the dawn of a new 10-year cycle of compute architecture that’s the union of CPUs and GPUs. It’s taking cloud-native engineering principles and now applying them to AI.”
