Implementing Manufacturing-Grade Analytics on a Databricks Knowledge Warehouse

Excessive-concurrency, low-latency knowledge warehousing is crucial for organizations the place knowledge drives important enterprise selections. This implies supporting lots of of concurrent customers, delivering fast question efficiency for interactive analytics and enabling actual‑time insights for quick, knowledgeable resolution‑making. A manufacturing‑grade knowledge warehouse is greater than a help system—it’s a catalyst for progress and innovation.

Databricks pioneered the lakehouse structure to unify knowledge, analytics and AI workloads—eliminating pricey knowledge duplication and sophisticated system integrations. With built-in autonomous efficiency optimizations, the lakehouse delivers aggressive value/efficiency whereas simplifying operations. As an open lakehouse, it additionally ensures quick, safe entry to important knowledge by means of Databricks SQL, powering BI, analytics and AI instruments with unified safety and governance that reach throughout the complete ecosystem. Open interoperability is crucial since most customers work together with the warehouse by means of these exterior instruments. The platform scales effortlessly—not solely with knowledge and customers, but additionally with the rising range of instruments your groups depend on—and gives highly effective built-in capabilities like Databricks AI/BI, Mosaic AI and extra, whereas sustaining flexibility and interoperability along with your current ecosystem.

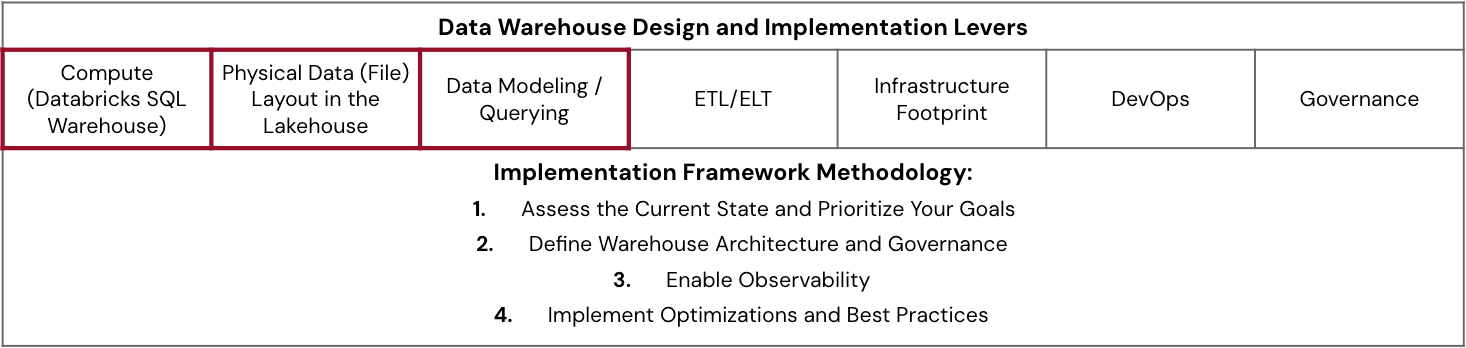

This weblog supplies a complete information for organizations at any stage of their lakehouse structure journey—from preliminary design to mid-implementation to ongoing optimization—on maximizing high-concurrency, low-latency efficiency with the Databricks Knowledge Intelligence Platform. We’ll discover:

- Core architectural parts of an information warehouse and their collective impression on platform efficiency.

- A structured performance-tuning framework to information the optimization of those architectural components.

- Finest practices, monitoring methods and tuning methodologies to make sure sustained efficiency at scale.

- An actual-world case research demonstrating how these rules work collectively in apply.

Key Architectural Issues

Whereas many foundational rules of conventional knowledge warehouses nonetheless apply—corresponding to sound knowledge modeling, sturdy knowledge administration and embedded knowledge high quality—designing a contemporary lakehouse for manufacturing‑grade analytics requires a extra holistic method. Central to this can be a unified governance framework, and Unity Catalog (AWS | Azure | GCP) performs a important position in delivering it. By standardizing entry controls, lineage monitoring and auditability throughout all knowledge and AI property, Unity Catalog ensures constant governance at scale—one thing that is more and more important as organizations develop in knowledge quantity, consumer concurrency and platform complexity.

Efficient design requires:

- Adoption of confirmed architectural finest practices

- An understanding of tradeoffs between interconnected parts

- Clear aims for concurrency, latency and scale based mostly on enterprise necessities

In a lakehouse, efficiency outcomes are influenced by architectural decisions made early within the design section. These deliberate design selections spotlight how fashionable lakehouses characterize a basic departure from legacy knowledge warehouses throughout 5 important axes:

With these architectural concerns in thoughts, let’s discover a sensible framework for implementing a production-grade knowledge warehouse that may ship on the promise of high-concurrency and low-latency at scale.

Technical Resolution Breakdown

The next framework distills finest practices and architectural rules developed by means of real-world engagements with enterprise prospects. Whether or not you are constructing a brand new knowledge warehouse, migrating from a legacy platform or tuning an current lakehouse, these pointers will provide help to speed up time to manufacturing whereas delivering scalable, performant and cost-efficient outcomes.

Begin With a Use Case-Pushed Evaluation

Earlier than implementing, we advocate a fast evaluation of a important workload—usually your slowest dashboard or most resource-intensive pipeline. This method helps you determine efficiency gaps and prioritize areas for optimization.

Ask the next questions to border your evaluation:

- What efficiency metrics matter most (e.g., question latency, throughput, concurrency) and the way do they examine to enterprise expectations?

- Who makes use of this workload, when and the way ceaselessly?

- Are compute prices proportional to the workload’s enterprise worth?

This evaluation creates a basis for focused enhancements and helps align your optimization efforts with enterprise impression.

Implementation Framework

The framework beneath outlines a step-by-step method to implementing or modernizing your warehouse on Databricks:

- Assess the Present State and Prioritize Your Targets

- Consider and examine the prevailing structure towards efficiency, price and scalability targets.

- Outline enterprise (and know-how) necessities for concurrency, latency, scale, price, SLAs and different elements so the aim posts do not preserve shifting.

- Establish gaps that impression the enterprise most and prioritize remediation based mostly on worth and complexity (whether or not designing new, mid-migration or in manufacturing).

- Outline Warehouse Structure and Governance

- Design logical segmentation: Decide which groups or use instances will share or require devoted SQL Warehouses.

- Proper-size your warehouse situations, apply tagging and outline defaults (e.g., cache settings, timeouts, and many others.).

- Perceive and plan for fine-grained configurations like default caching, warehouse timeouts, JDBC timeouts from BI instruments and SQL configuration parameters (AWS | Azure | GPC).

- Set up a governance mannequin for warehouses overlaying administrator (AWS | Azure | GCP) and finish consumer (AWS | Azure | GCP) roles and obligations.

- Put money into coaching and supply implementation templates to make sure consistency throughout groups.

- Allow Observability

- Allow observability and monitoring for SQL warehouse utilization to detect anomalies, uncover inefficient workloads and optimize useful resource utilization.

- Activate out-of-the-box performance (AWS | Azure | GCP) alongside customized telemetry and automate alerts/remediations the place doable.

- Study to leverage system tables, warehouse monitoring and question profiles to determine points like spill, shuffle or queuing.

- Combine price knowledge and lineage metadata (e.g., BI device context through question historical past tables) to correlate efficiency and spend.

- Implement Optimizations and Finest Practices

- Leverage insights from observability to align workload efficiency with enterprise and know-how necessities.

- Implement AI options for price, format and compute effectivity.

- Codify learnings into reusable templates, documentation and checklists to scale finest practices throughout groups.

- Optimize incrementally utilizing an effort (complexity, timeline, experience) vs. impression (efficiency, price, upkeep overhead) matrix to prioritize.

Within the sections beneath, let’s stroll by means of every stage of this framework to know how considerate design and execution allow excessive concurrency, low latency and business-aligned price efficiency on Databricks.

Assess the Present State and Prioritize Your Targets

Earlier than diving into finest practices and tuning methods, it is important to know the foundational levers that form lakehouse efficiency—corresponding to compute sizing, knowledge format and knowledge modeling. These are the areas groups can instantly affect to satisfy high-concurrency, low-latency, scale objectives.

The scorecard beneath supplies a easy matrix to evaluate maturity throughout every lever and determine the place to focus your efforts. To make use of it, consider every lever throughout three dimensions: how effectively it meets enterprise wants, how carefully it aligns with finest practices, the extent of technical functionality your crew has in that space and governance. Apply a Pink-Amber-Inexperienced (RAG) score to every intersection to shortly visualize strengths (inexperienced), areas for enchancment (amber) and important gaps (purple). The perfect practices and analysis methods later on this weblog will inform the score–use this directionality together with a extra granular maturity evaluation. This train can information discussions throughout groups, floor hidden bottlenecks and assist prioritize the place to take a position—whether or not in coaching, structure adjustments or automation.

With the parts that drive lakehouse efficiency and a framework to implement them outlined, what’s subsequent? The mix of finest practices (what to do), tuning methods (how to do it) and evaluation strategies (when to do it) supplies the actions to take to realize your efficiency aims.

The main target shall be on particular finest practices and granular configuration methods for just a few important parts that work harmoniously to function a high-performing knowledge warehouse.

Outline Warehouse Structure and Governance

Compute (Databricks SQL Warehouse)

Whereas compute is commonly seen as the first efficiency lever, compute sizing selections ought to at all times be thought-about alongside knowledge format design and modeling/querying, as these instantly impression the compute wanted to realize the required efficiency.

Proper-sizing SQL warehouses is important for cost-effective scaling. There isn’t any crystal ball for exact sizing upfront, however these are a collection of key heuristics to observe for organizing and sizing SQL warehouse compute.

- Allow SQL Serverless Warehouses: They provide instantaneous compute, elastic autoscaling and are totally managed, simplifying operations for all sorts of makes use of, together with bursty and inconsistent BI/analytics workloads. Databricks totally manages the infrastructure, with that infrastructure price baked in, providing the potential for TCO reductions.

- Perceive Workloads and Customers: Phase customers (human/automated) and their question patterns (interactive BI, advert hoc, scheduled reviews) to make use of completely different warehouses scoped by utility context, a logical grouping by goal, crew, operate, and many others. Implement a multi-warehouse structure, by these segments, to have extra fine-grained sizing management and the power to observe independently. Guarantee tags for price attribution are enforced. Attain out to your Databricks account contact to entry upcoming options meant to forestall noisy neighbors.

- Iterative Sizing and Scaling: Do not overthink the preliminary warehouse measurement or min/max cluster settings. Changes based mostly on monitoring actual workload efficiency, utilizing mechanisms within the subsequent part, are far simpler than upfront guesses. Knowledge volumes and the variety of customers don’t precisely estimate the compute wanted. The varieties of queries, patterns and concurrency of question load are higher metrics, and there is an automatic profit from Clever Workload Administration (IWM) (AWS | Azure | GCP).

- Perceive When to Resize vs. Scale: Improve warehouse measurement (“T-shirt measurement”) when needing to accommodate resource-heavy, advanced queries like massive aggregations and multi-table joins, which require excessive reminiscence—monitor frequency of disk spills and reminiscence utilization. Improve the variety of clusters for autoscaling when coping with bursty concurrent utilization and if you see persistent queuing on account of many queries ready to execute, not just a few intensive queries pending.

- Steadiness Availability and Value: Configure auto-stop settings. Serverless’s fast chilly begin makes auto-stopping a big cost-saver for idle intervals.

Bodily Knowledge (File) Structure within the Lakehouse

Quick queries start with knowledge skipping, the place the question engine reads solely related recordsdata utilizing metadata and statistics for environment friendly file pruning. The bodily group of your knowledge instantly impacts this pruning, making file format optimization important for high-concurrency, low-latency efficiency.

The evolution of knowledge format methods on Databricks gives numerous approaches for optimum file group:

For brand spanking new tables, Databricks recommends defaulting to managed tables with Auto Liquid Clustering (AWS | Azure | GCP) and Predictive Optimization (AWS | Azure | GCP). Auto Liquid Clustering intelligently organizes knowledge based mostly on question patterns, and you’ll specify preliminary clustering columns as hints to allow it in a single command. Predictive Optimization robotically handles upkeep jobs like OPTIMIZE, VACUUM and ANALYZE.

For current deployments utilizing exterior tables, contemplate migrating to managed tables to completely leverage these AI-powered options, prioritizing high-read and latency-sensitive tables first. Databricks supplies an automatic resolution (AWS | Azure | GCP) with the ALTER TABLE...SET MANAGED command to simplify the migration course of. Moreover, Databricks helps managed Iceberg tables as a part of its open desk format technique.

Knowledge Modeling / Querying

Modeling is the place enterprise necessities meet knowledge construction. All the time begin by understanding your finish consumption patterns, then mannequin to these enterprise wants utilizing your group’s most well-liked methodology—Kimball, Inmon, Knowledge Vault or denormalized approaches. The lakehouse structure on Databricks helps all of them.

Unity Catalog options prolong past observability and discovery with lineage, major keys (PKs), constraints and schema evolution capabilities. They supply essential hints to the Databricks question optimizer, enabling extra environment friendly question plans and bettering question efficiency. As an example, declaring PKs and international keys with RELY permits the optimizer to get rid of redundant joins, instantly impacting pace. Unity Catalog’s sturdy help for schema evolution additionally ensures agility as your knowledge fashions adapt over time. Unity Catalog supplies a normal governance mannequin based mostly on ANSI SQL.

Further related sources embody Knowledge Warehousing Modeling Strategies and a three-part sequence on Dimensional Knowledge Warehousing (Half 1, Half 2 and Half 3).

Allow Observability

Activating monitoring and motion tuning selections completely highlights the interconnectedness of knowledge warehouse parts amongst compute, bodily file format, question effectivity and extra.

- Begin by establishing observability by means of dashboards and functions.

- Outline discovered patterns for figuring out and diagnosing efficiency bottlenecks after which correcting them.

- Iteratively construct in automation by means of alerting and agentic corrective actions.

- Compile frequent developments inflicting bottlenecks and incorporate them into growth finest practices, code overview checks and templates.

Steady monitoring is crucial for sustained excessive, constant efficiency and price effectivity in manufacturing. Understanding normal patterns permits one to refine one’s tuning selections as utilization evolves.

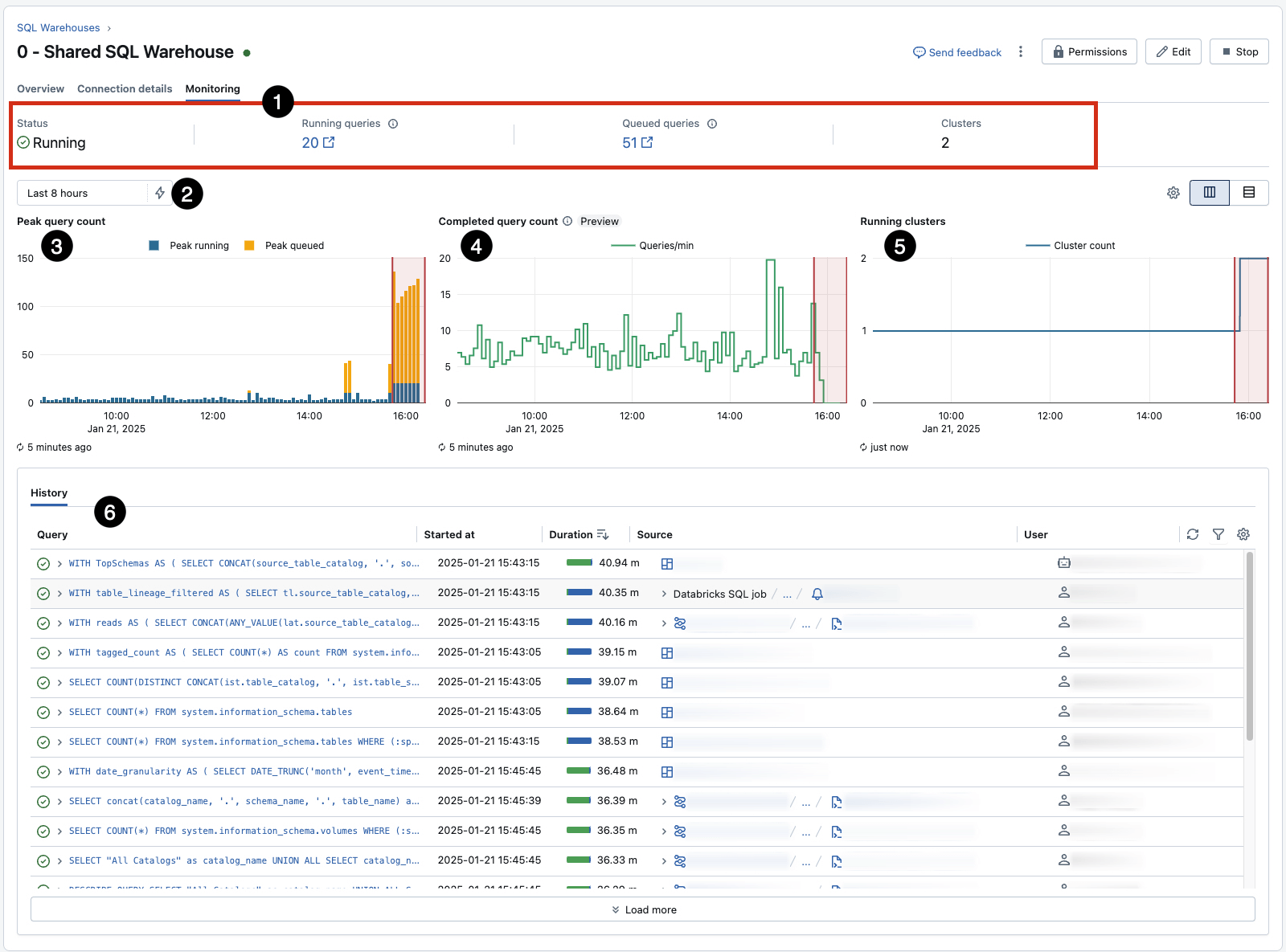

Monitor and Regulate: Use every warehouse’s built-in Monitoring tab (AWS | Azure | GCP) for real-time insights into peak concurrent queries, utilization and different key statistics. This supplies a fast reference for statement, however must be supplemented with additional methods to drive alerts and motion.

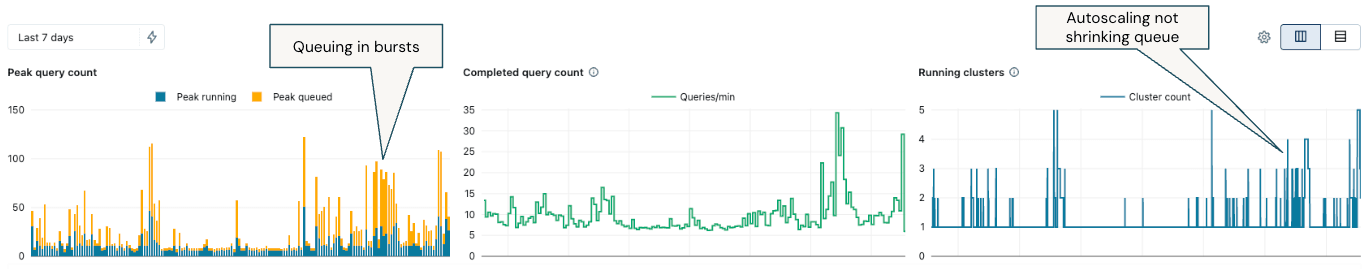

- Pay specific consideration to 3, which reveals queueing as a consequence of concurrency limits for a given warehouse (and will be influenced by resizing) and 5, which exhibits autoscaling occasions in response to the queue. 6 captures question historical past, an ideal place to begin for figuring out and investigating long-running and inefficient workloads.

Leverage system tables: Helps extra granular, bespoke monitoring. Over time, develop customized dashboards and alerts, however benefit from ready choices:

- The Granular SQL Warehouse Monitoring Dashboard supplies a complete view of knowledgeable scaling selections by understanding who and what drives prices.

- The DBSQL Workflow Advisor supplies a view throughout scaling, question efficiency to determine bottlenecks and price attribution.

- Introduce customized SQL Alerts (AWS | Azure | GCP) for in-built notifications discovered from the monitoring occasions from the above.

For patrons interested by price attribution and observability past simply the SQL Warehouse, this devoted weblog, From Chaos to Management: A Value Maturity Journey with Databricks, on the price maturity journey, is a helpful useful resource.

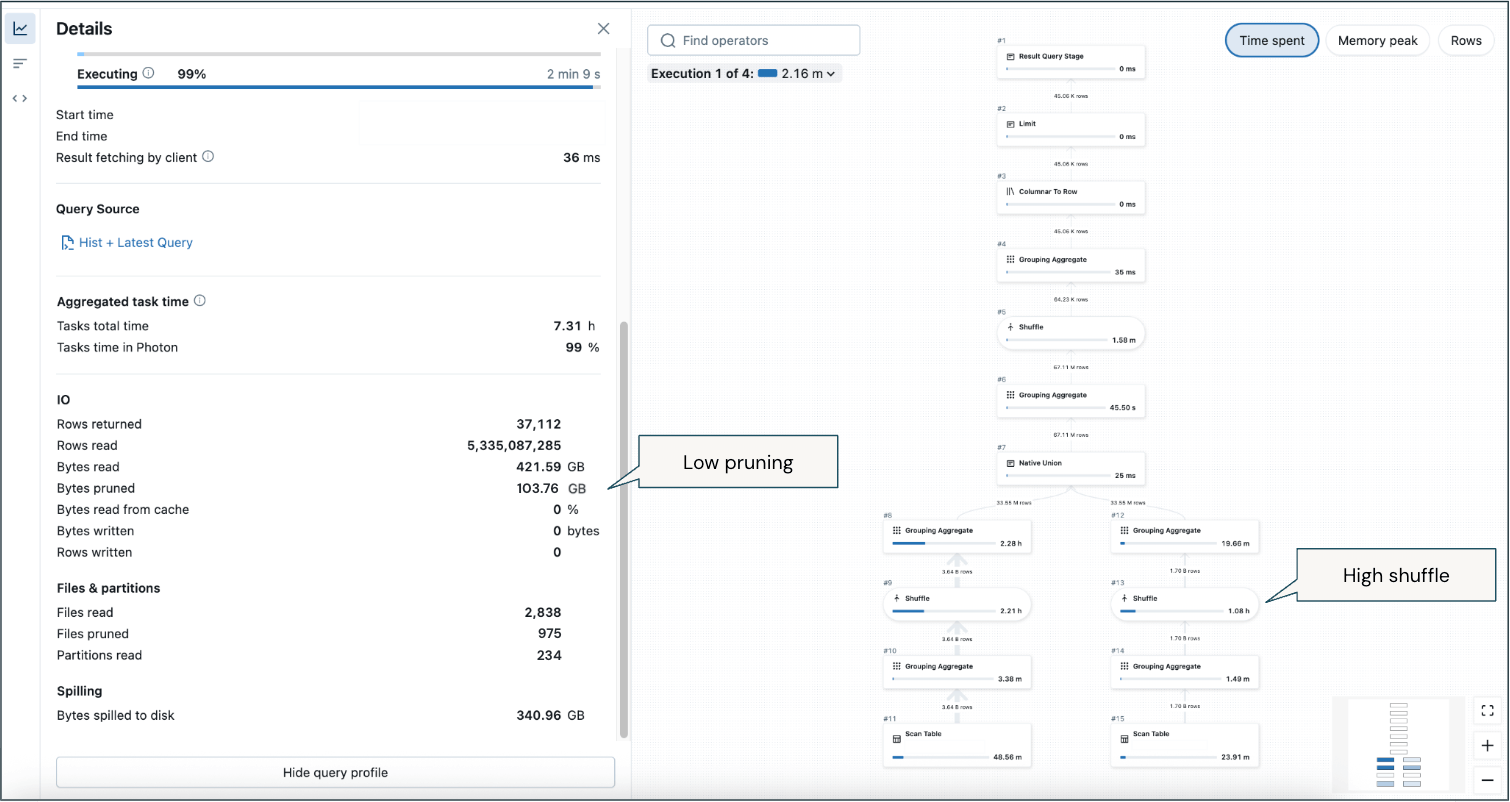

Make the most of Question Profiles: The Question Profile (AWS | Azure | GCP) device is your major diagnostic for particular person question efficiency points. It supplies detailed execution plans and helps pinpoint bottlenecks that have an effect on required compute.

|

|

A number of place to begin options of what to search for from the question profile:

- Examine if pruning happens. If there must be pruning (AWS | Azure | GCP) (i.e., lowering knowledge learn from storage utilizing metadata/statistics of tables), which you’d count on if making use of predicates or joins, nevertheless it’s not occurring, then analyze the file format technique. Ideally, recordsdata/partitions learn must be low and recordsdata pruned must be excessive.

- A big quantity of wall-clock time spent in “Scheduling” (larger than just a few seconds) suggests queuing.

- If the ‘End result fetching by consumer’ period takes more often than not, it signifies a possible community concern between the exterior device/utility and SQL warehouse.

- Bytes learn from the cache will differ relying on utilization patterns, as customers working queries utilizing the identical tables on the identical warehouse will naturally leverage the cached knowledge slightly than re-scanning recordsdata.

- The DAG (Directed Acyclic Graph–AWS | Azure | GCP) permits you to determine steps by period of time they took, reminiscence utilized and rows learn. This may also help slender down efficiency points for extremely advanced queries.

- To detect the small file downside (the place knowledge recordsdata are considerably smaller than the optimum measurement, inflicting inefficient processing), ideally, the common file measurement must be between 128MB and 1GB, relying on the scale of the desk:

- Nearly all of the question plan spent scanning supply desk(s).

- Run

DESCRIBE DETAIL [Table Name]. To search out the common file measurement, divide thesizeInBytesby thenumFiles. Or, within the question profile, use [Bytes read] / [Files read].

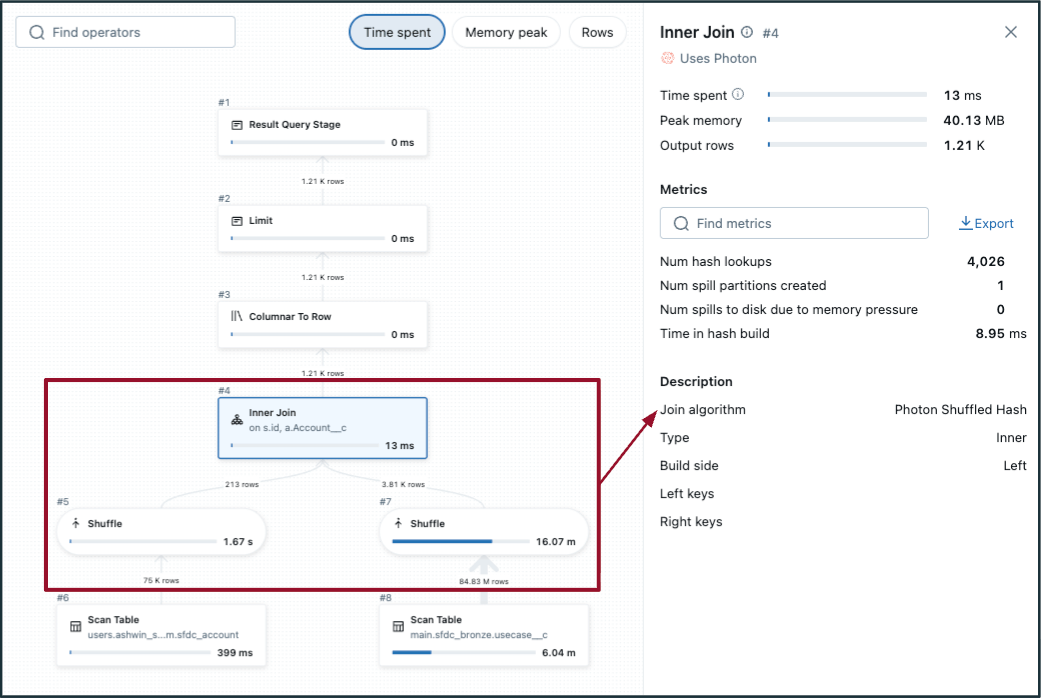

- To detect a doubtlessly inefficient shuffle hash be a part of:

- Select the be a part of step within the DAG and test the “Be part of algorithm”.

- No/low file pruning.

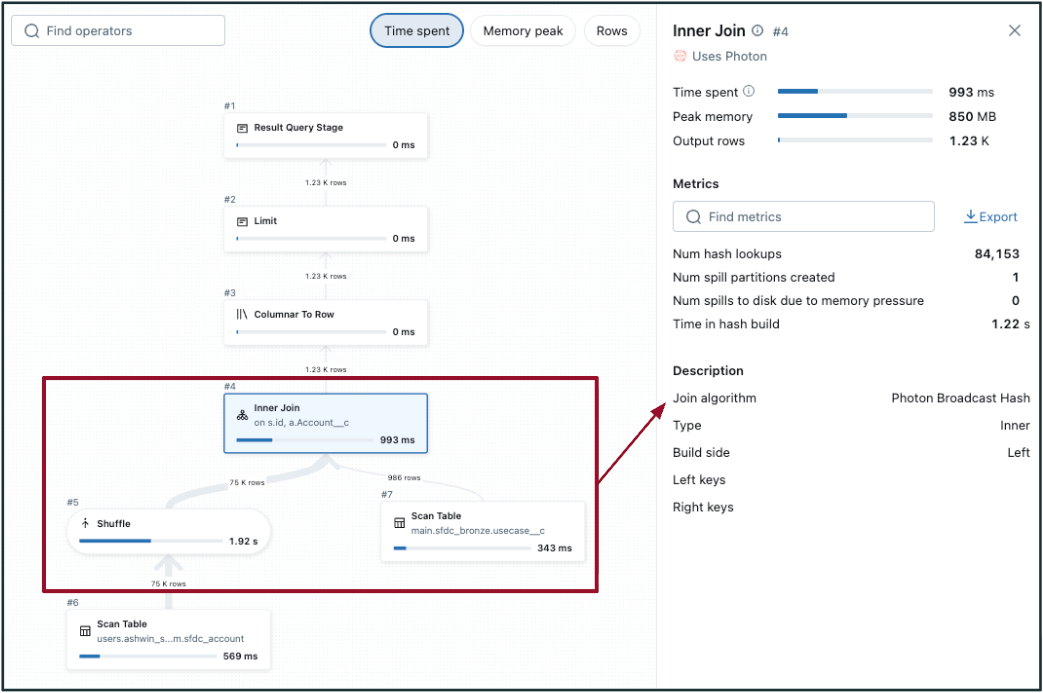

- Within the DAG, shuffle happens on each tables (on both aspect of the be a part of, like within the picture to the left). If one of many tables is sufficiently small, contemplate broadcasting to carry out a broadcast hash be a part of as an alternative (proven within the picture to the fitting).

- Adaptive question execution (AQE) defaults to

- All the time guarantee filters are being utilized to scale back supply datasets.

|  |

Implement Optimizations and Finest Practices

Efficiency Points: The 4 S’s + Queuing

Whether or not configuring compute for a brand new workload or optimizing, it’s a necessity to know the most typical efficiency points. These match into a typical moniker, “The 4 S’s”, with a fifth (queuing) added on:

To cut back question latency in your SQL warehouse, decide whether or not spill, queuing and/or shuffle (skew and small recordsdata will come up later) is the first efficiency bottleneck. This complete information supplies extra particulars. After figuring out the foundation trigger, apply the rules beneath to regulate SQL warehouse sizing accordingly and measure the impression.

- Disk Spill (from reminiscence to disk): Spill happens when a SQL warehouse runs out of reminiscence and writes non permanent outcomes to disk, considerably slower than in-memory processing. In a Question Profile, any quantities towards “spill (bytes)” or “spill time” point out that is occurring.

To mitigate spills, enhance the SQL warehouse T-shirt measurement to supply extra reminiscence. Question reminiscence utilization will also be diminished by means of question optimization methods corresponding to early filtering, lowering skew and simplifying joins. Bettering file format—utilizing appropriately sized recordsdata or making use of Liquid Clustering—can additional restrict the quantity of knowledge scanned and shuffled throughout execution.

Helper question on system tables that may be transformed to a SQL Alert or AI/BI Dashboard

- Question Queuing: If the SQL Warehouse Monitoring display exhibits persistent queuing (the place peak queued queries are >10) that does not instantly resolve with an autoscaling occasion, enhance the max scaling worth to your warehouse. Queuing instantly provides latency as queries anticipate obtainable sources.

Helper question on system tables that may be transformed to a SQL Alert or AI/BI Dashboard

- Excessive Parallelization/Low Shuffle: For queries that may be cut up into many impartial duties—corresponding to filters or aggregations throughout massive datasets—and present low shuffle in Question Profiles, rising the SQL warehouse T-shirt measurement can enhance throughput and cut back queuing. Low shuffle signifies minimal knowledge motion between nodes, which allows extra environment friendly parallel execution.

- Slim transformations (e.g., level lookups, combination lookups) typically profit from extra scaling for concurrent question dealing with. Broad transformations (advanced joins with aggregation) typically profit extra from bigger warehouse sizes versus scaling.

- Excessive Shuffle: Conversely, when shuffle is excessive, massive quantities of knowledge are exchanged between nodes throughout question execution—usually as a consequence of joins, aggregations or poorly organized knowledge. This could be a important efficiency bottleneck. In Question Profiles, excessive shuffle is indicated by massive values underneath “shuffle bytes written”, “shuffle bytes learn” or lengthy durations in shuffle-related phases. If these metrics are persistently elevated, optimizing the question or bettering bodily knowledge format slightly than merely scaling up compute is finest.

Helper question on system tables that may be transformed to a SQL Alert or AI/BI Dashboard

Taking a Macro Monitoring View

Whereas these analyses and guidelines assist perceive how queries impression the warehouse on the micro stage, sizing selections are made on the macro stage. Usually, begin by enabling the monitoring capabilities within the earlier part (and customise them) to determine what is occurring after which set up threshold measures for spill, skew, queuing, and many others., to function indicators for when resizing is required. Consider these thresholds to generate an impression rating by the frequency with which the thresholds are met or the proportion of time the thresholds are exceeded throughout common operation. To share just a few instance measures (outline these utilizing your particular enterprise necessities and SLAs):

- Share of time every day that peak queued queries > 10

- Queries which might be within the high 5% of highest shuffle for an prolonged interval or persistently within the high 5% highest shuffle throughout peak utilization

- Intervals the place a minimum of 20% of queries spill to disk or queries that spill to disk on greater than 25% of their executions

It is necessary to floor this in recognizing there are tradeoffs to think about, not a single recipe to observe or one-size-fits-all for each knowledge warehouse. If queue latency just isn’t a priority, doubtlessly for in a single day queries that refresh, then do not tune for ultra-low-concurrency and acknowledge price effectivity with greater latency. This weblog supplies a information on finest practices and methodologies to diagnose and tune your knowledge warehouse based mostly in your distinctive implementation wants.

Optimizing Bodily Knowledge (File) Structure within the Lakehouse

Under are a number of finest practices for managing and optimizing bodily knowledge recordsdata saved in your lakehouse. Use these and monitoring methods to diagnose and resolve points impacting your knowledge warehouse analytic workloads.

- Regulate the information skipping of a desk (AWS | Azure | GCP) if crucial. Delta tables retailer min/max and different statistics metadata for the primary 32 columns by default. Growing this quantity can enhance DML operation execution occasions, however might lower question runtime if the extra columns are filtered in queries.

- To determine if in case you have the small file downside, overview desk properties (numFiles, sizeInBytes, clusteringColumns, partitionColumns) and use both Predictive Optimization with Liquid Clustering or make sure you run OPTIMIZE compaction routines on high of correctly organized knowledge.

- Whereas the advice is to allow Auto Liquid Clustering and benefit from Predictive Optimization to take away guide tuning, it’s useful to know underlying finest practices and be empowered to tune in choose situations manually. Under are helpful guidelines of thumb for choosing clustering columns:

- Begin with a single column, the one most naturally used as a predicate (and utilizing the options beneath), until there are just a few apparent candidates. Typically, solely enormous tables profit from >1 cluster key.

- Prioritizing columns to make use of prioritizes optimizing reads over writes. They need to be 1) used as filter predicates, 2) utilized in GROUP BY or JOIN operations and three) MERGE columns.

- Usually, it ought to have excessive cardinality (however not distinctive). Keep away from meaningless values like UUID strings until you require fast lookups on these columns.

- Do not cut back cardinality (e.g., convert from timestamp to this point) as you’ll when setting a partition column.

- Do not use two associated columns (e.g., timestamp and datestamp)—at all times select the one with the upper cardinality.

- The order of keys within the CREATE TABLE syntax doesn’t matter. Multi-dimensional clustering is used.

Bringing it All Collectively: A Systematic Strategy

This weblog focuses on the primary three architectural levers. Different important implementation parts contribute to architecting a high-concurrency, scalable, low-latency knowledge warehouse, together with ETL/ELT, infrastructure footprint, DevOps and Governance. Further product perspective on implementing a lakehouse will be discovered right here, and an array of finest practices is out there from the Complete Information to Optimize Databricks, Spark and Delta Lake Workloads.

The foundational parts of your knowledge warehouse—compute, knowledge format and modeling/querying—are extremely interdependent. Addressing efficiency successfully requires an iterative course of: repeatedly monitoring, optimizing and guaranteeing new workloads adhere to an optimized blueprint. And evolve that blueprint as know-how finest practices change and your enterprise necessities change. You need the instruments and know-how to tune your warehouse to satisfy your exact concurrency, latency and scalability necessities. Strong governance, transparency, monitoring and safety allow this core architectural framework. These should not separate concerns however the bedrock for delivering best-in-class knowledge warehouse experiences on Databricks.

Now, let’s discover a latest buyer instance by which the framework and foundational finest practices, tuning and monitoring levers, had been utilized in apply, and a company considerably improved its knowledge warehouse efficiency and effectivity.

Actual-World Situations and Tradeoffs

E mail Advertising and marketing Platform Optimization

Enterprise Context

An electronic mail advertising and marketing platform supplies e-commerce retailers with instruments to create customized buyer journeys based mostly on wealthy buyer knowledge. The applying allows customers to orchestrate electronic mail campaigns to focused audiences, serving to shoppers craft segmentation methods and monitor efficiency. Actual-time analytics are important to their enterprise—prospects count on quick visibility into marketing campaign efficiency metrics like click-through charges, bounces and engagement knowledge.

Preliminary Problem

The platform was experiencing efficiency and price points with its analytics infrastructure. They had been working a Giant SQL Serverless warehouse with autoscaling from 1-5 clusters and even wanted to improve to XL throughout peak reporting intervals. Their structure relied on:

- Actual-time streaming knowledge from a message queue into Delta Lake through steady structured streaming

- A nightly job to consolidate streamed information right into a historic desk

- Question-time unions between the historic desk and streaming knowledge

- Advanced aggregations and deduplication logic executed at question time

This method meant that each buyer dashboard refresh required intensive processing, resulting in greater prices and slower response occasions.

From monitoring the SQL warehouse, there was important queueing (yellow columns), with bursty intervals of utilization, the place autoscaling correctly engaged however was not capable of sustain with workloads:

To diagnose the reason for queueing, we recognized just a few long-running queries and most ceaselessly executed queries utilizing the question historical past (AWS | Azure | GCP) and system tables to find out whether or not queueing was merely as a consequence of a excessive quantity of comparatively fundamental, slender queries or if optimization was wanted to enhance poor-performing queries.

A number of important callouts from this instance profile from a long-running question:

- Low pruning (regardless of important filtering on time interval to return the newest 2 weeks) means a substantial quantity of knowledge is being scanned.

- Excessive shuffle—there’ll inherently be shuffle as a consequence of analytical aggregations, however it’s the majority of reminiscence utilization throughout historic and up to date knowledge.

- Spill to disk in some situations.

These learnings from observing important queries led to optimization actions throughout compute, knowledge format and question methods.

Optimization Strategy

Working with a Databricks Supply Options Architect, the platform applied a number of key optimizations:

- Elevated merge frequency: Modified from nightly to hourly merges, considerably lowering the amount of streaming knowledge that wanted processing at question time.

- Implement Materialized Views: Transformed the aggregation desk right into a materialized view that refreshes incrementally every hour, pre-computing advanced aggregation logic throughout refresh in order that query-time processing is proscribed to solely the newest hour’s knowledge.

- Fashionable knowledge group: Switched from Hive-style partitioning to automated liquid clustering, which intelligently selects optimum clustering columns based mostly on question patterns and adapts over time.

Outcomes

After a six-week discovery and implementation course of, the platform noticed quick and noteworthy enhancements as soon as deployed:

- Decreased infrastructure prices: Downsized from a Giant serverless warehouse with autoscaling to a Small serverless warehouse with no autoscaling.

- Improved question efficiency: Decrease latency for end-user dashboards, enhancing buyer expertise.

- Streamlined operations: Eradicated operational overhead from frequent end-user efficiency complaints and help instances.

An instance of a question profile after optimization:

- Because the file format was optimized, extra file pruning occurred to scale back the quantity of knowledge/recordsdata that wanted to be learn.

- No spill to disk.

- Shuffle nonetheless happens due to analytical aggregations, however the quantity of shuffling is considerably diminished as a consequence of extra environment friendly pruning and pre-aggregated components that do not have to be calculated at runtime.

This transformation demonstrates how making use of knowledge modeling finest practices, leveraging serverless compute and using Databricks superior options like materialized views and liquid clustering can dramatically enhance each efficiency and cost-efficiency.

Key Takeaways

- Focus your necessities on knowledge warehouse concurrency, latency and scale. Then, use finest practices, observability capabilities and tuning methods to satisfy these necessities.

- Give attention to right-sizing compute, implementing sturdy knowledge format practices (considerably helped by AI) and addressing knowledge fashions and queries because the precedence.

- The perfect knowledge warehouse is a Databricks lakehouse—benefit from modern approaches that result in new options, married with foundational knowledge warehouse rules.

- Meet conventional knowledge warehousing wants with out sacrificing AI/ML (you are capitalizing on them with Databricks).

- Do not measurement and tune blindly; leverage built-in observability to observe, optimize and automate cost-saving actions.

- Undertake Databricks SQL Serverless for optimum value efficiency and help the variable utilization patterns typical of BI and analytics workloads.

Subsequent Steps and Further Assets

Reaching a high-concurrency, low-latency knowledge warehouse that scales doesn’t occur by following a boilerplate recipe. There are tradeoffs to think about, and lots of parts all work collectively. Whether or not you are cementing your knowledge warehousing technique, in progress with an implementation and struggling to go stay, or optimizing your present footprint, contemplate the very best practices and framework outlined on this weblog to deal with it holistically. Attain out if you would like assist or to debate how Databricks can help all of your knowledge warehousing wants.

Databricks Supply Options Architects (DSAs) speed up Knowledge and AI initiatives throughout organizations. They supply architectural management, optimize platforms for price and efficiency, improve developer expertise and drive profitable venture execution. DSAs bridge the hole between preliminary deployment and production-grade options, working carefully with numerous groups, together with knowledge engineering, technical leads, executives and different stakeholders to make sure tailor-made options and sooner time to worth. To profit from a customized execution plan, strategic steerage and help all through your knowledge and AI journey from a DSA, please get in contact along with your Databricks Account Group.