Until recently, I held the view that Generative Artificial Intelligence (GenAI) in software development was predominantly suited to greenfield projects. However, the introduction of the Model Context Protocol (MCP) marks a significant shift in this paradigm. MCP emerges as a transformative enabler for legacy modernization, especially for large-scale, long-lived, and complex systems.

As part of my exploration into modernizing the codebase of Bahmni, an open-source Hospital Management System and Electronic Medical Record (EMR), I evaluated the use of the Model Context Protocol (MCP) to support the migration of legacy display controls. To guide this process, I adopted a workflow that I refer to as “Research, Review, Rebuild”, which provides a structured, disciplined, and iterative approach to code migration. This memo outlines the modernization effort, one that goes beyond a simple tech stack upgrade by leveraging GenAI to accelerate delivery while preserving the stability and intent of the existing system. While much of the content focuses on modernizing Bahmni, that is simply because I have hands-on experience with the codebase.

The initial results have been nothing short of remarkable. The streamlined migration effort led to noticeable improvements in code quality, maintainability, and delivery velocity. Based on these early outcomes, I believe this workflow, when augmented with MCP, has the potential to become a game changer for legacy modernization.

Bahmni and Legacy Code Migration

Bahmni is an open-source Hospital Management System & EMR built to support healthcare delivery in low-resource settings, providing a rich interface for clinical and administrative users.

The Bahmni frontend was originally developed using AngularJS (version 1.x), an early but powerful framework for building dynamic web applications. However, AngularJS has long been deprecated by the Angular team at Google, with official long-term support having ended in December 2021.

Despite this, Bahmni continues to rely heavily on AngularJS for many of its core workflows. This reliance introduces significant risks, including security vulnerabilities from unpatched dependencies, difficulty in onboarding developers unfamiliar with the outdated framework, limited compatibility with modern tools and libraries, and reduced maintainability as new requirements are built on an aging codebase.

In healthcare systems, the continued use of outdated software can adversely affect clinical workflows and compromise patient data safety.

For Bahmni, frontend migration has become a critical priority.

Research, Review, Rebuild

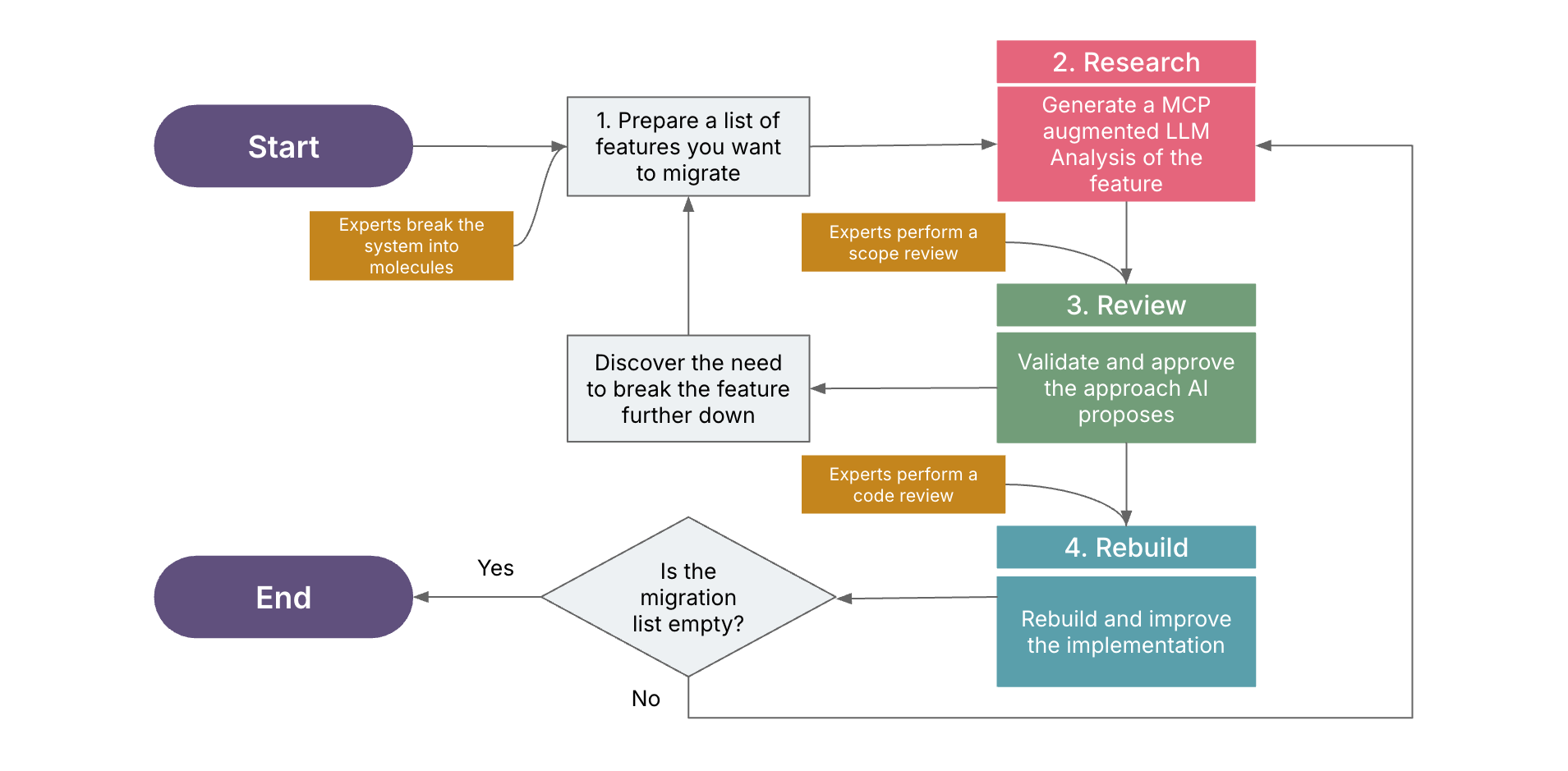

Figure 1: Research, Review, Rebuild Workflow

The workflow I followed is called “Research, Review, Rebuild”: we research the feature to be migrated using a couple of MCP servers, validate and approve the approach the AI proposes, rebuild the feature, and then, once all the code generation is done, refactor anything we did not like.

The Workflow

1. Prepare a list of features targeted for migration. Select one feature to begin with.
2. Use Model Context Protocol (MCP) servers to research the selected feature by generating a contextual analysis of it through a Large Language Model (LLM).
3. Have domain experts review the generated analysis, ensuring it is accurate and aligns with existing project conventions and architectural guidelines. If the feature is not sufficiently isolated for migration, defer it and update the feature list accordingly.
4. Proceed with an LLM-assisted rebuild of the validated feature in the target system or framework.
5. Until the list is empty, return to step 2.
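
The loop described by the workflow above can be sketched in TypeScript as follows. This is a minimal illustration under stated assumptions, not the author's tooling: the `research`, `review`, and `rebuild` callbacks are hypothetical stand-ins for the MCP-augmented LLM analysis, the domain-expert review, and the LLM-assisted rebuild, and every type and function name here is invented for the sketch.

```typescript
// Hypothetical types for illustrating the workflow; none of these names come
// from Bahmni, Cline, or an MCP SDK.
interface Analysis {
  feature: string;
  notes: string[]; // findings produced during the Research phase
}

interface ReviewOutcome {
  analysis: Analysis; // the analysis as corrected by domain experts
  isolated: boolean; // can the feature be migrated on its own?
}

interface WorkflowSteps {
  research: (feature: string) => Analysis; // MCP-augmented LLM analysis
  review: (analysis: Analysis) => ReviewOutcome; // human-in-the-loop review
  rebuild: (analysis: Analysis) => void; // LLM-assisted rebuild
}

interface MigrationReport {
  rebuilt: string[];
  deferred: string[];
}

function runMigration(features: string[], steps: WorkflowSteps): MigrationReport {
  const pending = [...features]; // features targeted for migration
  const report: MigrationReport = { rebuilt: [], deferred: [] };

  // Repeat until the list is empty.
  while (pending.length > 0) {
    const feature = pending.shift() as string;
    const outcome = steps.review(steps.research(feature));

    if (!outcome.isolated) {
      // Not isolated enough: defer it and drop it from this pass.
      report.deferred.push(feature);
      continue;
    }
    steps.rebuild(outcome.analysis);
    report.rebuilt.push(feature);
  }
  return report;
}
```

Deferred features are returned rather than re-queued: pushing them straight back onto the list would loop forever if nothing about their isolation changes, so a later pass decides when to pick them up again.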

Before Getting Started

Before we proceed with the workflow, it is essential to have a high-level understanding of the existing codebase and to determine which components should be retained, discarded, or deferred for future consideration.

In the context of Bahmni, Display Controls are modular, configurable widgets that can be embedded across various pages to enhance the system’s flexibility. Their decoupled nature makes them well-suited for targeted modernization efforts. Bahmni currently includes over 30 display controls developed over time. These controls are highly configurable, allowing healthcare providers to tailor the interface to display pertinent data such as diagnoses, treatments, lab results, and more. By leveraging display controls, Bahmni facilitates a customizable and streamlined user experience, aligning with the diverse needs of healthcare settings.
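
To make “highly configurable” concrete, here is a minimal TypeScript sketch of how a display control’s configuration might be typed and resolved against defaults. The option names (`numberOfVisits`, `maxRows`, `showActive`, `showInactive`) and the default values are hypothetical, loosely modelled on options this memo mentions (a configurable number of visits, the number of rows to display, and whether to show active or inactive medications); they are not Bahmni’s actual configuration keys.

```typescript
// Hypothetical configuration shape for a treatment display control.
interface TreatmentControlConfig {
  title: string;
  numberOfVisits: number; // how many past visits to include
  maxRows: number; // upper bound on rows rendered in the table
  showActive: boolean;
  showInactive: boolean;
}

// Assumed defaults, for illustration only.
const DEFAULT_CONFIG: TreatmentControlConfig = {
  title: "Treatments",
  numberOfVisits: 1,
  maxRows: 10,
  showActive: true,
  showInactive: false,
};

// Merge a per-dashboard override onto the defaults and reject values that
// would make the control render nothing useful.
function resolveConfig(
  override: Partial<TreatmentControlConfig> = {},
): TreatmentControlConfig {
  const config = { ...DEFAULT_CONFIG, ...override };
  if (!Number.isInteger(config.numberOfVisits) || config.numberOfVisits < 1) {
    throw new RangeError("numberOfVisits must be a positive integer");
  }
  if (!Number.isInteger(config.maxRows) || config.maxRows < 1) {
    throw new RangeError("maxRows must be a positive integer");
  }
  return config;
}
```

For example, `resolveConfig({ numberOfVisits: 3, showInactive: true })` keeps the default title and row limit while widening the visit window. Validating at resolution time means a bad dashboard entry fails loudly instead of silently rendering an empty widget.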

All the existing Bahmni display controls are built on OpenMRS REST endpoints, which are tightly coupled with the OpenMRS data model and specific implementation logic. OpenMRS (Open Medical Record System) is an open-source platform designed to serve as a foundational EMR system, primarily for low-resource environments, providing customizable and scalable ways to manage health data, especially in developing countries. Bahmni is built on top of OpenMRS, relying on it for clinical data modeling and patient record management and using its APIs and data structures. When someone uses Bahmni, they are essentially using OpenMRS as part of a larger system.

FHIR (Fast Healthcare Interoperability Resources) is a modern standard for healthcare data exchange, designed to simplify interoperability by using a flexible, modular approach to represent and share clinical, administrative, and financial data across systems. FHIR was introduced by HL7 (Health Level Seven International), a not-for-profit standards development organization that plays a pivotal role in the healthcare industry by developing frameworks and standards for the exchange, integration, sharing, and retrieval of electronic health information. The term “Health Level Seven” refers to the seventh layer of the OSI (Open Systems Interconnection) model, the application layer, which is responsible for managing data exchange between distributed systems.

Although FHIR was initiated in 2011, it reached a significant milestone in December 2018 with the release of FHIR Release 4 (R4). This release introduced the first normative content, marking FHIR’s evolution into a stable, production-ready standard suitable for widespread adoption.

Bahmni’s development began in early 2013, at a time when FHIR was still in its early stages and had not yet achieved normative status. As such, Bahmni relied heavily on the mature and production-proven OpenMRS REST API. Given Bahmni’s dependence on OpenMRS, the availability of FHIR support in Bahmni was inherently tied to OpenMRS’s adoption of FHIR. Until recently, FHIR support in OpenMRS remained limited and experimental, and lacked comprehensive coverage of many essential resource types.

With the recent advances in FHIR support within OpenMRS, a key priority in the ongoing migration effort is to architect the target system using FHIR R4. Leveraging FHIR endpoints facilitates standardization, enhances interoperability, and simplifies integration with external systems, aligning the system with globally recognized healthcare data exchange standards.

For the purpose of this experiment, we will focus specifically on the Treatments Display Control as a representative candidate for migration.

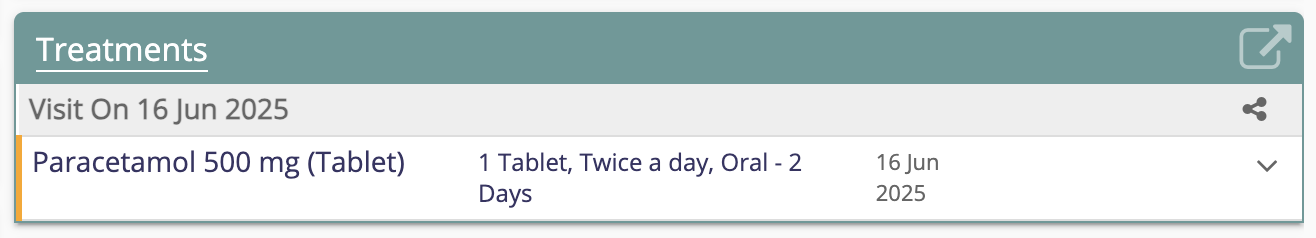

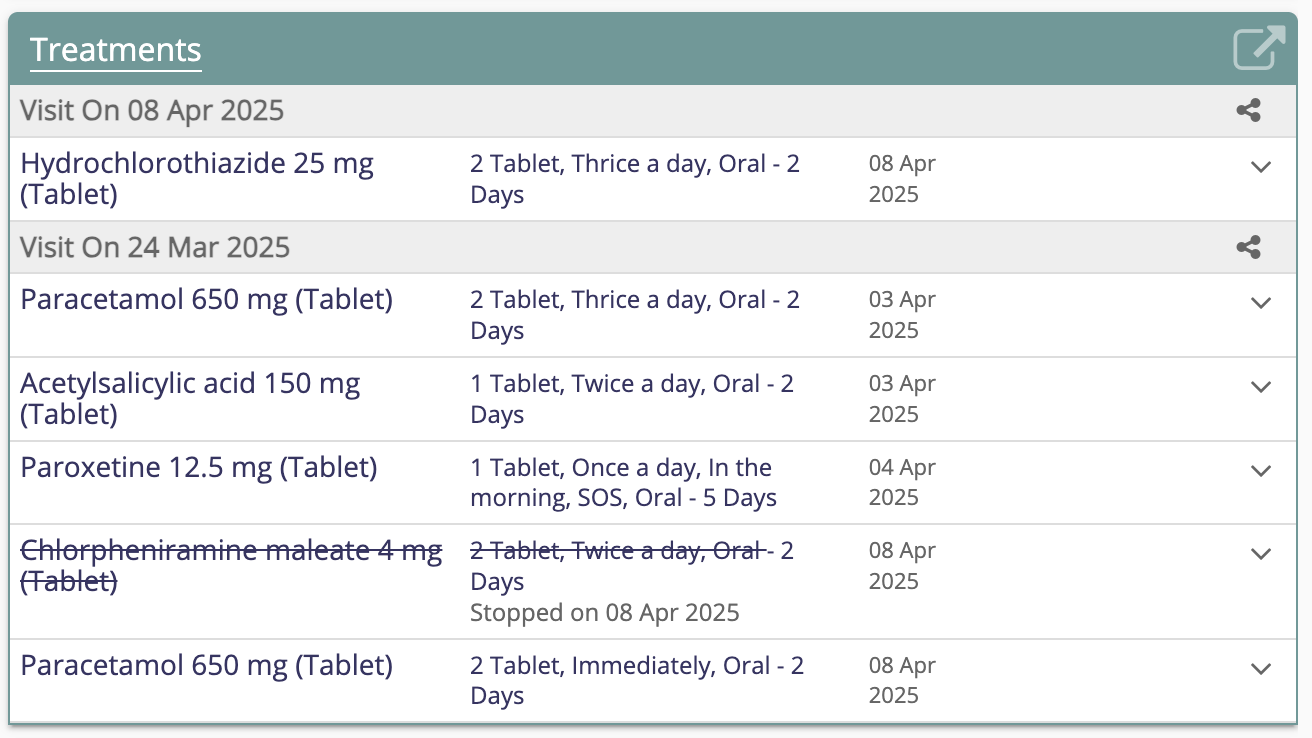

Figure 2: Legacy Treatments Display Control built using Angular and integrated with OpenMRS REST endpoints

The Treatment Details Control is a specific type of display control in Bahmni that presents comprehensive information about a patient’s prescriptions or drug orders over a configurable number of visits. This control is instrumental in providing clinicians with a consolidated view of a patient’s treatment history, aiding informed decision-making. It retrieves data via a REST API and processes it into a view model for UI rendering in a tabular format, supporting both current and historical treatments. The control incorporates error handling, empty-state management, and performance optimizations to ensure a robust and efficient user experience.

The data for this control is sourced from the /openmrs/ws/rest/v1/bahmnicore/drugOrders/prescribedAndActive endpoint, which returns visitDrugOrders. The visitDrugOrders array contains detailed entries that link drug orders to specific visits, along with metadata about the provider, drug concept, and dosing instructions. Each drug order includes prescription details such as drug name, dosage, frequency, duration, administration route, start and stop dates, and standard code mappings (e.g., WHOATC, CIEL, SNOMED-CT, RxNORM).
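
The step that turns visitDrugOrders into a tabular view model can be sketched as below. This is a minimal TypeScript illustration, not Bahmni’s AngularJS code: it types only a small, assumed subset of the response (drug name, dosing instructions, duration, effective start date, provider), and `TreatmentRow`, `withUnit`, and `toTreatmentRows` are hypothetical names. Timestamps are treated as epoch milliseconds, matching the numeric dates in Bahmni’s response.

```typescript
// Assumed subset of the prescribedAndActive response; the real payload has
// many more fields.
interface DosingInstructions {
  dose?: number | null;
  doseUnits?: string | null;
  route?: string | null;
  frequency?: string | null;
}

interface LegacyDrugOrder {
  uuid: string;
  drug?: { name?: string | null } | null;
  dosingInstructions?: DosingInstructions | null;
  duration?: number | null;
  durationUnits?: string | null;
  effectiveStartDate?: number | null; // epoch milliseconds
}

interface VisitDrugOrder {
  visit: { uuid: string; startDateTime: number };
  drugOrder: LegacyDrugOrder;
  provider?: { name?: string | null } | null;
}

// Hypothetical view-model row consumed by a table component.
interface TreatmentRow {
  id: string;
  visitUuid: string;
  drugName?: string;
  dose?: string;
  route?: string;
  frequency?: string;
  duration?: string;
  startDate?: Date;
  provider?: string;
}

// Join an optional amount with its unit, e.g. 2 and "Days" -> "2 Days".
function withUnit(value?: number | null, unit?: string | null): string | undefined {
  return value == null ? undefined : `${value} ${unit ?? ""}`.trim();
}

function toTreatmentRows(visitDrugOrders: VisitDrugOrder[]): TreatmentRow[] {
  return visitDrugOrders.map(({ visit, drugOrder, provider }) => {
    const dosing: DosingInstructions = drugOrder.dosingInstructions ?? {};
    return {
      id: drugOrder.uuid,
      visitUuid: visit.uuid,
      drugName: drugOrder.drug?.name ?? undefined,
      dose: withUnit(dosing.dose, dosing.doseUnits),
      route: dosing.route ?? undefined,
      frequency: dosing.frequency ?? undefined,
      duration: withUnit(drugOrder.duration, drugOrder.durationUnits),
      startDate:
        drugOrder.effectiveStartDate == null
          ? undefined
          : new Date(drugOrder.effectiveStartDate),
      provider: provider?.name ?? undefined,
    };
  });
}
```

Normalizing `null` to `undefined` at this boundary keeps the rendering layer to a single “missing” case, whichever way the backend encodes absent values.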

Here is a sample JSON response from Bahmni’s /bahmnicore/drugOrders/prescribedAndActive REST API endpoint, containing detailed information about a patient’s drug orders during a specific visit, including metadata such as drug name, dosage, frequency, duration, route, and prescribing provider.

```json
{
  "visitDrugOrders": [
    {
      "visit": { "uuid": "3145cef3-abfa-4287-889d-c61154428429", "startDateTime": 1750033721000 },
      "drugOrder": {
        "concept": {
          "uuid": "70116AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA",
          "name": "Acetaminophen",
          "dataType": "N/A",
          "shortName": "Acetaminophen",
          "units": null,
          "conceptClass": "Drug",
          "hiNormal": null,
          "lowNormal": null,
          "set": false,
          "mappings": [
            { "code": "70116", "name": null, "source": "CIEL" },
            /* Response Truncated */
          ]
        },
        "instructions": null,
        "uuid": "a8a2e7d6-50cf-4e3e-8693-98ff212eee1b",
        "orderType": "Drug Order",
        "accessionNumber": null,
        "orderGroup": null,
        "dateCreated": null,
        "dateChanged": null,
        "dateStopped": null,
        "orderNumber": "ORD-1",
        "careSetting": "OUTPATIENT",
        "action": "NEW",
        "commentToFulfiller": null,
        "autoExpireDate": 1750206569000,
        "urgency": null,
        "previousOrderUuid": null,
        "drug": { "name": "Paracetamol 500 mg", "uuid": "e8265115-66d3-459c-852e-b9963b2e38eb", "form": "Tablet", "strength": "500 mg" },
        "drugNonCoded": null,
        "dosingInstructionType": "org.openmrs.module.bahmniemrapi.drugorder.dosinginstructions.FlexibleDosingInstructions",
        "dosingInstructions": {
          "dose": 1.0,
          "doseUnits": "Tablet",
          "route": "Oral",
          "frequency": "Twice a day",
          "asNeeded": false,
          "administrationInstructions": "{\"instructions\":\"As directed\"}",
          "quantity": 4.0,
          "quantityUnits": "Tablet",
          "numberOfRefills": null
        },
        "dateActivated": 1750033770000,
        "scheduledDate": 1750033770000,
        "effectiveStartDate": 1750033770000,
        "effectiveStopDate": 1750206569000,
        "orderReasonText": null,
        "duration": 2,
        "durationUnits": "Days",
        "voided": false,
        "voidReason": null,
        "orderReasonConcept": null,
        "sortWeight": null,
        "conceptUuid": "70116AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA"
      },
      "provider": { "uuid": "d7a67c17-5e07-11ef-8f7c-0242ac120002", "name": "Super Man", "encounterRoleUuid": null },
      "orderAttributes": null,
      "retired": false,
      "encounterUuid": "fe91544a-4b6b-4bb0-88de-2f9669f86a25",
      "creatorName": "Super Man",
      "orderReasonConcept": null,
      "orderReasonText": null,
      "dosingInstructionType": "org.openmrs.module.bahmniemrapi.drugorder.dosinginstructions.FlexibleDosingInstructions",
      "previousOrderUuid": null,
      "concept": {
        "uuid": "70116AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA",
        "name": "Acetaminophen",
        "dataType": "N/A",
        "shortName": "Acetaminophen",
        "units": null,
        "conceptClass": "Drug",
        "hiNormal": null,
        "lowNormal": null,
        "set": false,
        "mappings": [
          { "code": "70116", "name": null, "source": "CIEL" },
          /* Response Truncated */
        ]
      },
      "sortWeight": null,
      "uuid": "a8a2e7d6-50cf-4e3e-8693-98ff212eee1b",
      "effectiveStartDate": 1750033770000,
      "effectiveStopDate": 1750206569000,
      "orderGroup": null,
      "autoExpireDate": 1750206569000,
      "scheduledDate": 1750033770000,
      "dateStopped": null,
      "instructions": null,
      "dateActivated": 1750033770000,
      "commentToFulfiller": null,
      "orderNumber": "ORD-1",
      "careSetting": "OUTPATIENT",
      "orderType": "Drug Order",
      "drug": { "name": "Paracetamol 500 mg", "uuid": "e8265115-66d3-459c-852e-b9963b2e38eb", "form": "Tablet", "strength": "500 mg" },
      "dosingInstructions": {
        "dose": 1.0,
        "doseUnits": "Tablet",
        "route": "Oral",
        "frequency": "Twice a day",
        "asNeeded": false,
        "administrationInstructions": "{\"instructions\":\"As directed\"}",
        "quantity": 4.0,
        "quantityUnits": "Tablet",
        "numberOfRefills": null
      },
      "durationUnits": "Days",
      "drugNonCoded": null,
      "action": "NEW",
      "duration": 2
    }
  ]
}
```

The /bahmnicore/drugOrders/prescribedAndActive model differs significantly from the OpenMRS FHIR MedicationRequest model in both structure and representation. The Bahmni REST model is tailored for UI rendering, with visit-context grouping and OpenMRS-specific constructs such as concept, drug, orderNumber, and flexible dosing instructions. The FHIR MedicationRequest model, by contrast, adheres to international standards with a normalized, reference-based structure that uses resources such as Medication, Encounter, and Practitioner, and coded elements in CodeableConcept and Timing.
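
To make the structural difference concrete, the sketch below flattens a FHIR R4 MedicationRequest into the columns named in the test scenarios later in this memo (drugName, status, priority, provider, startDate, duration, frequency, route, doseQuantity, instruction). It is a minimal TypeScript illustration with a hand-written subset of FHIR types, not OpenMRS’s FHIR module or the generated code. Which FHIR element feeds each column is an assumption: here the start date comes from `authoredOn`, the duration from `timing.repeat.boundsDuration`, and only the first `dosageInstruction` is read.

```typescript
// Minimal subset of FHIR R4 types used by this sketch (not a complete model).
interface Coding { system?: string; code?: string; display?: string }
interface CodeableConcept { coding?: Coding[]; text?: string }
interface Reference { reference?: string; display?: string }
interface Quantity { value?: number; unit?: string }
interface Duration { value?: number; unit?: string }

interface Dosage {
  text?: string;
  timing?: { repeat?: { boundsDuration?: Duration }; code?: CodeableConcept };
  route?: CodeableConcept;
  doseAndRate?: { doseQuantity?: Quantity }[];
}

interface MedicationRequest {
  resourceType: "MedicationRequest";
  id?: string;
  status: string;
  intent: string;
  priority?: string;
  medicationCodeableConcept?: CodeableConcept;
  medicationReference?: Reference; // points at a Medication resource
  requester?: Reference; // typically a Practitioner
  authoredOn?: string;
  dosageInstruction?: Dosage[];
}

// Hypothetical row shape mirroring the table columns.
interface MedicationRow {
  id: string;
  drugName?: string;
  status: string;
  priority?: string;
  provider?: string;
  startDate?: string;
  duration?: string;
  frequency?: string;
  route?: string;
  doseQuantity?: string;
  instruction?: string;
}

// Prefer the concept's text, falling back to the first coding with a display.
const conceptText = (c?: CodeableConcept): string | undefined =>
  c?.text ?? c?.coding?.find((x) => x.display)?.display;

const formatAmount = (q?: { value?: number; unit?: string }): string | undefined =>
  q?.value === undefined ? undefined : `${q.value} ${q.unit ?? ""}`.trim();

function toMedicationRow(req: MedicationRequest): MedicationRow {
  const dosage = req.dosageInstruction?.[0]; // assumption: first dosage is representative
  return {
    id: req.id ?? "",
    drugName: conceptText(req.medicationCodeableConcept) ?? req.medicationReference?.display,
    status: req.status,
    priority: req.priority,
    provider: req.requester?.display,
    startDate: req.authoredOn,
    duration: formatAmount(dosage?.timing?.repeat?.boundsDuration),
    frequency: conceptText(dosage?.timing?.code),
    route: conceptText(dosage?.route),
    doseQuantity: formatAmount(dosage?.doseAndRate?.[0]?.doseQuantity),
    instruction: dosage?.text,
  };
}
```

Where the Bahmni payload carries drug name, provider name, and dosing strings inline, the FHIR resource spreads them across references and CodeableConcepts, which is why even this small mapping needs fallbacks.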

Research

The “Research” phase of the process involves generating an MCP-augmented LLM analysis of the selected Display Control. This phase is centered on understanding the legacy system’s behavior by analyzing its source code and reverse engineering it. Such analysis is essential for informing the forward engineering effort. While not all identified requirements may be carried forward, particularly in long-lived systems where certain functionality may have become obsolete, it is important to have a clear understanding of existing behavior. This enables teams to make informed decisions about which components to retain, discard, or redesign in the target system, ensuring that the modernization effort aligns with current business needs and technical goals.

At this stage, it is helpful to take a step back and consider how human developers typically approach a migration of this nature. One key insight is that migrating from Angular to React relies heavily on contextual understanding. Developers must draw on various dimensions of knowledge to ensure a successful and meaningful transition. The critical areas of focus typically include:

- Function Analysis: understanding the functional intent and role of the existing Angular components within the broader application.
- Data Model Analysis: reviewing the underlying data structures and their relationships to assess compatibility with the new architecture.
- Data Flow Mapping: tracing how data moves from backend APIs to the frontend UI to ensure continuity in the user experience.
- FHIR Model Alignment: identifying whether the existing data model can be mapped to an HL7 FHIR-compatible structure, where applicable.
- Comparative Analysis: evaluating structural and functional similarities, differences, and potential gaps between the old and target implementations.
- Performance Considerations: taking into account areas for performance improvement in the new system.
- Function Relevance: assessing which features should be carried forward, redesigned, or deprecated based on current business needs.

This context-driven analysis is often the most challenging aspect of any legacy migration. Importantly, modernization is not merely about replacing outdated technologies; it is about reimagining the future of the system and the business it supports. It involves evolving the application across its entire lifecycle, including its architecture, data structures, and user experience.

The expertise of subject matter experts (SMEs) and domain specialists is crucial for understanding existing behavior and preparing a guide for the migration. And what better way to capture the expected behavior than through well-defined test scenarios against which the migrated code will be evaluated? Knowing which scenarios are to be tested matters not only for making sure that everything that used to work still works and that the new behavior works as expected, but also because the LLM now has a clearly defined set of goals that it knows are expected. By defining these goals explicitly, we can make the LLM’s responses as deterministic as possible, avoiding the unpredictability of probabilistic responses and ensuring more reliable outcomes during the migration process.

Based on this understanding, I developed a comprehensive and strategically structured prompt designed to capture all relevant information effectively.

While the prompt covers all the expected areas, such as data flow, configuration, key functions, and integration, it also includes several sections that warrant special mention:

- FHIR Compatibility: this section maps the custom Bahmni data model to HL7 FHIR resources and highlights gaps, thereby supporting future interoperability efforts. Completing this mapping requires a solid understanding of FHIR concepts and resource structures and can be time-consuming. It typically involves several hours of detailed analysis to ensure accurate alignment, verify compatibility, and identify divergences between the OpenMRS and FHIR medication models; with the LLM, it can now be done in a matter of seconds.
- Testing Guidelines for React + TypeScript Implementation Over OpenMRS FHIR: this section presents structured test scenarios that emphasize data handling, rendering accuracy, and FHIR compliance for the modernized frontend components. It serves as an excellent foundation for the development process, setting out a mandatory set of criteria that the LLM should satisfy while rebuilding the component.
- Customization Options: this section outlines the available extension points and configuration mechanisms that enhance maintainability and adaptability across diverse implementation scenarios. While some of these options are documented, the LLM-generated analysis often uncovers additional customization paths embedded in the codebase. This helps identify legacy customization approaches more effectively and ensures a more exhaustive understanding of existing capabilities.

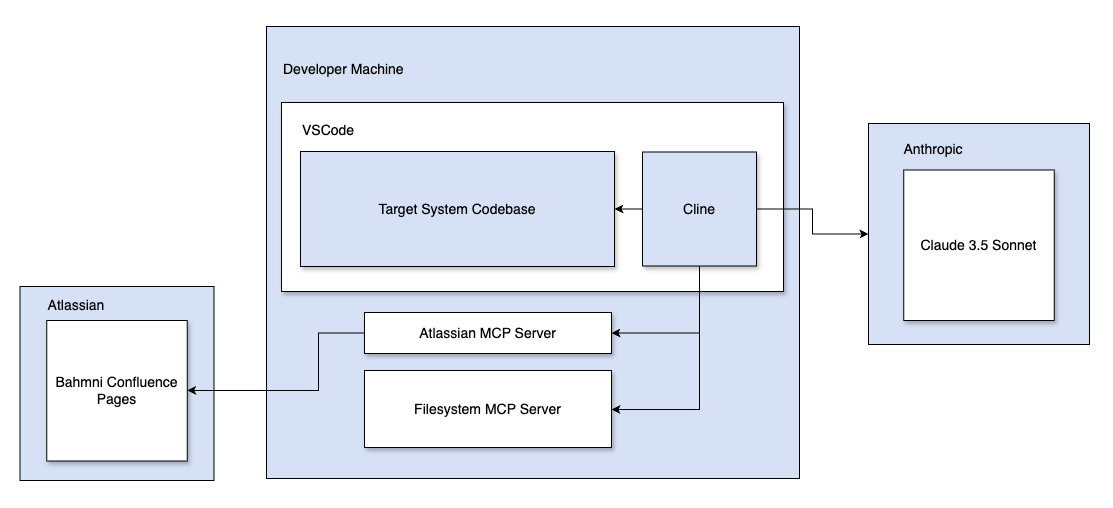

To gather the required data, I used two lightweight servers:

- An Atlassian MCP server to extract any available documentation on the display control.
- A filesystem MCP server, where the legacy frontend code and configuration were mounted, to provide source-code-level analysis.

Figure 3: MCP + Cline + Claude Setup Diagram

While optional, this filesystem server allowed me to focus on the target system’s code within my IDE, with the legacy reference codebases conveniently accessible through the mounted server.

Each of these lightweight servers exposes specific capabilities through the standardized Model Context Protocol, which Cline (my client in this case) then uses to access the codebase, documentation, and configuration. Since the configurations shipped are opinionated and the documents are often outdated, I added specific instructions to treat the source code as the single source of truth and everything else as a supplementary reference.
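
For readers new to MCP, the exchange between a client such as Cline and a server such as the filesystem server is JSON-RPC 2.0: after the initialization handshake, the client discovers the server’s tools with `tools/list` and invokes one with `tools/call`, passing the tool name and its arguments. The TypeScript sketch below only builds those request messages and opens no connection. The tool name `read_file` and the file path are assumptions for illustration; a real server reports its own tool names through `tools/list`.

```typescript
// JSON-RPC 2.0 request envelope, as used by the Model Context Protocol.
interface JsonRpcRequest<P> {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: P;
}

interface ToolCallParams {
  name: string; // a tool name advertised by the server
  arguments: Record<string, unknown>; // tool-specific arguments
}

let nextId = 1;

// Ask the server which tools it exposes.
function listTools(): JsonRpcRequest<Record<string, never>> {
  return { jsonrpc: "2.0", id: nextId++, method: "tools/list" };
}

// Invoke one tool by name.
function callTool(name: string, args: Record<string, unknown>): JsonRpcRequest<ToolCallParams> {
  return { jsonrpc: "2.0", id: nextId++, method: "tools/call", params: { name, arguments: args } };
}

// Hypothetical usage: request a legacy source file from a filesystem server.
const request = callTool("read_file", { path: "/legacy/ui/treatment-control.js" });
console.log(JSON.stringify(request));
```

Because every server speaks this same envelope, swapping the Atlassian server for another documentation source changes only the tool names and arguments, not the client.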

Review

The second phase of the process is where the human in the loop becomes invaluable.

The AI-generated analysis is not meant to be accepted at face value, especially for complex codebases. You will still need a domain expert and an architect to vet, contextualize, and guide the migration process. AI alone is not going to migrate an entire project seamlessly; it requires thoughtful decomposition, clear boundaries, and iterative validation.

Not all of these requirements will necessarily be incorporated into the target system. For example, the ability to print a prescription sheet based on the medications prescribed is deferred for now.

In this case, I augmented the analysis with sample responses from the FHIR endpoint while discarding aspects of the system that are not relevant to the modernization effort. These include performance optimizations, test cases that are not directly relevant to the migration, and configuration options such as the number of rows to display and whether to show active or inactive medications. I felt these could be addressed as part of the next iteration.

For instance, consider the unit test scenarios defined for rendering treatment data:

✅ Happy Path

- It should correctly render the drugName column.
- It should correctly render the status column with the appropriate Tag colour.
- It should correctly render the priority column with the correct priority Tag.
- It should correctly render the provider column.
- It should correctly render the startDate column.
- It should correctly render the duration column.
- It should correctly render the frequency column.
- It should correctly render the route column.
- It should correctly render the doseQuantity column.
- It should correctly render the instruction column.

❌ Sad Path

- It should show a “-” if startDate is missing.
- It should show a “-” if frequency is missing.
- It should show a “-” if route is missing.
- It should show a “-” if doseQuantity is missing.
- It should show a “-” if instruction is missing.
- It should handle cases where the row data is undefined or null.

Replacing missing values with “-” in the sad path scenarios has been removed, as it does not align with the requirements of the target system. Such decisions should be guided by input from subject matter experts (SMEs) and stakeholders, ensuring that only functionality relevant to the current business context is retained.
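
Two of the happy-path scenarios mention Tag colours for status and priority, and one sad-path scenario covers undefined or null rows. A minimal TypeScript sketch of the logic those tests would exercise follows. The status and priority codes are the FHIR R4 value sets for MedicationRequest status and request priority; the colour names and helper names are hypothetical, and choosing the palette is a project decision. Missing values are left as `undefined` rather than replaced with “-”, in line with the decision above.

```typescript
// FHIR R4 value sets for MedicationRequest.status and .priority.
type MedicationRequestStatus =
  | "active"
  | "on-hold"
  | "cancelled"
  | "completed"
  | "entered-in-error"
  | "stopped"
  | "draft"
  | "unknown";
type RequestPriority = "routine" | "urgent" | "asap" | "stat";

// Hypothetical colour names; the real palette is a project decision.
type TagColor = "green" | "blue" | "gray" | "red" | "magenta";

interface TagProps {
  label: string;
  color: TagColor;
}

// Record<...> forces every code in the value set to have a colour.
const STATUS_COLORS: Record<MedicationRequestStatus, TagColor> = {
  active: "green",
  "on-hold": "blue",
  draft: "blue",
  completed: "gray",
  stopped: "gray",
  unknown: "gray",
  cancelled: "red",
  "entered-in-error": "red",
};

const PRIORITY_COLORS: Record<RequestPriority, TagColor> = {
  routine: "gray",
  urgent: "magenta",
  asap: "red",
  stat: "red",
};

function statusTag(status: MedicationRequestStatus): TagProps {
  return { label: status, color: STATUS_COLORS[status] };
}

// Priority is optional on a MedicationRequest, so no tag is produced when absent.
function priorityTag(priority?: RequestPriority): TagProps | undefined {
  return priority === undefined ? undefined : { label: priority, color: PRIORITY_COLORS[priority] };
}

// Sad path: read a cell from a row that may itself be null or undefined.
function cellValue<T extends object, K extends keyof T>(
  row: T | null | undefined,
  key: K,
): T[K] | undefined {
  return row == null ? undefined : row[key];
}
```

Typing the colour tables as `Record` over the full value sets means the compiler flags any status or priority code left without a colour, which is cheaper than catching it in a rendering test.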

The literature gathered on the display control now needs to be coupled with project conventions, practices, and guidelines; without them, the LLM is free to interpret the above request based on the data it was trained on. This includes access to reusable functions, sample data models and services, and reusable atomic components that the LLM can rely on. If such practices, style guides, and guidelines are not clearly defined, every iteration of the migration risks producing non-conforming code. Over time, this can contribute to a fragmented codebase and an accumulation of technical debt.

The core objective is to define clear, project-specific coding standards and style guides to ensure consistency in the generated code. These standards act as a foundational reference for the LLM, enabling it to produce output that aligns with established conventions. For example, the Google TypeScript Style Guide can be summarized and documented as a TypeScript style guide stored in the target codebase. Cline then reads this file at the start of each session to ensure that all generated TypeScript code adheres to a consistent and recognized standard.

Rebuild

Rebuilding the feature for the target system with LLM-generated code is the final phase of the workflow. Now, with all the required data gathered, we can get started with a simple prompt:

You are tasked with building a Treatment display control in the new react ts fhir frontend. You can find the details of the legacy Treatment display control implementation in docs/treatments-legacy-implementation.md. Create the new display control by following the docs/display-control-guide.md
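
Because Bahmni’s display controls share the same architecture, a prompt like this lends itself to templating. The sketch below is a hypothetical TypeScript helper, not the author’s actual reusable prompt: it assumes each control’s reviewed analysis lives at `docs/<slug>-legacy-implementation.md` (generalized from the single path in the prompt) and that one shared `docs/display-control-guide.md` applies to all controls.

```typescript
// Hypothetical description of a display control to be rebuilt.
interface ControlSpec {
  displayName: string; // human-readable name used in the prompt
  slug: string; // file-name stem, e.g. "treatments"
}

const GUIDE_PATH = "docs/display-control-guide.md";

// Assumed convention: one reviewed legacy analysis per control under docs/.
function legacyDocPath(spec: ControlSpec): string {
  return `docs/${spec.slug}-legacy-implementation.md`;
}

function buildRebuildPrompt(spec: ControlSpec): string {
  return [
    `You are tasked with building a ${spec.displayName} display control in the new react ts fhir frontend.`,
    `You can find the details of the legacy ${spec.displayName} display control implementation in ${legacyDocPath(spec)}.`,
    `Create the new display control by following the ${GUIDE_PATH}`,
  ].join(" ");
}

// Usage: reproduce the Treatment prompt; other controls only need a new spec.
console.log(buildRebuildPrompt({ displayName: "Treatment", slug: "treatments" }));
```

Keeping the per-control detail in the reviewed documents, rather than in the prompt text, is what lets one short prompt serve many controls.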

At this stage, the LLM generates the initial code and test scenarios, leveraging the information provided. Once this output is produced, it is essential for domain experts and developers to conduct a thorough code review and apply any necessary refactoring to ensure alignment with project standards, functional requirements, and long-term maintainability.

Refactoring the LLM-generated code is critical to keeping the code clean and maintainable. Without proper refactoring, the result could be a disorganized collection of code fragments rather than a cohesive, efficient system. Given the probabilistic nature of LLMs and the potential discrepancies between the generated code and the original objectives, it is essential to involve domain experts and SMEs at this stage. Their role is to review the code thoroughly, validate that the output meets the initial expectations, and assess whether the migration has been executed successfully. This expert involvement is crucial to the quality, accuracy, and overall success of the migration process.

This phase should be approached as a comprehensive code review, similar to reviewing the work of a senior developer who has strong language and framework expertise but lacks familiarity with the specific project context. While technical proficiency is essential, building robust systems requires a deeper understanding of domain-specific nuances, architectural decisions, and long-term maintainability. In this context, the human in the loop plays a pivotal role, bringing the contextual awareness and system-level understanding that automated tools or LLMs may lack. This review is crucial to ensure that the generated code integrates seamlessly with the broader system architecture and aligns with project-specific requirements.

In our case, the intent and context of the rebuild were clearly defined, which minimized the need for post-review refactoring. The requirements gathered during the research phase, combined with clearly articulated project conventions, technology stack, coding standards, and style guides, left the LLM with minimal ambiguity when generating code. As a result, there was little left for the LLM to infer on its own.

That said, any unresolved questions about the implementation plan can lead to deviations from the expected output. While it is impossible to anticipate and answer every such question upfront, it is important to acknowledge the inevitability of “unknown unknowns.” This is precisely where a thorough review becomes essential.

In this particular instance, my familiarity with the display control we were rebuilding allowed me to minimize such unknowns proactively. However, this level of context may not always be available. Therefore, I strongly recommend conducting a detailed code review to help uncover these hidden gaps. If recurring issues are identified, the prompt can then be refined to address them preemptively in future iterations.

The allure of LLMs is undeniable: they offer a seemingly effortless solution to complex problems, and developers can often create such a solution quickly, without needing years of deep coding experience. This should not bias the experts into succumbing to that allure and eventually taking their hands off the wheel.

Result

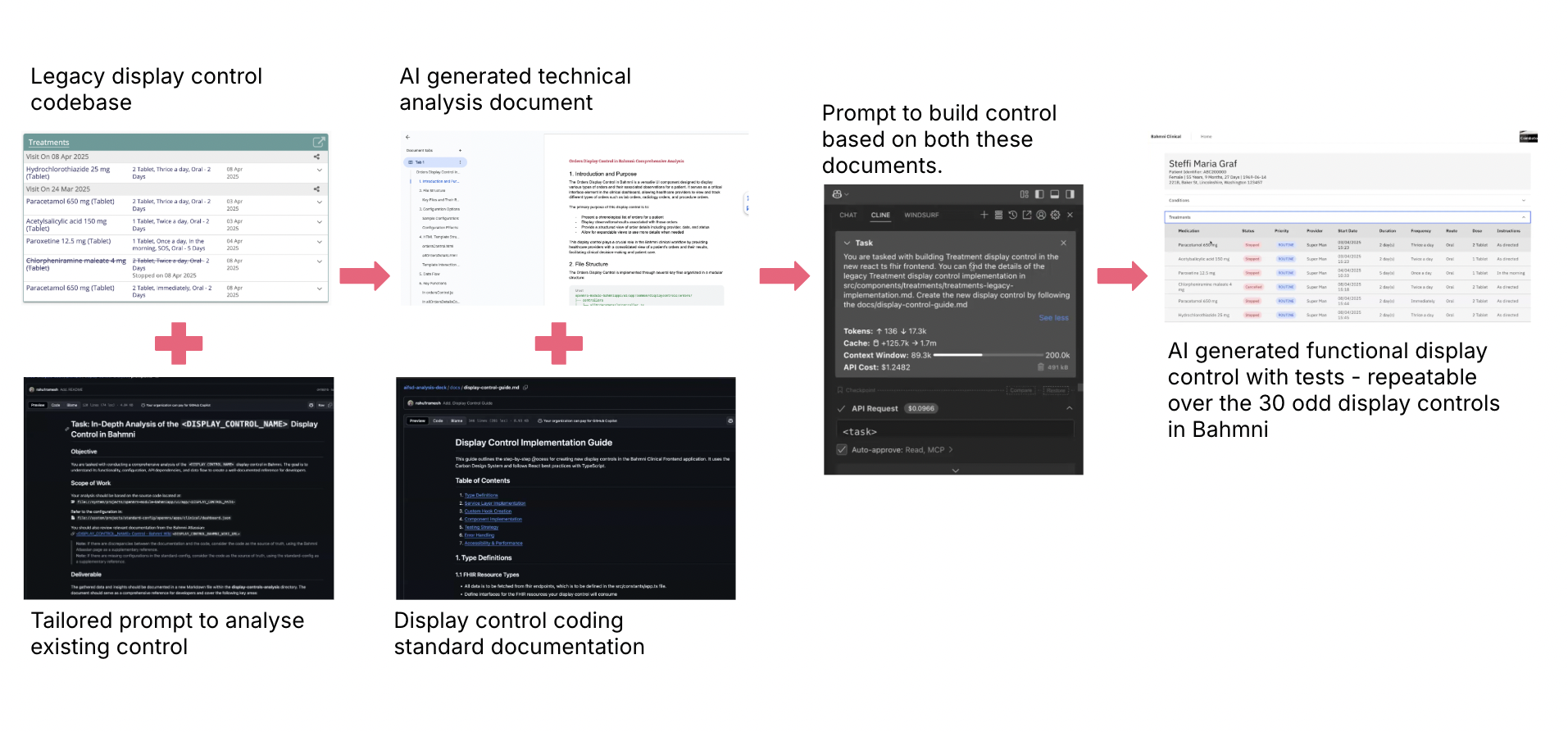

Figure 4: A high-level overview of the process, taking a feature from the legacy codebase and using LLM-assisted analysis to rebuild it within the target system

In my case, the code generation process took about 10 minutes to complete. The analysis and implementation, including both unit and integration tests with approximately 95% coverage, were completed using Claude 3.5 Sonnet (20241022). The total cost for this effort was about $2.

Figure 5: Legacy Treatments Display Control built using Angular and integrated with OpenMRS REST endpoints

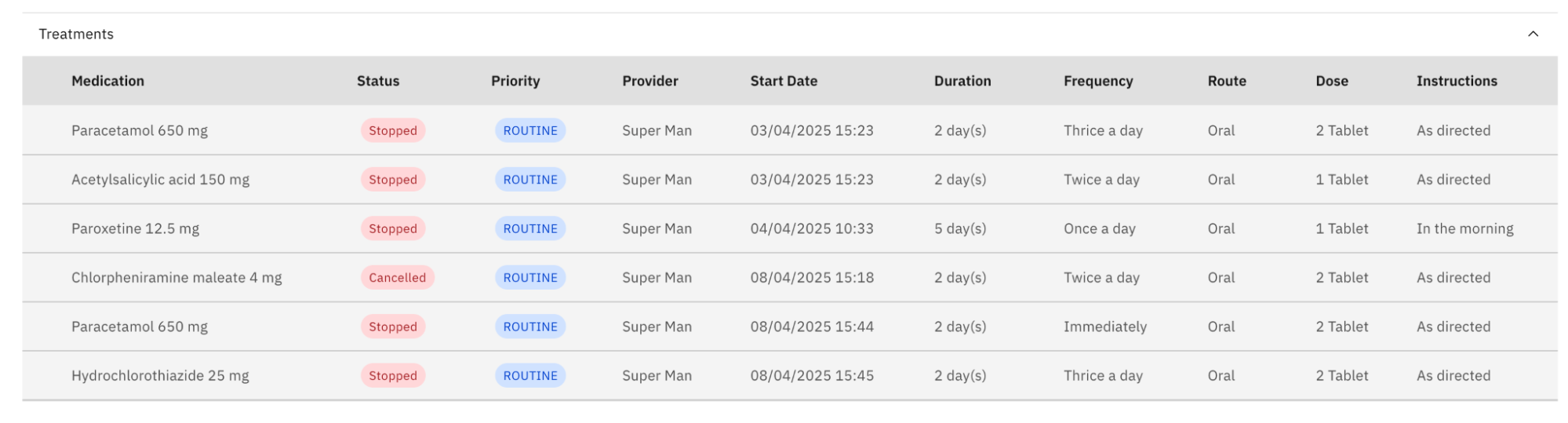

Figure 6: Modernized Treatments Display Control rebuilt using React and TypeScript, leveraging FHIR endpoints

Without AI support, the technical analysis and implementation together would likely have taken a developer at least two to three days. In my case, developing a reusable, general-purpose prompt, grounded in the shared architectural principles behind the roughly 30 display controls in Bahmni, took about five focused iterations over four hours, at a slightly higher inference cost of around $10 across those cycles. This effort was essential to make the prompt modular and broadly applicable, given that each display control in Bahmni is essentially a configurable, embeddable widget designed to enhance system flexibility across different clinical dashboards.

Even with AI-assisted generation, one of the key costs in development remains the time and cognitive load required to analyze, review, and validate the output. Thanks to my prior experience with Bahmni, I was able to review the generated analysis in under 15 minutes, supplementing it with quick parallel research to validate the claims and data mappings. I was pleasantly surprised by the quality of the analysis: the data model mapping was precise, the transformation logic was sound, and the suggested test cases covered a comprehensive range of scenarios, both typical and edge cases.

Code review, however, emerged as the most significant challenge. Reviewing the generated code line by line across all changes took me approximately 20 minutes. Unlike pairing with a human developer, where iterative discussions happen at a manageable pace, working with an AI system capable of generating entire modules within seconds creates a bottleneck on the human side, especially when attempting line-by-line scrutiny. This is not a limitation of the AI itself, but rather a reflection of human review capacity. AI-assisted code reviewers are often proposed as a solution, and they can usually identify syntactic issues, deviations from best practices, and potential anti-patterns; however, they struggle to assess intent, which is critical in legacy migration projects. This intent, grounded in domain context and business logic, must still be confirmed by the human in the loop.

For a legacy modernization project involving a migration from AngularJS to React, I would rate this experience an absolute 10/10. This capability opens up the possibility for anyone with decent technical expertise and strong domain knowledge to migrate any legacy codebase to a modern stack with minimal effort and in significantly less time.

I believe that with a bottom-up approach, breaking the problem down into atomic components, and clearly defining best practices and guidelines, AI-generated code could greatly accelerate delivery timelines, even for complex brownfield projects, as we saw with Bahmni.

The initial analysis, followed by a review by experts, produces a document crisp enough to use the limited space in the context window efficiently, so more information fits into a single prompt. In effect, this lets the LLM analyze the code in a way that is not constrained by how developers originally organized it. It also reduces the overall cost of using LLMs: a brute-force approach would mean spending ten times as much, even on a much simpler project.
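To make the cost argument concrete, the sketch below compares feeding raw legacy files to a model with feeding it the condensed, expert-reviewed analysis. Every number in it is a hypothetical placeholder chosen for round arithmetic, not a measurement from the Bahmni migration. That includes the 128,000-token window, the 8,000-token instruction overhead, and both input sizes. The function names are also invented for illustration. The mechanism is general: input that exceeds the usable window must be split across several prompts, each of which repeats the instructions. As a result, no single prompt ever sees the whole picture.

```typescript
// All figures are hypothetical placeholders for illustration, not Bahmni measurements.
interface PromptInput {
  label: string;
  tokens: number;
}

const CONTEXT_WINDOW_TOKENS = 128_000; // assumed model context limit
const INSTRUCTION_TOKENS = 8_000; // assumed fixed overhead per prompt (task + guidelines)

// Number of separate prompts needed to push `input` through the model,
// given that every prompt must also carry the fixed instructions.
function promptsNeeded(input: PromptInput): number {
  const usable = CONTEXT_WINDOW_TOKENS - INSTRUCTION_TOKENS;
  return Math.ceil(input.tokens / usable);
}

// Input tokens billed: the content once, plus the instructions repeated in every prompt.
function billedTokens(input: PromptInput): number {
  return input.tokens + promptsNeeded(input) * INSTRUCTION_TOKENS;
}

const rawSource: PromptInput = { label: "raw legacy files", tokens: 600_000 };
const reviewedAnalysis: PromptInput = { label: "reviewed analysis", tokens: 56_000 };

for (const input of [rawSource, reviewedAnalysis]) {
  console.log(`${input.label}: ${promptsNeeded(input)} prompt(s), ${billedTokens(input)} input tokens`);
}
```

With these placeholder figures, the raw files need five prompts and 640,000 billed input tokens. The reviewed analysis fits in one prompt at 64,000 tokens. Real ratios depend on the codebase, the model, and how concisely the analysis is written.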

While the modernized codebase is the main product of this approach, it is not the only valuable one. The documentation generated about the system is useful in two ways. End users and implementers can use it to complement or fill gaps in existing system documentation. It can also serve as a knowledge base for forward-engineering teams that pair with LLMs to enhance or extend the system’s capabilities.

Why the Review Phase Matters

A key enabler of this successful migration was a well-structured plan and a detailed scope-analysis phase before implementation. This early investment paid dividends during code generation. Without a clear understanding of the data flow, configuration structure, and display logic, the AI would have struggled to produce coherent and maintainable output. If you have worked with AI before, you may have noticed that it is consistently eager to generate output. In an earlier attempt, I proceeded without sufficient caution and skipped the review step. I then discovered that the generated code included a useMemo hook for a computationally trivial operation. One of the success criteria in the generated analysis was that the code should be performant, and this appeared to be the AI’s way of fulfilling that requirement.

Interestingly, the AI even added unit tests to validate the performance of that specific operation. None of this was explicitly required; it arose solely from a poorly defined intent. The AI incorporated these changes without hesitation, despite not fully understanding the underlying requirements and without seeking clarification.

Reviewing both the generated analysis and the corresponding code catches unintended additions early and minimizes deviations from the original expectations.
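One lightweight way to support that review is to treat success criteria as data and diff them against what a human approved. The sketch below assumes the generated analysis can be reduced to a list of criterion strings. The helper names and the criteria themselves are hypothetical, loosely modeled on the useMemo episode above. The check does not judge intent; it only surfaces what the reviewer needs to look at.

```typescript
// Hypothetical review helper; criterion strings are illustrative, not from the real analysis.
type Criterion = string;

// Normalize so trivial wording differences (case, extra whitespace) don't hide a match.
function normalize(c: Criterion): string {
  return c.trim().toLowerCase().replace(/\s+/g, " ");
}

// Criteria present in the AI-generated analysis that the human reviewer never approved:
// the "unintended additions" that should be questioned before any code is generated.
function unrequestedCriteria(approved: Criterion[], generated: Criterion[]): Criterion[] {
  const approvedSet = new Set(approved.map(normalize));
  return generated.filter((c) => !approvedSet.has(normalize(c)));
}

const approved: Criterion[] = [
  "Render observations in the configured order",
  "Match the legacy control's output for the same configuration",
];

const generated: Criterion[] = [
  "Render observations in the configured order",
  "Match the legacy control's output for the same configuration",
  "Code must be performant (memoize derived values)",
  "Add unit tests that measure rendering performance",
];

const flagged = unrequestedCriteria(approved, generated);
flagged.forEach((c) => console.log(`Needs reviewer sign-off: ${c}`));
```

With these inputs, the helper flags the two performance-related criteria. A reviewer would then decide whether “performant” was ever part of the intended scope.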

Evaluate additionally performs a key position in avoiding pointless back-and-forth

with the AI throughout the rebuild part. For example, whereas refining the

immediate for the “Show Management Implementation

Information”,

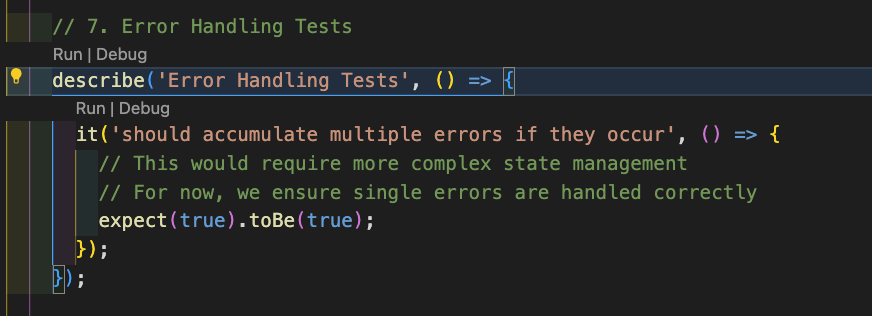

I initially didn’t have the part specifying the unit checks to be

included. Consequently, the AI generated a check that was largely

meaningless—providing a false sense of check protection with no actual

connection to the code underneath check.

Determine 7: AI generated unit check that verifies actuality

continues to be actual

In an attempt to fix this test, I began prompting extensively, providing examples and detailed instructions on how the unit test should be structured. However, the more I prompted, the further the process drifted from the original goal of rebuilding the display control. The focus shifted entirely to resolving unit test issues. The AI even began reviewing unrelated tests in the codebase and suggesting fixes for problems it found there.

Eventually, realizing how far the work had diverged from the intended task, I restarted the process with clearly defined instructions from the outset, which proved far more effective.
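For contrast with the test in Figure 7, here is a minimal, framework-free sketch of the difference between a tautological test and one connected to the code under test. The `formatObservation` helper and its output format are hypothetical stand-ins for a piece of display-control logic, not code from Bahmni. The small `check` function replaces a real test runner such as Jest so the example runs on its own. A guide that explicitly asks for tests of the second kind leaves less room for the first.

```typescript
// Hypothetical display-control helper, used only to illustrate test design.
interface Observation {
  concept: string;
  value: number | null;
  unit?: string;
}

function formatObservation(obs: Observation): string {
  if (obs.value === null) return `${obs.concept}: -`;
  return obs.unit ? `${obs.concept}: ${obs.value} ${obs.unit}` : `${obs.concept}: ${obs.value}`;
}

// Minimal assertion helper so the sketch runs without a test framework.
function check(name: string, actual: unknown, expected: unknown): void {
  if (actual !== expected) {
    throw new Error(`${name}: expected ${String(expected)}, got ${String(actual)}`);
  }
  console.log(`ok - ${name}`);
}

// Tautological: passes regardless of what formatObservation does (in the spirit of Figure 7).
check("reality is still real", true, true);

// Meaningful: each case pins down observable behavior of the code under test.
check("value with unit", formatObservation({ concept: "Pulse", value: 72, unit: "/min" }), "Pulse: 72 /min");
check("value without unit", formatObservation({ concept: "Pain score", value: 3 }), "Pain score: 3");
check("missing value", formatObservation({ concept: "Temperature", value: null, unit: "C" }), "Temperature: -");
```

For a real React control, the same principle applies with a rendering library: assert on what the component renders for a given configuration, not on constants.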

This leads us to a critical insight: Don’t Interrupt AI.

LLMs, at their core, are predictive sequence generators that build narratives token by token. When you interrupt a model mid-stream to course-correct, you break the logical flow it was establishing. Stanford’s “Lost in the Middle” study found that models can suffer up to a 20% drop in accuracy when critical information is buried in the middle of a long context rather than clearly framed upfront. This underscores why starting with a well-defined prompt and letting the AI complete its task uninterrupted often yields better results than constant backtracking or mid-flight corrections.

This idea is also reinforced in “Why Human Intent Matters More as AI Capabilities Grow” by Nick Baumann. Baumann argues that as model capabilities scale, clear human intent, not just raw model power, becomes the key to unlocking useful output. Rather than micromanaging every response, practitioners benefit most from designing clear, unambiguous setups and letting the AI complete the arc without interruption.
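As a sketch of what a well-defined setup can look like in practice, the function below assembles a single migration prompt. The task, the reviewed analysis, guidelines, unit-test requirements, and explicit out-of-scope items all come before the bulky legacy source. The structure, field names, and example wording are hypothetical, not the actual “Display Control Implementation Guide” used for Bahmni. The ordering follows the “Lost in the Middle” observation that information at the start of a long context is used more reliably than information buried in the middle.

```typescript
// Hypothetical prompt specification for migrating one display control.
interface MigrationPromptSpec {
  task: string;
  reviewedAnalysis: string;
  guidelines: string[];
  testRequirements: string[];
  outOfScope: string[];
  legacySource: string;
}

// Put intent, constraints, and test expectations first and the legacy code last,
// so everything the model needs is present before generation starts.
function buildMigrationPrompt(spec: MigrationPromptSpec): string {
  const bullets = (items: string[]): string => items.map((item) => `- ${item}`).join("\n");
  return [
    `## Task\n${spec.task}`,
    `## Reviewed analysis\n${spec.reviewedAnalysis}`,
    `## Guidelines\n${bullets(spec.guidelines)}`,
    `## Unit tests required\n${bullets(spec.testRequirements)}`,
    `## Out of scope\n${bullets(spec.outOfScope)}`,
    `## Legacy AngularJS source\n${spec.legacySource}`,
  ].join("\n\n");
}

const prompt = buildMigrationPrompt({
  task: "Rebuild the read-only observation display control in React.",
  reviewedAnalysis: "(expert-reviewed analysis document goes here)",
  guidelines: ["Use function components", "Do not add memoization unless the analysis requires it"],
  testRequirements: ["Every test must exercise the component or helper under test"],
  outOfScope: ["Do not modify or review unrelated tests"],
  legacySource: "(AngularJS directive and template go here)",
});

console.log(prompt);
```

The out-of-scope list carries the same idea as the restart described above. Constraints that would otherwise arrive as mid-stream corrections, such as leaving unrelated tests alone, are stated before the model starts.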

Conclusion

This approach is not intended to be a silver bullet that can execute a large-scale migration without oversight. Rather, its strength lies in significantly reducing development time, potentially by several weeks, while maintaining quality and control.

The goal is not to replace human expertise but to amplify it. The aim is to accelerate delivery while preserving, if not improving, quality and maintainability during the transition.

Note that the experience and results discussed so far are limited to read-only controls. More complex or interactive components may present additional challenges that require further evaluation and refinement of the prompts used.

One key insight from exploring GenAI for legacy migration concerns where large language models (LLMs) excel. They handle general-purpose tasks and predefined workflows well, but their true potential in large-scale enterprise transformation is realized only when human expertise guides them. Moravec’s Paradox illustrates this well. It observes that tasks perceived as intellectually complex, such as logical reasoning, are relatively easy for AI, while tasks requiring human intuition and contextual understanding remain hard. In legacy modernization, this paradox reinforces the importance of subject matter experts (SMEs) and domain specialists, whose deep experience, contextual understanding, and intuition are indispensable. Their expertise enables more accurate interpretation of requirements, validation of AI-generated output, and informed decision-making. Ultimately, it ensures that the transformation aligns with the organization’s goals and constraints.

Project-specific complexities may make this approach ambitious. Even so, I believe that by adopting this structured workflow, AI-generated code can significantly accelerate delivery timelines, even for complex brownfield projects. The intent is not to replace human expertise but to augment it, streamlining development while safeguarding, and potentially enhancing, code quality and maintainability. The quality and architectural soundness of the legacy system remain critical factors, but this methodology offers a strong starting point. It reduces manual overhead, creates forward momentum, and lays the groundwork for cleaner, more maintainable implementations through expert-led, guided refactoring.

I firmly believe this workflow opens up the possibility for anyone with decent technical expertise and strong domain knowledge to migrate any legacy codebase to a modern stack with minimal effort and in significantly less time.