Two years ago, I wrote a post on Docker and ROS that has become one of my most frequently viewed, likely because it is a tricky topic and many people are looking for answers. Since then, I’ve had the chance to use Docker more in my work and have picked up some new tricks. This was long overdue, but I have finally collected my updated learnings in this post.

Recently, I came across an article critical of using Docker with ROS, and much of it resonated with me. Still, setting up a reproducible ROS environment remains challenging, especially for people without extensive experience with the platform, so Docker is still a crutch that many of us lean on to speed up development.

If the article above hasn’t completely discouraged you from embarking on this Docker adventure, please enjoy reading.

Revisiting Our Dockerfile with ROS 2

Now that ROS 1 is approaching end-of-life in 2025, I thought it would be appropriate to rehash the previous post using ROS 2.

Most of the changes in this section have to do with ROS 2 specifics: client libraries, launch files, and configuring nodes. The examples themselves have been updated to use the latest behavior tree tools for both C++ and Python. For more information on the example and/or behavior trees, refer to my earlier post on the topic.

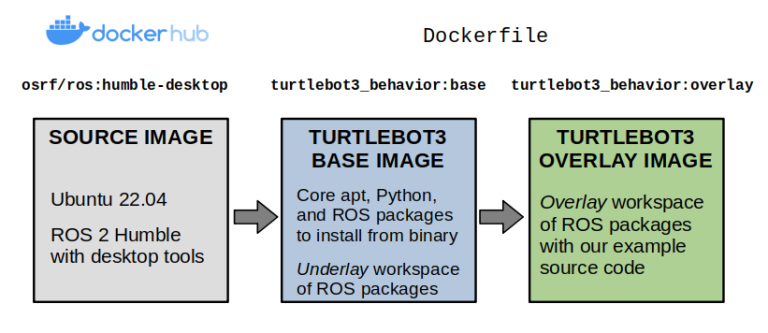

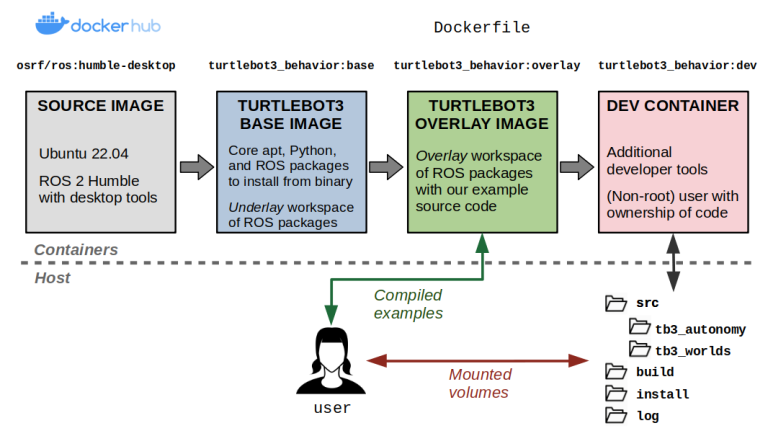

From a Docker standpoint, there aren’t too many differences. Our container layout will now be as follows.

Layers of our TurtleBot3 example Docker image.

We’ll start by making our Dockerfile, which defines the contents of our image. Our initial base layer inherits from one of the public ROS images, `osrf/ros:humble-desktop`, and sets up the dependencies from our example repository into an underlay workspace. These dependencies are defined using a repos file (`dependencies.repos`).

Notice that we’ve set up the argument `ARG ROS_DISTRO=humble` so that it can be changed for other distributions of ROS 2. Rather than creating multiple Dockerfiles for different configurations, you should try using build arguments like these as much as possible, without being “overly clever” in a way that impacts readability.

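    ARG ROS_DISTRO=humble

    ########################################
    # Base Image for TurtleBot3 Simulation #
    ########################################
    FROM osrf/ros:${ROS_DISTRO}-desktop AS base
    ENV ROS_DISTRO=${ROS_DISTRO}
    SHELL ["/bin/bash", "-c"]

    # Create Colcon workspace with external dependencies
    RUN mkdir -p /turtlebot3_ws/src
    WORKDIR /turtlebot3_ws/src
    COPY dependencies.repos .
    RUN vcs import < dependencies.repos

    # Build the base Colcon workspace, installing dependencies first
    # (the overlay stage sources /turtlebot3_ws/install/setup.bash).
    WORKDIR /turtlebot3_ws
    RUN source /opt/ros/${ROS_DISTRO}/setup.bash \
     && apt-get update -y \
     && rosdep install --from-paths src --ignore-src --rosdistro ${ROS_DISTRO} -y \
     && colcon build --symlink-install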

To build your image with a specific argument (say you want to use ROS 2 Rolling instead), you could do the following, provided that all the references to `${ROS_DISTRO}` actually resolve to something valid for the rolling distribution.

    docker build -f docker/Dockerfile \
      --build-arg="ROS_DISTRO=rolling" \
      --target base -t turtlebot3_behavior:base .
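To make the substitution concrete, here is a small shell sketch of how a `ROS_DISTRO` value flows into the base image name and the build command. The helper names (`base_image`, `build_command`) are hypothetical and exist only for illustration. The fallback mirrors the `ARG ROS_DISTRO=humble` default in spirit, and the command is printed rather than executed.

```shell
# Hypothetical helpers (not part of the example repository).
# Falls back to "humble" when ROS_DISTRO is unset or empty,
# similar in spirit to the ARG default in the Dockerfile.
base_image() {
  echo "osrf/ros:${ROS_DISTRO:-humble}-desktop"
}

# Prints the build command for review instead of running it.
build_command() {
  echo "docker build -f docker/Dockerfile --build-arg=ROS_DISTRO=${ROS_DISTRO:-humble} --target base -t turtlebot3_behavior:base ."
}

base_image
build_command
```

Printing the command first is a cheap way to confirm which base image tag a given distribution name would resolve to before committing to a long build.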


I have personally had many issues with the default DDS vendor (FastDDS) in ROS 2 Humble and later, so I like to switch my default implementation to Cyclone DDS by installing it and setting an environment variable to ensure it is always used.

Cyclone DDS is an open-source implementation of the OMG Data Distribution Service (DDS) standard.

    # Use Cyclone DDS as middleware
    RUN apt-get update && apt-get install -y --no-install-recommends \
     ros-${ROS_DISTRO}-rmw-cyclonedds-cpp
    ENV RMW_IMPLEMENTATION=rmw_cyclonedds_cpp
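Once inside a container, a quick sanity check can confirm that the variable is what you expect. The sketch below is only an assumption of how you might do that: `check_rmw` is a hypothetical helper, and it inspects the environment variable rather than querying ROS 2 itself.

```shell
# Hypothetical helper: reports whether the RMW_IMPLEMENTATION
# environment variable selects Cyclone DDS.
check_rmw() {
  if [ "${RMW_IMPLEMENTATION:-}" = "rmw_cyclonedds_cpp" ]; then
    echo "Cyclone DDS selected"
  else
    echo "RMW_IMPLEMENTATION is '${RMW_IMPLEMENTATION:-unset}', expected rmw_cyclonedds_cpp"
    return 1
  fi
}

# Report the current state without aborting the calling shell.
check_rmw || true
```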

Now, we will create our overlay layer. Here, we will copy over the example source code, install any missing dependencies with `rosdep install`, and set up an entrypoint to run every time a container is launched.

    ###########################################
    # Overlay Image for TurtleBot3 Simulation #
    ###########################################
    FROM base AS overlay

    # Create an overlay Colcon workspace
    RUN mkdir -p /overlay_ws/src
    WORKDIR /overlay_ws
    COPY ./tb3_autonomy/ ./src/tb3_autonomy/
    COPY ./tb3_worlds/ ./src/tb3_worlds/
    RUN source /turtlebot3_ws/install/setup.bash \
     && rosdep install --from-paths src --ignore-src --rosdistro ${ROS_DISTRO} -y \
     && colcon build --symlink-install

    # Set up the entrypoint
    COPY ./docker/entrypoint.sh /
    ENTRYPOINT [ "/entrypoint.sh" ]

The entrypoint defined above is a Bash script that sources ROS 2 and any workspaces that are built, and sets up the environment variables necessary to run our TurtleBot3 examples. You can use entrypoints to do any other types of setup you might find useful for your application.

    #!/bin/bash
    # Basic entrypoint for ROS / Colcon Docker containers

    # Source ROS 2
    source /opt/ros/${ROS_DISTRO}/setup.bash

    # Source the base workspace, if built
    if [ -f /turtlebot3_ws/install/setup.bash ]
    then
      source /turtlebot3_ws/install/setup.bash
      export TURTLEBOT3_MODEL=waffle_pi
      export GAZEBO_MODEL_PATH=$GAZEBO_MODEL_PATH:$(ros2 pkg prefix turtlebot3_gazebo)/share/turtlebot3_gazebo/models
    fi

    # Source the overlay workspace, if built
    if [ -f /overlay_ws/install/setup.bash ]
    then
      source /overlay_ws/install/setup.bash
      export GAZEBO_MODEL_PATH=$GAZEBO_MODEL_PATH:$(ros2 pkg prefix tb3_worlds)/share/tb3_worlds/models
    fi

    # Execute the command passed into this entrypoint
    exec "$@"
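The "source if present, then hand over to the command" pattern can be tried without Docker or ROS at all. The following is a minimal sketch under stated assumptions: the temporary files, the `DEMO_SETUP_FILE` and `DEMO_WORKSPACE` variables, and the `run_demo` helper are all hypothetical stand-ins for the real workspace setup files.

```shell
# Toy entrypoint written to a temporary file (illustration only).
demo_entrypoint="$(mktemp)"
cat > "$demo_entrypoint" <<'EOF'
#!/bin/sh
# Source a setup file only if it exists, like the "if built" checks.
if [ -f "${DEMO_SETUP_FILE:-}" ]; then
  . "$DEMO_SETUP_FILE"
fi
# Replace this shell with whatever command was passed in.
exec "$@"
EOF

# Stand-in for a workspace setup file that exports one variable.
demo_setup="$(mktemp)"
echo 'export DEMO_WORKSPACE=sourced' > "$demo_setup"

# Runs the toy entrypoint with a command, much like Docker appends
# the container command to the ENTRYPOINT.
run_demo() {
  DEMO_SETUP_FILE="$demo_setup" sh "$demo_entrypoint" \
    sh -c 'echo "workspace: ${DEMO_WORKSPACE:-not sourced}"'
}

run_demo
```

Because the script ends with `exec "$@"`, the command replaces the entrypoint shell instead of running as its child, so the command itself receives signals such as the one sent on container stop.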

At this point, you should be able to build the full Dockerfile:

    docker build \
      -f docker/Dockerfile --target overlay \
      -t turtlebot3_behavior:overlay .

Then, we can start one of our example launch files with the right settings using this mouthful of a command. Most of these environment variables and volumes are needed to have graphics and ROS 2 networking functioning properly from inside our container.

    docker run -it --net=host --ipc=host --privileged \
      --env="DISPLAY" \
      --env="QT_X11_NO_MITSHM=1" \
      --volume="/tmp/.X11-unix:/tmp/.X11-unix:rw" \
      --volume="${XAUTHORITY}:/root/.Xauthority" \
      turtlebot3_behavior:overlay \
      bash -c "ros2 launch tb3_worlds tb3_demo_world.launch.py"
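Since the graphics settings depend on host variables, it can help to check them before launching. This is a sketch under assumptions, not part of the example repository: `xauth_path` and `preflight` are hypothetical helpers, and falling back to `$HOME/.Xauthority` when `XAUTHORITY` is unset is a common convention rather than a guarantee for every desktop setup.

```shell
# Hypothetical helper: resolves the X authority file, falling back
# to the conventional location when XAUTHORITY is unset or empty.
xauth_path() {
  echo "${XAUTHORITY:-$HOME/.Xauthority}"
}

# Hypothetical preflight check to run on the host before `docker run`.
preflight() {
  if [ -z "${DISPLAY:-}" ]; then
    echo "DISPLAY is not set; graphical programs will not open"
    return 1
  fi
  echo "DISPLAY=${DISPLAY} XAUTHORITY=$(xauth_path)"
}

# Report the current state without aborting the calling shell.
preflight || true
```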

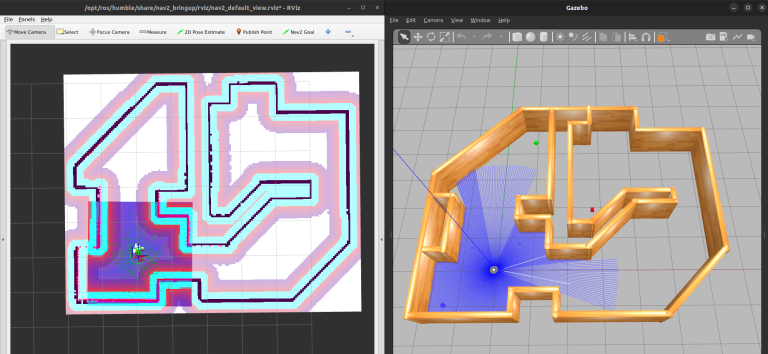

Our TurtleBot3 example simulation with RViz (left) and Gazebo classic (right).

Introducing Docker Compose

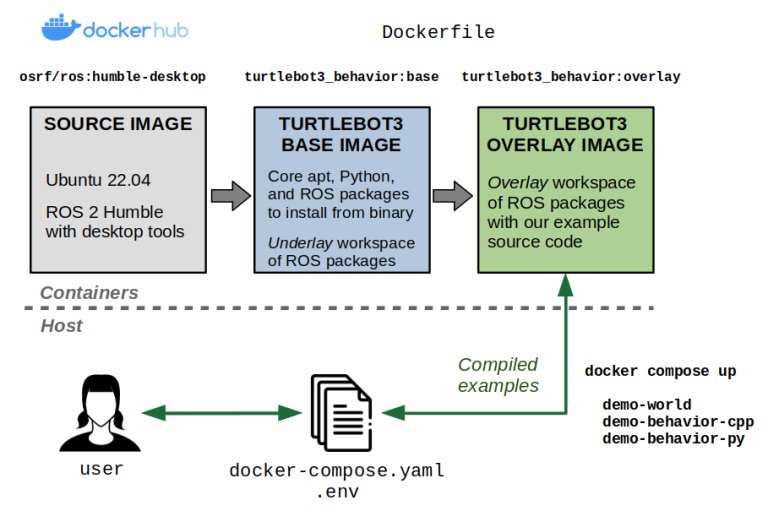

From the last few snippets, we can see how the `docker build` and `docker run` commands can get really long and unwieldy as we add more options. You can wrap these in several abstractions, including scripting languages and Makefiles, but Docker has already solved this problem through Docker Compose.

In brief, Docker Compose allows you to create a YAML file that captures all the configuration needed to build images and run containers.

Docker Compose also differentiates itself from the “plain” Docker command in its ability to orchestrate services. This involves building multiple images, or multiple targets within the same image, and launching several programs at the same time that together comprise an entire application. It also lets you extend existing services to minimize copy-pasting of the same settings in multiple places, define variables, and more.

The end goal is to have short commands to manage our examples:

- `docker compose build` will build what we need

- `docker compose up <service_name>` will launch what we need

The default name of this magical YAML file is `docker-compose.yaml`. For our example, the `docker-compose.yaml` file looks as follows:

    version: "3.9"
    services:
      # Base image containing dependencies.
      base:
        image: turtlebot3_behavior:base
        build:
          context: .
          dockerfile: docker/Dockerfile
          args:
            ROS_DISTRO: humble
          target: base
        # Interactive shell
        stdin_open: true
        tty: true
        # Networking and IPC for ROS 2
        network_mode: host
        ipc: host
        # Needed to display graphical applications
        privileged: true
        environment:
          # Needed to define a TurtleBot3 model type
          - TURTLEBOT3_MODEL=${TURTLEBOT3_MODEL:-waffle_pi}
          # Allows graphical programs in the container.
          - DISPLAY=${DISPLAY}
          - QT_X11_NO_MITSHM=1
          - NVIDIA_DRIVER_CAPABILITIES=all
        volumes:
          # Allows graphical programs in the container.
          - /tmp/.X11-unix:/tmp/.X11-unix:rw
          - ${XAUTHORITY:-$HOME/.Xauthority}:/root/.Xauthority

      # Overlay image containing the example source code.
      overlay:
        extends: base
        image: turtlebot3_behavior:overlay
        build:
          context: .
          dockerfile: docker/Dockerfile
          target: overlay

      # Demo world
      demo-world:
        extends: overlay
        command: ros2 launch tb3_worlds tb3_demo_world.launch.py

      # Behavior demo using Python
      demo-behavior-py:
        extends: overlay
        command: >
          ros2 launch tb3_autonomy tb3_demo_behavior_py.launch.py
          tree_type:=${BT_TYPE:?}
          enable_vision:=${ENABLE_VISION:?}
          target_color:=${TARGET_COLOR:?}

      # Behavior demo using C++ and BehaviorTree.CPP
      demo-behavior-cpp:
        extends: overlay
        command: >
          ros2 launch tb3_autonomy tb3_demo_behavior_cpp.launch.py
          tree_type:=${BT_TYPE:?}
          enable_vision:=${ENABLE_VISION:?}
          target_color:=${TARGET_COLOR:?}

As you can see from the Docker Compose file above, you can specify variables using the familiar `$` operator from Unix-based systems. By default, these variables are read from either your host environment or an environment file (usually called `.env`). Our example `.env` file looks like this:

    # TurtleBot3 model
    TURTLEBOT3_MODEL=waffle_pi

    # Behavior tree type: Can be naive or queue.
    BT_TYPE=queue

    # Set to true to use vision, else false to only do navigation behaviors.
    ENABLE_VISION=true

    # Target color for vision: Can be red, green, or blue.
    TARGET_COLOR=blue
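The two interpolation forms used in the Compose file, `${VAR:-default}` and `${VAR:?}`, have direct shell counterparts, so their behavior can be explored in a terminal. A minimal sketch, where `model_or_default` and `require_bt_type` are hypothetical helper names and the error message text is my own:

```shell
# ${VAR:-default}: use a fallback when VAR is unset or empty.
model_or_default() {
  echo "${TURTLEBOT3_MODEL:-waffle_pi}"
}

# ${VAR:?message}: fail loudly when VAR is unset or empty.
# The subshell keeps the failure from terminating the calling shell.
require_bt_type() {
  if ( : "${BT_TYPE:?BT_TYPE must be set}" ) 2>/dev/null; then
    echo "BT_TYPE=${BT_TYPE}"
  else
    echo "BT_TYPE is missing"
  fi
}

model_or_default
require_bt_type
```

The practical consequence is that the model type quietly falls back to `waffle_pi`, while the behavior demo services refuse to start unless `BT_TYPE`, `ENABLE_VISION`, and `TARGET_COLOR` are provided by the environment or the `.env` file.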

At this point, you can build everything:

    # By default, picks up a `docker-compose.yaml` and `.env` file.
    docker compose build

    # You can also explicitly specify the files
    docker compose --file docker-compose.yaml --env-file .env build

Then, you can run the services you care about:

    # Bring up the simulation
    docker compose up demo-world

    # After the simulation has started,
    # launch one of these in a separate Terminal
    docker compose up demo-behavior-py
    docker compose up demo-behavior-cpp

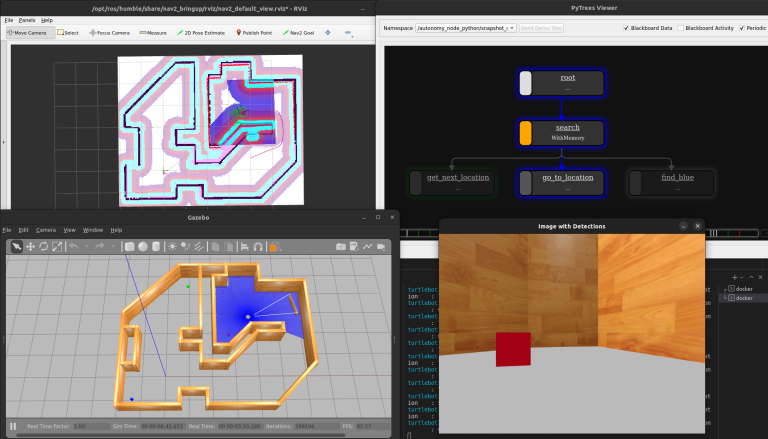

The full TurtleBot3 demo running with a behavior tree.

Organising Developer Containers

Our example so far works great if we want to package up working examples for other users. However, if you want to develop the example code within this environment, you will need to overcome the following obstacles:

- Every time you modify your code, you will need to rebuild the Docker image. This makes it extremely inefficient to get feedback on your code changes, and is already an instant deal-breaker.

- You can solve the above by mounting the source code as a volume, which keeps the code on your host machine in sync with the code in the container. This gets us on the right track, but you’ll find that any files generated inside the container and mounted on the host will be owned by `root` by default. You can get around this by whipping out the `sudo` and `chown` hammer, but it’s not necessary.

- All the tools you may use for development, including debuggers, are likely missing inside the container unless you install them in the Dockerfile, which can bloat the size of your image.

Luckily, there is a concept of a developer container (or dev container): a container that lets you do your development in the same Docker environment you would use to distribute your application.

There are many ways of implementing dev containers. For our example, we will modify the Dockerfile to add a new `dev` target that extends our existing `overlay` target.

Dev containers allow us to develop inside a container from our host system with minimal overhead.

This dev container will do the following:

- Install additional packages that we may find helpful for development, such as debuggers and text editors. Critically, these will not be part of the `overlay` layer that we will ship to our end users.

- Create a new user that has the same user and group identifiers as the user that built the container on the host. This ensures that all files generated within the container (in folders we care about) have the same ownership settings as if we had created them on our host. By “folders we care about”, we mean the ROS workspace that contains the source code.

- Put our entrypoint script in the user’s Bash profile (`~/.bashrc` file). This lets us source our ROS environment not just at container startup, but every time we attach a new interactive shell while our dev container remains up.

    #####################
    # Development Image #
    #####################
    FROM overlay AS dev

    # Dev container arguments
    ARG USERNAME=devuser
    ARG UID=1000
    ARG GID=${UID}

    # Install extra tools for development
    RUN apt-get update && apt-get install -y --no-install-recommends \
     gdb gdbserver nano

    # Create new user and home directory
    RUN groupadd --gid $GID $USERNAME \
     && useradd --uid ${UID} --gid ${GID} --create-home ${USERNAME} \
     && echo ${USERNAME} ALL=\(root\) NOPASSWD:ALL > /etc/sudoers.d/${USERNAME} \
     && chmod 0440 /etc/sudoers.d/${USERNAME} \
     && mkdir -p /home/${USERNAME} \
     && chown -R ${UID}:${GID} /home/${USERNAME}

    # Set the ownership of the overlay workspace to the new user
    RUN chown -R ${UID}:${GID} /overlay_ws/

    # Set the user and source entrypoint in the user's .bashrc file
    USER ${USERNAME}
    RUN echo "source /entrypoint.sh" >> /home/${USERNAME}/.bashrc

You can then add a new `dev` service to the `docker-compose.yaml` file. Notice that we’re adding the source code as volumes to mount, but we’re also mapping the folders generated by `colcon build` to a `.colcon` folder on our host file system. This way, generated build artifacts persist between stopping our dev container and bringing it back up; otherwise, we’d have to do a clean rebuild every time.

      dev:
        extends: overlay
        image: turtlebot3_behavior:dev
        build:
          context: .
          dockerfile: docker/Dockerfile
          target: dev
          args:
            - UID=${UID:-1000}
            - GID=${UID:-1000}
            - USERNAME=${USERNAME:-devuser}
        volumes:
          # Mount the source code
          - ./tb3_autonomy:/overlay_ws/src/tb3_autonomy:rw
          - ./tb3_worlds:/overlay_ws/src/tb3_worlds:rw
          # Mount Colcon build artifacts for faster rebuilds
          - ./.colcon/build/:/overlay_ws/build/:rw
          - ./.colcon/install/:/overlay_ws/install/:rw
          - ./.colcon/log/:/overlay_ws/log/:rw
        user: ${USERNAME:-devuser}
        command: sleep infinity
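One detail worth checking is whether `UID` is actually visible to Docker Compose. In Bash, `UID` is a read-only shell variable that is not exported by default, so the `${UID:-1000}` fallback may silently apply even if your real user ID differs. The sketch below assumes you want to pass your real ID explicitly; `dev_build_command` is a hypothetical helper, and the command is only printed, not run.

```shell
# Hypothetical helper: prints (does not run) a build command that
# passes the host user ID through `env`, since Bash treats UID as a
# read-only shell variable that is not exported by default.
dev_build_command() {
  echo "env UID=$(id -u) docker compose build dev"
}

dev_build_command
```

If your user ID already happens to be 1000, the default works as-is and none of this is needed.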

At this point, you can do:

    # Start the dev container
    docker compose up dev

    # Attach an interactive shell in a separate Terminal
    # NOTE: You can do this multiple times!
    docker compose exec -it dev bash

Because we have mounted the source code, you can make modifications on your host and rebuild inside the dev container, or you can use editor tooling that attaches to a running container to develop directly inside it. Up to you.

For example, once you’re inside the container, you can build the workspace with:

    colcon build

Due to our volume mounts, you’ll see that the contents of the `.colcon/build`, `.colcon/install`, and `.colcon/log` folders on your host have been populated. This means that if you shut down the dev container and bring up a new instance, these files will still exist and will speed up rebuilds using `colcon build`.
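One caveat, stated as an assumption about typical Docker setups rather than something taken from the example repository: if a bind-mount source folder does not exist on the host, the Docker daemon usually creates it, and it may end up owned by `root`. Creating the folders yourself beforehand sidesteps that. `prepare_colcon_dirs` is a hypothetical helper, demonstrated here on a scratch directory:

```shell
# Hypothetical helper: creates the host-side folders that the dev
# service mounts, under the given project directory (default: ".").
prepare_colcon_dirs() {
  project_dir="${1:-.}"
  mkdir -p "$project_dir/.colcon/build" \
           "$project_dir/.colcon/install" \
           "$project_dir/.colcon/log"
}

# Example: prepare the folders in a scratch directory.
scratch_dir="$(mktemp -d)"
prepare_colcon_dirs "$scratch_dir"
ls -A "$scratch_dir/.colcon"
```

In a real checkout you would call `prepare_colcon_dirs` with no argument from the repository root, before the first `docker compose up dev`.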

Also, because we went through the trouble of creating a user, you’ll see that these files are not owned by `root`, so you can delete them if you’d like to clean out the build artifacts. If you try this without creating the new user, you’ll run into some annoying permissions roadblocks.

    $ ls -al .colcon
    total 20
    drwxr-xr-x  1 sebastian sebastian 4096 Jul  9 10:15 .
    drwxr-xr-x 10 sebastian sebastian 4096 Jul  9 10:15 ..
    drwxr-xr-x  4 sebastian sebastian 4096 Jul  9 11:29 build
    drwxrwxr-x  4 sebastian sebastian 4096 Jul  9 11:29 install
    drwxr-xr-x  5 sebastian sebastian 4096 Jul  9 11:31 log
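Rather than reading the listing by eye, you can ask `find` for anything that is not yours. This is a small sketch, with `not_owned_by_me` as a hypothetical helper name, shown on a scratch directory so it runs anywhere; on a real checkout you would point it at `.colcon`.

```shell
# Hypothetical helper: lists entries under a directory that are NOT
# owned by the current user (empty output means everything is yours).
not_owned_by_me() {
  find "$1" ! -user "$(id -u)"
}

# Example on a scratch directory created by the current user.
check_dir="$(mktemp -d)"
touch "$check_dir/example_artifact"
not_owned_by_me "$check_dir"
```

Any path this prints for `.colcon` is a candidate for the permissions trouble described above.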

The concept of dev containers is so widespread at this point that a standard has emerged around it. There are also other great community resources on the topic, as well as official tutorials.

Conclusion

In this post, you have seen how Docker and Docker Compose can help you create reproducible ROS 2 environments. This includes the ability to configure variables at build time and run time, as well as creating dev containers to help you develop your code in these environments before distributing it to others.

We’ve only scratched the surface in this post, so make sure you poke around at the resources linked throughout and stay curious about what else you can do with Docker to make your life (and your users’ lives) easier.

As always, please feel free to reach out with questions and feedback. Docker is a highly configurable tool, so I’m genuinely curious about how this works for you, or whether you have approached things differently in your work. I might learn something new!

Sebastian Castro

Working as a Senior Robotics Engineer at PickNik.