Agents are only as capable as the tools you give them, and only as trustworthy as the governance behind those tools.

This blog post is the second in a six-part blog series called Agent Factory, which shares best practices, design patterns, and tools to help guide you through adopting and building agentic AI.

In the previous blog, we explored five common design patterns of agentic AI, from tool use and reflection to planning, multi-agent collaboration, and adaptive reasoning. These patterns show how agents can be structured to achieve reliable, scalable automation in real-world environments.

Across the industry, we’re seeing a clear shift. Early experiments focused on single-model prompts and static workflows. Now, the conversation is about extensibility: how to give agents a broad, evolving set of capabilities without locking into one vendor or rewriting integrations for each new need. Platforms are competing on how quickly developers can:

- Integrate with hundreds of APIs, services, data sources, and workflows.

- Reuse those integrations across different teams and runtime environments.

- Maintain enterprise-grade control over who can call what, when, and with what data.

The lesson from the past year of agentic AI evolution is simple: agents are only as capable as the tools you give them, and only as trustworthy as the governance behind those tools.

Extensibility through open standards

In the early stages of agent development, integrating tools was often a bespoke, platform-specific effort. Each framework had its own conventions for defining tools, passing data, and handling authentication. This created several consistent blockers:

- Duplication of effort: the same internal API had to be wrapped differently for each runtime.

- Brittle integrations: small changes to schemas or endpoints could break multiple agents at once.

- Limited reusability: tools built for one team or environment were hard to share across projects or clouds.

- Fragmented governance: different runtimes enforced different security and policy models.

As organizations began deploying agents across hybrid and multi-cloud environments, these inefficiencies became major obstacles. Teams needed a way to standardize how tools are described, discovered, and invoked, regardless of the hosting environment.

That’s where open protocols entered the conversation. Just as HTTP transformed the web by creating a common language for clients and servers, open protocols for agents aim to make tools portable, interoperable, and easier to govern.

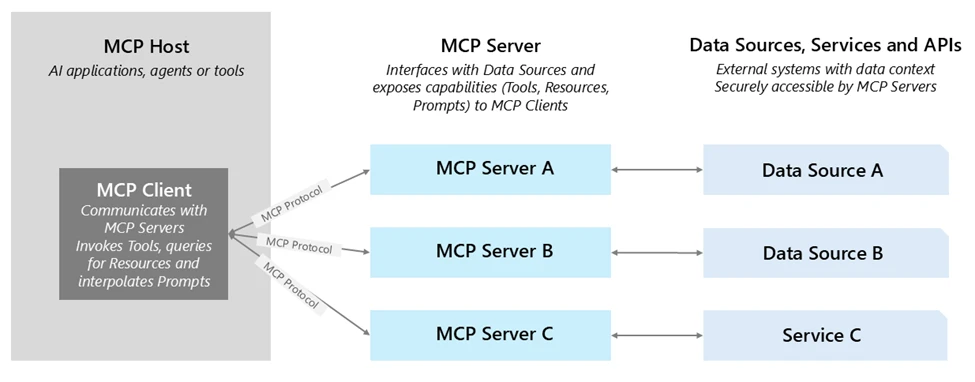

One of the most promising examples is the Model Context Protocol (MCP), a standard for defining tool capabilities and I/O schemas so any MCP-compliant agent can dynamically discover and invoke them. With MCP:

- Tools are self-describing, making discovery and integration faster.

- Agents can find and use tools at runtime without manual wiring.

- Tools can be hosted anywhere (on-premises, in a partner cloud, or in another business unit) without losing governance.
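To make the idea of self-describing, runtime-discoverable tools concrete, here is a minimal Python sketch. It does not use the MCP SDK or the MCP wire format; `ToolSpec`, `ToolServer`, `list_tools`, `call_tool`, and the `get_order_status` tool are all hypothetical names chosen for illustration. It only shows the general pattern: a tool publishes its name, description, and input schema, and a caller discovers it and invokes it without hand-written wiring.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class ToolSpec:
    """A self-describing tool: name, description, input schema, handler."""
    name: str
    description: str
    input_schema: dict[str, type]  # hypothetical schema: field name -> Python type
    handler: Callable[..., Any]


class ToolServer:
    """Hypothetical stand-in for a server hosting tools (not the MCP SDK)."""

    def __init__(self) -> None:
        self._tools: dict[str, ToolSpec] = {}

    def register(self, spec: ToolSpec) -> None:
        self._tools[spec.name] = spec

    def list_tools(self) -> list[dict[str, Any]]:
        # Discovery returns descriptions only, never the handlers themselves.
        return [
            {
                "name": t.name,
                "description": t.description,
                "input_schema": {k: v.__name__ for k, v in t.input_schema.items()},
            }
            for t in self._tools.values()
        ]

    def call_tool(self, name: str, arguments: dict[str, Any]) -> Any:
        spec = self._tools[name]
        # Reject arguments the tool never declared.
        unknown = set(arguments) - set(spec.input_schema)
        if unknown:
            raise ValueError(f"unknown arguments: {sorted(unknown)}")
        # Check every declared field is present and has the declared type.
        for field_name, expected in spec.input_schema.items():
            if field_name not in arguments:
                raise ValueError(f"missing argument: {field_name}")
            if not isinstance(arguments[field_name], expected):
                raise TypeError(f"{field_name} must be {expected.__name__}")
        return spec.handler(**arguments)


# Usage: the caller learns about the tool from the catalog, not from code.
server = ToolServer()
server.register(
    ToolSpec(
        name="get_order_status",
        description="Look up the status of one order.",
        input_schema={"order_id": str},
        handler=lambda order_id: {"order_id": order_id, "status": "shipped"},
    )
)
catalog = server.list_tools()
result = server.call_tool(catalog[0]["name"], {"order_id": "A-100"})
```

Because the catalog carries the schema, the caller can validate arguments before the call, and the hosting location of the handler stays an implementation detail.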

Azure AI Foundry supports MCP, enabling you to bring existing MCP servers directly into your agents. This gives you the benefits of open interoperability plus enterprise-grade security, observability, and management. Learn more about MCP at MCP Dev Days.

Once you have a standard for portability through open protocols like MCP, the next question becomes: what kinds of tools should your agents have, and how do you organize them so they can deliver value quickly while staying adaptable?

In Azure AI Foundry, we think of this as building an enterprise toolchain: a layered set of capabilities that balance speed (getting something useful working today), differentiation (capturing what makes your business unique), and reach (connecting across all the systems where work actually happens).

1. Built-in tools for rapid value: Azure AI Foundry includes ready-to-use tools for common enterprise needs: searching across SharePoint and data lakes, executing Python for data analysis, performing multi-step web research with Bing, and triggering browser automation tasks. These aren’t just conveniences; they let teams stand up useful, high-value agents in days instead of weeks, without the friction of early integration work.

2. Custom tools for your competitive edge: Every organization has proprietary systems and processes that can’t be replicated by off-the-shelf tools. Azure AI Foundry makes it straightforward to wrap these as agentic AI tools, whether they’re APIs from your ERP, a manufacturing quality control system, or a partner’s service. By invoking them through OpenAPI or MCP, these tools become portable and discoverable across teams, projects, and even clouds, while still benefiting from Foundry’s identity, policy, and observability layers.

3. Connectors for maximum reach: Through Azure Logic Apps, Foundry can connect agents to over 1,400 SaaS and on-premises systems, including CRM, ERP, ITSM, data warehouses, and more. This dramatically reduces integration lift, allowing you to plug into existing business processes without building every connector from scratch.
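As an illustration of the custom-tool layer, the sketch below describes a proprietary API with a minimal OpenAPI 3.0 document and then lists the operations an agent platform could expose as tools. The quality-control API, its path, and the `list_operations` helper are hypothetical; the exact steps for registering a spec in Azure AI Foundry are not shown here and should be taken from the product documentation.

```python
import json

# Minimal OpenAPI 3.0 description of a hypothetical internal API.
openapi_spec = {
    "openapi": "3.0.3",
    "info": {"title": "Quality Control API (hypothetical)", "version": "1.0.0"},
    "paths": {
        "/inspections/{batch_id}": {
            "get": {
                "operationId": "getInspectionResult",
                "summary": "Return the inspection result for one production batch.",
                "parameters": [
                    {
                        "name": "batch_id",
                        "in": "path",
                        "required": True,
                        "schema": {"type": "string"},
                    }
                ],
                "responses": {"200": {"description": "Inspection result"}},
            }
        }
    },
}

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def list_operations(spec: dict) -> list[dict]:
    """Flatten an OpenAPI document into one entry per operation."""
    operations = []
    for path, path_item in spec.get("paths", {}).items():
        for method, operation in path_item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            operations.append(
                {
                    "operation_id": operation["operationId"],
                    "method": method.upper(),
                    "path": path,
                    "summary": operation.get("summary", ""),
                }
            )
    return operations


operations = list_operations(openapi_spec)
print(json.dumps(operations, indent=2))
```

Giving each operation a stable `operationId` and a one-line `summary` is what lets the same spec be reused across teams without a hand-written wrapper per runtime.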

One example of this toolchain in action comes from NTT DATA, which built agents in Azure AI Foundry that integrate Microsoft Fabric Data Agent alongside other enterprise tools. These agents allow employees across HR, operations, and other functions to interact naturally with data, revealing real-time insights and enabling actions. This reduced time-to-market by 50% and gave non-technical users intuitive, self-service access to enterprise intelligence.

Extensibility must be paired with governance to move from prototype to enterprise-ready automation. Azure AI Foundry addresses this with a secure-by-default approach to tool management:

- Authentication and identity in built-in connectors: Enterprise-grade connectors, like SharePoint and Microsoft Fabric, already use on-behalf-of (OBO) authentication. When an agent invokes these tools, Foundry ensures that the call respects the end user’s permissions via managed Entra IDs, preserving existing authorization rules. With Microsoft Entra Agent ID, every agentic project created in Azure AI Foundry automatically appears in an agent-specific application view within the Microsoft Entra admin center. This provides security teams with a unified directory view of all agents and agent applications they need to manage across Microsoft. This integration marks the first step toward standardizing governance for AI agents company-wide. While Entra ID is native, Azure AI Foundry also supports integrations with external identity systems. Through federation, customers who use providers such as Okta or Google Identity can still authenticate agents and users to call tools securely.

- Custom tools with OpenAPI and MCP: OpenAPI-specified tools enable seamless connectivity using managed identities, API keys, or unauthenticated access. These tools can be registered directly in Foundry, and align with standard API design best practices. Foundry is also expanding MCP security to include stored credentials, project-level managed identities, and third-party OAuth flows, along with secure private networking, advancing toward a fully enterprise-grade, end-to-end MCP integration model.

- API governance with Azure API Management (APIM): APIM provides a powerful control plane for managing tool calls. It enables centralized publishing, policy enforcement (authentication, rate limits, payload validation), and monitoring. Additionally, you can deploy self-hosted gateways within VNets or on-prem environments to enforce enterprise policies close to backend systems. Complementing this, Azure API Center acts as a centralized, design-time API inventory and discovery hub, allowing teams to register, catalog, and manage private MCP servers alongside other APIs. These capabilities provide the same governance you expect for your APIs, extended to agentic AI tools without additional engineering.

- Observability and auditability: Every tool invocation in Foundry, whether internal or external, is traced with step-level logging. This includes identity, tool name, inputs, outputs, and outcomes, enabling continuous reliability monitoring and simplified auditing.
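The governance ideas in this list (identity-bound calls, centrally enforced policy, and step-level traces) can be sketched in a few lines of Python. This is not how Foundry or APIM is implemented; the `Gateway` class, its call-count quota (a simplification of real rate limiting, which is usually time-windowed), and the example identities are assumptions made for illustration.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable


class PolicyError(Exception):
    """Raised when a call is rejected before reaching the tool."""


@dataclass
class Gateway:
    """Hypothetical policy gate placed in front of every tool call."""
    allowed: dict[str, set[str]]   # caller identity -> tool names it may call
    max_calls_per_caller: int = 3  # simplified quota, not a time window
    trace: list[dict[str, Any]] = field(default_factory=list)
    _counts: dict[str, int] = field(default_factory=dict)

    def invoke(self, caller: str, tool_name: str,
               tool: Callable[..., Any], payload: dict[str, Any]) -> Any:
        # One trace record per invocation: identity, tool, inputs, outcome.
        record: dict[str, Any] = {
            "caller": caller,
            "tool": tool_name,
            "inputs": payload,
            "started_at": time.time(),
        }
        try:
            if tool_name not in self.allowed.get(caller, set()):
                raise PolicyError("caller not authorized for this tool")
            if self._counts.get(caller, 0) >= self.max_calls_per_caller:
                raise PolicyError("rate limit exceeded")
            self._counts[caller] = self._counts.get(caller, 0) + 1
            record["outputs"] = tool(**payload)
            record["outcome"] = "ok"
            return record["outputs"]
        except Exception as exc:
            record["outcome"] = f"error: {exc}"
            raise
        finally:
            # Rejected and failed calls are audited too.
            self.trace.append(record)


def lookup_ticket(ticket_id: str) -> dict[str, str]:
    return {"ticket_id": ticket_id, "state": "open"}


gateway = Gateway(allowed={"alice@contoso.example": {"lookup_ticket"}})
result = gateway.invoke(
    "alice@contoso.example", "lookup_ticket", lookup_ticket, {"ticket_id": "T-1"}
)

denied = False
try:
    gateway.invoke(
        "bob@contoso.example", "lookup_ticket", lookup_ticket, {"ticket_id": "T-1"}
    )
except PolicyError:
    denied = True
```

Keeping the checks in the gateway instead of in `lookup_ticket` means a policy change touches one place, and the trace covers denied calls as well as successful ones.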

Enterprise-grade management ensures tools are secure and observable, but success also depends on how you design and operate them from day one. Drawing on Azure AI Foundry guidance and customer experience, a few principles stand out:

- Start with the contract. Treat every tool like an API product. Define clear inputs, outputs, and error behaviors, and keep schemas consistent across teams. Avoid overloading a single tool with multiple unrelated actions; smaller, single-purpose tools are easier to test, monitor, and reuse.

- Choose the right packaging. For proprietary APIs, decide early whether OpenAPI or MCP best fits your needs. OpenAPI tools are straightforward for well-documented REST APIs, while MCP tools excel when portability and cross-environment reuse are priorities.

- Centralize governance. Publish custom tools behind Azure API Management or a self-hosted gateway so authentication, throttling, and payload inspection are enforced consistently. This keeps policy logic out of tool code and makes changes easier to roll out.

- Bind every action to identity. Always know which user or agent is invoking the tool. For built-in connectors, leverage identity passthrough or OBO. For custom tools, use Entra ID or the appropriate API key/credential model, and apply least-privilege access.

- Instrument early. Add tracing, logging, and evaluation hooks before moving to production. Early observability lets you track performance trends, detect regressions, and tune tools without downtime.
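The first principle, starting with the contract, might look like the following sketch: one single-purpose tool with a typed request, a typed response, and an explicit, enumerable set of error codes. The inventory tool, its data, and all names here are hypothetical and only illustrate the shape of such a contract.

```python
from dataclasses import dataclass
from enum import Enum


class ErrorCode(str, Enum):
    """The complete set of failures a caller must be ready to handle."""
    INVALID_INPUT = "invalid_input"
    NOT_FOUND = "not_found"


class ToolError(Exception):
    def __init__(self, code: ErrorCode, message: str) -> None:
        super().__init__(message)
        self.code = code


@dataclass(frozen=True)
class StockRequest:
    sku: str


@dataclass(frozen=True)
class StockResponse:
    sku: str
    units_available: int


# Hypothetical in-memory data standing in for a real inventory system.
_INVENTORY = {"SKU-1": 12}


def check_stock(request: StockRequest) -> StockResponse:
    """Single-purpose tool: report stock for one SKU, nothing else."""
    if not request.sku.strip():
        raise ToolError(ErrorCode.INVALID_INPUT, "sku must be non-empty")
    if request.sku not in _INVENTORY:
        raise ToolError(ErrorCode.NOT_FOUND, f"unknown sku: {request.sku}")
    return StockResponse(sku=request.sku, units_available=_INVENTORY[request.sku])


in_stock = check_stock(StockRequest(sku="SKU-1"))

try:
    check_stock(StockRequest(sku="SKU-404"))
    missing_code = None
except ToolError as err:
    missing_code = err.code
```

A tool shaped like this is straightforward to describe in either OpenAPI or MCP, because the inputs, outputs, and failure modes are already explicit.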

Following these practices ensures that the tools you integrate today remain secure, portable, and maintainable as your agent ecosystem grows.

What’s next

In part three of the Agent Factory series, we’ll focus on observability for AI agents: how to trace every step, evaluate tool performance, and monitor agent behavior in real time. We’ll cover the built-in capabilities in Azure AI Foundry, integration patterns with Azure Monitor, and best practices for turning telemetry into continuous improvement.

Did you miss the first post in the series? Check it out: The new era of agentic AI: common use cases and design patterns.