In today’s rapidly changing digital landscape, a growing number of businesses and environments rely on Azure to achieve their goals. To keep Azure running smoothly and securely, our teams stay proactive with regular maintenance and updates, so the platform keeps pace with evolving customer needs. Stability, reliability, and timely rolling updates remain top priorities.


Optimization problems in cloud computing

Optimization challenges are pervasive across the technology industry. Today’s software products must run smoothly across a wide range of environments, including websites, applications, and operating systems. Similarly, Azure must run well on a diverse set of servers and server configurations spanning multiple hardware models, virtual machine types, and operating systems across its production fleet. As time and computing resources become tighter and system complexity grows with added hardware, virtual machine types, and customers, finding an optimal solution becomes increasingly difficult. Problems of this kind are tackled with sophisticated algorithms that find a near-optimal solution using the available resources and time. Below, we look at the complexity involved in building a robust environment for software and hardware testing, and we present a new library designed to address these problems across a range of domains.

Environment design and combinatorial testing

When designing an experiment to evaluate a new treatment, it is crucial to enroll a diverse population of subjects so that adverse effects that disproportionately affect specific subgroups of patients can be detected. In the same way, in cloud computing we need an experimentation platform that reflects the diversity of Azure’s properties and can exercise every feasible configuration. To keep the test matrix from growing unmanageable in practice, we must prioritize the most important and highest-risk combinations. Just as a trial should avoid combining treatments that interact harmfully with one another, cloud properties have inherent constraints that must be respected. For example, a given hardware model might work only with virtual machine types 1 and 2, and not with types 3 and 4. Customers may also impose additional constraints that we need to account for in our environment.
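To make the idea of constraints concrete, here is a minimal Python sketch. Only the "hardware that supports VM types 1 and 2 but not 3 and 4" rule comes from the text; the property names, the other rule, and the helper `is_valid` are hypothetical. The sketch enumerates the full Cartesian product of properties and keeps only the combinations that satisfy every rule.

```python
from itertools import product

# Hypothetical property values; real fleets have many more of each.
HARDWARE = ["hw_x", "hw_y"]
VM_TYPES = [1, 2, 3, 4]
OS_IMAGES = ["os_a", "os_b"]

# Hypothetical rule mirroring the text: hw_x supports only VM types 1 and 2.
HW_ALLOWED_VMS = {"hw_x": {1, 2}, "hw_y": {1, 2, 3, 4}}

# Hypothetical customer-imposed rule: VM type 4 must not run os_a.
FORBIDDEN_VM_OS = {(4, "os_a")}


def is_valid(hw, vm, os_image):
    """Return True if a (hardware, VM type, OS image) triple respects every rule."""
    return vm in HW_ALLOWED_VMS[hw] and (vm, os_image) not in FORBIDDEN_VM_OS


all_combos = list(product(HARDWARE, VM_TYPES, OS_IMAGES))
valid_combos = [combo for combo in all_combos if is_valid(*combo)]

print(len(all_combos), "combinations in total,", len(valid_combos), "valid")
```

Even in this toy case the constraints prune 5 of 16 combinations; with dozens of values per property, the unconstrained product grows multiplicatively, which is why the test matrix must be pruned and prioritized.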

To cover all feasible combinations, we need a framework that identifies the required combinations while taking these constraints into account. Our AzQualify platform lets us test Azure’s internal programs through managed experimentation, validating changes before they are deployed. AzQualify uses a diverse range of configurations and configuration combinations to test and validate programs before they are released to production, so that potential issues are identified and resolved early.
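One widely used way to keep such a test matrix small is pairwise (2-way) combinatorial testing: choose a subset of valid configurations so that every compatible pair of property values appears in at least one test. The greedy sketch below is a generic illustration of that technique, not AzQualify’s algorithm; the property values and the single constraint are hypothetical.

```python
from itertools import combinations, product

# Hypothetical property dimensions.
DIMENSIONS = {
    "hardware": ["hw_x", "hw_y", "hw_z"],
    "vm_type": ["vm1", "vm2", "vm3"],
    "os_image": ["os_a", "os_b"],
}


def is_valid(config):
    # Hypothetical constraint: hw_x does not support vm3.
    return not (config["hardware"] == "hw_x" and config["vm_type"] == "vm3")


def pairs_of(config):
    """All 2-way ((dimension, value), (dimension, value)) pairs in one configuration."""
    items = sorted(config.items())
    return {frozenset(pair) for pair in combinations(items, 2)}


def greedy_pairwise(dimensions):
    names = list(dimensions)
    candidates = [dict(zip(names, values)) for values in product(*dimensions.values())]
    candidates = [c for c in candidates if is_valid(c)]
    # Only pairs that occur in some valid configuration can ever be covered.
    uncovered = set().union(*(pairs_of(c) for c in candidates))
    suite = []
    while uncovered:
        # Pick the valid configuration covering the most still-uncovered pairs.
        best = max(candidates, key=lambda c: len(pairs_of(c) & uncovered))
        suite.append(best)
        uncovered -= pairs_of(best)
    return suite, candidates


suite, candidates = greedy_pairwise(DIMENSIONS)
print(f"{len(suite)} tests cover every valid pair (vs {len(candidates)} valid configs)")
```

Greedy selection does not guarantee the smallest possible suite (finding one is itself a hard problem), but it usually gets close while respecting the constraints.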

Ideally, we would test a new treatment exhaustively, gathering data on every prospective patient and their response in every scenario, but there is not enough time or resources to make this feasible. Cloud computing presents the same kind of constrained optimization problem, and that problem is NP-hard.

NP-hard problems

An NP-hard problem is difficult to solve efficiently: not only is an optimal solution hard to find, but even checking whether a proposed solution is optimal can be just as hard. Imagine a new treatment that may help with several conditions. Evaluating it requires a complex network of trials across diverse patient populations, settings, and scenarios. The outcome of each trial is often intertwined with the others, so running and verifying them is arduous because all of these interconnected outcomes must be weighed together. Determining whether this treatment is truly the best one available is extremely difficult. In computer science, it is unknown whether NP-hard problems can be solved optimally in polynomial time, and it is widely believed that they cannot (this is the P versus NP question).

One notable NP-hard problem we consider in AzQualify is allocating virtual machines across hardware to balance load. This involves placing customer virtual machines on physical hosts in a way that optimizes resource allocation, reduces latency, and prevents any single host from being overloaded. To visualize the best approach, we use a property graph to model and solve the complex relationships within interconnected data.
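Because exact load balancing is NP-hard, practical systems typically rely on heuristics. As a generic illustration (not a description of how Azure places VMs), the sketch below uses the classic greedy rule of placing the largest VM first on the currently least-loaded host (longest-processing-time-first scheduling), with a per-host capacity check. The function `place_vms`, the VM sizes, the host count, and the capacity are hypothetical.

```python
import heapq


def place_vms(vm_sizes, num_hosts, capacity):
    """Greedily place VMs, largest first, on the currently least-loaded host.

    Returns a dict mapping host index -> list of (vm_id, size).
    Raises ValueError if a VM does not fit on the least-loaded host.
    """
    # Min-heap of (current_load, host_index), so the lightest host pops first.
    hosts = [(0, h) for h in range(num_hosts)]
    heapq.heapify(hosts)
    placement = {h: [] for h in range(num_hosts)}

    # Visit VMs from largest to smallest (the LPT heuristic).
    for vm_id, size in sorted(enumerate(vm_sizes), key=lambda item: -item[1]):
        load, host = heapq.heappop(hosts)
        if load + size > capacity:
            # If the least-loaded host cannot fit this VM, no host can.
            raise ValueError(f"VM {vm_id} (size {size}) does not fit on any host")
        placement[host].append((vm_id, size))
        heapq.heappush(hosts, (load + size, host))
    return placement


# Hypothetical VM sizes in vCPUs; 3 hosts with 16 vCPUs each.
placement = place_vms([8, 4, 4, 6, 2, 10, 3], num_hosts=3, capacity=16)
for host, vms in placement.items():
    print(host, vms, "load =", sum(size for _, size in vms))
```

This heuristic runs in O(n log n) time and is known to stay within a constant factor of the optimal maximum load, which is the usual trade-off for NP-hard problems: a fast, provably good answer instead of a slow, perfect one.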

Property graph

A property graph is a data structure commonly used in graph databases to model complex relationships between entities.

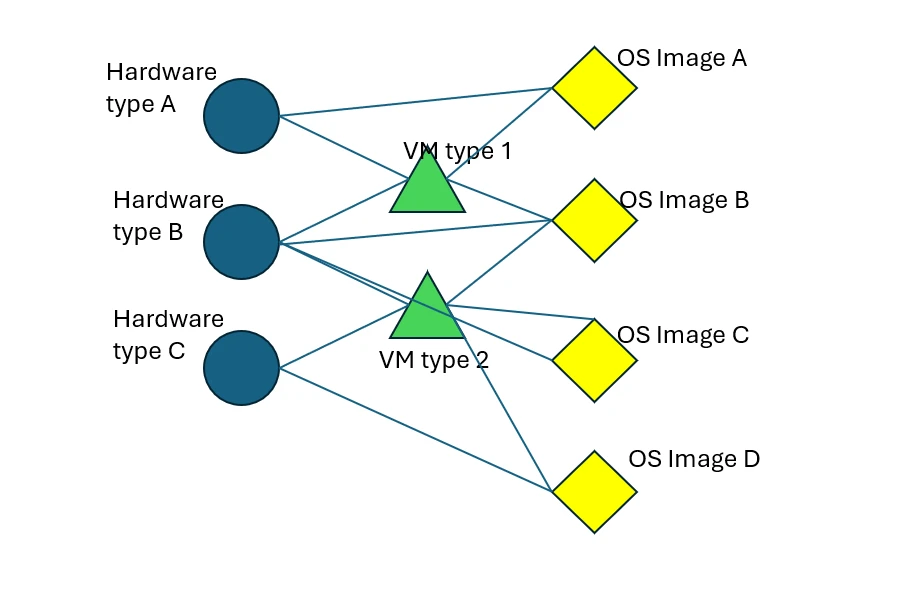

We can represent different types of properties as vertices and use edges to represent compatibility relationships between them. Each property is a vertex in the graph, and two properties share an edge if they are compatible. This model is especially useful for visualizing constraints. By expressing constraints this way, we can apply existing graph concepts and algorithms to new optimization problems.
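A property graph like this can be stored as an adjacency structure in which each vertex also records its property type. The Python sketch below shows one minimal way to do that; the class `PropertyGraph` and its method names are hypothetical, and the edges are illustrative rather than read from the figure.

```python
from collections import defaultdict


class PropertyGraph:
    """Minimal property graph: typed vertices plus undirected compatibility edges."""

    def __init__(self):
        self.kind = {}               # vertex name -> property type
        self.adj = defaultdict(set)  # vertex name -> set of compatible vertices

    def add_property(self, name, kind):
        self.kind[name] = kind

    def add_compatibility(self, u, v):
        if u not in self.kind or v not in self.kind:
            raise KeyError("add both properties before linking them")
        # Compatibility is symmetric, so store the edge in both directions.
        self.adj[u].add(v)
        self.adj[v].add(u)

    def compatible(self, u, v):
        return v in self.adj[u]

    def neighbors_of_kind(self, u, kind):
        """Compatible properties of one type, e.g. VM types that run on hardware u."""
        return sorted(v for v in self.adj[u] if self.kind[v] == kind)


g = PropertyGraph()
for name in "AB":
    g.add_property(name, "hardware")
for name in "DE":
    g.add_property(name, "vm_type")
g.add_compatibility("A", "D")  # hypothetical edge
g.add_compatibility("B", "D")  # hypothetical edge
g.add_compatibility("B", "E")  # hypothetical edge

print(g.neighbors_of_kind("B", "vm_type"))  # ['D', 'E']
print(g.compatible("A", "E"))               # False
```

Keeping the property type on each vertex lets queries such as "which VM types run on this hardware" stay simple set lookups, which is what makes graph algorithms easy to apply to the constraints.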

The example property graph above contains three types of properties: hardware models, virtual machine types, and operating system images. Vertices represent individual properties: hardware models (blue circles A, B, and C), virtual machine types (green triangles D and E), and operating system images (yellow diamonds F, G, H, and I). Edges (black lines between vertices) represent compatibility. Vertices connected by an edge are properties that are compatible with each other, such as a hardware model and a virtual machine type that can run on it.

Azure’s infrastructure consists of nodes located in datacenters across geographically dispersed regions. Azure customers use virtual machines that run on these nodes. A single node can host multiple virtual machines at once; each is allocated a share of the node’s compute, memory, or storage resources and runs independently of the other virtual machines (VMs) on the node. For a hardware model, VM type, and operating system image to run together on the same node, all three must be compatible with one another, meaning each pair must be connected by an edge in the graph. As a result, valid node configurations correspond to cliques in the graph that contain exactly one hardware model, one VM type, and one operating system image.
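Because a valid node configuration is a triangle containing one vertex of each type, the valid configurations can be found by checking every (hardware, VM type, OS image) triple for all three pairwise edges. The sketch below reuses the vertex names from the figure, but its edge list is hypothetical, since the figure’s exact edges are not reproduced in the text.

```python
from itertools import product

# Vertex names follow the figure; the edges themselves are hypothetical.
HARDWARE = ["A", "B", "C"]
VM_TYPES = ["D", "E"]
OS_IMAGES = ["F", "G", "H", "I"]
EDGES = {
    ("A", "D"), ("B", "D"), ("B", "E"), ("C", "E"),  # hardware - VM type
    ("D", "F"), ("D", "G"), ("E", "H"), ("E", "I"),  # VM type - OS image
    ("A", "F"), ("B", "G"), ("B", "H"), ("C", "I"),  # hardware - OS image
}


def linked(u, v):
    # Compatibility is symmetric, so check both orientations of the edge.
    return (u, v) in EDGES or (v, u) in EDGES


def valid_node_configs():
    """Return every 3-clique with one hardware model, one VM type, and one OS image."""
    return [
        (hw, vm, os_image)
        for hw, vm, os_image in product(HARDWARE, VM_TYPES, OS_IMAGES)
        if linked(hw, vm) and linked(vm, os_image) and linked(hw, os_image)
    ]


for config in valid_node_configs():
    print(config)
```

With these edges, (A, D, F), (B, D, G), (B, E, H), and (C, E, I) are the only valid node configurations. A triple such as (A, D, G) is rejected even though A-D and D-G are edges, because A and G are not directly compatible; pairwise compatibility along a path is not enough.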

One example of an environment design problem we face in AzQualify is accounting for all hardware models, VM types, and operating system images within a single graph.

Suppose we want to configure our experiment so that hardware model A makes up 40% of the machines, VM type D runs on 50% of the machines, and OS image F is installed on 10% of all VMs in the experiment. We must also use exactly twenty machines. Allocating hardware models, VM types, and operating system images across the machines so that every compatibility constraint is satisfied and the result comes as close as possible to all of these targets is an example of a problem for which no efficient algorithm is known.
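For this example, the targets work out to 8 machines with hardware model A (40% of 20), 10 machines running VM type D (50% of 20, reading the percentage as a share of all twenty machines), and 2 VMs with OS image F (10% of 20, assuming one VM per machine). The sketch below brute-forces this tiny instance. The five valid configurations are hypothetical, and the objective (total absolute deviation from the targets) is an assumption, not necessarily the one AzQualify uses.

```python
from math import comb

# Hypothetical valid node configurations: (hardware model, VM type, OS image).
CONFIGS = [("A", "D", "F"), ("A", "D", "G"), ("B", "D", "G"), ("B", "E", "H"), ("C", "E", "I")]
NUM_MACHINES = 20
# Targets from the text, assuming one VM per machine: 40% -> 8, 50% -> 10, 10% -> 2.
TARGETS = {
    "A": NUM_MACHINES * 40 // 100,
    "D": NUM_MACHINES * 50 // 100,
    "F": NUM_MACHINES * 10 // 100,
}


def compositions(total, parts):
    """Yield every tuple of `parts` non-negative ints that sums to `total`."""
    if parts == 1:
        yield (total,)
        return
    for first in range(total + 1):
        for rest in compositions(total - first, parts - 1):
            yield (first,) + rest


def deviation(counts):
    """Total absolute gap between achieved and target machine counts."""
    achieved = {prop: 0 for prop in TARGETS}
    for n, config in zip(counts, CONFIGS):
        for prop in config:
            if prop in achieved:
                achieved[prop] += n
    return sum(abs(achieved[p] - TARGETS[p]) for p in TARGETS)


# Exhaustive search: try every way to split 20 machines across the configurations.
best = min(compositions(NUM_MACHINES, len(CONFIGS)), key=deviation)
print("candidate allocations:", comb(NUM_MACHINES + len(CONFIGS) - 1, len(CONFIGS) - 1))
print("best counts:", dict(zip(CONFIGS, best)), "deviation:", deviation(best))
```

Exhaustive search works here only because the instance is tiny: the number of candidate allocations, C(n + k - 1, k - 1) for n machines and k valid configurations, grows combinatorially, and real fleets add many more properties and targets. That growth is why heuristic and approximate algorithms are needed at scale.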

Library of optimization algorithms

Drawing on what we learned from solving these notoriously hard problems, we have built a flexible library of optimization code that is now available for others to use. Although Python and R libraries exist for the algorithms we use, their limitations make them unsuitable for complex NP-hard combinatorial problems like these. In Azure, we use this library to solve a variety of dynamic environment design problems and to build scalable solutions to combinatorial optimization problems across domains; it is designed with extensibility and flexibility in mind. Our environment design system, built on this library, has significantly increased the property coverage of our testing, allowing us to catch 5 to 10 regressions per month. Catching these regressions lets us improve Azure’s internal programs while changes are still in pre-production, reducing the risk of platform instability and a poor customer experience once the changes are broadly deployed.

The optimization library provides a range of functions for solving mathematical optimization problems efficiently. It is designed to simplify solving these problems by providing prebuilt functions that handle the underlying complexity.

Optimizing systems is crucial for companies seeking to reduce costs and improve efficiency and reliability. Explore our library to tackle complex computational problems and find solutions to NP-hard problems. If you are new to optimization or NP-hard problems, the README.md file in the library is a good starting point for learning how to use its algorithms. As cloud computing evolves, we continue to refine existing algorithms and to add new ones tailored to specific classes of NP-hard problems.

By addressing these challenges, organizations can improve resource utilization, enhance the customer experience, and stay competitive in a rapidly evolving digital landscape. Investing in cloud optimization is not merely a cost-cutting exercise; it is an essential foundation for achieving long-term business objectives.