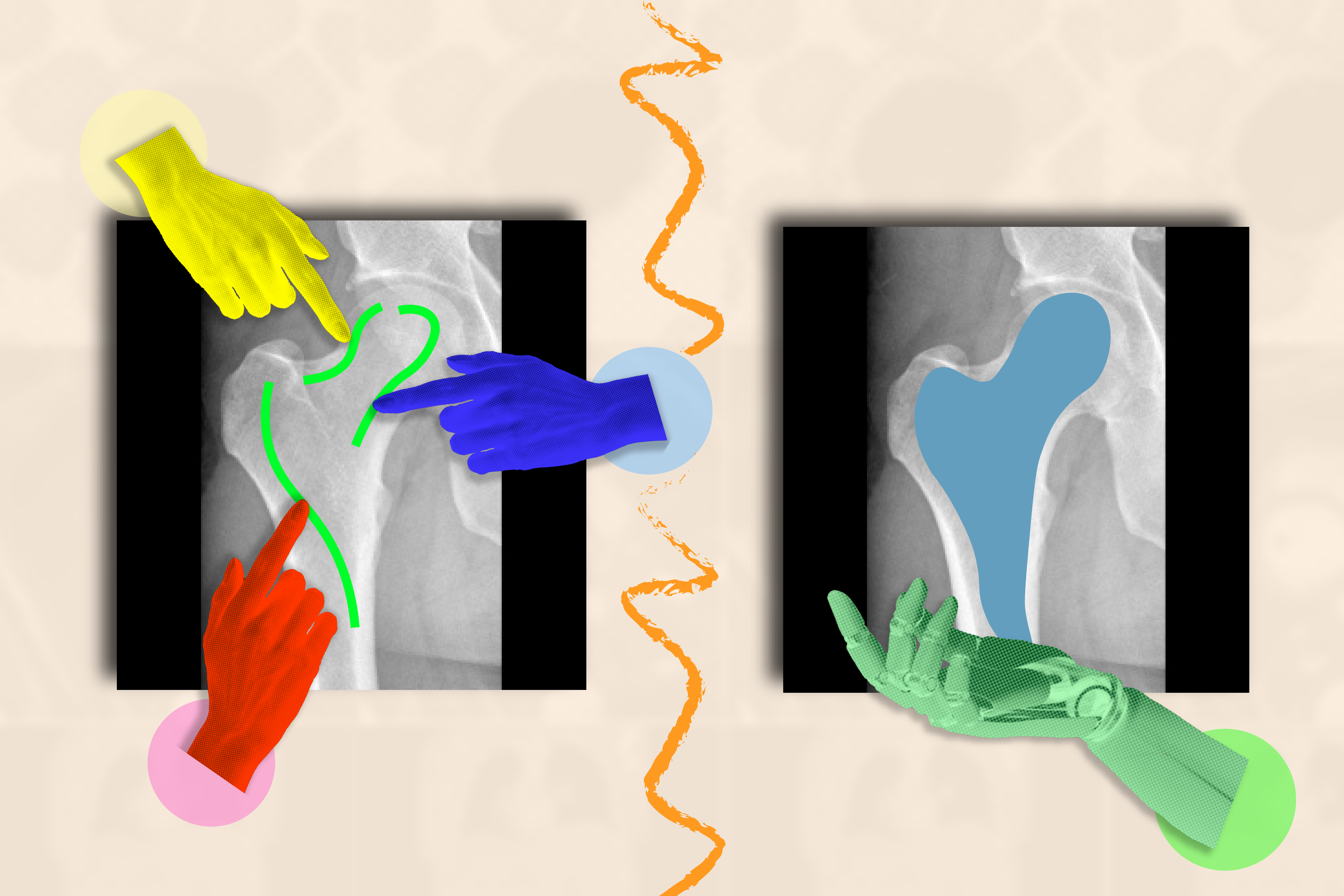

To the untrained eye, a medical image like an MRI or X-ray looks like a murky collection of gray shapes. It can be hard to tell where one structure, such as a tumor, ends and another begins.

Once trained to recognize the boundaries of biological structures, AI systems can segment (delineate) regions of interest that doctors and biomedical researchers want to monitor for disease and other abnormalities. Instead of spending hours tracing anatomy by hand across many images, clinicians and researchers could hand that task to an AI assistant and focus on more complex work.

The catch? Before an AI system can segment images accurately, researchers and clinicians must label a huge number of them. To train a supervised model, for example, you would need to annotate the cerebral cortex in numerous MRI scans so the model can learn how the cortex’s shape varies across different brains.

Researchers at MIT’s CSAIL, MGH, and Harvard Medical School have developed ScribblePrompt, an interactive framework that can rapidly segment medical images, including types it has not seen before.

To simplify image annotation, the team simulated how users would annotate more than 50,000 scans, including MRIs, ultrasounds, and photographs, across structures in the eyes, cells, brain, bones, skin, and more. To label all those scans, they used algorithms to simulate how people scribble and click on different regions of medical images. In addition to commonly labeled regions, the team used superpixel algorithms, which find parts of an image with similar pixel values, to identify potential new regions of interest for medical researchers, and trained ScribblePrompt to segment them. This synthetic data prepared ScribblePrompt to handle real-world segmentation requests from users.
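The article does not describe the team's simulation procedure in detail. As a rough illustration only, the sketch below assumes a binary ground-truth mask and simulates a positive click (a random foreground pixel) and a scribble (a short random walk that stays inside the mask). The names `simulate_click` and `simulate_scribble` are hypothetical, and this is not ScribblePrompt's actual simulator.

```python
import random

# Hypothetical helpers: a toy illustration, not ScribblePrompt's simulator.

def simulate_click(mask, rng):
    """Return one (row, col) pixel sampled uniformly from the mask's foreground."""
    inside = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not inside:
        raise ValueError("mask has no foreground pixels")
    return rng.choice(inside)


def simulate_scribble(mask, rng, steps=20):
    """Random walk that starts at a simulated click and only steps to
    4-connected neighbours that are also inside the mask."""
    height, width = len(mask), len(mask[0])
    r, c = simulate_click(mask, rng)
    path = [(r, c)]
    for _ in range(steps):
        neighbours = [
            (r + dr, c + dc)
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))
            if 0 <= r + dr < height and 0 <= c + dc < width and mask[r + dr][c + dc]
        ]
        if not neighbours:  # isolated single-pixel region: stop early
            break
        r, c = rng.choice(neighbours)
        path.append((r, c))
    return path


# Toy 4 x 5 mask with a 2 x 3 foreground block (think: one structure in a scan).
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
rng = random.Random(0)  # fixed seed so runs are reproducible
click = simulate_click(mask, rng)
scribble = simulate_scribble(mask, rng, steps=10)
print(click, scribble)
```

Keeping the walk inside the mask means every simulated scribble is a valid positive input for that structure; a real simulator would likely also vary stroke length, thickness, and shape.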

“AI has significant potential in analyzing images and other high-dimensional data to help humans work more productively,” says MIT PhD student Hallee Wong SM ’22, lead author of a new paper about ScribblePrompt and a CSAIL affiliate. “We want to augment, not replace, the efforts of medical workers through an interactive system.” ScribblePrompt is designed to be simple and efficient, so doctors can focus on the more interesting parts of their analysis. It is also faster and more accurate than comparable interactive segmentation methods: for example, it reduced annotation time by 28% compared with Meta’s Segment Anything Model (SAM) framework.

ScribblePrompt’s interface is simple: users can scribble across the rough area they want segmented, or click on it, and the tool highlights the entire structure or background as requested. In a retinal (eye) scan, for example, you can click on individual veins. ScribblePrompt can also segment a structure given a bounding box.

The tool can then correct its output based on the user’s feedback. To highlight a kidney in an ultrasound, for instance, you could draw a bounding box and then scribble over any parts of the structure whose edges ScribblePrompt missed. To refine a segment, you can draw a “negative scribble” over regions that should be excluded.
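An interactive model needs these clicks, scribbles, and boxes in a form a network can consume. The minimal sketch below assumes, without confirmation from the article, that each kind of interaction is rasterized into its own image-sized binary channel: positive inputs in one, negative scribbles in another, and the bounding box in a third. `encode_prompts` is a hypothetical helper, and the kidney-style coordinates are made up.

```python
def encode_prompts(height, width, positive=(), negative=(), box=None):
    """Rasterize user interactions into three height x width binary channels.

    positive: (row, col) pixels from clicks or scribbles on the target
    negative: (row, col) pixels from "negative" scribbles to exclude
    box: optional (top, left, bottom, right) bounding box, inclusive
    """
    def blank():
        return [[0] * width for _ in range(height)]

    pos, neg, box_channel = blank(), blank(), blank()
    for r, c in positive:
        pos[r][c] = 1
    for r, c in negative:
        neg[r][c] = 1
    if box is not None:
        top, left, bottom, right = box
        for r in range(top, bottom + 1):
            for c in range(left, right + 1):
                box_channel[r][c] = 1
    return pos, neg, box_channel


# Kidney-style workflow with made-up coordinates on a 4 x 5 grid:
# 1) draw a bounding box, 2) scribble over a missed edge, 3) exclude a region.
pos, neg, box_channel = encode_prompts(
    4, 5,
    positive=[(1, 1), (2, 1)],
    negative=[(2, 3)],
    box=(1, 1, 2, 3),
)
# A model would receive these channels alongside the image (and, in many
# interactive designs, its previous prediction) and output an updated mask.
```

Keeping positive and negative inputs in separate channels lets a network learn that the same stroke means "include" in one channel and "exclude" in the other.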

These self-correcting, interactive capabilities made ScribblePrompt the preferred tool among neuroimaging researchers at Massachusetts General Hospital in a user study. For improving segments in response to scribble corrections, 93.8% of users preferred the MIT method over the SAM baseline; for click-based edits, 87.5% of the medical researchers preferred ScribblePrompt.

ScribblePrompt was trained on simulated scribbles and clicks on 54,000 images from 65 datasets, featuring scans of the eyes, thorax, spine, cells, skin, abdominal muscles, neck, brain, bones, teeth, and lesions. The model learned from a diverse range of medical image types, including microscopy, computed tomography (CT), X-ray, magnetic resonance imaging (MRI), ultrasound, and photographs.

Many existing methods respond poorly when users scribble across images, because such interactions are hard to simulate during training. “For ScribblePrompt, we were able to force our model to pay attention to different inputs using our synthetic segmentation tasks,” says Wong. “We wanted to train what is essentially a foundation model on a lot of diverse data so that it would generalize to new types of images and tasks.”
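The synthetic segmentation tasks described earlier rely on superpixel algorithms. As a deliberately simplified stand-in (not a real superpixel method such as SLIC, and not the team's code), the sketch below flood-fills 4-connected pixels whose values lie within a tolerance of a seed pixel, then picks one resulting region at random as a synthetic target mask. The names `similar_regions` and `synthetic_task` and the tolerance parameter `tol` are hypothetical.

```python
import random
from collections import deque


def similar_regions(image, tol):
    """Label each pixel with a region id by flood-filling 4-connected pixels
    whose value is within `tol` of the region's seed pixel.
    Returns (labels, number_of_regions)."""
    height, width = len(image), len(image[0])
    labels = [[-1] * width for _ in range(height)]
    next_id = 0
    for sr in range(height):
        for sc in range(width):
            if labels[sr][sc] != -1:
                continue  # already assigned to an earlier region
            seed = image[sr][sc]
            labels[sr][sc] = next_id
            queue = deque([(sr, sc)])
            while queue:
                r, c = queue.popleft()
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if (0 <= nr < height and 0 <= nc < width
                            and labels[nr][nc] == -1
                            and abs(image[nr][nc] - seed) <= tol):
                        labels[nr][nc] = next_id
                        queue.append((nr, nc))
            next_id += 1
    return labels, next_id


def synthetic_task(image, tol, rng):
    """Pick one region at random and return it as a binary target mask."""
    labels, n = similar_regions(image, tol)
    target = rng.randrange(n)
    return [[1 if lab == target else 0 for lab in row] for row in labels]


# Toy grayscale image with three visually distinct areas.
image = [
    [10, 10, 200, 200],
    [10, 12, 205, 200],
    [90, 90, 90, 90],
]
labels, n = similar_regions(image, tol=15)
target_mask = synthetic_task(image, tol=15, rng=random.Random(0))
print(n, labels, target_mask)
```

Because the target regions come from image statistics rather than human labels, a generator like this can produce many extra segmentation tasks per image, which can then be paired with simulated clicks and scribbles.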

After training on this large body of data, the team evaluated ScribblePrompt on 12 new datasets. Although the model had not seen these images before, it outperformed four existing methods, segmenting more efficiently and predicting more accurately the exact regions users wanted highlighted.

Segmentation is the most prevalent biomedical image analysis task, performed widely in both routine clinical practice and research, which makes it both highly diverse and a crucial, high-impact step. “ScribblePrompt was designed to be practically useful to clinicians and researchers, making this step much faster.”

“The majority of segmentation algorithms that have been developed in image analysis and machine learning rely on our ability to manually annotate images,” says Bruce Fischl, a Harvard Medical School professor in radiology and a neuroscientist at Massachusetts General Hospital. The problem is far worse in medical imaging, he notes, because the “images” are typically 3D volumes, and humans have no evolutionary or phenomenological basis for any competency in annotating them. ScribblePrompt makes manual annotation much faster and more accurate by training a network on precisely the kinds of interactions a human would have with an image while annotating it by hand.

The result is an intuitive interface that lets annotators interact naturally with imaging data, with far greater productivity than was previously possible.

Wong and Dalca wrote the paper with two other CSAIL affiliates: John Guttag, the Dugald C. Jackson Professor of Electrical Engineering and Computer Science and a CSAIL principal investigator, and MIT PhD student Marianne Rakic SM ’22. Their work was supported in part by Quanta Computer Inc., the Eric and Wendy Schmidt Center at the Broad Institute, Wistron Corp., and the National Institute of Biomedical Imaging and Bioengineering of the National Institutes of Health, with hardware support from the Massachusetts Life Sciences Center.

Wong and her colleagues will present their work at the 2024 European Conference on Computer Vision (ECCV). They previously gave an oral presentation at the DCAMI workshop at the Conference on Computer Vision and Pattern Recognition (CVPR), where ScribblePrompt received an award recognizing its potential impact.