Every time it involves coaching mannequin, firms often guess of feeding it increasingly more knowledge for coaching.

Greater datasets = smarter fashions

When DeepSeek launched initially, it challenged this strategy and set new definitions for mannequin coaching. And after that got here a wave of mannequin coaching with much less knowledge and optimized strategy. I got here throughout one such analysis paper: LIMI: Much less Is Extra for Clever Company and it actually bought me hooked. It discusses the way you don’t want hundreds of examples to construct a strong AI. In truth, simply 78 fastidiously chosen coaching samples are sufficient to outperform fashions educated on 10,000.

How? By specializing in high quality over amount. As an alternative of flooding the mannequin with repetitive or shallow examples, LIMI makes use of wealthy, real-world situations from software program growth and scientific analysis. Every pattern captures the total arc of problem-solving: planning, software use, debugging, and collaboration.

The outcome? A mannequin that doesn’t simply “know” issues: it does issues. And it does them higher, sooner, and with far much less knowledge.

This text explains how LIMI works!

Key Takeaways

- Company is outlined because the capability of AI programs to behave autonomously, fixing issues by means of self-directed interplay with instruments and environments.

- The LIMI strategy makes use of solely 78 high-quality, strategically designed coaching samples centered on collaborative software program growth and scientific analysis.

- On the AgencyBench analysis suite, LIMI achieves 73.5% efficiency, far surpassing main fashions like GLM-4.5 (45.1%), Kimi-K2 (24.1%), and DeepSeek-V3.1 (11.9%).

- LIMI reveals a 53.7% enchancment over fashions educated on 10,000 samples, utilizing 128 instances much less knowledge.

- The research introduces the Company Effectivity Precept: machine autonomy emerges not from knowledge quantity however from the strategic curation of high-quality agentic demonstrations.

- Outcomes generalize throughout coding, software use, and scientific reasoning benchmarks, confirming that the “much less is extra” paradigm applies broadly to agentic AI.

What’s Company?

The paper defines Company as an emergent functionality the place AI programs perform as autonomous brokers. These brokers don’t look forward to step-by-step directions. As an alternative, they:

- Actively uncover issues

- Formulate hypotheses

- Execute multi-step options

- Work together with environments and instruments

This contrasts sharply with conventional language fashions that generate responses however can’t act. Actual-world purposes like debugging code, managing analysis workflows, or working microservices, require this type of proactive intelligence.

The shift from “considering AI” to “working AI” is pushed by trade wants. Firms now search programs that may full duties end-to-end, not simply reply questions.

Why Much less Information Can Be Extra Efficient?

For over a decade, AI progress has adopted one rule: scale up. Greater fashions. Extra tokens. Bigger datasets. And it labored: for language understanding. Nonetheless, current work in different domains suggests in any other case:

- LIMO (2025) demonstrated that complicated mathematical reasoning improves by 45.8% utilizing solely 817 curated samples.

- LIMA (2023) confirmed that mannequin alignment could be achieved with simply 1,000 high-quality examples.

However company is totally different. You possibly can’t study to construct by studying tens of millions of code snippets. You study by doing. And doing properly requires dense, high-fidelity examples: not simply quantity.

Consider it like studying to cook dinner. Watching 10,000 cooking movies would possibly train you vocabulary. However one hands-on session with a chef, the place you chop, season, style, and modify, teaches you tips on how to cook dinner.

LIMI applies this concept to AI coaching. As an alternative of accumulating limitless logs of software calls, it curates 78 full “cooking periods,” each a whole, profitable collaboration between human and AI on a posh process.

The outcome? The mannequin learns the essence of company: tips on how to plan, adapt, and ship.

The LIMI Method: Three Core Improvements

LIMI’s success rests on three methodological pillars:

Agentic Question Synthesis

Queries aren’t generic prompts. They simulate actual collaborative duties in software program growth (“vibe coding”) and scientific analysis. The group collected:

- 60 real-world queries from skilled builders and researchers.

- 18 artificial queries generated from GitHub Pull Requests utilizing GPT-5, making certain authenticity and technical depth.

Trajectory Assortment Protocol

For every question, the group recorded full interplay trajectories, multi-turn sequences that embody:

- Mannequin reasoning steps

- Device calls (e.g., file edits, API requests)

- Environmental suggestions (e.g., error messages, person clarifications)

These trajectories common 42,400 tokens, with some exceeding 150,000 tokens, capturing the total complexity of collaborative problem-solving.

Deal with Excessive-Affect Domains

All 78 coaching samples come from two domains that symbolize the majority of information work:

- Vibe Coding: Collaborative software program growth with iterative debugging, testing, and gear use.

- Analysis Workflows: Literature search, knowledge evaluation, experiment design, and report technology.

This focus ensures that each coaching instance is dense with agentic alerts.

Dataset Building: From GitHub to Human-AI Collaboration

The LIMI dataset was constructed by means of a meticulous pipeline:

Step 1: Question Pool Creation

Actual queries got here from precise developer and researcher workflows. Artificial queries have been derived from 100 high-star GitHub repositories, filtered for significant code modifications (excluding documentation-only PRs).

Step 2: High quality Management

4 PhD-level annotators reviewed all queries for semantic alignment with actual duties. Solely the very best 78 have been chosen.

Step 3: Trajectory Technology

Utilizing the SII CLI surroundings, a tool-rich interface supporting code execution, file system entry, and net search: human annotators collaborated with GPT-5 to finish every process. Each profitable trajectory was logged in full.

The result’s a compact however extraordinarily wealthy dataset the place every pattern encapsulates hours of sensible problem-solving.

Analysis: AgencyBench and Extra

To check LIMI’s capabilities, the group used AgencyBench, a brand new benchmark with 10 complicated, real-world duties:

- Vibe Coding Duties (4):

- C++ chat system with login, associates, concurrency

- Java to-do app with search and multi-user sync

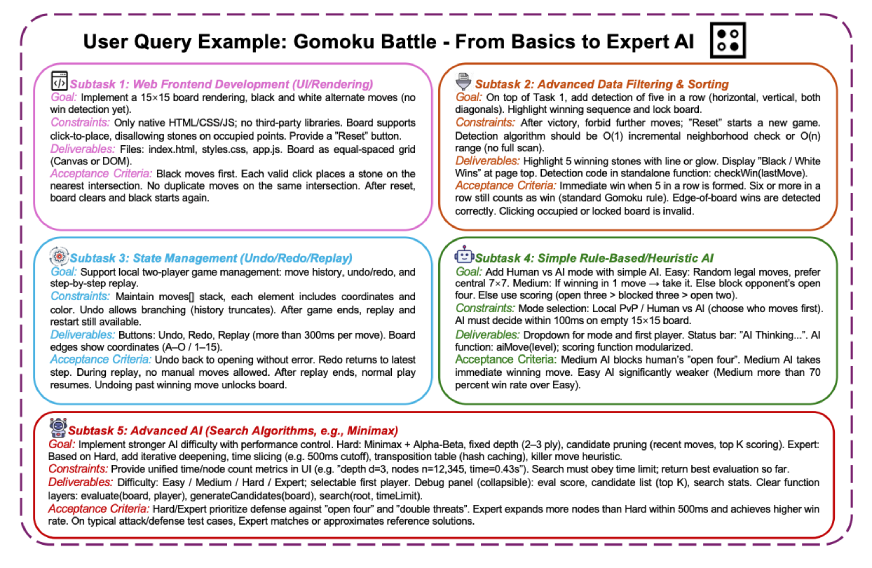

- Net-based Gomoku recreation with AI opponents

- Self-repairing microservice pipeline

- Analysis Workflow Duties (6):

- Evaluating LLM efficiency on DynToM dataset

- Statistical evaluation of reasoning vs. direct fashions

- Dataset discovery on Hugging Face

- Scientific perform becoming to excessive precision

- Complicated NBA participant commerce reasoning

- S&P 500 firm evaluation utilizing monetary knowledge

Every process has a number of subtasks, requiring planning, software use, and iterative refinement.

Along with AgencyBench, LIMI was examined on generalization benchmarks:

- SciCode (scientific computing)

- TAU2-bench (software use)

- EvalPlus-HumanEval/MBPP (code technology)

- DS-1000 (knowledge science)

Experimental Outcomes

LIMI was carried out by fine-tuning GLM-4.5 (355B parameters) on the 78-sample dataset. It was in contrast in opposition to:

- Baseline fashions: GLM-4.5, Kimi-K2, DeepSeek-V3.1, Qwen3

- Information-rich variants: Fashions educated on CC-Bench (260 samples), AFM-WebAgent (7,610), and AFM-CodeAgent (10,000)

On AgencyBench, LIMI scored 73.5%, far forward of all rivals:

- First-Flip Practical Completeness: 71.7% vs. 37.8% (GLM-4.5)

- Success Price (inside 3 rounds): 74.6% vs. 47.4%

- Effectivity (unused rounds): 74.2% vs. 50.0%

Much more putting: LIMI outperformed the ten,000-sample mannequin by 53.7% absolute factors, utilizing 128 instances fewer samples.

On generalization benchmarks, LIMI averaged 57.2%, beating all baselines and data-rich variants. It achieved prime scores on coding (92.1% on HumanEval) and aggressive outcomes on software use (45.6% on TAU2-retail).

Case Research: Actual-World Efficiency

The paper consists of detailed case comparisons:

- Gomoku Recreation (Process 3):

GLM-4.5 failed at board rendering, win detection, and AI logic. LIMI accomplished all subtasks with minimal intervention.

- Dataset Discovery (Process 7):

GLM-4.5 retrieved much less related datasets. LIMI’s selections higher matched question necessities (e.g., philosophy of AI consciousness, Danish hate speech classification). - Scientific Operate Becoming (Process 8):

GLM-4.5 reached loss = 1.14e-6 after a number of prompts. LIMI achieved 5.95e-7 on the primary attempt. - NBA Reasoning (Process 9):

GLM-4.5 usually failed or required most prompts. LIMI solved most subtasks with zero or one trace, utilizing fewer tokens and fewer time.

These examples illustrate LIMI’s superior reasoning, software use, and flexibility.

Additionally Learn: Make Mannequin Coaching and Testing Simpler with MultiTrain

Remaining Verdict

LIMI establishes the Company Effectivity Precept:

Machine autonomy emerges not from knowledge abundance however from strategic curation of high-quality agentic demonstrations.

This challenges the trade’s reliance on large knowledge pipelines. As an alternative, it means that:

- Understanding the essence of company is extra vital than scaling knowledge

- Small, expert-designed datasets can yield state-of-the-art efficiency

- Sustainable AI growth is feasible with out monumental compute or knowledge prices

For practitioners, this implies investing in process design, human-AI collaboration protocols, and trajectory high quality: not simply knowledge quantity.

Additionally Learn: Understanding the Architecture of Qwen3-Subsequent-80B-A3B

Conclusion

The LIMI paper delivers a daring message: you don’t want 10,000 examples to show an AI tips on how to work. You want 78 actually good ones. By specializing in high-quality, real-world collaborations, LIMI achieves state-of-the-art agentic efficiency with a fraction of the info. It proves that company isn’t about scale. It’s about sign.

As AI strikes from chatbots to coworkers, this perception will probably be essential. The longer term belongs to not those that acquire essentially the most knowledge, however to those that design essentially the most significant studying experiences.

Within the age of agentic AI, much less isn’t simply extra. It’s higher!

Login to proceed studying and luxuriate in expert-curated content material.