As Databricks Engineers, now we have the privilege of engaged on difficult issues with nice colleagues. On this Engineering weblog submit, we are going to stroll you thru how we constructed a excessive efficiency charge limiting system at Databricks. In case you are a Databricks consumer, you don’t want to grasp this weblog to have the ability to use the platform to its fullest. However in the event you’re excited about taking a peek beneath the hood, learn on to listen to about a number of the cool stuff we’ve been engaged on!

Background

Price limiting is about controlling useful resource utilization to offer isolation and overload safety between tenants in a multitenant system. Within the context of Databricks, this might be offering isolation between accounts, workspaces, customers, and so on., and is most frequently seen externally as a per time unit restrict, such because the variety of jobs launched per second, variety of API requests per second, and so on. However there may be inside usages of charge limits, corresponding to managing capability between purchasers of a service. Price restrict enforcement performs an vital function in making Databricks dependable, but it surely’s vital to notice that this enforcement incurs overhead that must be minimized.

The Drawback

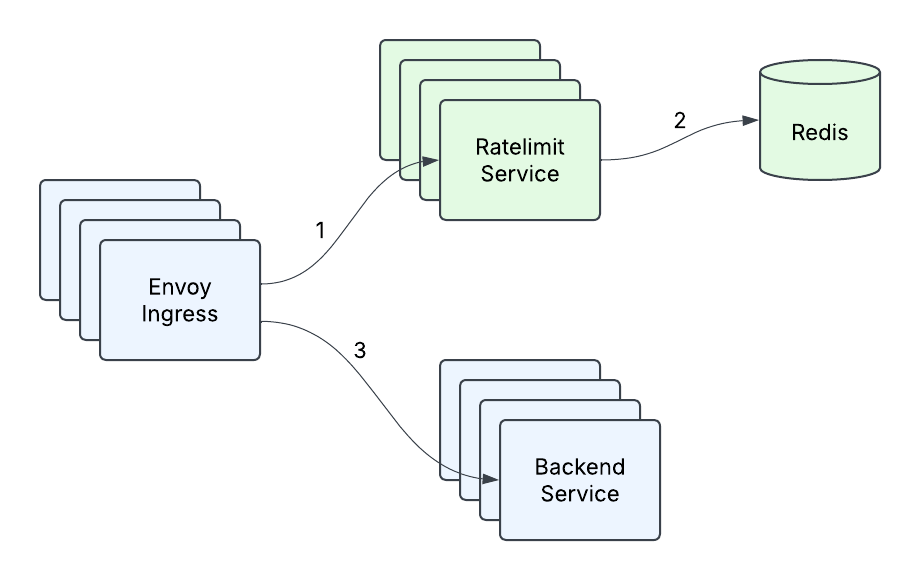

Again in early 2023, the prevailing Ratelimit infrastructure at Databricks consisted of an Envoy ingress gateway making calls to the Ratelimit Service, with a single occasion of Redis backing the service (Determine 1). This was completely appropriate for the prevailing queries per second (QPS) that any cluster of machines inside a area was anticipated to obtain, in addition to for the transient nature of per second counting. However as the corporate expanded its buyer base and added new use instances, it grew to become clear that what had gotten us to that time wouldn’t be enough to fulfill our future wants. With the introduction of real-time mannequin serving and different excessive qps use instances, the place one buyer might be sending orders of magnitude extra visitors than what the Ratelimit Service may at present deal with, just a few issues emerged:

- Excessive tail latency – the tail latency of our service was unacceptably excessive beneath heavy visitors, particularly when contemplating there are two community hops concerned and that there was P99 community latency of 10ms-20ms in one of many cloud suppliers.

- Restricted Throughput – at a sure level, including extra machines and doing level optimizations (corresponding to caching) now not allowed us to deal with extra visitors.

- Redis as a single level of failure – Our single Redis occasion was our single level of failure, and we needed to do one thing about that. It was time to revamp our service.

Terminology

At Databricks, now we have an idea of a RatelimitGroup (RLG), which is a string identifier that represents a useful resource or set of assets that we have to defend, corresponding to an API endpoint. These assets can be protected on sure Dimensions, corresponding to setting limits on the workspace/consumer/account degree. For instance, a dimension would convey “I need to defend FooBarAPI on workspaceId and the workspaceId for this request is 12345.” A Dimension can be represented like this:

A single shouldRateLimit request may have a number of descriptors, and an instance could be setting limits, for a selected API, on the workspace and on the consumer degree.

The place the Descriptor schema will appear to be this:

Answer

Low Latency Responses

The primary drawback we needed to deal with was to enhance the latency of our Ratelimit Service. Price Limiting is in the end only a counting drawback, and we knew we ideally needed to maneuver to a mannequin the place we may at all times reply charge restrict requests in-memory, as a result of it’s ultra-fast and most of our charge limits have been based mostly on QPS, which meant that these counts have been transient and didn’t have to be resilient to service cases restarting or crashing. Our current setup did a restricted quantity of in-memory counting already through the use of Envoy’s constant hashing to extend cache hit charges, by sending the identical request to the identical machine. Nonetheless, 1) this was not doable to share with non-Envoy providers, 2) the project churn throughout service resizes and restarts meant we nonetheless needed to usually synchronize with Redis, and three) constant hashing is liable to hotspotting, and when load wasn’t distributed evenly we oftentimes may solely enhance the variety of cases to try to distribute load higher, resulting in suboptimal service utilization.

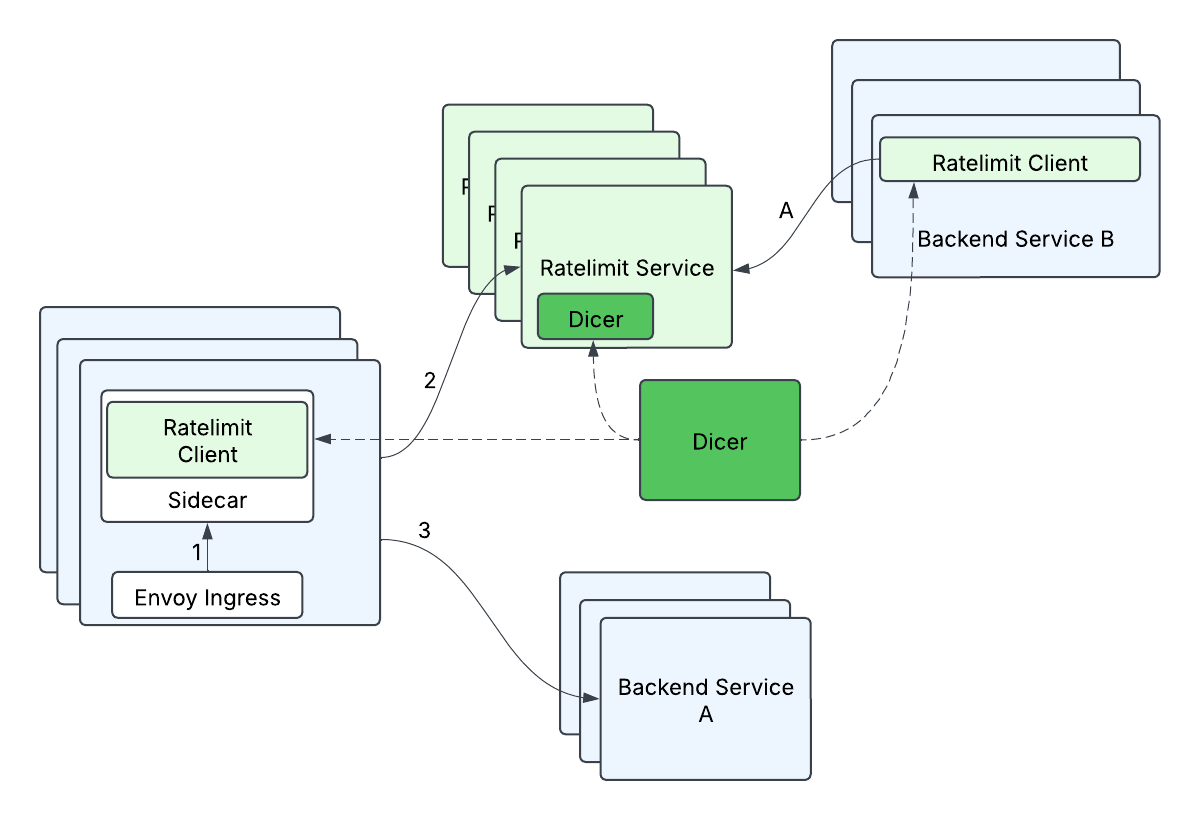

As luck would have it, we had some superior people be a part of Databricks, they usually have been designing Dicer, an autosharding know-how that will make stateful providers simpler to handle, whereas nonetheless holding all the advantages of a stateless service deployment. This might enable us to tame our server aspect latency by holding all the charge restrict counting in reminiscence, as a result of the purchasers would have the ability to ask Dicer to map a request to a vacation spot server, and the server would have the ability to validate with Dicer that it was the correct proprietor of a request. Counting in reminiscence is clearly a lot easier and sooner than wanting up this info from one other supply, and Dicer enabled us to each enhance our server aspect tail latency and scale horizontally with out worrying a few storage answer. i.e this eliminated our single level of failure (Redis) and gave us sooner requests on the identical time!

Scaling Effectively

Despite the fact that we understood how we may clear up a part of our issues, we nonetheless didn’t have a extremely good method to deal with the anticipated large quantity of requests. We needed to be extra environment friendly and smarter about this, moderately than throwing an enormous variety of servers on the drawback. In the end, we didn’t need one consumer request to translate into one request to the Ratelimit Service, as a result of at scale, thousands and thousands of requests to the Ratelimit Service can be costly.

What have been our choices? We thought by way of lots of them however a number of the choices we thought-about have been

- Prefetching tokens on the consumer and making an attempt to reply requests domestically.

- Batching up a set of requests, sending, after which ready for a response to let the visitors by way of.

- Solely sending a fraction of the requests (i.e. Sampling).

None of those choices have been significantly engaging; Prefetching (a) has quite a lot of edge instances throughout initialization and when the tokens run out on the consumer or expire. Batching (b) provides pointless delay and reminiscence stress. And Sampling (c) would solely be appropriate for prime qps instances, however not typically, the place we really may have low charge limits.

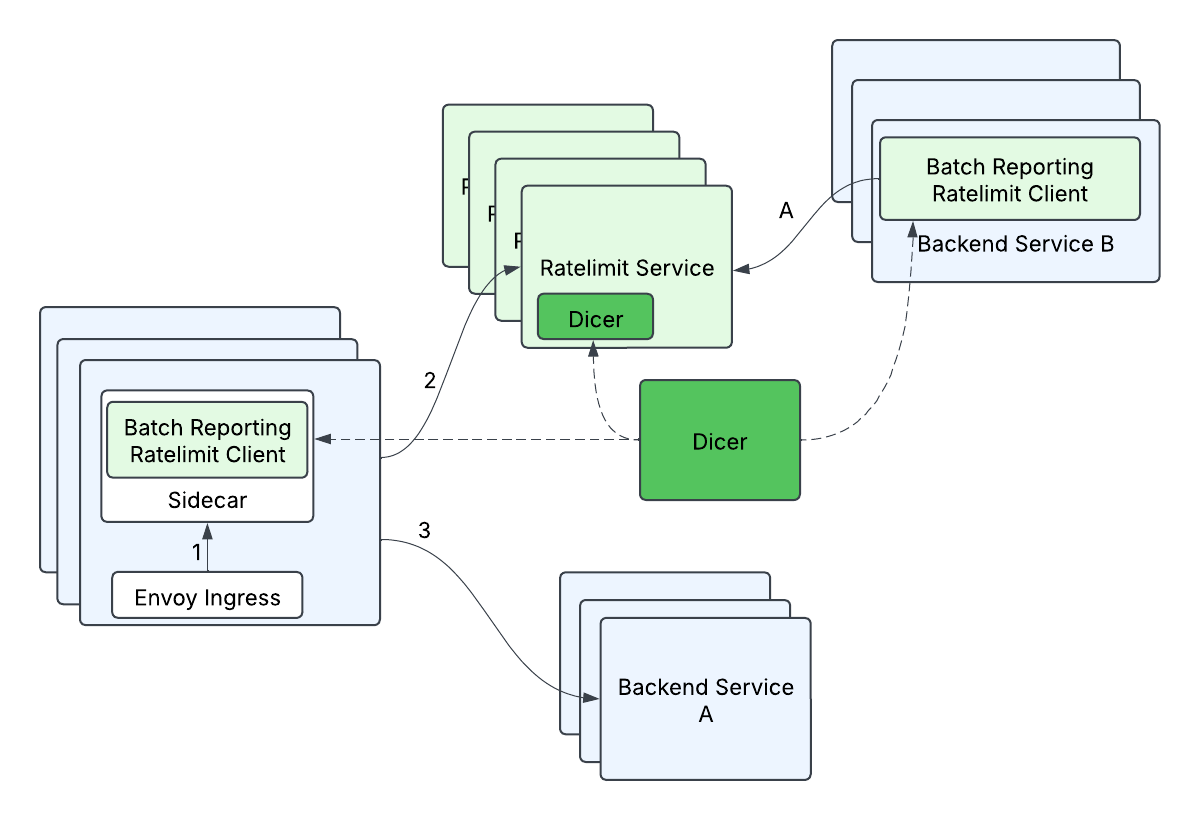

What we ended up designing is a mechanism we name batch-reporting, that mixes two rules: 1) Our purchasers wouldn’t make any distant calls within the important charge restrict path, and a pair of) our purchasers would carry out optimistic charge limiting, the place by default requests can be let by way of except we already knew we needed to reject these explicit requests. We have been effective with not having strict ensures on charge limits as a tradeoff for scalability as a result of backend providers may tolerate some proportion of overlimit. At a excessive degree, batch-reporting does native relying on the consumer aspect, and periodically (e.g. 100ms) reviews again the counts to the server. The server would inform the consumer whether or not any of the entries wanted to be charge restricted.

The batch-reporting stream regarded like this:

- The consumer information what number of requests it let by way of (outstandingHits) and what number of requests it rejected (rejectedHits)

- Periodically, a course of on the consumer will report the collected counts to the server.

- E.g.

KeyABC_SubKeyXYZ: outstandingHits=624, rejectedHits=857;KeyQWD_SubKeyJHP: outstandingHits=876, rejectedHits=0

- E.g.

- Server returns an array of responses

- KeyABC_SubKeyXYZ: rejectTilTimestamp=…, rejectionRate=…

KeyQWD_SubKeyJHP: rejectTilTimestamp=…, rejectionRate=…

- KeyABC_SubKeyXYZ: rejectTilTimestamp=…, rejectionRate=…

The advantages of this strategy have been big; we may have virtually zero-latency charge restrict calls, a 10x enchancment when in comparison with some tail latency calls, and switch spiky charge restrict visitors into (comparatively) fixed qps visitors! Mixed with the Dicer answer for in-memory charge limiting, it’s all easy crusing from right here, proper?

Satan’s within the Particulars

Despite the fact that we had a good suggestion of the tip purpose, there was quite a lot of laborious engineering work to truly make it a actuality. Listed here are a number of the challenges we encountered alongside the best way, and the way we solved them.

Excessive Fanout

As a result of we would have liked to shard based mostly on the RateLimitGroup and dimension, this meant {that a} beforehand single RateLimitRequest with N dimensions may flip into N requests, i.e. a typical fanout request. This might be particularly problematic when mixed with batch-reporting, since a single batched request may fan-out to many (500+) totally different distant calls. If unaddressed, the client-side tail latency would enhance drastically (from ready on only one distant name to ready on 500+ distant calls), and the server-side load would enhance (from 1 distant request total to 500+ distant requests total). We optimized this by grouping descriptors by their Dicer assignments – descriptors assigned to the identical reproduction have been grouped right into a single charge restrict batch request and despatched to that corresponding vacation spot server. This helped to attenuate the rise in client-side tail latencies (some enhance in tail latency is appropriate since batched requests are usually not on the important path however moderately processed in a background thread), and minimizes the elevated load to the server (every server reproduction will deal with at most 1 distant request from a consumer reproduction per batch cycle).

Enforcement Accuracy

As a result of the batch-reporting algorithm is each asynchronous and makes use of a time-based interval to report the up to date counts to the Ratelimit Service, it was very doable that we may enable too many requests by way of earlier than we may implement the speed restrict. Despite the fact that we may outline these limits as fuzzy, we nonetheless needed to provide ensures that we might go X% (e.g. 5%) over the restrict. Going excessively over the restrict may occur due to two foremost causes:

- The visitors throughout one batching window (e.g. 100ms) may exceed the speed restrict coverage.

- Lots of our use instances used the fixed-window algorithm and per-second charge limits. A property of the fixed-window algorithm is that every “window” begins recent (i.e. resets and begins from zero), so we may doubtlessly exceed the speed restrict each second, even throughout fixed (however excessive) visitors!

The best way we fastened this was three-fold:

- We added a rejection-rate within the Ratelimit Service response, in order that we may use previous historical past to foretell when and the way a lot visitors to reject on the consumer.

rejectionRate=(estimatedQps-rateLimitPolicy)/estimatedQpsThis makes use of the idea that the upcoming second’s visitors goes to be much like the previous second’s visitors. - We added defense-in-depth by including a client-side native charge limiter to chop off apparent instances of extreme visitors instantly.

- As soon as we had autosharding in place, we carried out an in-memory token-bucket ratelimiting algorithm, which got here with some nice advantages:

- We may now enable managed bursts of visitors

- Extra importantly, token-bucket “remembers” info throughout time intervals as a result of as a substitute of resetting each time interval just like the fixed-window algorithm, it might probably repeatedly depend, and even go damaging. Thus, if a buyer sends too many requests, we “keep in mind” how a lot over the restrict they went and may reject requests till the bucket refills to no less than zero. We weren’t in a position to help this token bucket in Redis beforehand as a result of token-bucket wanted pretty complicated operations in Redis, which might enhance our Redis latencies. Now, as a result of the token-bucket didn’t undergo from amnesia each time interval, we may eliminate the rejection-rate mechanism.

- Token-bucket with out enabling further burst performance can approximate a sliding-window algorithm, which is a greater model of fixed-window that doesn’t undergo from the “reset” drawback.

The advantages of the token-bucket strategy have been so nice that we ended up changing all our ratelimits to token bucket.

Rebuilding a Aircraft In-Flight

We knew the tip state that we needed to get to, however that required making two impartial main adjustments to a important service, neither of which have been assured to work properly on their very own. And rolling these two adjustments out collectively was not an possibility, for each technical and danger administration causes. A few of the fascinating stuff we needed to do alongside the best way:

- We constructed a localhost sidecar to our envoy ingress in order that we may apply each batch-reporting and auto-sharding, as a result of envoy is third social gathering code we can not change.

- Earlier than we had in-memory charge limiting, we needed to batch writes to Redis through a Lua script with the intention to convey down the tail latency of batch-reporting requests, as a result of sending descriptors one after the other to Redis was too sluggish with all of the community round-trips, even when we had switched to batch execution.

- We constructed a visitors simulation framework with many various visitors patterns and charge restrict insurance policies, so we may consider our accuracy, efficiency, and scalability all through this transition.

Present State and Future Work

With the profitable rollout of each batch-reporting and in-memory token bucket charge limiting, we noticed drastic tail latency enhancements (as much as 10x in some instances!) with sub-linear development in server aspect visitors. Our inside service purchasers are significantly comfortable that there’s no distant name after they make charge restrict calls, and that they’ve the liberty to scale independently of the Ratelimit Service.

The staff has additionally been engaged on different thrilling areas, corresponding to service mesh routing and zero-config overload safety, so maintain tuned for extra weblog posts! And Databricks is at all times wanting for extra nice engineers who could make a distinction, we’d love so that you can be a part of us!