From Linux kernel code to AI at scale, discover Microsoft’s open source evolution and impact.

Microsoft’s engagement with the open source community has transformed the company from a one-time skeptic into one of the world’s leading open source contributors. In fact, over the past three years, Microsoft Azure has been the largest public cloud contributor (and the second largest overall contributor) to the Cloud Native Computing Foundation (CNCF). So, how did we get here? Let’s look at some milestones in our journey and explore how open-source technologies are at the heart of the platforms powering many of Microsoft’s biggest products, like Microsoft 365, and massive-scale AI workloads, including OpenAI’s ChatGPT. Along the way, we have also introduced and contributed to several open-source projects inspired by our own experiences, contributing back to the community and accelerating innovation across the ecosystem.

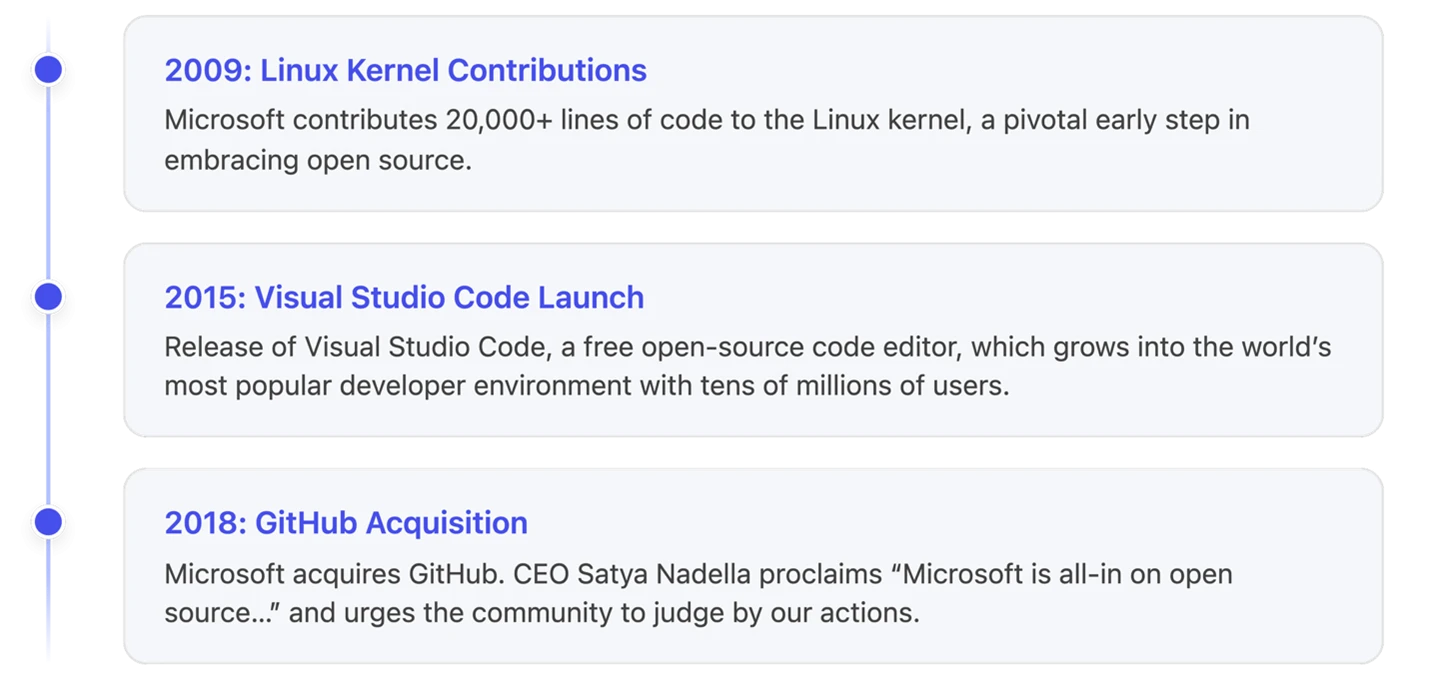

Embracing open source: Key milestones in Microsoft’s journey

2009: A new leaf, 20,000 lines to Linux. In 2009, Microsoft contributed more than 20,000 lines of code to the Linux kernel, initially Hyper‑V drivers, under the GNU General Public License, version 2 (GPLv2). It wasn’t our first open source contribution, but it was a visible moment that signaled a change in how we build and collaborate. In 2011, Microsoft was in the top five companies contributing to Linux. Today, 66% of customer cores in Azure run Linux.

2015: Visual Studio Code, an open source hit. In 2015, Microsoft released Visual Studio Code (VS Code), a lightweight, open-source, cross-platform code editor. Today, Visual Studio and VS Code together have more than 50 million monthly active developers, with VS Code itself widely regarded as the most popular development environment. We believe AI experiences can thrive by leveraging the open-source community, just as VS Code has successfully done over the past decade. With AI becoming an integral part of the modern coding experience, we’ve released the GitHub Copilot Chat extension as open source on GitHub.

2018: GitHub and the “all-in” commitment. In 2018, Microsoft acquired GitHub, the world’s largest developer community platform, which was already home to 28 million developers and 85 million code repositories. This acquisition underscored Microsoft’s transformation. As CEO Satya Nadella said in the announcement, “Microsoft is all-in on open source… When it comes to our commitment to open source, judge us by the actions we have taken in the recent past, our actions today, and in the future.” In the 2024 Octoverse, GitHub reported 518 million public or open-source projects, over 1 billion contributions in 2024, about 70,000 new public or open-source generative AI projects, and about a 59% year-over-year surge in contributions to generative AI projects.

Open source at enterprise scale: Powering the world’s most demanding workloads

Open-source technologies, like Kubernetes and PostgreSQL, have become foundational pillars of modern cloud-native infrastructure. Kubernetes is the second largest open-source project after Linux and now powers millions of containerized workloads globally, while PostgreSQL is one of the most widely adopted relational databases. Azure Kubernetes Service (AKS) and Azure’s managed Postgres take the best of these open-source innovations and elevate them into robust, enterprise-ready managed services. By abstracting away the operational complexity of provisioning, scaling, and securing these platforms, AKS and managed PostgreSQL let organizations focus on building and innovating. The combination of open source flexibility with cloud-scale reliability allows services like Microsoft 365 and OpenAI’s ChatGPT to operate at massive scale while staying highly performant.

COSMIC: Microsoft’s geo-scale, managed container platform powers Microsoft 365’s transition to containers on AKS. It runs millions of cores and is one of the largest AKS deployments in the world. COSMIC bakes in security, compliance, and resilience while embedding architectural and operational best practices into our internal services. The result: drastically reduced engineering effort, faster time-to-market, and improved cost management, even while scaling to millions of monthly users around the world. COSMIC uses Azure and open-source technologies to operate at planet-wide scale: Kubernetes Event-driven Autoscaling (KEDA) for autoscaling, and Prometheus and Grafana for real-time telemetry and dashboards, to name a few.

OpenAI’s ChatGPT: ChatGPT is built on Azure using AKS for container orchestration, Azure Blob Storage for user and AI-generated content, and Azure Cosmos DB for globally distributed data. The scale is staggering: ChatGPT has grown to almost 700 million weekly active users, making it the fastest-growing consumer app in history.1 And yet, OpenAI operates this service with a surprisingly small engineering team. As Microsoft’s Cloud and AI Group Executive Vice President Scott Guthrie highlighted at Microsoft Build in May, ChatGPT “must scale … across more than 10 million compute cores around the globe,” …with roughly 12 engineers to manage all that infrastructure. How? By relying on managed platforms like AKS that combine enterprise capabilities with the best of open source innovation to do the heavy lifting of provisioning, scaling, and healing Kubernetes clusters across the globe.

Consider what happens when you chat with ChatGPT: Your prompt and conversation state are stored in an open-source database (Azure Database for PostgreSQL) so the AI can remember context. The model runs in containers across thousands of AKS nodes. Azure Cosmos DB then replicates data in milliseconds to the datacenter closest to the user, ensuring low latency. All of this is powered by open-source technologies under the hood and delivered as cloud services on Azure. The result: ChatGPT can handle “unprecedented” load, over one billion queries per day, without a hitch and without needing a large operations team.

What Azure teams are building in the open

At Microsoft, our commitment to building in the open runs deep, driven by engineers across Azure who actively shape the future of open-source infrastructure. Our teams don’t just use open-source technologies; they help build and evolve them.

Our open-source philosophy is simple: we contribute upstream first and then integrate those innovations into our downstream products. To support this, we play a pivotal role in upstream open-source projects, collaborating across the industry with partners, customers, and even competitors. Examples of projects we have built or contributed to include:

- Dapr (Distributed Application Runtime): A CNCF-graduated project launched by Microsoft in 2019, Dapr simplifies cloud-agnostic app development with modular building blocks for service invocation, state, messaging, and secrets.

- Radius: A CNCF Sandbox project that lets developers define application services and dependencies, while operators map them to resources across Azure, AWS, or private clouds, treating the app, not the cluster, as the unit of intent.

- Copacetic: A CNCF Sandbox tool that patches container images without full rebuilds, speeding up security fixes. It was originally built to secure Microsoft’s cloud images.

- Dalec: A declarative tool for building secure OS packages and containers, generating software bills of materials (SBOMs) and provenance attestations to produce minimal, reproducible base images.

- SBOM Tool: A command line interface (CLI) for generating SPDX-compliant SBOMs from source or builds, open-sourced by Microsoft to boost transparency and compliance.

- Drasi: A CNCF Sandbox project released in 2024, Drasi reacts to real-time data changes using a Cypher-like query language for change-driven workflows.

- Semantic Kernel and AutoGen: Open-source frameworks for building collaborative AI apps. Semantic Kernel orchestrates large language models (LLMs) and memory, while AutoGen enables multi-agent workflows.

- Phi-4 Mini: A compact 3.8 billion-parameter AI model released in 2025, optimized for reasoning and mathematics on edge devices; available on Hugging Face.

- Kubernetes AI Toolchain Operator (KAITO): A CNCF Sandbox Kubernetes operator that automates AI workload deployment, supporting LLMs, fine-tuning, and retrieval-augmented generation (RAG) across cloud and edge with AKS integration.

- KubeFleet: A CNCF Sandbox project for managing applications across multiple Kubernetes clusters. It offers smart scheduling, progressive deployments, and cloud-agnostic orchestration.

This is just a small sampling of the open-source projects that Microsoft is involved in, each one sharing, in code, the lessons we’ve learned from running systems at a global scale and inviting the community to build alongside us.

Open Source + Azure = Empowering the next generation of innovation

Microsoft’s journey with open source has come a long way from that 20,000-line Linux patch in 2009. Today, open-source technologies are at the heart of many Azure solutions. And conversely, Microsoft’s contributions are helping drive many open-source projects forward, whether it’s commits to Kubernetes; new tools like KAITO, Dapr, and Radius; or research advancements like Semantic Kernel and Phi-4. Our engineers understand that the success of end user solutions like Microsoft 365 and ChatGPT relies on scalable, resilient platforms like AKS, which in turn are built on and sustained by strong, vibrant open source communities.

Join us at Open Source Summit Europe 2025

As we continue to contribute to the open source community, we’re excited to be part of Open Source Summit Europe 2025, taking place August 25–27. You’ll find us at booth D3 with live demos, in-booth sessions covering a wide range of topics, and plenty of opportunities to connect with our Open Source team. Be sure to catch our conference sessions as well, where Microsoft experts will share insights, updates, and stories from our work across the open source ecosystem.

1 TechRepublic, ChatGPT’s On Track For 700M Weekly Users Milestone: OpenAI Goes Mainstream, August 5, 2025.