When people ask about the future of Generative AI in coding, what they usually want to know is: Will there be a point where Large Language Models can autonomously generate and maintain a working software application? Will we be able to just author a natural language specification, hit “generate” and walk away, and AI will be able to do all the coding, testing and deployment for us?

To learn more about where we are today, and what would have to be solved on a path from today to a future like that, we ran some experiments to see how far we could push the autonomy of Generative AI code generation with a simple application, today. The standard and the quality lens applied to the results is the use case of developing digital products, business application software, the type of software that I have been building most in my career. For example, I’ve worked a lot on large retail and listings websites, systems that typically provide RESTful APIs, store data in relational databases, and send events to each other. Risk assessments and definitions of what good code looks like will be different for other situations.

The main goal was to learn about AI’s capabilities. A Spring Boot application like the one in our setup can probably be written in 1-2 hours by an experienced developer with a powerful IDE, and we don’t even bootstrap things that much in real life. However, it was an interesting test case to explore our main question: How might we push autonomy and repeatability of AI code generation?

For the vast majority of our iterations, we used Claude-Sonnet models (either 3.7 or 4). In our experience, these consistently show the highest coding capabilities of the available LLMs, so we found them the most suitable for this experiment.

The strategies

We employed a set of “strategies” one by one to see if and how they can improve the reliability of the generation and the quality of the generated code. All of the strategies were used to improve the probability that the setup generates a working, tested and high quality codebase without human intervention. They were all attempts to introduce more control into the generation process.

Choice of the tech stack

We chose a simple “CRUD” API backend (Create, Read, Update, Delete) implemented in Spring Boot as the goal of the generation.

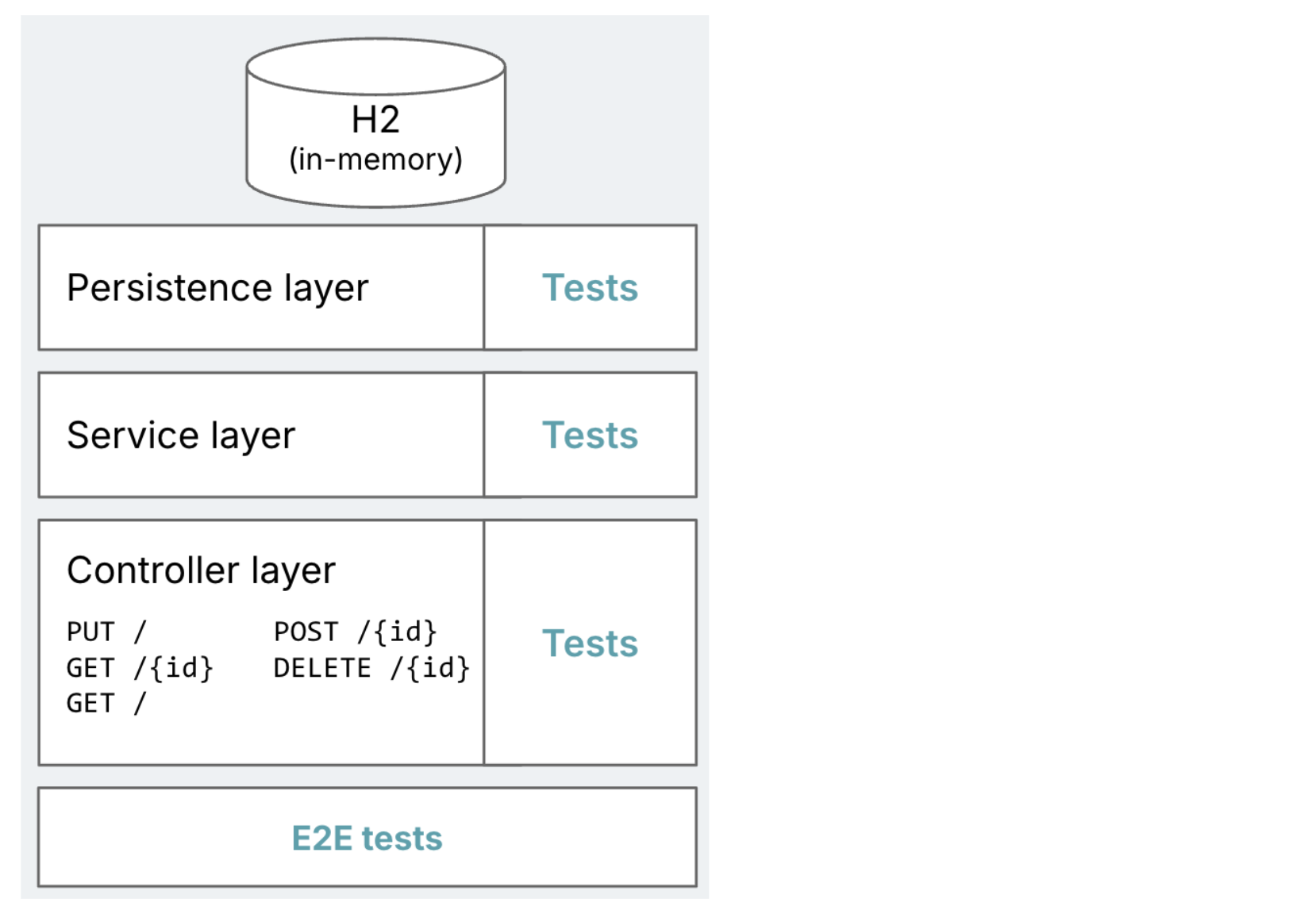

Figure 1: Diagram of the intended target application, with typical Spring Boot layers of persistence, services, and controllers. Highlights how each layer should have tests, plus a set of E2E tests.

As mentioned before, building an application like this is a quite simple use case. The idea was to start very simple, and then if that works, crank up the complexity or variety of requirements.

How can this increase the success rate?

The choice of Spring Boot as the target stack was in itself our first strategy for increasing the chances of success.

- A common tech stack that should be quite prevalent in the training data
- A runtime framework that can do a lot of the heavy lifting, which means less code to generate for AI
- An application topology that has very clearly established patterns: Controller -> Service -> Repository -> Entity, which means that it’s relatively easy to give AI a set of patterns to follow
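
To make the Controller -> Service -> Repository -> Entity topology concrete, here is a minimal plain-Java sketch of the layering. It is an illustration only, not code from the experiment: the `Product` domain, all class names and the in-memory map are assumptions, and the Spring annotations a real application would use are only mentioned in comments so the sketch runs without any dependencies.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

// Entity: the persisted domain object (a JPA @Entity in a real Spring Boot app).
record Product(long id, String name) {}

// Repository: persistence access only (a Spring Data interface in a real app).
class ProductRepository {
    private final Map<Long, Product> rows = new LinkedHashMap<>();

    Product save(Product product) {
        rows.put(product.id(), product);
        return product;
    }

    Optional<Product> findById(long id) {
        return Optional.ofNullable(rows.get(id));
    }
}

// Service: validation and business rules, no HTTP or storage details.
class ProductService {
    private final ProductRepository repository;

    ProductService(ProductRepository repository) {
        this.repository = repository;
    }

    Product create(long id, String name) {
        if (name == null || name.isBlank()) {
            throw new IllegalArgumentException("name must not be blank");
        }
        return repository.save(new Product(id, name));
    }

    Optional<Product> get(long id) {
        return repository.findById(id);
    }
}

// Controller: translates requests into service calls (a @RestController in a real app).
class ProductController {
    private final ProductService service;

    ProductController(ProductService service) {
        this.service = service;
    }

    // Returns a simplified "status body" string instead of a real HTTP response.
    String getProduct(long id) {
        return service.get(id)
                .map(p -> "200 " + p.name())
                .orElse("404 not found");
    }
}
```

Each layer only knows the one directly below it, which is what makes it comparatively easy to hand the AI one pattern per layer.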

Multiple agents

We split the generation process into multiple agents. “Agent” here means that each of these steps is handled by a separate LLM session, with a specific role and instruction set. We did not make any other configurations per step for now, e.g. we did not use different models for different steps.

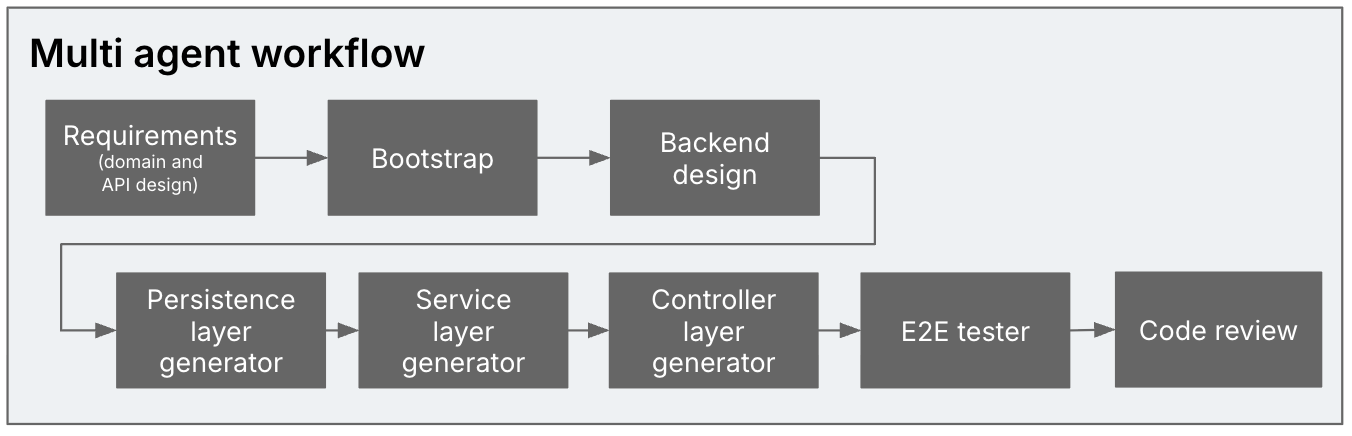

Figure 2: Multiple agents in the generation process: Requirements analyst -> Bootstrapper -> Backend designer -> Persistence layer generator -> Service layer generator -> Controller layer generator -> E2E tester -> Code reviewer

To not taint the results with subpar coding abilities, we used a setup on top of an existing coding assistant that has a bunch of coding-specific abilities already: It can read and search a codebase, react to linting errors, retry when it fails, and so on. We needed one that can orchestrate subtasks with their own context window. The only one we were aware of at the time that can do that is Roo Code, and its fork Kilo Code. We used the latter. This gave us a facsimile of a multi-agent coding setup without having to build something from scratch.

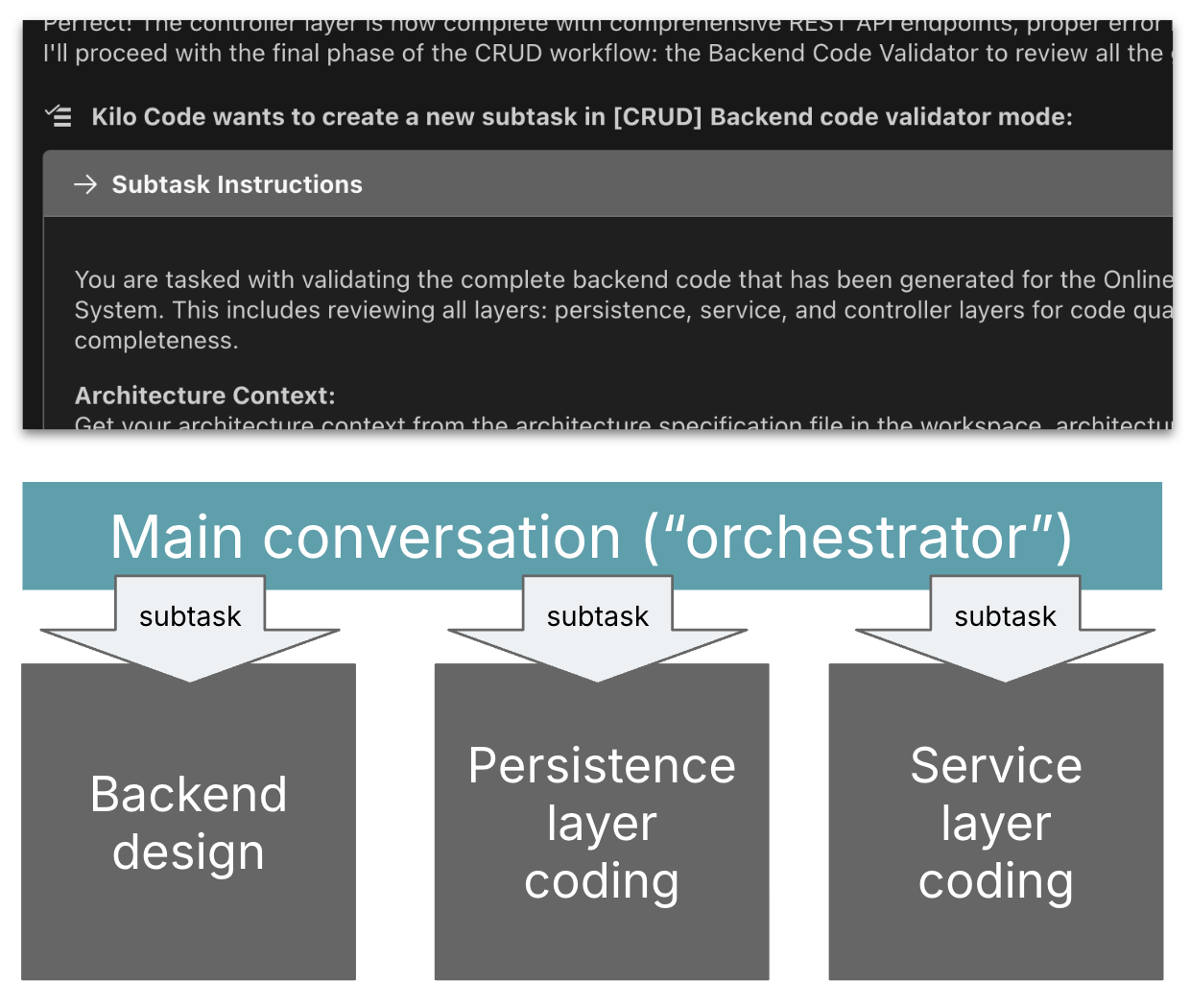

Figure 3: Subtasking setup in Kilo: An orchestrator session delegates to subtask sessions

With a carefully curated allow-list of terminal commands, a human only needs to hit “approve” here and there. We let it run in the background and checked on it every now and then, and Kilo gave us a sound notification whenever it needed input or an approval.

How can this increase the success rate?

Even though technically the context window sizes of LLMs are increasing, LLM generation results still become more hit or miss the longer a session becomes. Many coding assistants now offer the ability to compress the context intermittently, but common advice to coders using agents is still that they should restart coding sessions as frequently as possible.

Secondly, it is a very established prompting practice to assign roles and perspectives to LLMs to increase the quality of their results. We could take advantage of that as well with this separation into multiple agentic steps.

Stack-specific over general purpose

As you can maybe already tell from the workflow and its separation into the typical controller, service and persistence layers, we didn’t shy away from using techniques and prompts specific to the Spring target stack.

How can this increase the success rate?

One of the key things people are excited about with Generative AI is that it can be a general purpose code generator that can turn natural language specifications into code in any stack. However, just telling an LLM to “write a Spring Boot application” is not going to yield the high quality and contextual code you need in a real-world digital product scenario without further instructions (more on that in the results section). So we wanted to see how stack-specific our setup would have to become to make the results high quality and repeatable.

Use of deterministic scripts

For bootstrapping the application, we used a shell script rather than having the LLM do this. After all, there is a CLI to create an up-to-date, idiomatically structured Spring Boot application, so why would we want AI to do this?

The bootstrapping step was the only one where we used this technique, but it’s worth remembering that an agentic workflow like this by no means has to be entirely up to AI. We can mix and match with “proper software” wherever appropriate.

Code examples in prompts

Using example code snippets for the various patterns (Entity, Repository, …) turned out to be the most effective strategy to get AI to generate the type of code we wanted.

How can this increase the success rate?

Why do we need these code samples, and why do they matter for our digital products and business application software lens?

The simplest example from our experiment is the use of libraries. For example, if not specifically prompted, we found that the LLM frequently uses javax.persistence, which has been superseded by jakarta.persistence. Extrapolate that example to a large engineering organization that has a specific set of coding patterns, libraries, and idioms that they want to use consistently across all their codebases. Sample code snippets are a very effective way to communicate these patterns to the LLM, and ensure that it uses them in the generated code.
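
As a complement to sample snippets (and not something we did in this experiment), a library nuance like this one can also be checked deterministically after generation. The following is a minimal sketch under that assumption: `PersistenceImportCheck` and its method are hypothetical names, and the check only looks at import lines in a source string.

```java
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical helper: flags source lines that still import the superseded
// javax.persistence package instead of jakarta.persistence.
class PersistenceImportCheck {
    private static final Pattern OUTDATED_IMPORT =
            Pattern.compile("^\\s*import\\s+(static\\s+)?javax\\.persistence\\.");

    // Returns the offending lines in order; an empty list means the source passes.
    static List<String> findOutdatedImports(String source) {
        return source.lines()
                .filter(line -> OUTDATED_IMPORT.matcher(line).find())
                .map(String::strip)
                .toList();
    }

    public static void main(String[] args) {
        String generated = """
                import javax.persistence.Entity;
                import jakarta.persistence.Id;
                """;
        // Prints the list of outdated import lines found in the sample.
        System.out.println(findOutdatedImports(generated));
    }
}
```

A check like this only catches the one nuance it was written for, which is why the sample snippets remain the more general way to communicate an organization’s patterns.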

Also consider the use case of AI maintaining this application over time, and not just creating its first version. We would want it to be ready to use a new framework or new framework version as and when it becomes relevant, without having to wait for it to be dominant in the model’s training data. We would need a way for the AI tooling to reliably pick up on these library nuances.

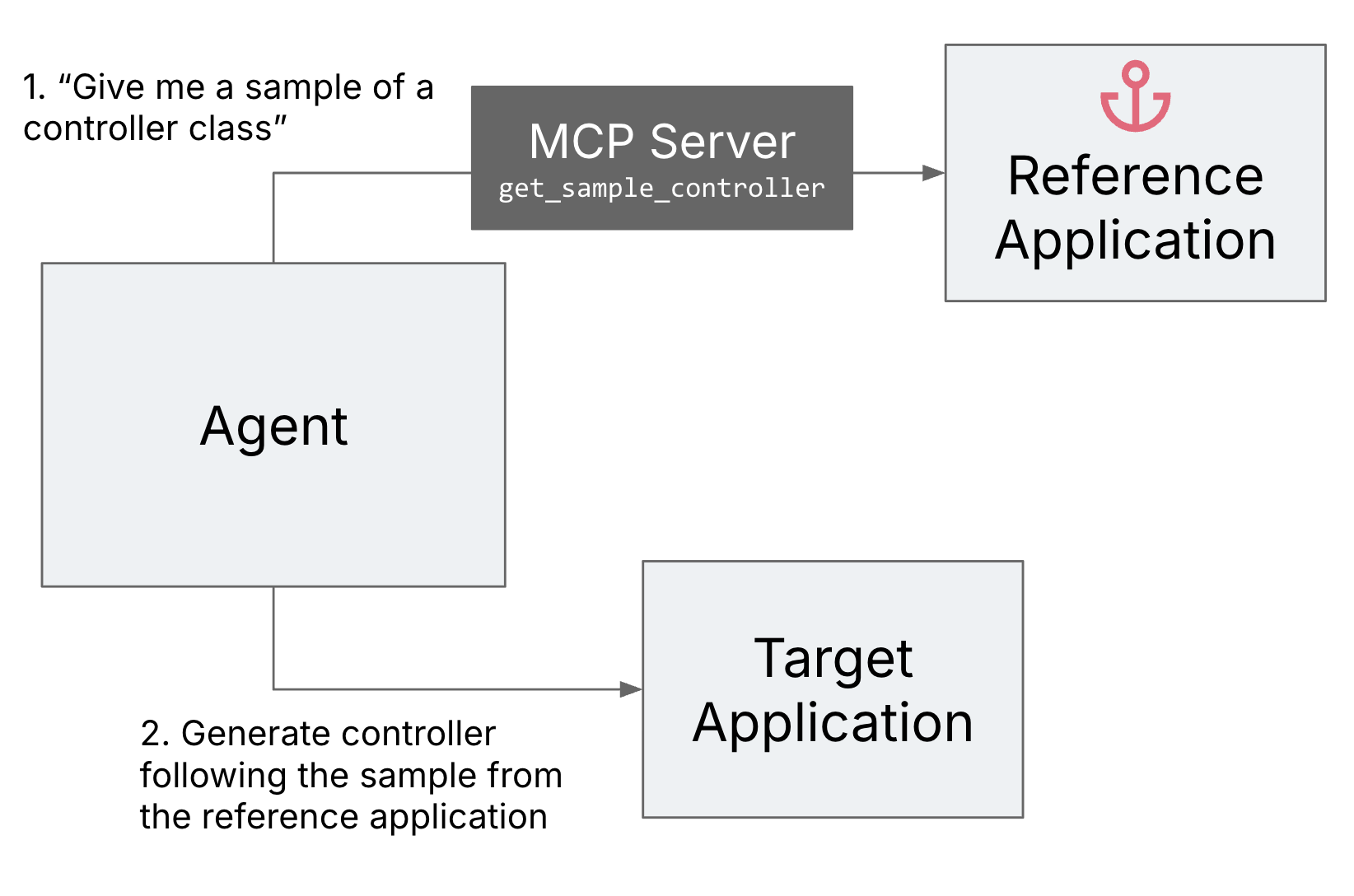

Reference application as an anchor

It turned out that maintaining the code examples in the natural language prompts is quite tedious. When you iterate on them, you don’t get immediate feedback on whether your sample would actually compile, and you also have to make sure that all the separate samples you provide are consistent with each other.

To improve the experience of the developer implementing the agentic workflow, we set up a reference application and an MCP (Model Context Protocol) server that can provide the sample code to the agent from this reference application. This way we could easily make sure that the samples compile and are consistent with each other.

Figure 4: Reference application as an anchor

Generate-review loops

We introduced a review agent to double check AI’s work against the original prompts. This added an additional safety net to catch errors and ensure the generated code adhered to the requirements and instructions.

How can this increase the success rate?

In an LLM’s first generation, it often doesn’t follow all the instructions correctly, especially when there are a lot of them. However, when asked to review what it created, and how it matches the original instructions, it’s usually quite good at reasoning about the fidelity of its work, and can fix many of its own errors.
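
The control flow of such a generate-review loop can be sketched in a few lines. This is a hedged illustration, not our actual Kilo setup: the `Generator` and `Reviewer` interfaces are hypothetical stand-ins for separate LLM sessions, and the `maxRounds` budget is an assumption added so the loop always terminates.

```java
import java.util.List;

// Stand-in for a generator session: produces code from the instructions,
// optionally taking the findings of a previous review into account.
interface Generator {
    String generate(String instructions, List<String> findings);
}

// Stand-in for a reviewer session: returns deviations from the instructions;
// an empty list means none were found.
interface Reviewer {
    List<String> review(String instructions, String code);
}

// Outcome of the loop: the latest code, and whether a review round accepted it.
record LoopResult(String code, boolean approved) {}

class GenerateReviewLoop {
    static LoopResult run(String instructions, Generator generator,
                          Reviewer reviewer, int maxRounds) {
        String code = generator.generate(instructions, List.of());
        for (int round = 0; round < maxRounds; round++) {
            List<String> findings = reviewer.review(instructions, code);
            if (findings.isEmpty()) {
                return new LoopResult(code, true);
            }
            // Regenerate with the reviewer's findings as extra input.
            code = generator.generate(instructions, findings);
        }
        // Round budget used up; the latest code has not passed a review.
        return new LoopResult(code, false);
    }
}
```

Returning an explicit `approved` flag keeps the “not yet accepted” case visible to whatever step comes next, for example a human check.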

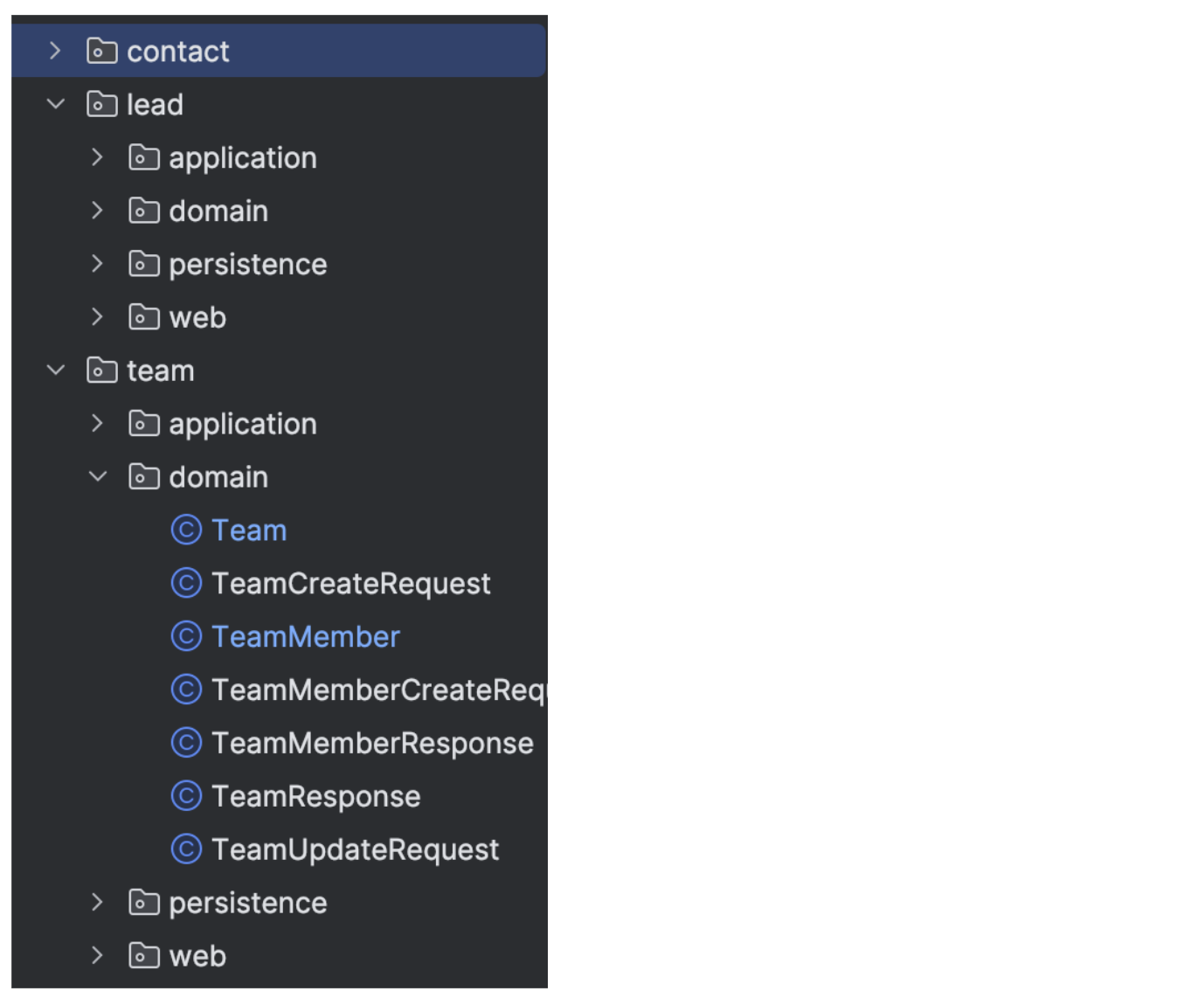

Codebase modularization

We asked the AI to divide the domain into aggregates, and use those to determine the package structure.

Figure 5: Sample of modularised package structure

This is actually an example of something that was hard to get AI to do without human oversight and correction. It is a concept that is also hard for humans to do well.

Here is a prompt excerpt where we ask AI to group entities into aggregates during the requirements analysis step:

An aggregate is a cluster of domain objects that can be treated as a single unit, it must stay internally consistent after each business operation.

For each aggregate:
- Name root and contained entities
- Explain why this aggregate is sized the way it is (transaction size, concurrency, read/write patterns).

We didn’t spend much effort on tuning these instructions and they can probably be improved, but in general, it’s not trivial to get AI to apply a concept like this well.

How can this increase the success rate?

There are many benefits of code modularisation that improve the quality of the runtime, like performance of queries, or transactionality concerns. But it also has many benefits for maintainability and extensibility, for both humans and AI:

- Good modularisation limits the number of places where a change needs to be made, which means less context for the LLM to keep in mind during a change.
- You can re-apply an agentic workflow like this one to one module at a time, limiting token usage, and reducing the size of a change set.
- Being able to clearly limit an AI task’s context to specific code modules opens up possibilities to “freeze” all others, to reduce the chance of unintended changes. (We did not try this here though.)

Results

Round 1: 3-5 entities

For most of our iterations, we used domains like “Simple product catalog” or “Book tracking in a library”, and edited down the domain design done by the requirements analysis phase to a maximum of 3-5 entities. The only logic in the requirements was a few validations. Other than that, we just asked for straightforward CRUD APIs.

We ran about 15 iterations of this category, with increasing sophistication of the prompts and setup. An iteration for the full workflow usually took about 25-30 minutes, and cost $2-3 of Anthropic tokens ($4-5 with “thinking” enabled).

Ultimately, this setup could repeatedly generate a working application that followed most of our specifications and conventions with hardly any human intervention. It always ran into some errors, but could frequently fix them itself.

Round 2: Pre-existing schema with 10 entities

To crank up the size and complexity, we pointed the workflow at a pared down existing schema for a Customer Relationship Management application (~10 entities), and also switched from in-memory H2 to Postgres. Like in round 1, there were a few validation and business rules, but no logic beyond that, and we asked it to generate CRUD API endpoints.

The workflow ran for 4-5 hours, with quite a few human interventions in between.

As a second step, we provided it with the full set of fields for the main entity, and asked it to expand it from 15 to 50 fields. This ran for another hour.

A game of whac-a-mole

Overall, we could definitely see an improvement as we were applying more of the strategies. But ultimately, even in this quite controlled setup with very specific prompting and a relatively simple target application, we still found issues in the generated code all the time. It’s a bit like whac-a-mole: every time you run the workflow, something else happens, and you add something else to the prompts or the workflow to try and mitigate that.

These were some of the patterns that are particularly problematic for a real world business application or digital product:

Overeagerness

We frequently got additional endpoints and features that we did not ask for in the requirements. We even saw it add business logic that we didn’t ask for, e.g. when it came across a domain term that it knew how to calculate. (“Pro-rated revenue, I know what that is! Let me add the calculation for that.”)

Potential mitigation

This can be reined in to an extent with the prompts, and by repeatedly reminding AI that we ONLY want what is specified. The reviewer agent can also help catch some of the excess code (though we’ve seen the reviewer delete too much code in its attempt to fix that). But this still happened in some shape or form in almost all of our iterations. We made one attempt at lowering the temperature to see if that would help, but as it was only one attempt in an earlier version of the setup, we can’t conclude much from the results.

Gaps in the requirements will be filled with assumptions

A priority: String field in an entity was assumed by AI to have the value set “1”, “2”, “3”. When we introduced the expansion to more fields later, even though we didn’t ask for any changes to the priority field, it changed its assumptions to “low”, “medium”, “high”. Apart from the fact that it would be a lot better to have introduced an Enum here, as long as the assumptions stay in the tests only, it might not be a big issue yet. But this could be quite problematic and have a heavy impact on a production database if it happened to a default value.
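
To illustrate the Enum point, here is a minimal sketch of what an explicit value set could look like. It is an assumption for illustration: the requirements in our experiment never specified the allowed values, so `LOW`, `MEDIUM`, `HIGH` and the `parse` helper are hypothetical.

```java
import java.util.Locale;

// Hypothetical value set; in our runs the AI assumed "1", "2", "3" at first
// and "low", "medium", "high" later, because nothing was specified.
enum Priority {
    LOW, MEDIUM, HIGH;

    // Parses external input strictly, so an unexpected value fails loudly
    // instead of being stored silently.
    static Priority parse(String raw) {
        if (raw == null) {
            throw new IllegalArgumentException("priority must not be null");
        }
        try {
            return valueOf(raw.strip().toUpperCase(Locale.ROOT));
        } catch (IllegalArgumentException e) {
            throw new IllegalArgumentException("unknown priority: " + raw, e);
        }
    }
}
```

With a type like this, a silent switch from one assumed value set to another would show up as a failing parse rather than as inconsistent strings in the data.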

Potential mitigation

We’d somehow have to make sure that the requirements we give are as complete and detailed as possible, and include a value set in this case. But historically, we have not been great at that… We have seen some AI be very helpful in helping humans find gaps in their requirements, but the risk of incomplete or incoherent requirements always remains. And the goal here was to test the boundaries of AI autonomy, so that autonomy is definitely limited at this requirements step.

Brute force fixes

“[There is a] lazy-loaded relationship that is causing JSON serialization problems. Let me fix this by adding @JsonIgnore to the field”. Similar things have also happened to me multiple times in agent-assisted coding sessions, from “the build is running out of memory, let’s just allocate more memory” to “I can’t get the test to work right now, let’s skip it for now and move on to the next task”.

Potential mitigation

We don’t have any idea how to prevent this.

Declaring success in spite of red tests

AI frequently claimed the build and tests were successful and moved on to the next step, even though they were not, and even though our instructions explicitly stated that the task is not done if the build or tests are failing.

Potential mitigation

This might be easier to fix than the other things mentioned here, by a more sophisticated agent workflow setup that has deterministic checkpoints and does not allow the workflow to continue unless tests are green. However, experience from agentic workflows in business process automation has already shown that LLMs find ways to get around that. In the case of code generation, I would imagine they would still delete or skip tests to get beyond that checkpoint.
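
A deterministic checkpoint of this kind could look roughly like the sketch below. We did not build this in the experiment; `BuildResult`, `Checkpoint` and the idea of comparing against a baseline test count are assumptions for illustration, and the numbers would have to come from the build tool’s own test report rather than from the LLM’s summary.

```java
// Hypothetical summary of one build run, e.g. parsed from the build tool's test report.
record BuildResult(int exitCode, int testsRun, int failures, int skipped) {}

class Checkpoint {
    // baselineTests: number of tests that ran at the previous green checkpoint.
    static boolean mayProceed(BuildResult result, int baselineTests) {
        boolean green = result.exitCode() == 0 && result.failures() == 0;
        boolean nothingSkipped = result.skipped() == 0;
        // A shrinking test count hints that tests were deleted to get past the gate.
        boolean noTestsRemoved = result.testsRun() >= baselineTests;
        return green && nothingSkipped && noTestsRemoved;
    }
}
```

Counting tests is a crude guard: it would catch deleted or skipped tests, but not tests whose assertions were weakened, so it narrows the loophole rather than closing it.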

Static code analysis issues

We ran SonarQube static code analysis on two of the generated codebases. Here is an excerpt of the issues that were found:

| Issue | Severity | Sonar tags | Notes |
|---|---|---|---|
| Replace this usage of ‘Stream.collect(Collectors.toList())’ with ‘Stream.toList()’ and ensure that the list is unmodified. | Major | java16 | From Sonar’s “Why”: The key problem is that .collect(Collectors.toList()) actually returns a mutable kind of List while in the majority of cases unmodifiable lists are preferred. |
| Merge this if statement with the enclosing one. | Major | clumsy | In general, we saw a lot of ifs and nested ifs in the generated code, in particular in mapping and validation code. On a side note, we also saw a lot of null checks with `if` instead of the use of `Optional`. |
| Remove this unused method parameter “event”. | Major | cert, unused | From Sonar’s “Why”: A typical code smell known as unused function parameters refers to parameters declared in a function but not used anywhere within the function’s body. While this might seem harmless at first glance, it can lead to confusion and potential errors in your code. |
| Complete the task associated to this TODO comment. | Info | | AI left TODOs in the code, e.g. “// TODO: This would be populated by joining with lead entity or separate service calls. For now, we’ll leave it null - it can be populated by the service layer” |
| Define a constant instead of duplicating this literal (…) 10 times. | Critical | design | From Sonar’s “Why”: Duplicated string literals make the process of refactoring complex and error-prone, as any change would need to be propagated on all occurrences. |
| Call transactional methods via an injected dependency instead of directly via ‘this’. | Critical | | From Sonar’s “Why”: A method annotated with Spring’s @Async, @Cacheable or @Transactional annotations will not work as expected if invoked directly from within its class. |

I would argue that all of these issues are relevant observations that lead to harder and riskier maintainability, even in a world where AI does all the maintenance.
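
As an illustration of the first issue in the table, the following small sketch contrasts the two ways of collecting a stream into a list. The class and method names are made up for this example; the behaviour shown relies on the documented contract of `Stream.toList()` (Java 16+), while `Collectors.toList()` makes no guarantee about mutability.

```java
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class ToListDemo {
    static List<String> viaCollector() {
        // No guarantee about mutability; in practice the result is often modifiable.
        return Stream.of("a", "b").collect(Collectors.toList());
    }

    static List<String> viaToList() {
        // Java 16+: specified to return an unmodifiable list.
        return Stream.of("a", "b").toList();
    }

    public static void main(String[] args) {
        List<String> unmodifiable = viaToList();
        try {
            unmodifiable.add("c");
        } catch (UnsupportedOperationException e) {
            System.out.println("Stream.toList() result rejects modification");
        }
    }
}
```

Both methods return the same elements, so the replacement is usually safe. The risk Sonar points at is code elsewhere that quietly relies on being able to modify the returned list.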

Potential mitigation

It is of course possible to add an agent to the workflow that looks at the issues and fixes them one by one. However, I know from the real world that not all of them are relevant in every context, and teams often deliberately mark issues as “won’t fix”. So there is still some nuance