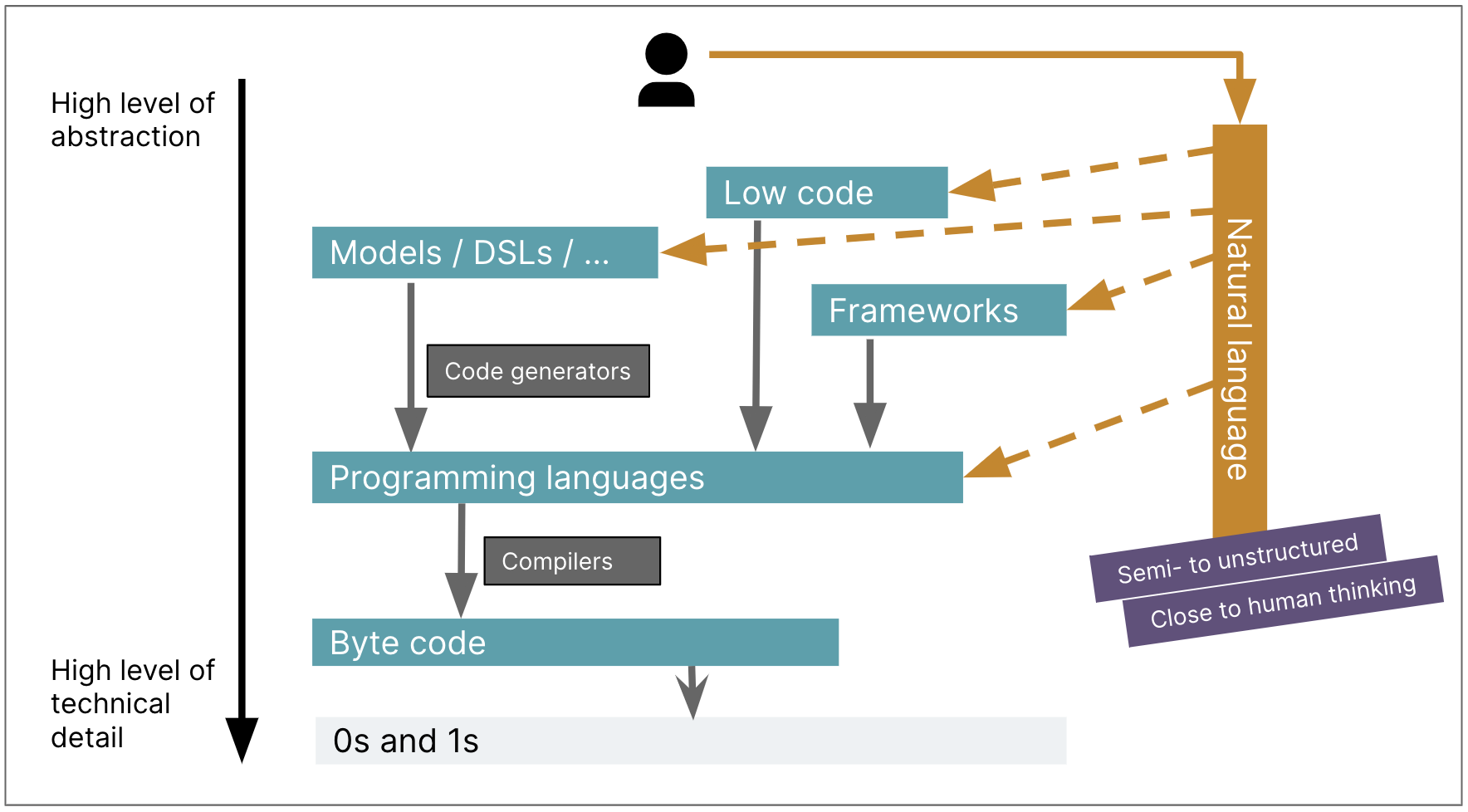

Like most loudmouths in this field, I've been paying a lot of attention to the role that generative AI systems may play in software development. I think the appearance of LLMs will change software development to a similar degree as the change from assembler to the first high-level programming languages. The further development of languages and frameworks increased our abstraction level and productivity, but didn't have that kind of impact on the nature of programming. LLMs are making that degree of impact, but with the distinction that they aren't just raising the level of abstraction. They are also forcing us to consider what it means to program with non-deterministic tools.

HLLs introduced a radically new level of abstraction. With assembler I'm thinking about the instruction set of a particular machine. I have to figure out how to do even simple actions by moving data into the right registers to invoke those specific actions. HLLs meant I could now think in terms of sequences of statements, conditionals to choose between alternatives, and iteration to repeatedly apply statements to collections of data values. I can introduce names into many aspects of my code, making it clear what values are supposed to represent. Early languages certainly had their limitations. My first professional programming was in Fortran IV, where "IF" statements didn't have an "ELSE" clause, and I had to remember to name my integer variables so they started with the letters "I" through "N".

Relaxing such restrictions and gaining block structure ("I can have more than one statement after my IF") made my programming easier (and more fun), but these are all the same kind of thing. Now I rarely write loops and I instinctively pass functions as data, but I'm still talking to the machine in a similar way to how I did all those years ago on the Dorset moors with Fortran. Ruby is a far more sophisticated language than Fortran, but it has the same ambience, in a way that Fortran and PDP-11 machine instructions do not.

So far I haven't had the opportunity to do more than dabble with the best Gen-AI tools, but I'm fascinated as I listen to friends and colleagues share their experiences. I'm convinced that this is another fundamental change: talking to the machine in prompts is as different from Ruby as Fortran is from assembler. But this is more than a huge jump in abstraction. When I wrote a Fortran function, I could compile it a hundred times, and the result still manifested the exact same bugs. LLMs introduce a non-deterministic abstraction, so I can't just store my prompts in git and know that I'll get the same behavior each time. As my colleague Birgitta put it, we're not just moving up the abstraction levels, we're moving sideways into non-determinism at the same time.

illustration: Birgitta Böckeler
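To make the contrast between recompiling a Fortran function and re-running a prompt a little more concrete, here is a minimal Python sketch under loudly stated assumptions. `compiled_function` stands in for ordinary deterministic code, while the hypothetical `toy_llm` mimics temperature sampling, one common way language models pick their next word from a probability distribution. The function names, the `TOY_LOGITS` scores, and the single-word "model" are all invented for illustration; this is not how any particular Gen-AI tool works.

```python
import math
import random

# Deterministic code: the same input gives the same output on every run,
# so any bug in it also recurs on every run.
def compiled_function(x: int) -> int:
    return x * 2 + 1

# Hypothetical toy stand-in for an LLM (an assumption, not a real model or API):
# made-up scores ("logits") for the next word it might produce.
TOY_LOGITS = {"loop": 2.0, "map": 1.5, "recursion": 0.5}

def toy_llm(prompt: str, temperature: float, rng: random.Random) -> str:
    """Pick a next word for `prompt` (ignored in this toy) by sampling."""
    if temperature == 0:
        # Greedy decoding: always return the highest-scoring word.
        return max(TOY_LOGITS, key=TOY_LOGITS.get)
    words = list(TOY_LOGITS)
    # Softmax weights: higher temperature flattens the distribution.
    weights = [math.exp(TOY_LOGITS[w] / temperature) for w in words]
    return rng.choices(words, weights=weights, k=1)[0]

if __name__ == "__main__":
    rng = random.Random()  # unseeded, so sampled results vary between runs
    prompt = "Sum a list of numbers using a"
    print({compiled_function(20) for _ in range(100)})  # prints {41}
    print({toy_llm(prompt, 1.0, rng) for _ in range(100)})  # usually several words
```

Storing `prompt` in git pins down the input, just as storing Fortran source did, but in this toy only the first call is guaranteed to repeat its answer. Setting the temperature to zero or seeding the generator makes the toy repeatable; that is a property of the sketch, and real hosted models may not behave so predictably.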

As we learn to use LLMs in our work, we have to figure out how to live with this non-determinism. This change is dramatic, and it rather excites me. I'm sure I'll be sad about some things we'll lose, but there will also be things we'll gain that few of us understand yet. This evolution in non-determinism is unprecedented in the history of our profession.