When it comes to artificial intelligence, appearances can be deceiving. The mystery surrounding the inner workings of large language models (LLMs) stems from their vast scale, complex training procedures, hard-to-predict behavior, and elusive interpretability.

Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) recently examined how LLMs fare on variations of different tasks, revealing insights into the interplay between memorization and reasoning skills. It turns out that the models’ reasoning abilities are often overestimated.

The study compared “default tasks,” the common tasks a model is trained and tested on, with “counterfactual scenarios,” hypothetical situations that deviate from default conditions, which models such as GPT-4 and Claude can usually be expected to handle. Rather than creating entirely new tasks, the researchers tweaked existing ones to push them outside the models’ comfort zones. They used a variety of datasets and benchmarks tailored to different aspects of the models’ capabilities, including arithmetic, chess, code evaluation, and logical reasoning.

When users interact with language models, any arithmetic is usually in base-10, the number base most familiar to the models. But observing that they perform well in base-10 could give a false impression that they are strongly competent at addition. Logically, a model with genuinely good addition skills should show reliably high accuracy across all number bases, much like a calculator or computer. The research showed instead that these models are not as robust as many initially assume. Their high performance is limited to common task variants, and they suffer consistent and severe performance drops in unfamiliar counterfactual scenarios, indicating a lack of generalizable addition ability.
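The base-10 versus other-base comparison can be illustrated with a small sketch. This is not the researchers' benchmark: the function names, the two-digit problem format, and the toy `base10_only_solver` (a stand-in for a system that learned only the default condition) are all assumptions made for illustration.

```python
import random

DIGITS = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"


def to_base(n: int, base: int) -> str:
    """Render a non-negative integer as a digit string in the given base."""
    if n == 0:
        return "0"
    out = []
    while n > 0:
        n, remainder = divmod(n, base)
        out.append(DIGITS[remainder])
    return "".join(reversed(out))


def add_in_base(a: str, b: str, base: int) -> str:
    """Reference answer: add two digit strings that are written in `base`."""
    return to_base(int(a, base) + int(b, base), base)


def make_items(base: int, count: int, seed: int = 0):
    """Generate (a, b, expected) triples of two-digit addition problems."""
    rng = random.Random(seed)
    items = []
    for _ in range(count):
        a = to_base(rng.randrange(base, base * base), base)
        b = to_base(rng.randrange(base, base * base), base)
        items.append((a, b, add_in_base(a, b, base)))
    return items


def base10_only_solver(a: str, b: str, base: int) -> str:
    """Hypothetical solver that ignores `base` and always adds in decimal.

    Only meaningful for bases up to 10, where every digit is 0-9.
    """
    return str(int(a) + int(b))


def accuracy(solver, base: int, count: int = 200, seed: int = 0) -> float:
    """Fraction of generated problems the solver answers exactly."""
    items = make_items(base, count, seed)
    correct = sum(solver(a, b, base) == expected for a, b, expected in items)
    return correct / len(items)


if __name__ == "__main__":
    for base in (10, 9, 8):
        print(base, accuracy(base10_only_solver, base))
```

The toy solver is perfect in the default condition (base-10) but loses accuracy in base-9 or base-8 whenever a carry occurs, which is the kind of gap a counterfactual evaluation is meant to expose. For example, `add_in_base("27", "62", 9)` returns `"100"`, while decimal addition of the same digit strings gives `89`.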

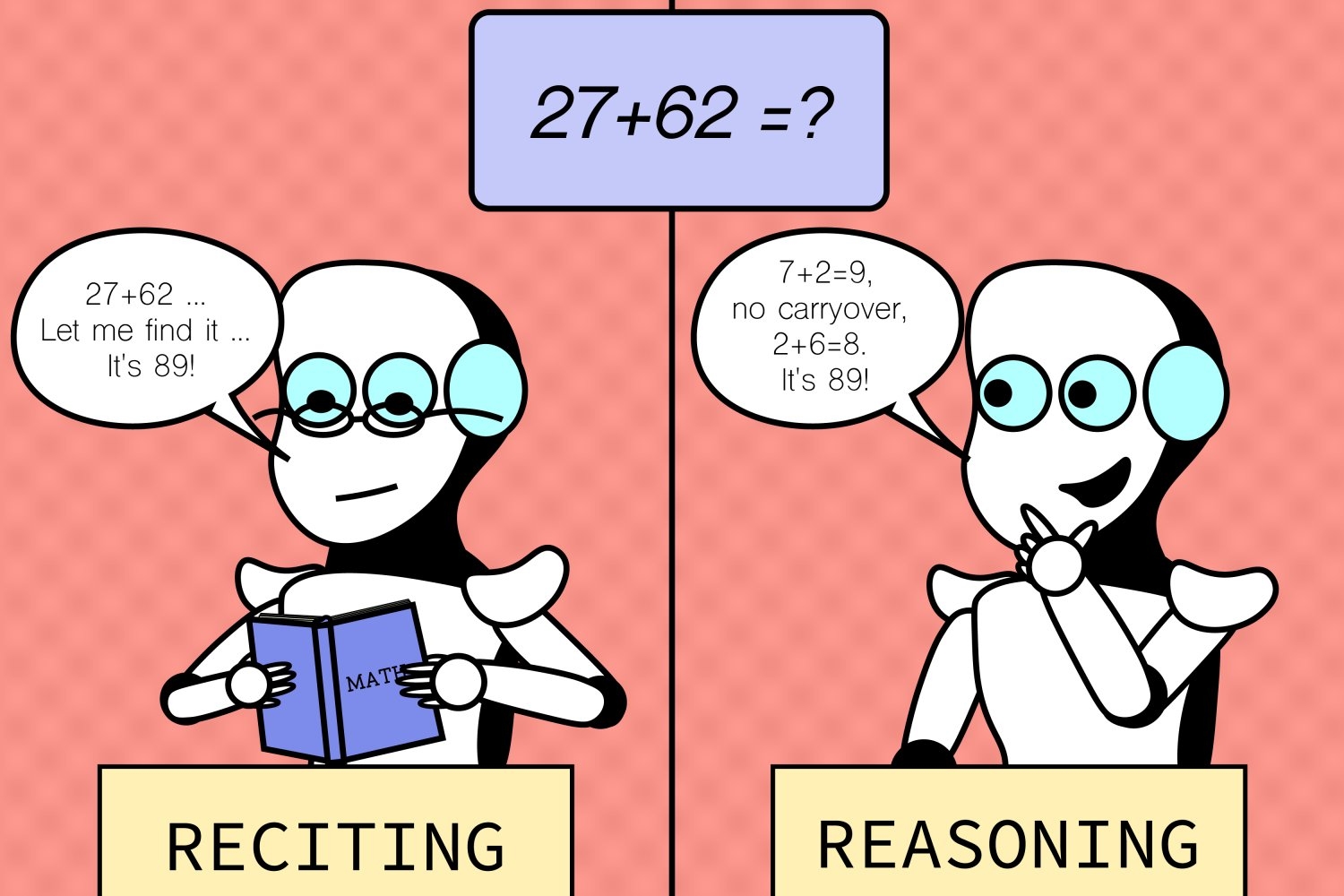

The pattern held for many other tasks, including musical chord fingering, spatial reasoning, and even chess problems in which the starting positions of pieces were slightly altered. While human players can be expected to determine the legality of moves in altered scenarios given enough time, the models struggled and could not perform better than random guessing, meaning they have limited ability to generalize to unfamiliar situations. Much of their performance on the standard tasks is likely due not to general task abilities, but to overfitting to, or directly memorizing, what they have seen in their training data.

Large language models excel in familiar scenarios but struggle when the terrain becomes unfamiliar. “This insight is crucial as we strive to enhance the adaptability of these models and broaden their applications,” says Zhaofeng Wu, an MIT PhD student in electrical engineering and computer science and a CSAIL affiliate. As AI becomes increasingly pervasive in society, Wu adds, it must reliably handle diverse scenarios, whether familiar or not, and the team hopes these findings will one day inform the design of more robust language models.

Despite the insights gained, there are limitations. The study’s focus on specific tasks and settings did not capture the full range of challenges the models could encounter in real-world applications, signaling the need for more diverse testing environments. Future work could expand the range of tasks and counterfactual conditions to uncover more potential weaknesses, which could mean looking at more complex and less common scenarios. The team also wants to improve interpretability by developing methods to better understand the rationale behind the models’ decision-making.

“As language models scale up, understanding their training data becomes increasingly challenging, even for open models, let alone proprietary ones,” says Hao Peng, assistant professor at the University of Illinois at Urbana-Champaign. The community, Peng says, remains puzzled about whether these models genuinely generalize to unseen tasks or only appear to succeed by memorizing their training data, and this paper makes important strides in addressing that question. It constructs a suite of carefully designed counterfactual evaluations, providing fresh insights into the capabilities of state-of-the-art LLMs, and it reveals that their ability to solve unseen tasks may be far more limited than many anticipate. The work has the potential to inspire future research into identifying the failure modes of today’s models and developing better ones.

The paper’s authors also include Najoung Kim, a Boston University assistant professor and Google visiting researcher, and seven CSAIL affiliates: MIT electrical engineering and computer science (EECS) PhD students Linlu Qiu, Alexis Ross, Ekin Akyürek SM ’21, and Boyuan Chen; former postdoc and Apple AI/ML researcher Bailin Wang; and EECS assistant professors Jacob Andreas and Yoon Kim.

The team’s study was supported, in part, by the MIT-IBM Watson AI Lab, the MIT Quest for Intelligence, and the National Science Foundation. The team presented the work at the North American Chapter of the Association for Computational Linguistics (NAACL) meeting last month.