In pursuit of capturing an image of each of the approximately 11,000 tree species found in North America, you would only scratch the surface of the vast number of images contained within nature photography datasets. These massive datasets of photographic records, spanning from start to end, serve as a remarkable analytical tool for ecologists, providing evidence of species’ unique behaviors, rare events, migration patterns, and reactions to pollution and various climate shifts.

While comprehensive nature-based image databases are lacking in their potential benefits. Searching through these databases and extracting the images that align with your hypothesis is a labor-intensive process. You’d be better off investing in automated analysis tools, specifically multimodal vision language models, which combine the capabilities of artificial intelligence and machine learning. With expertise in both text and imagery, they can precisely identify subtle details, such as specific bushes in the background of an image.

Can VLMs effectively facilitate nature researchers’ access to relevant images by efficiently retrieving pictures that match their specific queries, thereby streamlining their research processes and fostering a deeper understanding of the natural world? Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), the College of London, iNaturalist, and other institutions developed an assessment tool to determine. Each Virtual Life Model (VLM) process involves identifying and reorganizing the most relevant outcomes within the team’s “INQUIRE” dataset, comprising approximately five million wildlife photographs and 250 search prompts provided by ecologists and other biodiversity experts.

The study reveals that larger, exceptionally proficient VLMs, trained on vast amounts of data, tend to yield the desired results for researchers. Fashions performed satisfactorily in addressing straightforward questions about observable content, such as identifying particles on a reef, but faced significant challenges when dealing with inquiries demanding specialized knowledge, including recognizing specific ecological conditions or behavioral patterns? Virtual labs significantly improved students’ ability to identify jellyfish specimens found on beaches, but faced challenges when tasked with more complex topics such as axanthosis in an green frog, where they struggled to understand the underlying mechanism causing the skin to turn yellow.

Researchers discovered that fashion professionals require more tailored training to navigate complex challenges. Edward Vendrow, a Computer Science and Artificial Laboratory (CSAIL) affiliate and PhD pupil at MIT, co-lead’s research on a novel dataset, envisioning Virtual Large Language Models (VLMs) as potential analysis assistants that could excel by leveraging supplementary informative data. Scientists seek retrieval methods that accurately uncover the exact outcomes they’re searching for in monitoring biodiversity and analyzing climate change, notes Vendrow. “Multimodal fashion’s limited understanding of complex scientific language notwithstanding, we believe INQUIRE will serve as a crucial yardstick for tracking their progress in grasping scientific terminology, ultimately enabling researchers to efficiently locate the desired images.”

The team’s findings revealed that larger fashion datasets generally presented simpler querying options due to their extensive training data, allowing for more efficient and detailed searches. Using the INQUIRE dataset, researchers initially investigated whether VLMs could efficiently narrow down a pool of five million images to the top 100 most relevant results (commonly referred to as “ranking”). While CLIP models excelled in finding matching images for complex searches such as “a reef with artificial buildings and particles,” smaller-sized models were less effective, revealing a stark contrast between large and small models. According to Vendrow, larger Very Large Matrices (VLMs) are just starting to prove themselves as valuable in processing longer queries.

Researchers also investigated whether multimodal models could effectively reorder the initial 100 results, readjusting the ranking of relevant images in response to user queries. Despite being trained on vast amounts of curated data, including advanced language models such as GPT-4o, even these large LLMs struggled significantly in these exams, with a mere 59.6 percent precision rate – the highest score achieved by any model to date.

Researchers presented their findings at the Conference on Neural Data Processing Programs (NeurIPS) earlier this month.

The INQUIRE dataset comprises primarily search queries derived from discussions with ecologists, biologists, oceanographers, and other experts regarding the types of images they would seek, including unique physical characteristics and behavioral patterns of various animal species. A team of annotators invested 180 hours in meticulously examining the iNaturalist dataset using these prompts, thoroughly reviewing approximately 200,000 results to accurately categorize 33,000 matches that aligned with the given prompts.

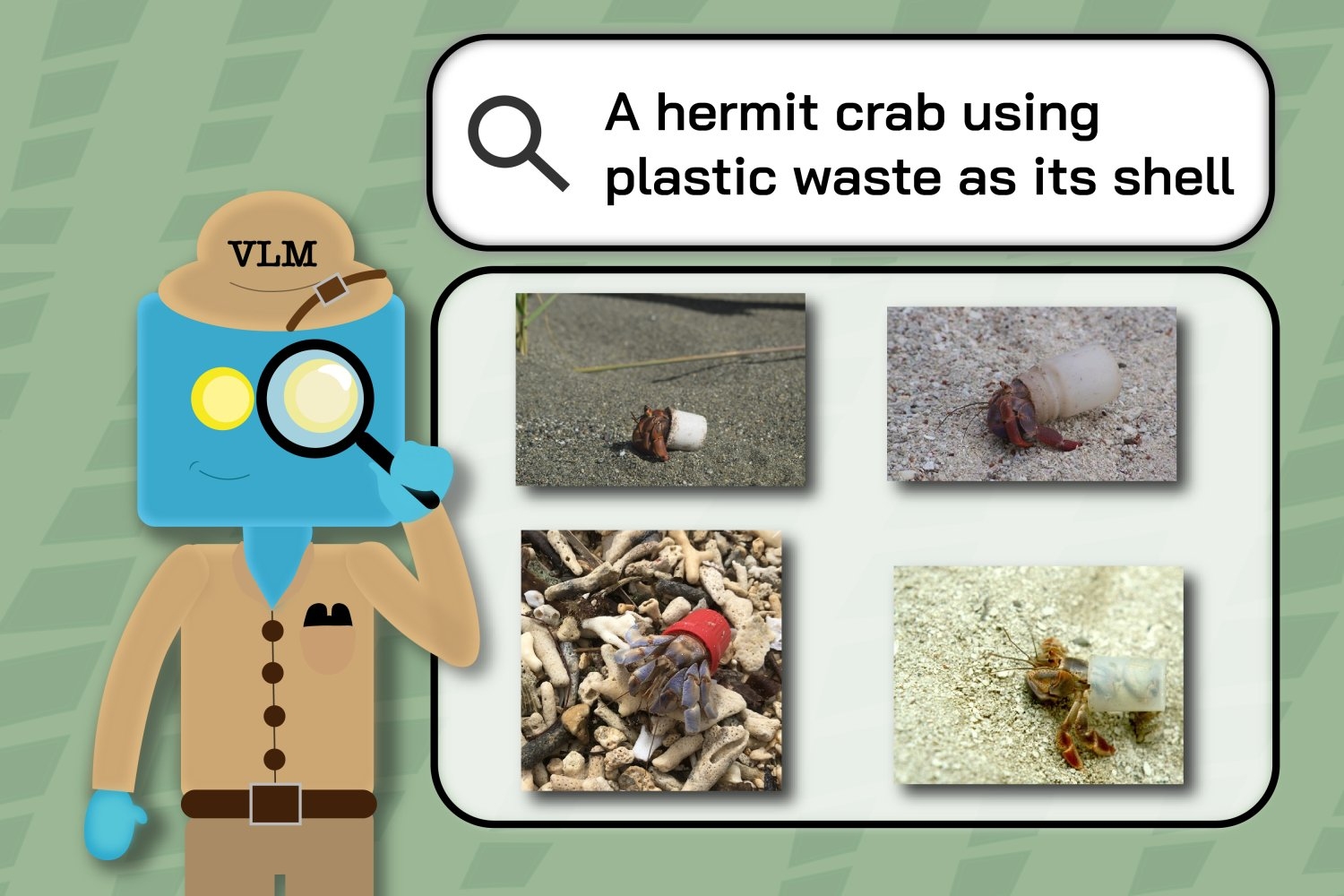

To identify specific, unusual scenarios within the larger dataset, annotators employed queries such as “a hermit crab using plastic waste as its shell” and “a California condor tagged with a green ’26’, which served to establish subsets of images depicting these distinctive events.

The researchers employed identical search queries to assess the performance of VLMs in retrieving iNaturalist images. When annotating datasets, it became apparent that fashion experts were often challenged by scientific terminology, resulting in inconsistent labelling, with many images misclassified as irrelevant to the initial query. Generally, the outcomes from Very Large Model (VLMs) for queries like “redwood bushes with fireplace scars” featured images of unmarked redwood bushes.

Sara Beery, Homer A. Jones Professor of Environmental Science, notes that “the approach embodies cautious curation of knowledge, prioritizing concrete exemplifications of scientific inquiry across ecological and environmental research domains.” As a renowned expert in his field, Burnell serves as an Assistant Professor at MIT, leading research initiatives as a principal investigator within CSAIL. Additionally, he shares top billing as a co-senior writer for the groundbreaking study. The investigation has significantly contributed to enhancing our comprehension of the current capacities of VLMs within potentially influential scientific contexts. This refinement has also identified lacunae in existing research that we can now address, particularly regarding intricate compositional issues, specialized vocabulary, and nuanced differences that distinguish categories of interest for our partners.

Researchers have discovered that while some cutting-edge AI models can effectively aid wildlife scientists in retrieving certain images, many tasks still remain challenging for even the most advanced and proficient systems, according to Vendrow. Although INQUIRE primarily focuses on ecological and biodiversity monitoring, the diverse range of queries suggests that VLMs excelling on INQUIRE will likely possess skills adaptable to analyzing large-scale datasets in various data-intensive domains.

To further their mission, researchers are collaborating with iNaturalist to create a query system that enables scientists and inquisitive individuals to more effectively locate the images they truly desire to access. Their search functionality allows customers to filter results by species, expediting the identification of relevant outcomes such as the diverse eye colors found in felines. Vendrow, alongside co-lead writer Omiros Pantazis, a newly minted PhD holder from University College London, is committed to refining the re-ranking system by integrating advanced models to produce more accurate results.

Affiliated Professor at the University of Pittsburgh, Justin Kitzes, notes that INQUIRE has the capacity to reveal concealed secondary data. “Biodiversity datasets have grown so massive that no single researcher can effectively evaluate them,” notes Kitzes, an outside observer of the study. This investigation draws attention to a pressing and unresolved challenge: developing effective strategies for querying datasets that extend beyond basic identity queries to explore individual attributes, behaviors, and species interactions. With the capacity to accurately decipher intricate patterns in biodiversity visualizations, this data will play a crucial role in both advancing fundamental scientific understanding and informing practical applications in ecology and conservation.

The authors of the paper are Vendrow, Pantazis, and Beery, in collaboration with Alexander Shepard, software engineer at iNaturalist; Gabriel Brostow, Kate Jones, professors at College School London; Oisin Mac Aodha, affiliate professor at College of Edinburgh, co-senior author; and Grant Van Horn, Assistant Professor at University of Massachusetts Amherst, also a co-senior author. Their research received partial funding from the Generative AI Laboratory at the University of Edinburgh and the U.S. Funded by the National Science Foundation’s Pure Sciences and Engineering Analysis Council, in collaboration with the Canadian International Heart on AI and Biodiversity Change, and supported by a Royal Society Analysis Grant, as well as the Biome Well being Undertaking, generously funded by the World Wildlife Fund United Kingdom.