The primary challenge for robotics engineers remains generalization: the ability to build machines that can adapt to diverse environments and scenarios. Since the 1970s, the field has evolved from writing sophisticated programs to using deep learning, teaching robots to learn directly from human behavior. But a critical bottleneck remains: data quality. To improve, robots need to encounter scenarios that push the boundaries of their capabilities, operating at the edge of their mastery. This process has traditionally required human oversight, with operators carefully challenging robots to expand their abilities. As robots become more sophisticated, this hands-on approach hits a scaling problem: the demand for high-quality training data far outpaces humans’ ability to provide it.

Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed a new approach to robot training that could significantly accelerate the deployment of adaptable, intelligent machines in real-world settings. The new system, called “LucidSim,” uses recent advances in generative AI and physics simulation to create diverse and realistic virtual training environments, helping robots achieve expert-level performance in difficult tasks without any real-world data.

LucidSim combines physics simulation with generative AI models to address one of the most persistent obstacles in robotics: transferring skills learned in simulation to the real world. Robotics researchers have long grappled with the “sim-to-real gap,” a fundamental problem in robot learning in which simulated training environments differ sharply from the complex, unpredictable real world. “Previous approaches often relied on depth sensors, which simplified the problem but missed crucial real-world complexities,” says MIT CSAIL postdoc Ge Yang, a lead researcher on LucidSim.

The multipronged system is a blend of different technologies. At its core, LucidSim uses large language models to generate varied, structured descriptions of environments. These descriptions are then turned into images using generative models. To ensure that the images reflect real-world physics, an underlying physics simulator guides the generation process.

The inspiration for LucidSim came from an unexpected place: a conversation outside Beantown Taqueria in Cambridge, Massachusetts. Alan Yu, an undergraduate student in electrical engineering and computer science at MIT and co-lead author on LucidSim, says the team initially wanted to teach vision-equipped robots how to improve using human feedback. “We kept talking about it as we walked down the street, and then we stopped outside the taqueria for about half an hour,” he says. “That’s where we had our moment.”

To generate their training data, the team produced realistic images by extracting depth maps, which provide geometric information, and semantic masks, which label different parts of an image, from the simulated scene. They quickly realized, however, that with tight control over the composition of the image, the generative model would produce near-identical images from the same prompt. So they devised a way to source diverse text prompts from ChatGPT.
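As a rough illustration of the two conditioning signals described above, the sketch below derives a depth image and per-class semantic masks from a toy simulated scene. It is not the team’s code: the function names `normalize_depth` and `semantic_masks`, the grid representation, and the class labels are all assumptions made for this example.

```python
from typing import Dict, List


def normalize_depth(depth: List[List[float]]) -> List[List[int]]:
    """Scale metric depth to 0..255 so that nearer surfaces appear brighter."""
    flat = [d for row in depth for d in row]
    near, far = min(flat), max(flat)
    span = (far - near) or 1.0  # avoid dividing by zero on a flat scene
    return [[round(255 * (far - d) / span) for d in row] for row in depth]


def semantic_masks(labels: List[List[str]]) -> Dict[str, List[List[int]]]:
    """Build one binary mask per class name found in the label grid."""
    classes = sorted({name for row in labels for name in row})
    return {
        c: [[1 if name == c else 0 for name in row] for row in labels]
        for c in classes
    }


# Hypothetical 2x2 scene: a staircase in the top row, floor in the bottom row.
depth = [[1.0, 2.0], [4.0, 5.0]]            # distance from the camera
labels = [["stairs", "stairs"], ["floor", "floor"]]

depth_image = normalize_depth(depth)
masks = semantic_masks(labels)
```

In a pipeline of this kind, the depth image would constrain geometry and the masks would say which regions a text prompt’s objects should occupy, while the prompt itself controls appearance.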

This approach, however, produced only a single image. To make short, coherent videos that serve as little “experiences” for the robot, the scientists combined several image-processing tricks into another novel technique called “Dreams In Motion.” The system computes the movement of each pixel between frames to warp a single generated image into a short, multi-frame video. Dreams In Motion does this by considering the 3D geometry of the scene and the relative changes in the robot’s perspective.
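The idea of computing per-pixel motion from scene geometry and a change in viewpoint can be sketched with a standard pinhole-camera reprojection. The example below is a simplified illustration, not the team’s implementation: it assumes a pure camera translation with no rotation, and the function name `pixel_flow` and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical.

```python
from typing import List, Tuple

Flow = Tuple[float, float]


def pixel_flow(
    depth: List[List[float]],
    fx: float, fy: float, cx: float, cy: float,
    translation: Tuple[float, float, float],
) -> List[List[Flow]]:
    """Return the (du, dv) shift of each pixel after the camera translates.

    Assumes a pinhole camera and no rotation between the two frames.
    """
    tx, ty, tz = translation
    flow: List[List[Flow]] = []
    for v, row in enumerate(depth):
        flow_row: List[Flow] = []
        for u, z in enumerate(row):
            # Back-project the pixel to a 3D point in the first camera frame.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            # Express the same point relative to the moved camera.
            x2, y2, z2 = x - tx, y - ty, z - tz
            if z2 <= 0:
                raise ValueError("point falls behind the moved camera")
            # Project it back onto the image plane of the second frame.
            u2 = fx * x2 / z2 + cx
            v2 = fy * y2 / z2 + cy
            flow_row.append((u2 - u, v2 - v))
        flow.append(flow_row)
    return flow


# Two pixels at depths of 2 m and 1 m; the camera slides 10 cm to the right.
flow = pixel_flow([[2.0, 1.0]], fx=100.0, fy=100.0, cx=0.0, cy=0.0,
                  translation=(0.1, 0.0, 0.0))
```

In this toy case the nearer pixel shifts about twice as far as the farther one, which is the parallax cue that makes a warped still image read as a moving viewpoint.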

“We outperform domain randomization, a method developed in 2017 that applies random colors and patterns to objects in the environment,” says Yu. “While this technique generates diverse data, it lacks realism.” LucidSim addresses both the diversity and the realism problems. Even without seeing the real world during training, its robots can recognize and navigate obstacles in real environments.
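For contrast, the baseline mentioned above can be sketched in a few lines. This is a minimal illustration of domain randomization over a labeled scene, assuming one flat random color per object class; the function name `randomize_colors` and the scene layout are invented for the example, and real implementations also randomize textures, lighting, and more.

```python
import random
from typing import Dict, List, Tuple

Color = Tuple[int, int, int]


def randomize_colors(labels: List[List[str]], rng: random.Random) -> List[List[Color]]:
    """Paint every object class with an arbitrary RGB color."""
    palette: Dict[str, Color] = {}
    for row in labels:
        for name in row:
            if name not in palette:
                palette[name] = (
                    rng.randrange(256),
                    rng.randrange(256),
                    rng.randrange(256),
                )
    return [[palette[name] for name in row] for row in labels]


# Hypothetical scene layout; each call with a new seed yields a new look.
labels = [["stairs", "stairs"], ["floor", "floor"]]
variants = [randomize_colors(labels, random.Random(seed)) for seed in range(3)]
```

Each variant keeps the scene’s layout but assigns colors with no regard for how stairs or floors actually look, which is why the method yields diversity without realism.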

The team is particularly excited about the potential of applying LucidSim to domains beyond quadruped locomotion and parkour, their main test bed. One example is mobile manipulation, where a mobile robot is tasked with handling objects in an open area and where color perception is also critical. “Today, these robots still learn from real-world demonstrations,” says Yang. “Although collecting demonstrations is easy, scaling a real-world robot teleoperation setup to thousands of scenarios is challenging because a human has to physically set up each scene. We hope to make this easier, and more scalable, by moving data collection into a virtual environment.”

The team put LucidSim to the test against an alternative in which an expert teacher demonstrates the skill for the robot to learn from. The results were striking: robots trained by the expert struggled, succeeding only 15 percent of the time, and even doubling the amount of expert training data made little difference. But when robots collected their own training data through LucidSim, the story changed dramatically. Just doubling the dataset size raised success rates to 88 percent. “Giving our robot more data monotonically improves its performance,” says Yang. “Eventually, the student becomes the expert.”

“One of the main challenges in sim-to-real transfer for robotics is achieving visual realism in simulated environments,” says Dr. Shuran Mook, an assistant professor of electrical engineering at Stanford University, who was not involved in the research. The LucidSim framework provides an elegant solution by using generative models to create diverse, highly realistic visual data for any simulation. This work could significantly accelerate the deployment of robots trained in virtual environments to real-world tasks.

From the streets of Cambridge to the cutting edge of robotics research, LucidSim is paving the way toward a new generation of intelligent, adaptable machines that learn to navigate our complex world without ever leaving the digital realm.

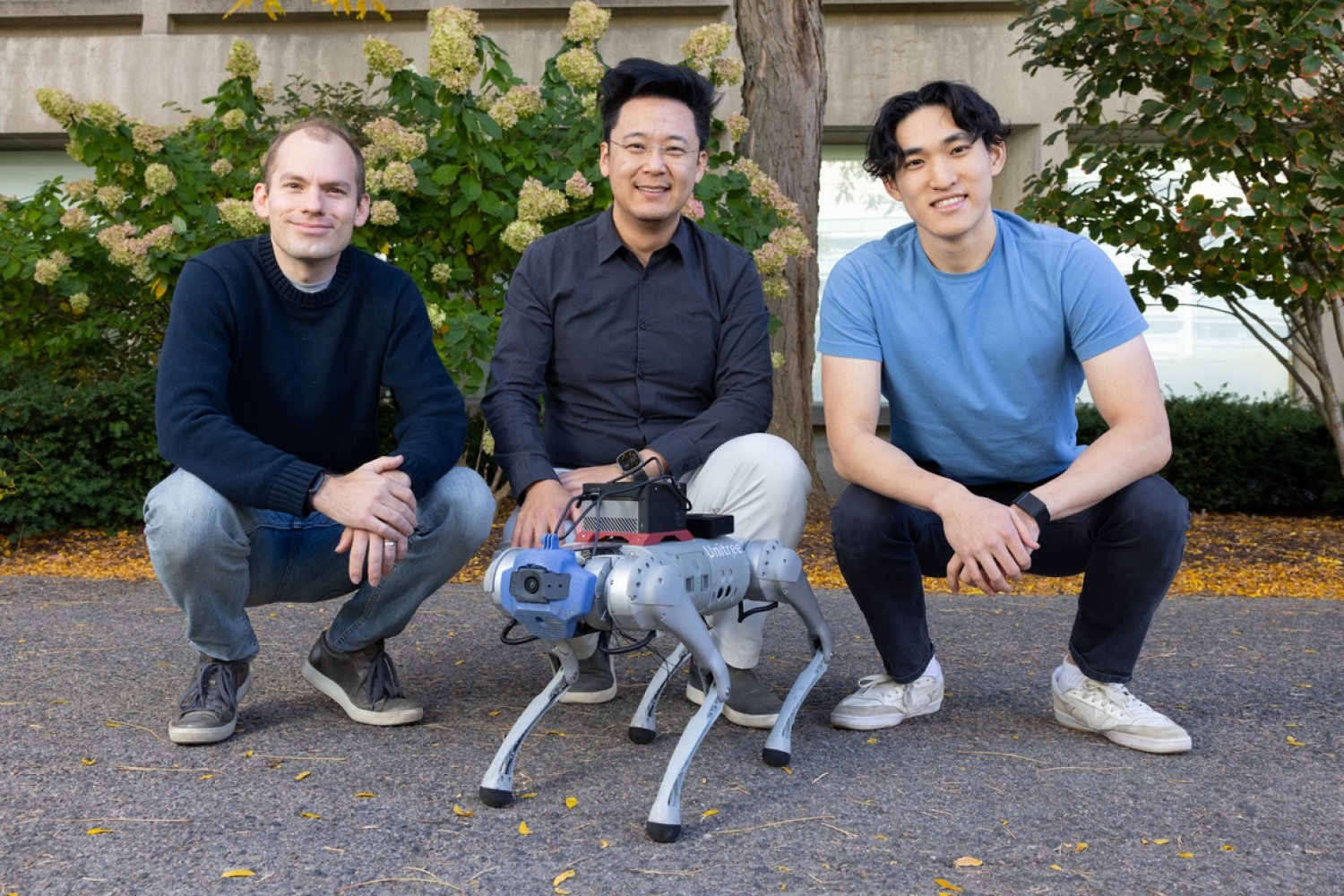

Yu and Yang wrote the paper with four other researchers: Ran Choi, an MIT postdoc in mechanical engineering; Yajun Ravindranath, an MIT undergraduate in electrical engineering and computer science (EECS); John Leonard, the Collins Professor of Mechanical and Ocean Engineering in MIT’s Department of Mechanical Engineering; and Phillip Isola, a faculty member in MIT’s Department of Electrical Engineering and Computer Science. Their work was supported, in part, by a Packard Fellowship, a Sloan Research Fellowship, the Office of Naval Research, Singapore’s Defence Science and Technology Agency, Amazon, MIT Lincoln Laboratory, and the National Science Foundation Institute for Artificial Intelligence and Fundamental Interactions. The researchers presented their work at the Conference on Robot Learning (CoRL) in early November.