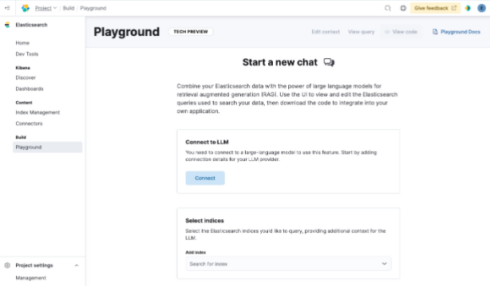

Elastic has just launched a new tool called Playground that enables users to experiment with retrieval-augmented generation (RAG) more easily.

RAG is a practice in which local data, such as private company data or data that is more up to date than the LLM's training set, is added to an LLM. This allows the model to give more accurate responses and reduces the occurrence of hallucinations.
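To make the retrieve-then-augment idea concrete, here is a minimal Python sketch. It is not Elastic's implementation: the documents are hypothetical, the toy keyword-count scorer stands in for a real search index such as Elasticsearch, and the step of sending the finished prompt to an LLM is left out.

```python
# Minimal RAG sketch: retrieve relevant local documents, then add them to the prompt.
# Assumptions: the documents are hypothetical, and a naive keyword-count scorer
# stands in for a real search index (e.g. an Elasticsearch index).
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, doc: str) -> int:
    """Count how often the query's terms occur in the document (toy relevance)."""
    counts = Counter(tokenize(doc))
    return sum(counts[term] for term in set(tokenize(query)))


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return up to k documents with the highest non-zero score."""
    ranked = sorted(docs, key=lambda d: score(query, d), reverse=True)
    return [d for d in ranked[:k] if score(query, d) > 0]


def build_prompt(query: str, context: list[str]) -> str:
    """Augment the user's question with the retrieved context."""
    ctx = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{ctx}\n\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    # Hypothetical "private company data" that a base LLM would not know about.
    docs = [
        "The Q3 refund policy allows returns within 45 days.",
        "Office hours are 9am to 5pm on weekdays.",
        "Refunds are issued to the original payment method.",
    ]
    question = "What is the refund policy?"
    # In a real pipeline this prompt would be sent to an LLM.
    print(build_prompt(question, retrieve(question, docs)))
```

The design keeps retrieval and prompt construction separate, which mirrors the point of a tool like Playground: the retriever (here a toy scorer) and the model can each be swapped and compared independently.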

Playground offers a low-code interface for adding data to an LLM in RAG implementations. Developers can use any data stored in an Elasticsearch index for this.

It also allows developers to A/B test LLMs from different model providers to see which best suits their needs.

The platform can use transformer models in Elasticsearch and also makes use of the Elasticsearch Open Inference API, which integrates with inference providers such as Cohere and Azure AI Studio.

“While prototyping conversational search, the ability to experiment with and rapidly iterate on key components of a RAG workflow is essential to get accurate and hallucination-free responses from LLMs,” said Matt Riley, global vice president and general manager of Search at Elastic. “Developers use the Elastic Search AI platform, which includes the Elasticsearch vector database, for comprehensive hybrid search capabilities and to tap into innovation from a growing list of LLM providers. Now, the playground experience brings these capabilities together via an intuitive user interface, removing the complexity from building and iterating on generative AI experiences, ultimately accelerating time to market for our customers.”
